Elastic Algorithm Service (EAS) lets you configure a secure and encrypted environment. This enables you to securely deploy and run inference on encrypted models. This method provides multilayered security to protect your data and models throughout their lifecycle. It is suitable for scenarios with high security requirements for sensitive data, such as financial services, government, and enterprise applications. This topic describes the complete process of preparing an encrypted model, performing an encrypted deployment, and running inference.

Workflow

This solution uses the following process for secure encrypted deployment and inference:

Step 1: Prepare an encrypted model

Encrypt the model: Use Gocryptfs or Sam to encrypt the model. Upload the encrypted model to a data source, such as Object Storage Service (OSS). This simplifies the process of mounting and deploying the model in EAS.

Store the decryption key: EAS currently supports a self-built Trustee remote attestation service hosted on Alibaba Cloud. You can deploy the Trustee remote attestation service in an Alibaba Cloud ACK Serverless cluster to securely authenticate the model deployment and inference environments. You can use Alibaba Cloud Key Management Service (KMS) as the backend to store the decryption key.

Step 2: Deploy the encrypted model using PAI-EAS

When you deploy an encrypted model using PAI-EAS, the system automatically connects to the Trustee remote attestation service to authenticate the deployment environment. After successful authentication, the system uses the key in KMS to decrypt and mount the model. Then, it deploys the model as an EAS service.

Step 3: Call the service to run secure inference

After the deployment is complete, you can connect to the inference service to send inference requests.

Step 1: Prepare an encrypted model

Before you deploy the model to the cloud, you must encrypt it and upload it to cloud storage. The decryption key for the model is managed by KMS, which is controlled by the remote attestation service. You must perform the model encryption in a local or trusted environment. The following example shows how to deploy the Qwen2.5-3B-Instruct large model.

1. Prepare the model (Optional)

If you already have a model, you can skip this section and go to 2. Encrypt the model.

This example uses the modelscope tool to download the Qwen2.5-3B-Instruct model. This requires Python 3.9 or later. You can run the following commands in the terminal to download the model.

    pip3 install modelscope importlib-metadata
    modelscope download --model Qwen/Qwen2.5-3B-Instruct

After the commands run successfully, the model is downloaded to the ~/.cache/modelscope/hub/models/Qwen/Qwen2.5-3B-Instruct/ directory.
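Before encrypting, it can be worth confirming that the download directory is actually populated. The following is a minimal sketch, not part of the modelscope tool: the helper name `summarize_model_dir` is hypothetical, and it only counts files and bytes under the directory you pass in.

```python
from pathlib import Path


def summarize_model_dir(model_dir: str) -> tuple[int, int]:
    """Return (file_count, total_bytes) for all regular files under model_dir."""
    root = Path(model_dir).expanduser()
    if not root.is_dir():
        raise FileNotFoundError(f"model directory not found: {root}")
    # Walk the whole tree so nested files are counted too.
    files = [p for p in root.rglob("*") if p.is_file()]
    return len(files), sum(p.stat().st_size for p in files)
```

For example, calling `summarize_model_dir("~/.cache/modelscope/hub/models/Qwen/Qwen2.5-3B-Instruct/")` should report a non-zero file count and size if the download completed.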

2. Encrypt the model

PAI-EAS supports the following two encryption methods. This topic uses Sam as an example.

Gocryptfs: An encryption mode based on AES256-GCM that complies with the open-source Gocryptfs standard.

Sam: An Alibaba Cloud trusted AI model encryption format that protects the confidentiality of the model and prevents the license content from being tampered with or used illegally.

Method 1: Use Sam encryption

Download the Sam encryption module package RAI_SAM_SDK_2.1.0-20240731.tgz. Then, run the following command to decompress the package.

    # Decompress the Sam encryption module
    tar xvf RAI_SAM_SDK_2.1.0-20240731.tgz

Use the Sam encryption module to encrypt the model.

    # Go to the encryption directory of the Sam encryption module
    cd RAI_SAM_SDK_2.1.0-20240731/tools
    # Encrypt the model
    ./do_content_packager.sh <model_directory> <plaintext_key> <key_id>

Where:

<model_directory>: The directory where the model to be encrypted is located. You can use a relative or absolute path, such as ~/.cache/modelscope/hub/models/Qwen/Qwen2.5-3B-Instruct/.

<plaintext_key>: A custom encryption key. The valid length is 4 to 128 bytes. An example is 0Bn4Q1wwY9fN3P. The plaintext key is the model decryption key that you need to upload to the remote attestation service (Trustee).

<key_id>: A custom key identifier. The valid length is 8 to 48 bytes. An example is LD_Demo_0001.

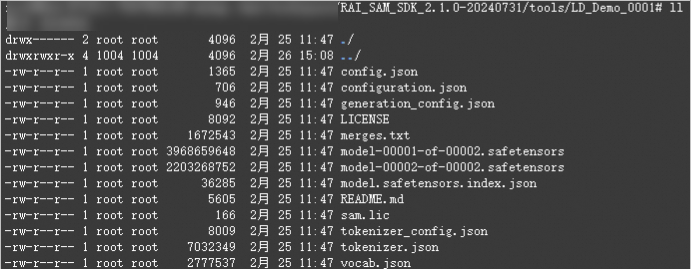

After encryption, the model is stored as ciphertext in the <key_id> directory in the current path.
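The length limits for `<plaintext_key>` and `<key_id>` are easy to check before running the packager script. The sketch below is illustrative only: `validate_sam_args` is a hypothetical helper, and it assumes the limits are measured in UTF-8 bytes, which the Sam documentation quoted here does not spell out.

```python
def validate_sam_args(plaintext_key: str, key_id: str) -> list[str]:
    """Return a list of problems; an empty list means both values fit the documented limits."""
    problems = []
    # Assumption: the documented byte limits refer to the UTF-8 encoding.
    key_len = len(plaintext_key.encode("utf-8"))
    id_len = len(key_id.encode("utf-8"))
    if not 4 <= key_len <= 128:
        problems.append(f"plaintext_key is {key_len} bytes; expected 4 to 128")
    if not 8 <= id_len <= 48:
        problems.append(f"key_id is {id_len} bytes; expected 8 to 48")
    return problems


# The sample values from this topic pass both checks.
print(validate_sam_args("0Bn4Q1wwY9fN3P", "LD_Demo_0001"))  # prints []
# A key that is too short and an identifier that is too short are both reported.
print(validate_sam_args("abc", "short"))
```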

Method 2: Use Gocryptfs encryption

Install Gocryptfs, the tool used to encrypt the model. Currently, only Gocryptfs v2.4.0 with default parameters is supported. You can choose one of the following methods to install it:

Method 1: (Recommended) Install from a yum source

If you use the Alinux 3 or AnolisOS 23 operating system, you can install gocryptfs directly from the yum source.

Alinux 3

    sudo yum install gocryptfs -y

AnolisOS 23

    sudo yum install anolis-epao-release -y
    sudo yum install gocryptfs -y

Method 2: Download the precompiled binary directly

    # Download the precompiled Gocryptfs package
    wget https://github.jobcher.com/gh/https://github.com/rfjakob/gocryptfs/releases/download/v2.4.0/gocryptfs_v2.4.0_linux-static_amd64.tar.gz
    # Decompress and install
    tar xf gocryptfs_v2.4.0_linux-static_amd64.tar.gz
    sudo install -m 0755 ./gocryptfs /usr/local/bin

Create a Gocryptfs key file to use as the model encryption key. In a later step, you will need to upload this key to the remote attestation service (Trustee) for management.

In this solution, 0Bn4Q1wwY9fN3P is used as the key to encrypt the model. The key content is stored in the cachefs-password file. You can also use a custom key. In practice, we recommend that you use a randomly generated strong key.

    cat << EOF > ~/cachefs-password
    0Bn4Q1wwY9fN3P
    EOF

Use the created key to encrypt the model.

Configure the path for the plaintext model.

Note: Set this to the path of the plaintext model you just downloaded. If you use a different model, replace the path with the actual path of your target model.

    PLAINTEXT_MODEL_PATH=~/.cache/modelscope/hub/models/Qwen/Qwen2.5-3B-Instruct/

Use Gocryptfs to encrypt the model directory tree.

After encryption, the model is stored as ciphertext in the ./cipher directory.

    mkdir -p ~/mount
    cd ~/mount
    mkdir -p cipher plain
    # Install Gocryptfs runtime dependencies
    sudo yum install -y fuse
    # Initialize gocryptfs
    cat ~/cachefs-password | gocryptfs -init cipher
    # Mount to plain
    cat ~/cachefs-password | gocryptfs cipher plain
    # Copy the AI model to ~/mount/plain
    cp -r ${PLAINTEXT_MODEL_PATH}/. ~/mount/plain
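For real deployments, the sample key in the cachefs-password file should be replaced by a randomly generated one, as recommended above. The following sketch shows one way to do that with Python's standard `secrets` module. The helper names are hypothetical, and restricting the key to letters and digits is an assumption made here to avoid shell quoting issues, not a requirement stated by Gocryptfs or Sam.

```python
import os
import secrets
import string

ALPHABET = string.ascii_letters + string.digits


def generate_key(length: int = 32) -> str:
    """Return a random alphanumeric key drawn from a cryptographically secure source."""
    # 4 to 128 keeps the key inside the Sam limit as well, so one key works for either method.
    if not 4 <= length <= 128:
        raise ValueError("length must be between 4 and 128")
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


def write_key_file(path: str, key: str) -> None:
    """Write the key plus a trailing newline, mirroring the heredoc used in this topic.

    Mode 0600 is applied only when the file is newly created.
    """
    target = os.path.expanduser(path)
    fd = os.open(target, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o600)
    with os.fdopen(fd, "w") as handle:
        handle.write(key + "\n")
```

Calling `write_key_file("~/cachefs-password", generate_key())` would produce a key file in the same location and format as the sample. The same key value, without the trailing newline, is what you later store as the secret value.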

3. Upload the model

EAS supports storing encrypted models in various storage backends. When you deploy a service, you can load the decrypted model into the service instance by mounting it. For more information, see Mount storage.

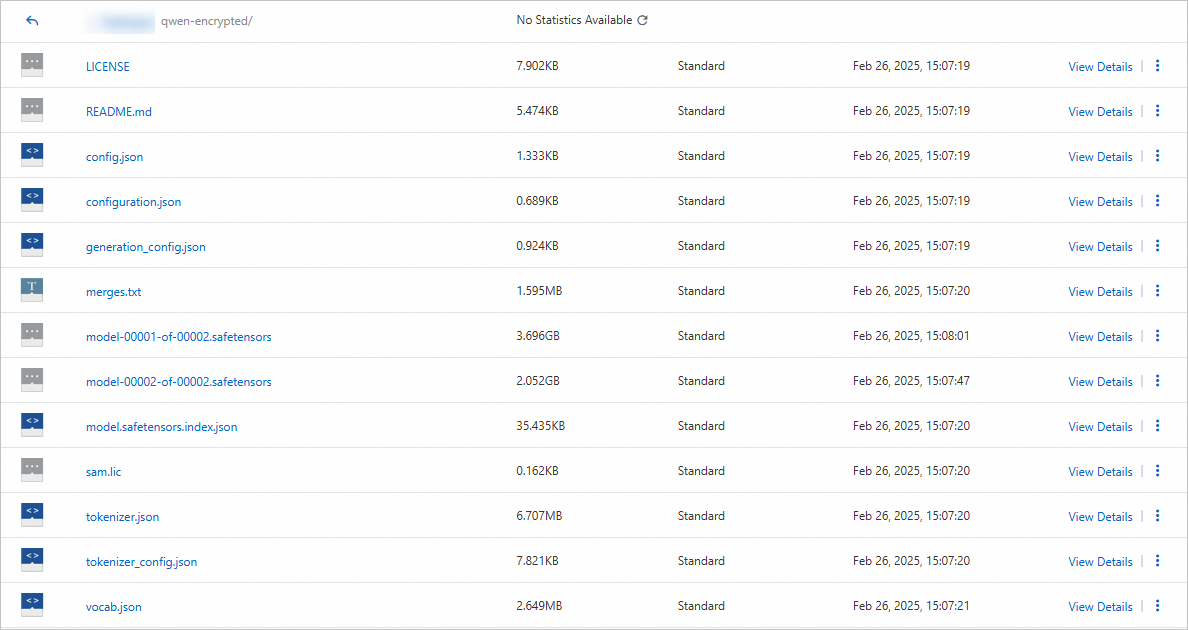

Take OSS as an example. You can create a bucket and a directory named qwen-encrypted, such as oss://examplebucket/qwen-encrypted/. For more information, see Quick Start for the console. Because the model file is large, we recommend that you use ossbrowser to upload the encrypted model to this directory.

If you use the command-line tool ossutil instead, we recommend that you use multipart upload.

The following figure shows the result after you upload the ciphertext model encrypted with Sam. If you use the Gocryptfs encryption method, the names of the model files are also encrypted and appear as unreadable strings.
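Whichever upload tool you use, the directory layout of the ciphertext should be preserved under the target OSS directory. The sketch below only plans the mapping from local files to object keys and uploads nothing; `plan_upload` is a hypothetical helper, and the `qwen-encrypted/` prefix is the example directory from this topic.

```python
from pathlib import Path


def plan_upload(local_dir: str, prefix: str = "qwen-encrypted/") -> list[tuple[str, str]]:
    """Map every file under local_dir to an object key below prefix, keeping relative paths."""
    root = Path(local_dir).expanduser()
    plan = []
    for path in sorted(root.rglob("*")):
        if path.is_file():
            # as_posix() keeps forward slashes in object keys on every platform.
            plan.append((str(path), prefix + path.relative_to(root).as_posix()))
    return plan
```

Each returned pair is a local path and the object key it would get, which can be handed to the upload tool of your choice.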

4. Set up the remote attestation service and upload the key

Host the model decryption key in the remote attestation service. The remote attestation service is a user-managed authentication service that is responsible for authenticating the runtime environment of the model and the inference service. The model decryption key is injected to decrypt and mount the model only after the trustworthiness of the EAS model deployment environment meets the specified conditions.

Currently, EAS supports a self-built Trustee remote attestation service hosted on Alibaba Cloud. You can use an Alibaba Cloud ACK Serverless cluster to deploy the remote attestation service and authenticate the model deployment and inference environments, and use Alibaba Cloud KMS as the backend that stores and protects the model decryption key. Note the following requirements, then complete the procedure below:

The region of the ACK cluster does not need to be the same as the destination region where the EAS service is deployed.

The Alibaba Cloud KMS instance must be in the same region as the Alibaba Cloud Trustee remote attestation service's ACK cluster.

Before you create a KMS instance and an ACK cluster, you must create a VPC and two vSwitches. For more information, see Create and manage a VPC.
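The requirements above can be written down as a small preflight check before any resources are created. This is only a sketch: the `Plan` fields and the `check_plan` helper are hypothetical names, and the region IDs are placeholders.

```python
from dataclasses import dataclass


@dataclass
class Plan:
    ack_region: str
    kms_region: str
    eas_region: str
    vswitch_count: int


def check_plan(plan: Plan) -> list[str]:
    """Return the requirements from this topic that the plan violates."""
    problems = []
    if plan.kms_region != plan.ack_region:
        problems.append("KMS instance and ACK cluster must be in the same region")
    if plan.vswitch_count < 2:
        problems.append("the VPC needs at least two vSwitches")
    # eas_region is intentionally not compared: EAS may be deployed in another region.
    return problems


print(check_plan(Plan("cn-hangzhou", "cn-hangzhou", "cn-shanghai", 2)))  # prints []
```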

First, create an Alibaba Cloud KMS instance to use as the key storage backend.

Go to the Key Management Service console. In the navigation pane on the left, go to the page that contains the Software Key Management tab. On that tab, create and enable an instance. When you enable the instance, select the same VPC as the ACK cluster. For more information, see Purchase and enable a KMS instance.

Wait about 10 minutes for the instance to start.

After the instance starts, go to the Key Management page from the navigation pane on the left and create a customer master key for the instance. For more information, see Step 1: Create a software-protected key.

From the navigation pane on the left, go to the Access Points page and create an application access point for the instance. Set Scope to the KMS instance you created. For more information about other configurations, see Method 1: Quick creation.

After the application access point is created, the browser automatically downloads the ClientKey***.zip file. The decompressed zip file contains:

Application Identity Credential Content (ClientKeyContent): The file name is clientKey_****.json by default.

Credential Security Token (ClientKeyPassword): The file name is clientKey_****_Password.txt by default.

In the KMS console, click the KMS instance name. In the Basic Information area, click Download next to Instance CA Certificate to export the public key certificate file PrivateKmsCA_***.pem for the KMS instance.
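The three KMS files are needed again when Trustee is deployed, so it can help to confirm that all of them are in one place. The sketch below looks for them by their default file name patterns. `find_kms_files` is a hypothetical helper, and the patterns assume that you kept the default names.

```python
from pathlib import Path

# Default file name patterns described in this topic.
PATTERNS = {
    "client_key": "clientKey_*.json",
    "password": "clientKey_*_Password.txt",
    "ca_cert": "PrivateKmsCA_*.pem",
}


def find_kms_files(directory: str) -> dict[str, Path]:
    """Return the path of each credential file, or raise if one is missing or ambiguous."""
    root = Path(directory).expanduser()
    found = {}
    for name, pattern in PATTERNS.items():
        matches = sorted(root.glob(pattern))
        if len(matches) != 1:
            raise FileNotFoundError(
                f"expected exactly one {pattern} in {root}, found {len(matches)}"
            )
        found[name] = matches[0]
    return found
```

The returned paths correspond to the values that the deployment commands later expect for the client key file, the password file, and the CA certificate file.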

Create an ACK Serverless cluster and install the csi-provisioner component.

Go to the Create Cluster page to create an ACK Serverless cluster. The key parameter configurations are described below. For more information about other configurations, see Create a cluster.

Cluster Configurations: Configure the following parameters, then click Next: Component Configurations.

| Key Configuration | Description |
| --- | --- |
| VPC | Select Use Existing and select Configure SNAT For VPC. Otherwise, you cannot pull the Trustee image. |
| VSwitch | Make sure that at least two virtual switches are created in the existing VPC. Otherwise, you cannot expose the public ALB. |

Component Configurations: Configure the following parameters, then click Next: Confirm Order.

| Key Configuration | Description |
| --- | --- |
| Service Discovery | Select CoreDNS. |
| Ingress | Select ALB Ingress. For the source of the ALB cloud-native gateway instance, select Create and select two virtual switches. |

Confirm Order: Confirm the configuration information and terms of service, then click Create Cluster.

After the cluster is created, install the csi-provisioner (managed) component. For more information, see Manage components.

Deploy the Trustee remote attestation service in the ACK cluster.

First, connect to the cluster over the Internet or an internal network. For more information, see Connect to a cluster.

Upload the downloaded application identity credential (clientKey_****.json), credential security token (clientKey_****_Password.txt), and CA certificate (PrivateKmsCA_***.pem) for the KMS instance to the environment that is connected to the ACK Serverless cluster. Then, run the following command to deploy the Trustee remote attestation service and use Alibaba Cloud KMS as the key storage backend.

    # Install the plugin
    helm plugin install https://github.com/AliyunContainerService/helm-acr
    helm repo add trustee acr://trustee-chart.cn-hangzhou.cr.aliyuncs.com/trustee/trustee
    helm repo update

    export DEPLOY_RELEASE_NAME=trustee
    export DEPLOY_NAMESPACE=default
    export TRUSTEE_CHART_VERSION=1.0.0

    # Set the region information of the ACK cluster, for example, cn-hangzhou
    export REGION_ID=cn-hangzhou

    # Information about the exported KMS instance
    # Replace with your KMS instance ID
    export KMS_INSTANCE_ID=kst-hzz66a0*******e16pckc
    # Replace with the path to your KMS instance application identity credential
    export KMS_CLIENT_KEY_FILE=/path/to/clientKey_KAAP.***.json
    # Replace with the path to your KMS instance credential security token
    export KMS_PASSWORD_FILE=/path/to/clientKey_KAAP.***_Password.txt
    # Replace with the path to your KMS instance CA certificate
    export KMS_CERT_FILE=/path/to/PrivateKmsCA_kst-***.pem

    helm install ${DEPLOY_RELEASE_NAME} trustee/trustee \
      --version ${TRUSTEE_CHART_VERSION} \
      --set regionId=${REGION_ID} \
      --set kbs.aliyunKms.enabled=true \
      --set kbs.aliyunKms.kmsIntanceId=${KMS_INSTANCE_ID} \
      --set-file kbs.aliyunKms.clientKey=${KMS_CLIENT_KEY_FILE} \
      --set-file kbs.aliyunKms.password=${KMS_PASSWORD_FILE} \
      --set-file kbs.aliyunKms.certPem=${KMS_CERT_FILE} \
      --namespace ${DEPLOY_NAMESPACE}

Note: The first command to install the plugin (helm plugin install...) may take a long time. If the installation fails, you can run the helm plugin uninstall cm-push command to uninstall the plugin, and then run the installation command again.

The following is an example of the returned result:

    NAME: trustee
    LAST DEPLOYED: Tue Feb 25 18:55:33 2025
    NAMESPACE: default
    STATUS: deployed
    REVISION: 1
    TEST SUITE: None

Run the following command in the environment that is connected to the ACK Serverless cluster to obtain the endpoint of Trustee.

    export TRUSTEE_URL=http://$(kubectl get AlbConfig alb -o jsonpath='{.status.loadBalancer.dnsname}')/${DEPLOY_RELEASE_NAME}
    echo ${TRUSTEE_URL}

An example of the returned result is http://alb-ppams74szbwg2f****.cn-shanghai.alb.aliyuncsslb.com/trustee.

Run the following command in the environment that is connected to the ACK Serverless cluster to test the connectivity of the Trustee service.

    cat << EOF | curl -k -X POST ${TRUSTEE_URL}/kbs/v0/auth -H 'Content-Type: application/json' -d @-
    {
      "version": "0.1.0",
      "tee": "tdx",
      "extra-params": "foo"
    }
    EOF

If the Trustee service is running normally, the expected output is as follows:

    {"nonce":"PIDUjUxQdBMIXz***********IEysXFfUKgSwk=","extra-params":""}
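The same connectivity test can be scripted, for example as part of an automated check. The following is a minimal sketch using only the Python standard library. It sends the same JSON body to the same `/kbs/v0/auth` path as the cURL command above; the function names are hypothetical, and treating any reply with a non-empty `nonce` field as healthy is an assumption based on the sample output.

```python
import json
import urllib.request


def build_auth_request(trustee_url: str) -> urllib.request.Request:
    """Build the POST request used to probe the Trustee auth endpoint."""
    body = json.dumps({"version": "0.1.0", "tee": "tdx", "extra-params": "foo"})
    return urllib.request.Request(
        trustee_url.rstrip("/") + "/kbs/v0/auth",
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def looks_healthy(response_text: str) -> bool:
    """Treat a JSON object with a non-empty "nonce" field as a healthy reply."""
    try:
        reply = json.loads(response_text)
    except json.JSONDecodeError:
        return False
    return isinstance(reply, dict) and bool(reply.get("nonce"))


def probe(trustee_url: str, timeout: float = 10.0) -> bool:
    """Send the request and report whether the reply looks healthy."""
    with urllib.request.urlopen(build_auth_request(trustee_url), timeout=timeout) as resp:
        return looks_healthy(resp.read().decode("utf-8"))
```

Calling `probe` with the value of `TRUSTEE_URL` performs the actual network request; the other two functions are separated out so that they can be checked without a running service.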

Configure the Trustee network whitelist.

Note: This configuration allows the PAI-EAS model deployment environment to actively access the remote attestation service for an environment security check.

Go to the Alibaba Cloud Application Load Balancer (ALB) console, create an access control policy group, and add the Address/CIDR Block that has permission to access Trustee as an IP entry. For more information, see Access control. The CIDR blocks to add are as follows:

The public IP address of the VPC that is attached when you deploy the EAS service.

The egress IP address of the inference client.
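When preparing the IP entries, single addresses and CIDR blocks can be normalized and checked with Python's standard `ipaddress` module. This is an illustrative sketch: the helper names are hypothetical, the addresses are reserved documentation ranges rather than real values, and the ALB console remains the place where the policy group is actually created.

```python
import ipaddress


def to_cidr_entries(addresses: list[str]) -> list[str]:
    """Normalize single IPs and CIDR blocks to CIDR notation, dropping duplicates."""
    entries = []
    for item in addresses:
        # strict=False turns a host address such as 198.51.100.7/24 into its network.
        text = str(ipaddress.ip_network(item.strip(), strict=False))
        if text not in entries:
            entries.append(text)
    return entries


def is_allowed(client_ip: str, entries: list[str]) -> bool:
    """Check whether client_ip falls inside any of the whitelist entries."""
    ip = ipaddress.ip_address(client_ip)
    return any(ip in ipaddress.ip_network(entry) for entry in entries)


entries = to_cidr_entries(["203.0.113.10", "198.51.100.7/24", "203.0.113.10"])
print(entries)  # prints ['203.0.113.10/32', '198.51.100.0/24']
```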

Run the following command to obtain the ID of the ALB instance used by the Trustee instance on the cluster.

    kubectl get ing --namespace ${DEPLOY_NAMESPACE} kbs-ingress -o jsonpath='{.status.loadBalancer.ingress[0].hostname}' | cut -d'.' -f1 | sed 's/[^a-zA-Z0-9-]//g'

The expected output is as follows:

    alb-llcdzbw0qivhk0****

In the Alibaba Cloud ALB console, switch to the region where the cluster is located, search for the ALB instance obtained in the previous step, and click the instance ID to go to the instance details page. At the bottom of the page, in the Instance Properties area, click Disable Configuration Read-only Mode.

Switch to the Listeners tab. In the Access Control column of the target listener instance, click Enable and configure the whitelist to be the access control policy group that you created in the previous step.
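The `cut` and `sed` part of the kubectl pipeline used to obtain the ALB instance ID keeps the first DNS label of the ingress hostname and strips any character that is not a letter, digit, or hyphen. For reference, the same transformation can be sketched in Python; `alb_id_from_hostname` is a hypothetical helper and the hostname in the example is made up.

```python
import re


def alb_id_from_hostname(hostname: str) -> str:
    """Mirror `cut -d'.' -f1 | sed 's/[^a-zA-Z0-9-]//g'` on an ingress hostname."""
    first_label = hostname.split(".", 1)[0]
    return re.sub(r"[^a-zA-Z0-9-]", "", first_label)


# Hypothetical hostname, used only to show the transformation.
print(alb_id_from_hostname("alb-abc123def456.cn-hangzhou.alb.aliyuncsslb.com"))
# prints alb-abc123def456
```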

Create a credential to store the model decryption key.

The model decryption key managed by Trustee is actually stored in KMS. The key can be accessed only after the remote attestation service authenticates the target environment.

Go to the Key Management Service console. In the navigation pane on the left, go to the page that contains the Generic Secret tab. On that tab, click Create Secret. The main settings are as follows:

Secret Name: A custom name for the secret, used to index the key. For example, model-decryption-key.

Set Secret Value: Enter the key used to encrypt the model. For example, 0Bn4Q1wwY9fN3P. Your key may be different.

Encryption Master Key: Select the master key that you created in the previous step.
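The secret name chosen here is what the deployment later refers to through a KBS URI in the format `kbs:///default/aliyun/<secret_name_of_the_key>`. A small helper can build that string so the name is typed only once. This is a sketch: `kbs_uri` is a hypothetical function, and the input checks are assumptions added for safety, not rules documented by Trustee or KMS.

```python
def kbs_uri(secret_name: str) -> str:
    """Build the KBS URI for a secret stored in Alibaba Cloud KMS."""
    # Assumed sanity checks: reject empty names, path separators, and stray whitespace.
    if not secret_name or "/" in secret_name or secret_name != secret_name.strip():
        raise ValueError("secret name must be non-empty, without '/' or surrounding spaces")
    return f"kbs:///default/aliyun/{secret_name}"


print(kbs_uri("model-decryption-key"))  # prints kbs:///default/aliyun/model-decryption-key
```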

Step 2: Deploy the encrypted model using PAI-EAS

Log on to the PAI console. Select a region on the top of the page. Then, select the desired workspace and click Elastic Algorithm Service (EAS).

On the Elastic Algorithm Service (EAS) page, click Deploy Service. In the Custom Model Deployment area, click Custom Deployment.

On the Custom Deployment page, configure the following key parameters. For information about other parameters, see Custom deployment.

| Parameter | Description |
| --- | --- |
| Environment Information > Deployment Method | Select Image Deployment. |
| Environment Information > Image Configuration | Select an image. In this example, select Official Image > chat-llm-webui:3.0-vllm. |
| Environment Information > Model Configuration | Click the +OSS button and configure the following parameters. OSS: Select the directory where the model ciphertext is located. For example, oss://examplebucket/qwen-encrypted/. Your path may be different. Mount Path: The directory where the plaintext model is mounted. For example, /mnt/model. |
| Environment Information > Run Command | An example configuration is python webui/webui_server.py --port=8000 --model-path=/mnt/model --backend=vllm. Note that --model-path must be the same as the mount path to read the decrypted model. |
| Environment Information > Port Number | In this solution, the port number is set to 8000. |
| Environment Information > Environment Variables | You can add environment variables for the Trustee remote attestation service to use for authentication. In this solution, an environment variable is added with the key eas-test and the value 123. |
| Resource Deployment > Deployment Resources | In this solution, the resource specification is set to ml.gu7i.c32m188.1-gu30. |
| VPC > VPC, VSwitch, Security Group Name | Configure a VPC and set an SNAT public IP address for the VPC to enable public network access for EAS. This allows EAS to access the remote attestation service and perform environment security checks. |
| Service Features > Configure Secure Encrypted Environment | Turn on the Configure Secure Encrypted Environment switch and configure the following parameters. File Encryption Method: The encryption method used for model encryption. Sam and Gocryptfs are supported. In this solution, Sam is selected. System Trust Management Service Address: The address of the deployed Trustee service. For example, http://alb-ppams74szbwg2f****.cn-shanghai.alb.aliyuncsslb.com/trustee. KBS URI Of Decryption Key: The KBS URI of the model decryption key. The format is kbs:///default/aliyun/<secret_name_of_the_key>. Replace <secret_name_of_the_key> with the name of the secret you created in the previous step. |

The following is an example of the final JSON configuration:

After you configure the parameters, click Deploy.
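Because `--model-path` in the run command has to match the mount path, a quick check before deploying can catch a mismatch early. The sketch below parses the run command with the standard `shlex` module and compares the two values. The helper names are hypothetical, and the check handles only the `--model-path=VALUE` and `--model-path VALUE` forms.

```python
import shlex
from typing import Optional


def model_path_from_command(command: str) -> Optional[str]:
    """Return the value of --model-path from a run command, or None if it is absent."""
    tokens = shlex.split(command)
    for index, token in enumerate(tokens):
        if token.startswith("--model-path="):
            return token.split("=", 1)[1]
        if token == "--model-path" and index + 1 < len(tokens):
            return tokens[index + 1]
    return None


def matches_mount_path(command: str, mount_path: str) -> bool:
    """Compare --model-path with the mount path, ignoring a trailing slash."""
    model_path = model_path_from_command(command)
    return model_path is not None and model_path.rstrip("/") == mount_path.rstrip("/")


run_command = "python webui/webui_server.py --port=8000 --model-path=/mnt/model --backend=vllm"
print(matches_mount_path(run_command, "/mnt/model"))  # prints True
```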

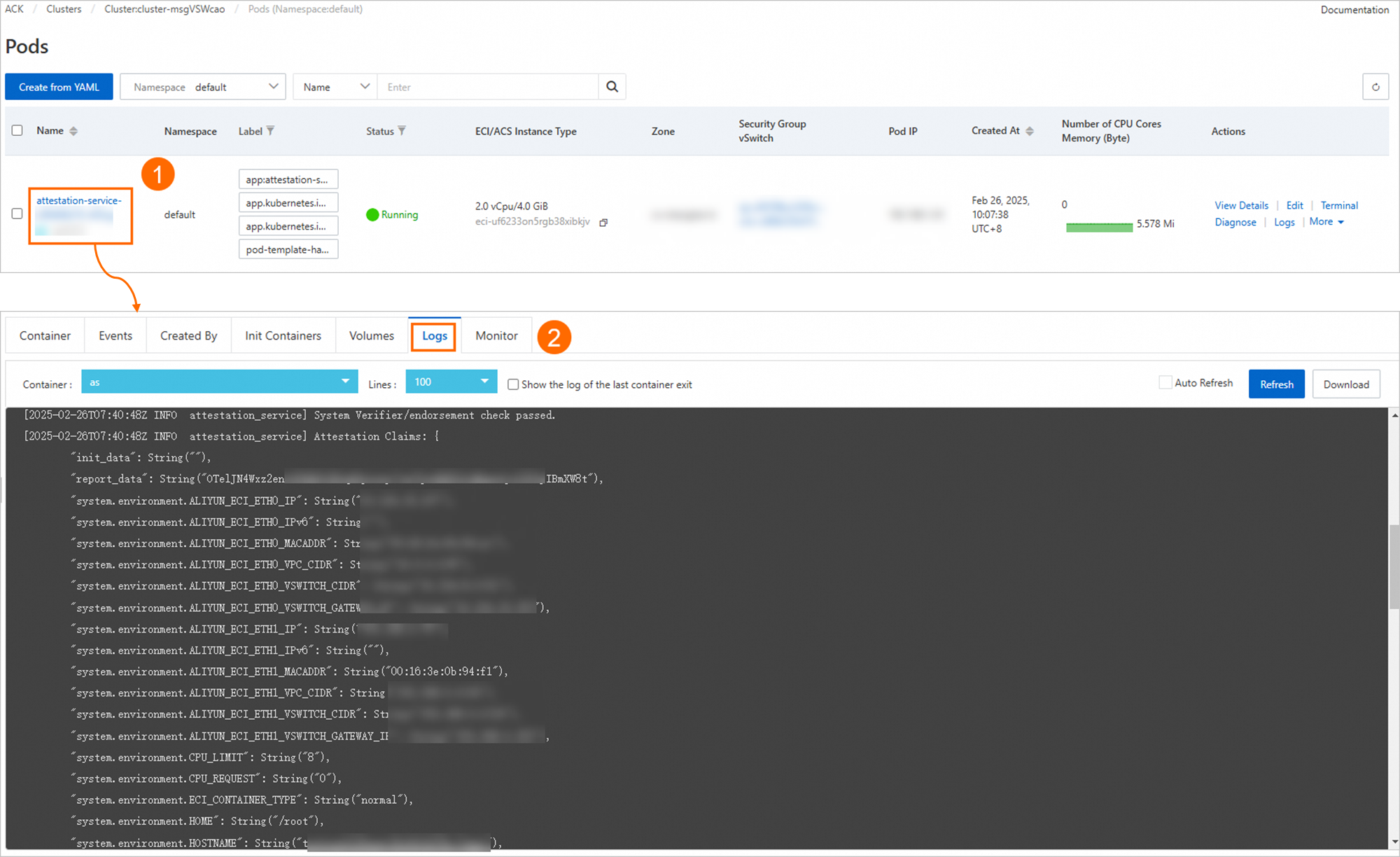

When the Service Status is Running, the service is deployed. You can then go to the Cluster List page, click the target cluster name, and on the Pods page, follow the instructions in the figure below to view the logs of the attestation-service-* container. The logs show whether the Trustee remote attestation service has authenticated the model deployment environment and provide details about the execution environment.

Step 3: Call the service to run secure inference

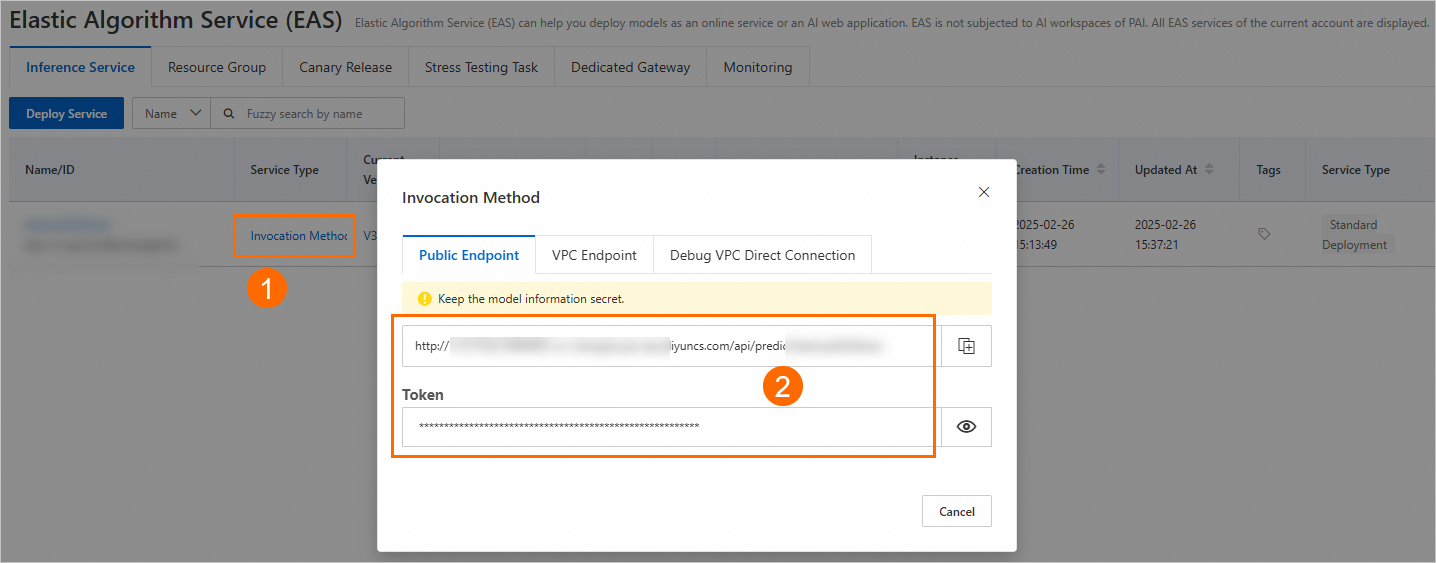

1. View the service endpoint

On the Elastic Algorithm Service (EAS) page, click View Invocation Method in the Service Method column of the target service to obtain the service endpoint and token.

2. Call the EAS service

You can run the following cURL command to send an inference request to the inference service.

    curl <Service_URL> \
      -H "Content-type: application/json" \
      --data-binary @openai_chat_body.json \
      -v \
      -H "Connection: close" \
      -H "Authorization: <Token>"

Where:

<Service_URL>: The EAS service endpoint.

<Token>: The EAS service token.

openai_chat_body.json is the original inference request. The following is an example of the request content:

    {
      "max_new_tokens": 4096,
      "use_stream_chat": false,
      "prompt": "What is the capital of Canada?",
      "system_prompt": "Act like you are a knowledgeable assistant who can provide information on geography and related topics.",
      "history": [
        [
          "Can you tell me what's the capital of France?",
          "The capital of France is Paris."
        ]
      ],
      "temperature": 0.8,
      "top_k": 10,
      "top_p": 0.8,
      "do_sample": true,
      "use_cache": true
    }

The following is an example of the returned result:

    {
      "response": "The capital of Canada is Ottawa.",
      "history": [
        [
          "Can you tell me what's the capital of France?",
          "The capital of France is Paris."
        ],
        [
          "What is the capital of Canada?",
          "The capital of Canada is Ottawa."
        ]
      ]
    }
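The same call can be made from Python instead of cURL. The following is a minimal sketch using only the standard library. It reuses the field names and values from the request example above; the function names are hypothetical, and passing the returned `history` back into the next request is an assumption drawn from the sample request and response, not a documented contract.

```python
import json
import urllib.request


def build_chat_body(prompt, history=None, system_prompt=""):
    """Build a request body with the same fields as the sample openai_chat_body.json."""
    return {
        "max_new_tokens": 4096,
        "use_stream_chat": False,
        "prompt": prompt,
        "system_prompt": system_prompt,
        "history": history or [],
        "temperature": 0.8,
        "top_k": 10,
        "top_p": 0.8,
        "do_sample": True,
        "use_cache": True,
    }


def build_request(service_url, token, body):
    """Build the POST request with the EAS token in the Authorization header."""
    return urllib.request.Request(
        service_url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": token},
        method="POST",
    )


def ask(service_url, token, prompt, history=None, timeout=120.0):
    """Send one prompt and return (response_text, updated_history)."""
    request = build_request(service_url, token, build_chat_body(prompt, history))
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        reply = json.loads(resp.read().decode("utf-8"))
    return reply["response"], reply.get("history", [])
```

With the service endpoint and token from View Invocation Method, `ask(service_url, token, "What is the capital of Canada?")` sends a single request; the returned history can be supplied to a follow-up call to continue the conversation.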