PAI-EAS Spot offers a cost-effective way to deploy online inference services on preemptible instances. It is ideal for cost-sensitive scenarios that can tolerate some response latency. This document describes best practices for using PAI-EAS Spot to manage resources effectively and minimize costs while maintaining service stability.

Scenarios

Non-critical business: Applications where occasional service interruptions have minimal impact.

Fault-tolerant processing: Applications that can handle temporary service disruptions through retry mechanisms or other fault tolerance methods.

High demand for cost optimization: Businesses and projects that aim to lower operational costs by using more affordable and flexible computing resources.

Create a spot service

Log on to the PAI console. Select a region at the top of the page, select the desired workspace, and then click Enter Elastic Algorithm Service (EAS).

On the Model Online Service (EAS) page, click Deploy Service. In the Custom Model Deployment section, click Custom Deployment.

In the Resource Deployment section, select Public Resource as the resource type. Select a resource specification (L or H specifications recommended).

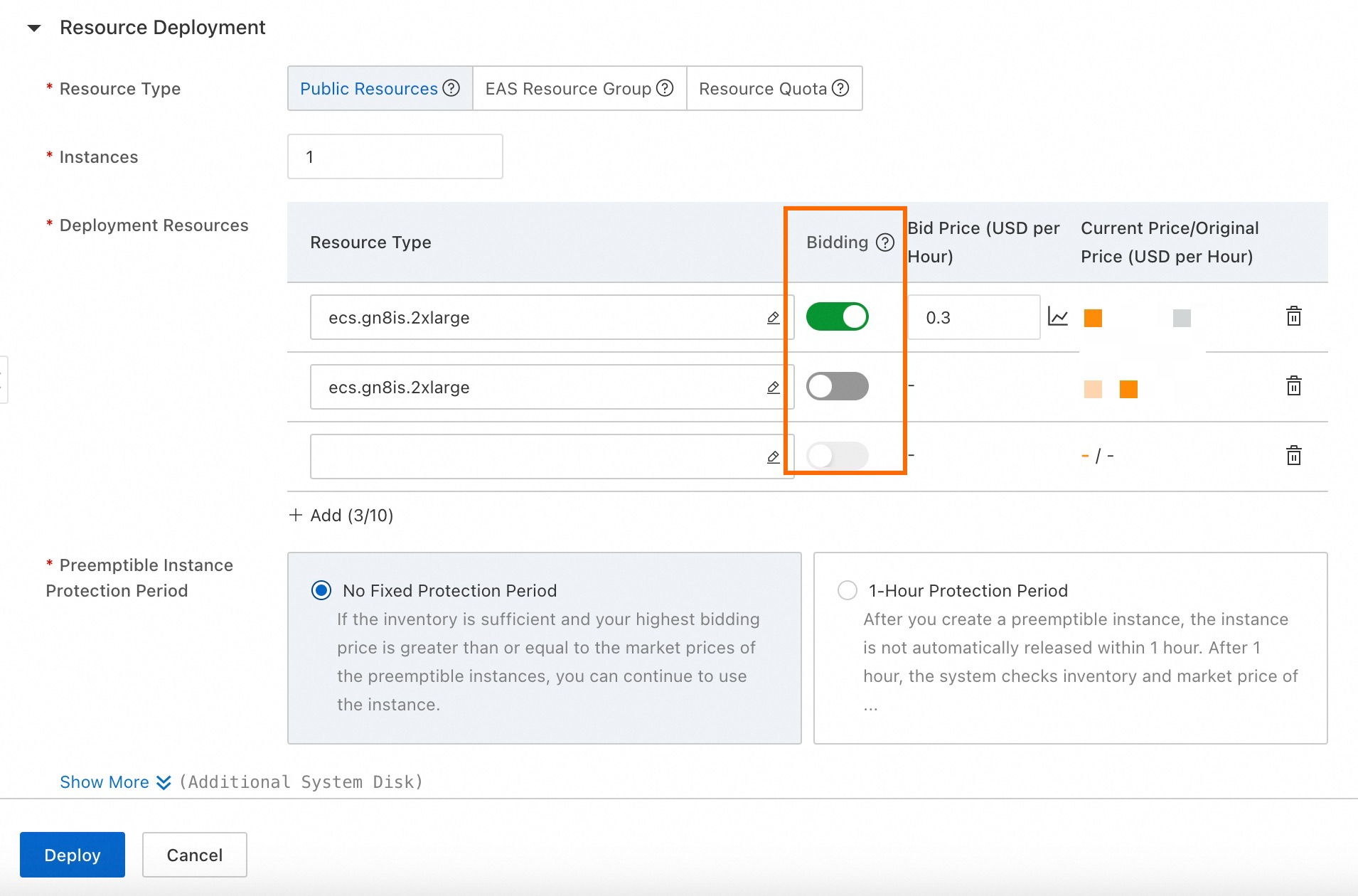

Turn on the Bidding switch and set your bid. To help you choose a bid, you can view the historical price curve, which shows the market price trend of the selected instance type over the past 48 hours.

Important

The bid price is not the price you are charged. It is the maximum price you are willing to pay; you are actually charged the market price. As long as the market price stays below your bid price, the resource remains allocated to you and is not released.

We recommend that you set the bid price to 20% of the original price. For example, if the pay-as-you-go price of an instance is $2.58 per hour, bid 20% of that price, which is $0.516 per hour; the market price is unlikely to exceed this level. This strategy yields significant cost savings while keeping resource availability stable. For more information, see Specify preemptible instances.

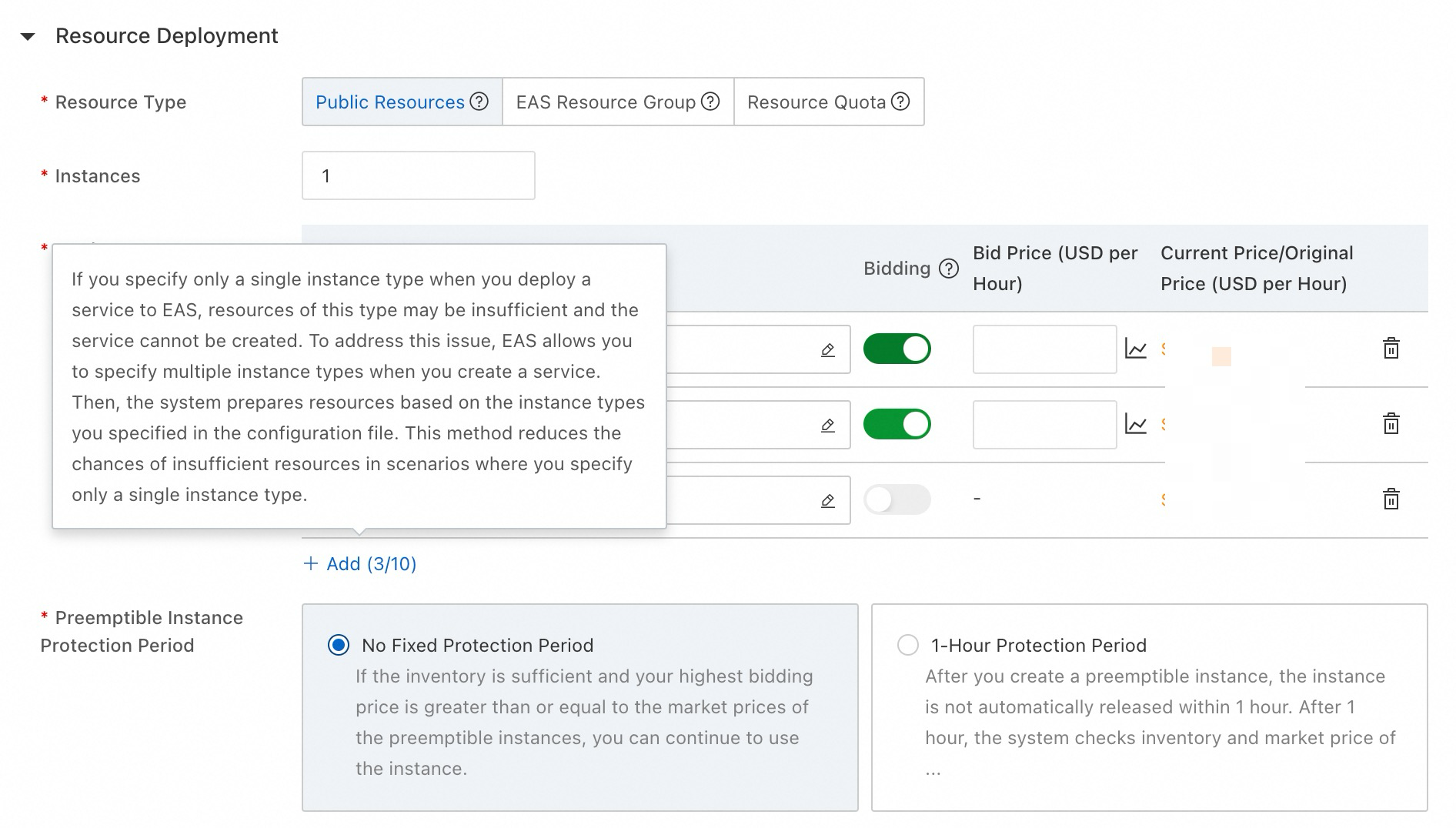
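The bidding rules above reduce to simple arithmetic. The following Python sketch computes a bid as a fraction of the pay-as-you-go price and models the simplified rule that an instance is kept while the market price stays below the bid and is billed at the market price. The function names are hypothetical and are not part of any EAS API; actual billing granularity and reclamation behavior are determined by the platform.

```python
from typing import Optional


def recommended_bid(pay_as_you_go_price: float, ratio: float = 0.2) -> float:
    """Return a bid as a fraction of the hourly pay-as-you-go price (20% by default)."""
    if not 0 < ratio <= 1:
        raise ValueError("ratio must be in (0, 1]")
    return round(pay_as_you_go_price * ratio, 4)


def hourly_charge(market_price: float, bid_price: float) -> Optional[float]:
    """Simplified model: the instance is kept only while the market price is below
    the bid, and you pay the market price, never the bid.
    Returns None when the instance would be reclaimed."""
    if market_price < bid_price:
        return market_price
    return None


bid = recommended_bid(2.58)  # 20% of $2.58 per hour
print(bid)                           # 0.516
print(hourly_charge(0.30, bid))      # hypothetical market price below the bid: charged 0.3
print(hourly_charge(0.60, bid))      # hypothetical market price above the bid: None (reclaimed)
```

Note that the bid only caps what you are willing to pay; lowering it further does not lower the charge, it only raises the chance of reclamation.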

We recommend that you configure regular instances alongside the preemptible instances to prevent service deployment failures in case of instance preemption.

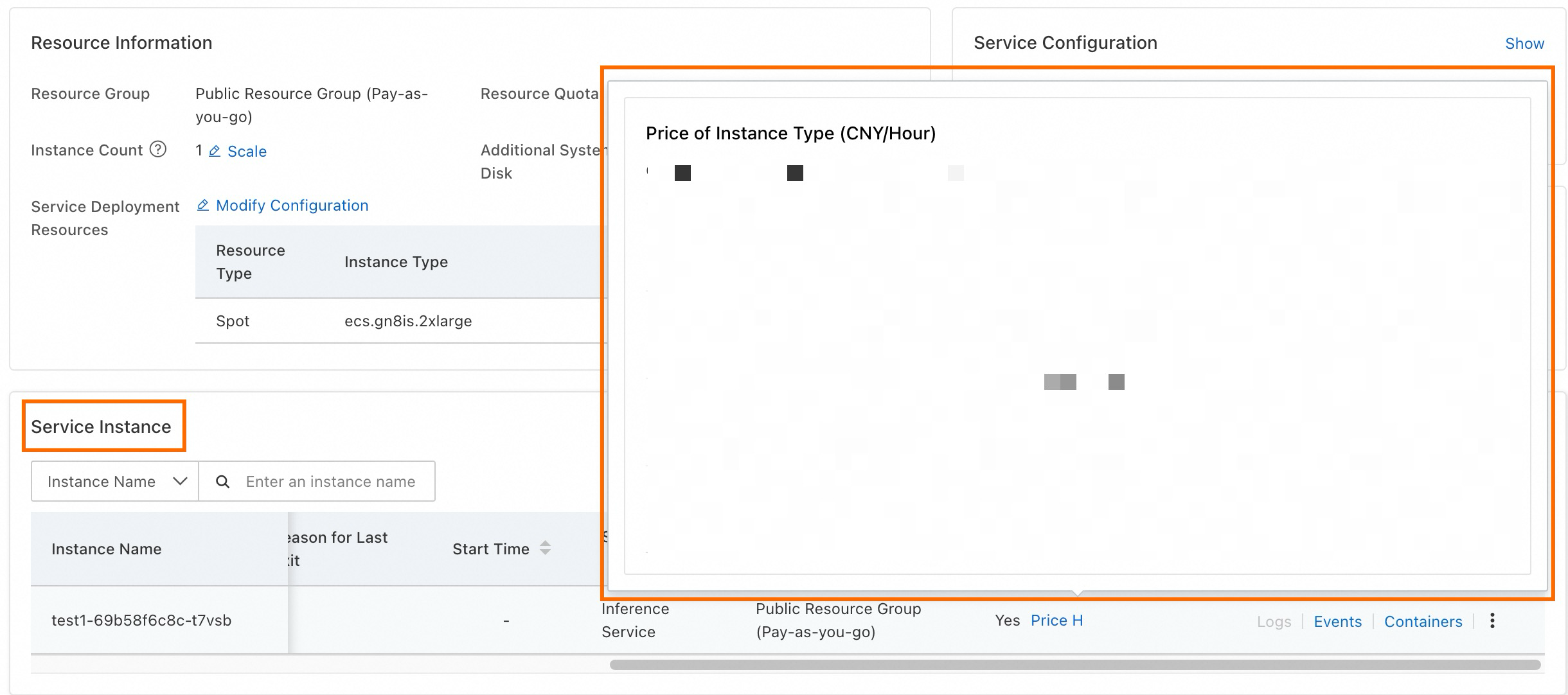

After the service is deployed, you can monitor the instance type's price fluctuations over the past 48 hours on the service details page. This helps you better understand and manage cost variations.

Recycling and fault tolerance mechanisms

EAS-side recycling mechanism

EAS typically receives a notification about 5 minutes before a spot instance is recycled. Upon notification, EAS gracefully shuts down the instance, diverting traffic away from it to prevent service interruptions. At the same time, EAS automatically launches new instances, trying the specifications in the order defined in your resource configuration, to minimize the impact of recycling and keep the service available.

We recommend that you configure regular instances alongside the preemptible instances to ensure continuous and stable service.
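EAS handles recycling on the server side, but a request in flight during the switch-over can still fail. For fault-tolerant workloads, a client-side retry with capped exponential backoff and jitter is a common way to absorb such transient errors. The following Python sketch assumes your client raises a hypothetical `TransientError` for retryable failures (for example, a connection reset or HTTP 503); the attempt count and delays are illustrative, not EAS recommendations.

```python
import random
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical error your client raises for retryable failures,
    for example a connection reset or HTTP 503 while an instance is recycled."""


def call_with_retry(
    fn: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.5,
    max_delay: float = 8.0,
    retry_on: Tuple[Type[BaseException], ...] = (TransientError,),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn, retrying retryable errors with capped exponential backoff and full jitter."""
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except retry_on:
            if attempt == max_attempts:
                raise  # give up and surface the last error to the caller
            # Delay cap doubles per attempt: 0.5, 1, 2, 4, 8, 8, ... seconds.
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, delay))  # full jitter spreads retries out
    raise ValueError("max_attempts must be at least 1")
```

Wrap your own request function as `fn`. Only retry requests that are safe to repeat, and pass `sleep=lambda s: None` in tests to skip the waits.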

Switch back to spot instances after recycling

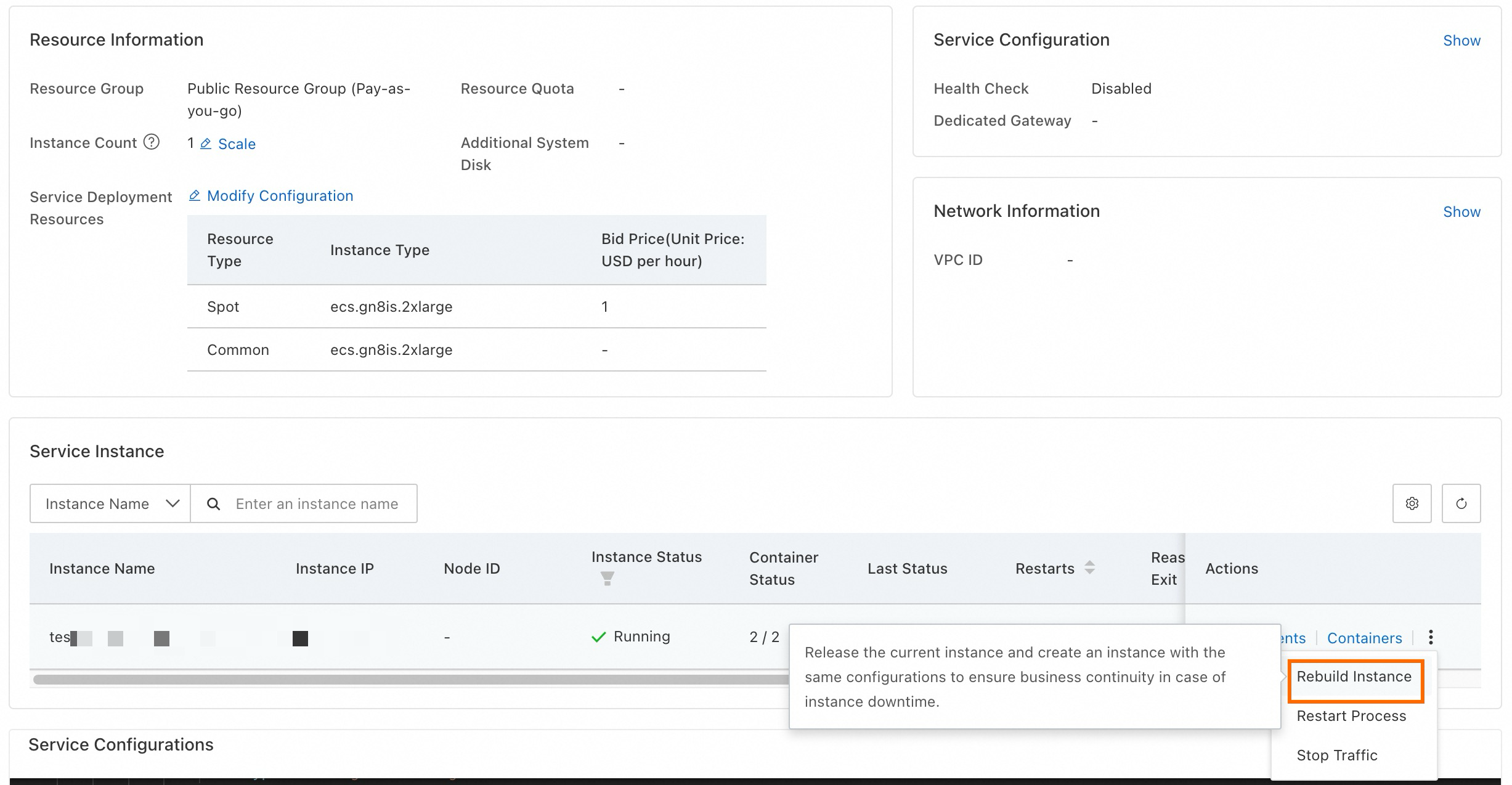

If regular resources replace a recycled spot instance and you want to switch back to the spot instance once inventory is restored, use the Rebuild Instance feature.

Click Rebuild Instance to release resources and recreate an instance of the same configuration:

Recommended configuration strategy

To enhance service stability and reliability and to accelerate the startup of new instances, we recommend the following configuration strategies.

Configure instances of multiple specifications

For service stability and reliability, diversify your resource configuration by selecting multiple specifications, and include at least one regular resource as a backup. EAS uses the specifications sequentially in the order you specify, which maximizes the stability of service operation. For more information, see Specify multiple instance types.
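Before deploying, you might sanity-check an ordered resource list against these recommendations. The following Python sketch uses a hypothetical `ResourceEntry` type rather than the actual EAS configuration format. It flags a list with fewer than two instance types, a list with no regular backup, and a list whose last (fallback) entry is a spot resource.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ResourceEntry:
    instance_type: str
    spot: bool  # True for a bidding (preemptible) entry, False for a regular one


def check_resource_order(entries: List[ResourceEntry]) -> List[str]:
    """Return warnings for an ordered resource list (entries are tried in list order)."""
    warnings: List[str] = []
    if len({e.instance_type for e in entries}) < 2:
        warnings.append("use more than one instance type")
    if not any(not e.spot for e in entries):
        warnings.append("add at least one regular (non-spot) entry as a backup")
    elif entries[-1].spot:
        warnings.append("consider placing a regular entry last as the fallback")
    return warnings


config = [
    ResourceEntry("ecs.gn6i-c16g1.4xlarge", spot=True),
    ResourceEntry("ecs.gn6i-c24g1.6xlarge", spot=True),
    ResourceEntry("ecs.gn6i-c16g1.4xlarge", spot=False),  # regular backup, tried last
]
print(check_resource_order(config))
```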

Configure local directory memory cache

To speed up the process of pulling model files when starting a new instance after recycling, configure a local directory memory cache. This uses idle memory to cache model files, reducing the time needed to read model files during scale-out and mitigating service downtime due to spot recycling.

Enable Memory Caching when creating the EAS service to enhance instance scale-out efficiency. For more information, see Enable memory caching for a local directory.

The following table shows the benefits of Memory Caching (cachefs), using Stable Diffusion models as an example. With cachefs enabled, a new instance can read the model from the memory of other instances in the service during scale-out (cachefs remote hit), which significantly reduces model loading time compared with reading directly from the mounted OSS directory.

| Model | Model size | Loading time with OSS mount (s) | Loading time with cachefs remote hit (s) |
| --- | --- | --- | --- |
| anything-v4.5.safetensors | 7.2 GB | 89.88 | 15.18 |
| Anything-v5.0-PRT-RE.safetensors | 2.0 GB | 16.73 | 5.46 |
| cetusMix_Coda2.safetensors | 3.6 GB | 24.76 | 7.13 |
| chilloutmix_NiPrunedFp32Fix.safetensors | 4.0 GB | 48.79 | 8.47 |
| CounterfeitV30_v30.safetensors | 4.0 GB | 64.99 | 7.94 |
| deliberate_v2.safetensors | 2.0 GB | 16.33 | 5.55 |
| DreamShaper_6_NoVae.safetensors | 5.6 GB | 71.78 | 10.17 |
| pastelmix-fp32.ckpt | 4.0 GB | 43.88 | 9.23 |
| revAnimated_v122.safetensors | 4.0 GB | 69.38 | 3.20 |
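To put "significantly reducing model loading time" in numbers, the following Python snippet computes the per-model speedup (OSS mount time divided by cachefs remote-hit time) from the measurements in the table. It uses only the published figures. Across these nine models, the speedup ranges from about 2.9x to about 21.7x, with a median of about 5.8x.

```python
from statistics import median

# Model loading times in seconds from the table: (OSS mount, cachefs remote hit)
LOAD_TIMES = {
    "anything-v4.5.safetensors": (89.88, 15.18),
    "Anything-v5.0-PRT-RE.safetensors": (16.73, 5.46),
    "cetusMix_Coda2.safetensors": (24.76, 7.13),
    "chilloutmix_NiPrunedFp32Fix.safetensors": (48.79, 8.47),
    "CounterfeitV30_v30.safetensors": (64.99, 7.94),
    "deliberate_v2.safetensors": (16.33, 5.55),
    "DreamShaper_6_NoVae.safetensors": (71.78, 10.17),
    "pastelmix-fp32.ckpt": (43.88, 9.23),
    "revAnimated_v122.safetensors": (69.38, 3.20),
}

# Speedup = how many times faster the cachefs remote hit is than the OSS mount.
speedups = {model: oss / cache for model, (oss, cache) in LOAD_TIMES.items()}

for model, s in sorted(speedups.items(), key=lambda kv: kv[1]):
    print(f"{model:42s} {s:5.1f}x")
print(f"median speedup: {median(speedups.values()):.1f}x")
```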

Use ACR Enterprise Edition

To improve image pulling efficiency when a new instance starts after recycling, use Container Registry (ACR) Enterprise Edition with image acceleration enabled, and deploy EAS services with the accelerated images, which carry the _accelerated suffix. When you deploy the EAS service, also select the VPC associated with your ACR instance.

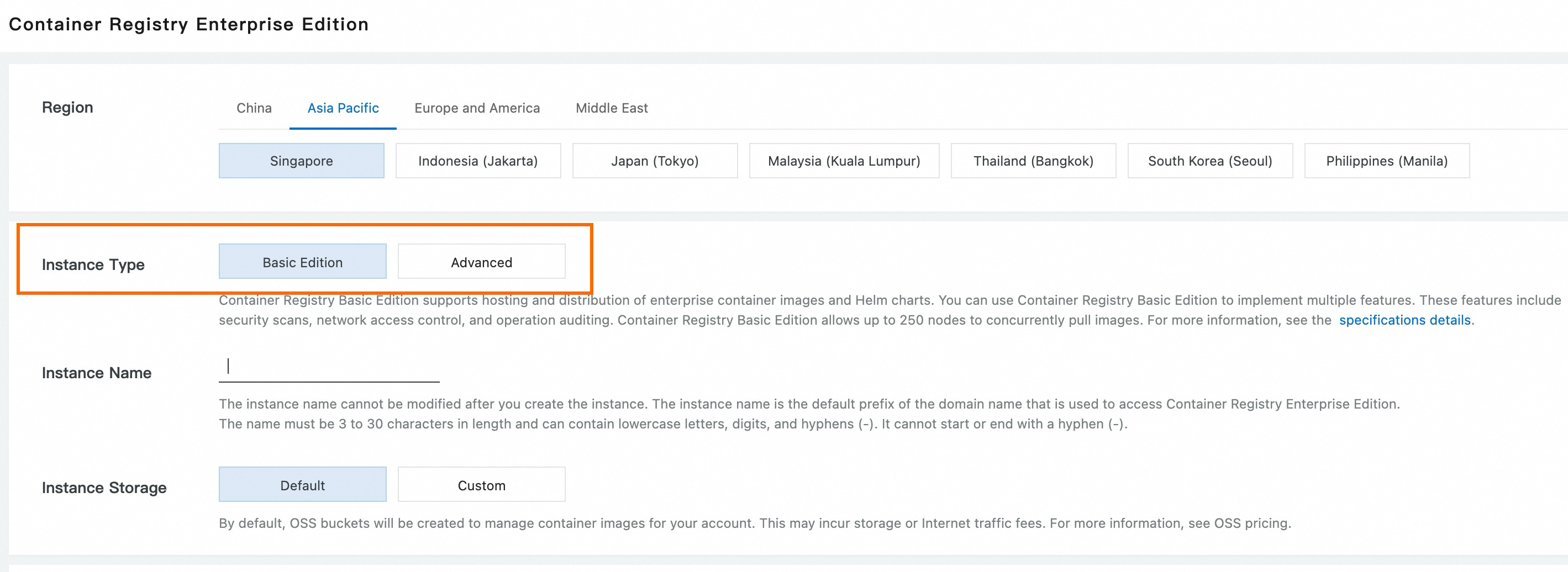

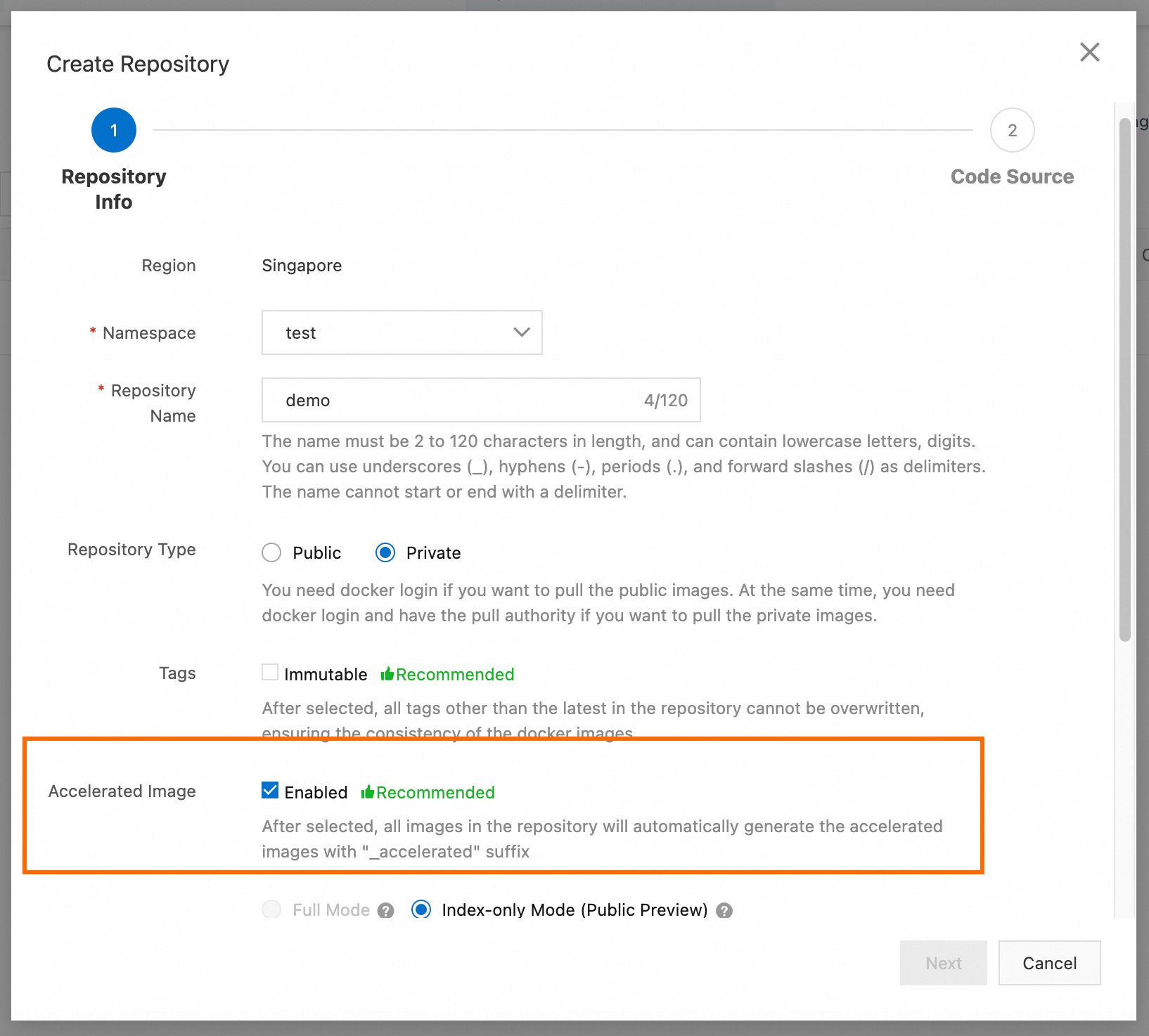

Purchase an ACR Enterprise Edition instance and select Standard Edition as the instance type. Enable image acceleration when you create an image repository to speed up the scale-out of new instances. For more information, see Use an accelerated image in PAI.
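If you script deployments, you may want to derive the accelerated image reference from the original one. The following Python sketch assumes that the accelerated image lives in the same repository with `_accelerated` appended to the tag, and that a missing tag means `latest`. Confirm the exact tag shown in your ACR console. The registry address in the example is a placeholder.

```python
def accelerated_image(image: str) -> str:
    """Return the accelerated variant of an image reference by appending
    '_accelerated' to its tag (assumed naming; a missing tag is treated as 'latest')."""
    if "@" in image:
        raise ValueError("digest references are not handled in this sketch")
    slash = image.rfind("/")
    colon = image.rfind(":")
    if colon > slash:  # a colon after the last slash separates the tag
        repo, tag = image[:colon], image[colon + 1:]
    else:  # no tag; any colon before the last slash is a registry port
        repo, tag = image, "latest"
    if not tag.endswith("_accelerated"):  # keep the function idempotent
        tag += "_accelerated"
    return f"{repo}:{tag}"


print(accelerated_image("registry.example.com/demo/sd-webui:v1"))
```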

Below is an example of the image configuration during EAS custom deployment:

Purchase the ACR instance:

Enable Accelerated Image when creating an image repository:

Combine with scaling

For businesses with significant load fluctuations, we recommend that you enable horizontal auto scaling (scale-out and scale-in). Combine a dedicated resource group, spot resource scaling, and regular resources to keep the service running smoothly and cost-efficiently. Configuration strategy:

Secure a base level of service traffic by purchasing an EAS dedicated resource group (subscription or pay-as-you-go). In the console, configure the dedicated resource group to meet the GPU, CPU, and memory requirements for service startup.

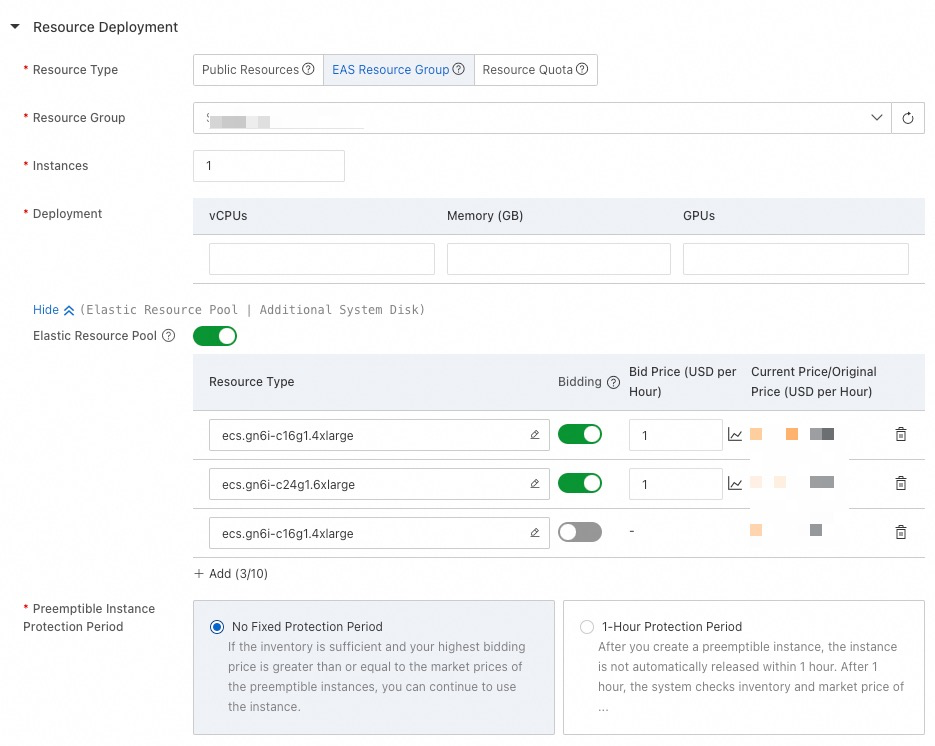

Enable Elastic Resource Pool and configure multiple resource specifications. Place spot resources first and the regular fallback resources last. During peak times, this lets the service scale out to spot resources when inventory is sufficient. If spot resources are insufficient, regular resources are used so that scale-out during business peaks still succeeds.

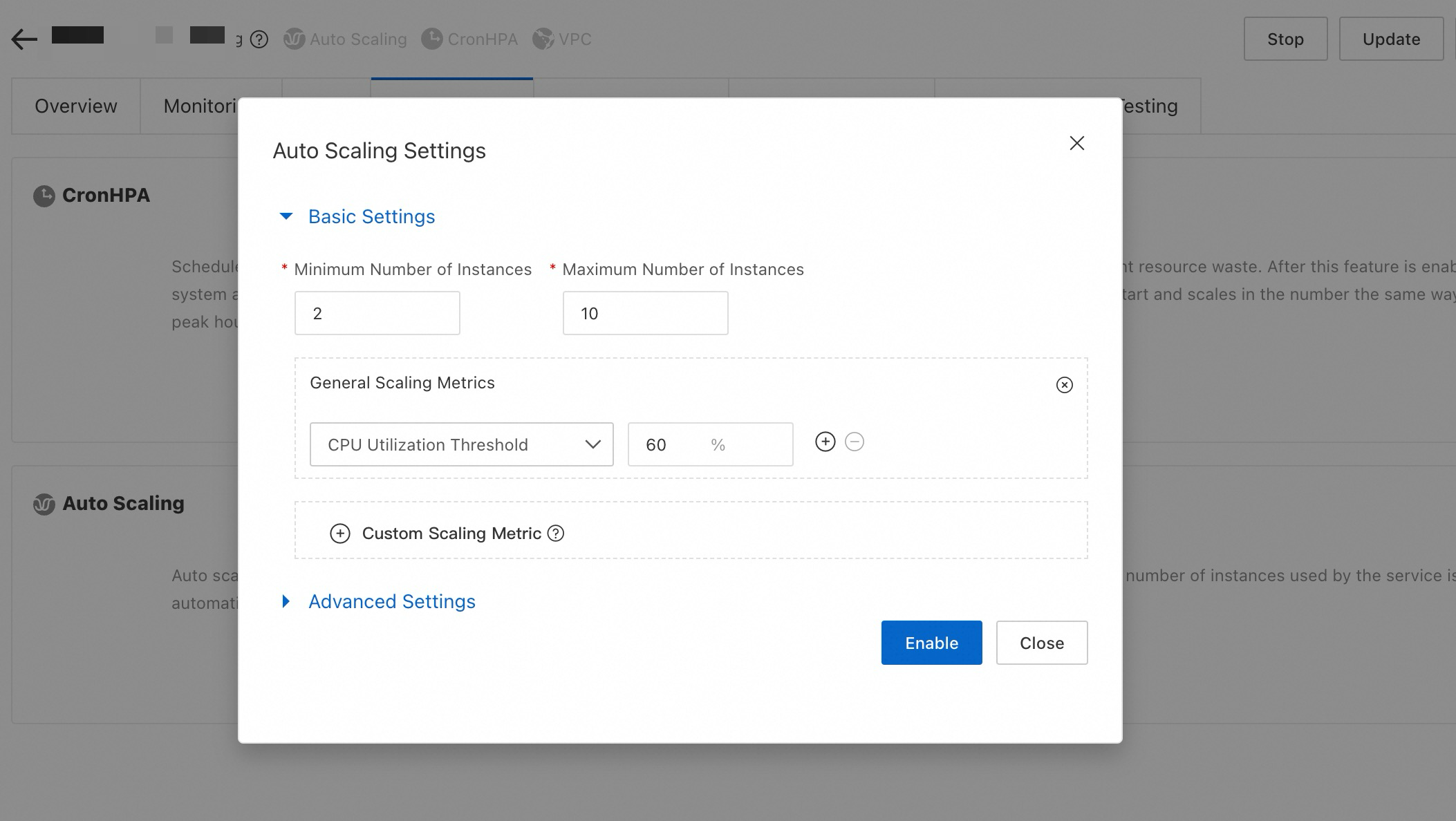

After service deployment, enable Auto Scaling based on your business metrics on the service details page. You can use General Scaling Metrics such as QPS, CPU utilization, and GPU utilization for auto scaling, or you can customize metrics to suit your needs.

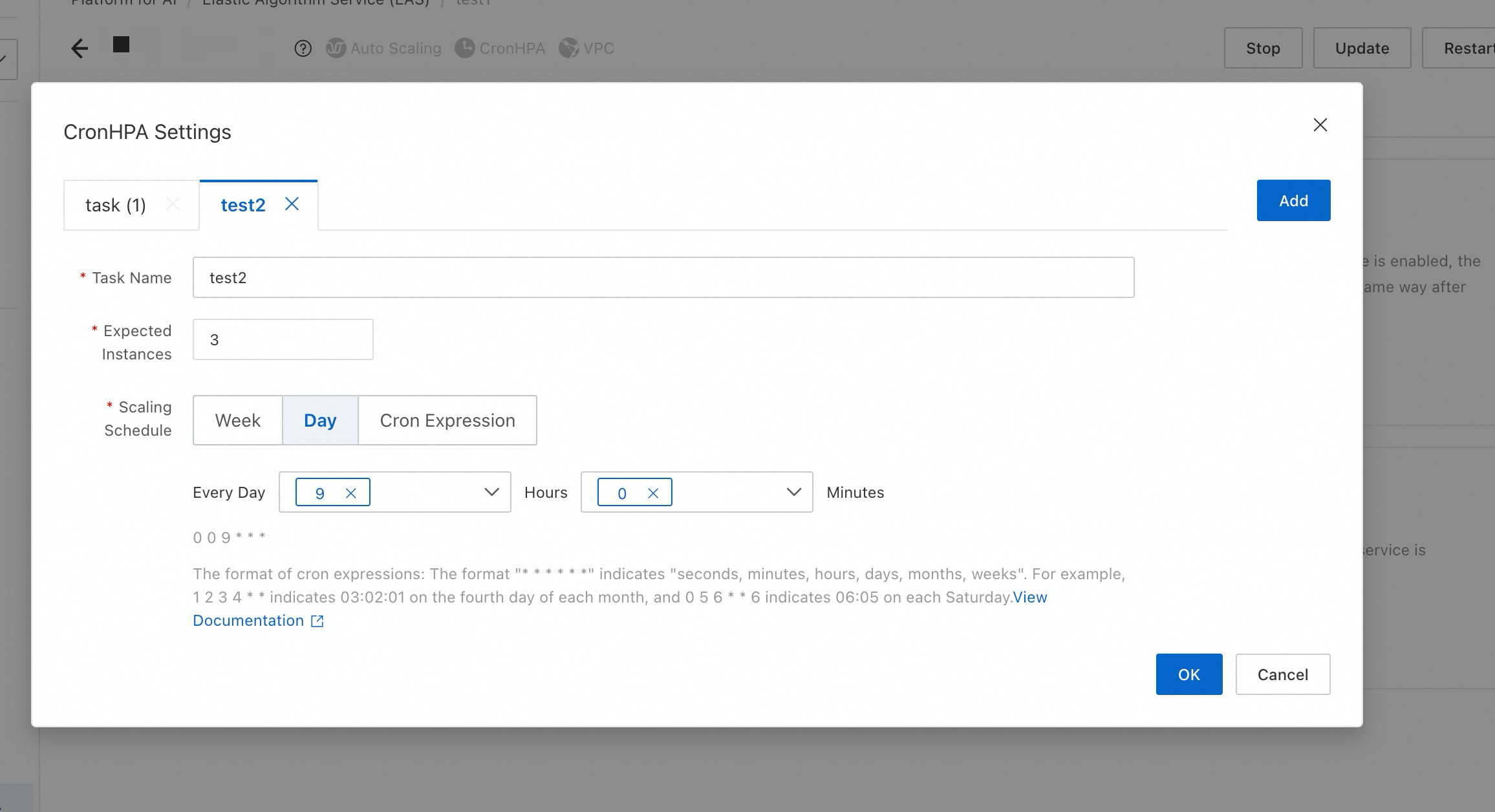
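This document does not describe the internal EAS scaling algorithm. To illustrate how a metric such as QPS or GPU utilization can drive the instance count, the following Python sketch uses a common target-tracking rule, the one documented for the Kubernetes Horizontal Pod Autoscaler. It scales the instance count in proportion to how far the per-instance metric is from its target, then clamps the result to configured bounds. All names and values are hypothetical.

```python
import math


def desired_replicas(current: int, metric_per_instance: float, target_per_instance: float,
                     min_replicas: int, max_replicas: int) -> int:
    """Target-tracking rule: choose enough instances to bring the average
    per-instance metric back to the target, then clamp to [min, max]."""
    if current < 1 or target_per_instance <= 0:
        raise ValueError("current must be >= 1 and target must be positive")
    desired = math.ceil(current * metric_per_instance / target_per_instance)
    return max(min_replicas, min(max_replicas, desired))


# 4 instances averaging 150 QPS each, with a target of 100 QPS per instance
print(desired_replicas(4, 150, 100, min_replicas=1, max_replicas=10))
```

With these inputs, the total load of 600 QPS at 100 QPS per instance calls for 6 instances. The max bound keeps a traffic spike from scaling past what your resource configuration can supply.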

You can also enable Scheduled Scaling to align with predictable traffic fluctuations over time.
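As an illustration of schedule-based capacity, the following Python sketch maps the time of day to an instance count with a hypothetical daily schedule. Each entry applies from its start time until the next entry begins, and the last entry of the day carries over past midnight. This is not the EAS Scheduled Scaling configuration format.

```python
from datetime import datetime, time
from typing import List, Tuple

# Hypothetical daily schedule: (start time, instance count), applied until the next entry.
SCHEDULE: List[Tuple[time, int]] = [
    (time(0, 0), 2),    # overnight baseline
    (time(9, 0), 8),    # daytime peak
    (time(21, 0), 4),   # evening
]


def scheduled_replicas(now: datetime, schedule: List[Tuple[time, int]]) -> int:
    """Return the instance count of the latest entry whose start time is not after now.
    Before the first entry of the day, the last entry (from the previous day) applies."""
    entries = sorted(schedule)
    current = entries[-1][1]
    for start, replicas in entries:
        if start <= now.time():
            current = replicas
    return current


print(scheduled_replicas(datetime(2024, 1, 1, 10, 30), SCHEDULE))
```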

When instances are created for the service, EAS first uses the resources of the dedicated resource group and then allocates available resources from the elastic resource pool in the configured order.

For example, if the dedicated resource group is fully used and the service must scale out to the public resource pool, EAS first checks how many instances the current spot inventory of ecs.gn6i-c16g1.4xlarge can support. If resources are still insufficient, it tries spot ecs.gn6i-c24g1.6xlarge, and finally regular ecs.gn6i-c16g1.4xlarge for any remaining instances.

If the service needs 8 instances in total, the allocation might be 2 instances from the dedicated resource group, 3 from spot ecs.gn6i-c16g1.4xlarge, 2 from spot ecs.gn6i-c24g1.6xlarge, and 1 from regular ecs.gn6i-c16g1.4xlarge.
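The allocation order described above can be sketched as a greedy pass. The dedicated resource group is filled first, and then each elastic resource pool entry is used in its configured order until the required count is met. The following Python sketch is a simplified model, not the EAS scheduler. Capacity is counted in whole instances, and the inventory figures below are hypothetical values chosen to reproduce the 8-instance example.

```python
from typing import List, Tuple


def allocate(required: int, dedicated_free: int,
             pool: List[Tuple[str, int]]) -> Tuple[List[Tuple[str, int]], int]:
    """Greedy allocation: fill the dedicated resource group first, then walk the
    elastic resource pool entries in order. Returns (plan, instances left unplaced)."""
    plan: List[Tuple[str, int]] = []
    remaining = required
    take = min(remaining, dedicated_free)
    if take > 0:
        plan.append(("dedicated resource group", take))
        remaining -= take
    for label, available in pool:
        if remaining == 0:
            break
        take = min(remaining, available)
        if take > 0:
            plan.append((label, take))
            remaining -= take
    return plan, remaining


# Hypothetical current inventory for each entry, listed in the configured order.
pool = [
    ("spot ecs.gn6i-c16g1.4xlarge", 3),
    ("spot ecs.gn6i-c24g1.6xlarge", 2),
    ("regular ecs.gn6i-c16g1.4xlarge", 10),
]
plan, unplaced = allocate(8, dedicated_free=2, pool=pool)
for label, count in plan:
    print(f"{count} x {label}")
```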

For more information on EAS scaling, see: