Some data in buckets for which OSS-HDFS is enabled is not frequently accessed but must be retained to meet compliance or archiving requirements. To meet these requirements and reduce storage costs, OSS-HDFS provides the automatic storage tiering feature. This feature automatically moves frequently accessed data to the Standard storage class and rarely accessed data to the Infrequent Access (IA), Archive, Cold Archive, or Deep Cold Archive storage class.

Prerequisites

Data is written to OSS-HDFS.

A ticket is submitted to use the automatic storage tiering feature to convert the storage class of an object to IA, Archive, or Cold Archive. To convert the storage class of an object to Deep Cold Archive, you must submit a separate ticket. If you have never enabled the feature, a single ticket is enough to enable conversion to IA, Archive, Cold Archive, and Deep Cold Archive.

The bucket for which you want to use the automatic storage tiering feature to convert the storage class of an object to IA, Archive, or Cold Archive is located in one of the following regions: China (Hangzhou), China (Shanghai), China (Beijing), China (Shenzhen), China (Zhangjiakou), China (Ulanqab), China (Hong Kong), Singapore, Germany (Frankfurt), US (Silicon Valley), US (Virginia), and Indonesia (Jakarta).

The bucket for which you want to use the automatic storage tiering feature to convert the storage class of an object to Deep Cold Archive is located in one of the following regions: China (Hangzhou), China (Shanghai), China (Beijing), China (Shenzhen), China (Zhangjiakou), China (Ulanqab), and Singapore.

JindoSDK 6.8.0 or later is installed and configured. For more information, see Connect non-EMR clusters to OSS-HDFS.

Usage notes

If the version of JindoSDK is earlier than 6.8.0, you cannot create an object in an IA, Archive, Cold Archive, or Deep Cold Archive directory. If you need to create an object in an IA, Archive, Cold Archive, or Deep Cold Archive directory, you can create an object and save it in a Standard directory. Then, move the object to the IA, Archive, Cold Archive, or Deep Cold Archive directory by using the rename operation.

If you want to directly create an object in the IA, Archive, Cold Archive, or Deep Cold Archive directory, you must upgrade JindoSDK to 6.8.0 or later.

Converting the storage class of objects to Archive, Cold Archive, or Deep Cold Archive generates additional system overhead, and restoring the data is slow. Proceed with caution.

You can use the automatic storage tiering feature to convert the storage class of objects based on the following rules:

Hot to cold

Cold to hot
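For JindoSDK versions earlier than 6.8.0, the create-then-rename workaround described in the usage notes above can be sketched as follows. This is a minimal sketch, assuming a Hadoop client that is already connected to OSS-HDFS. The `hadoop fs -put` and `hadoop fs -mv` commands, the local file name, and the directory names standard-dir and dir1 are illustrative assumptions and do not come from this topic. The function only prints the commands so that you can review them before you run them.

```shell
#!/bin/sh
# Sketch (assumption): stage a file in a Standard directory, then rename it
# into a directory that has a cold storage policy. Dry run: commands are
# printed, not executed. All paths are hypothetical.
stage_then_rename() {
  local_file=$1     # local file to upload
  standard_dir=$2   # directory without a cold storage policy
  cold_dir=$3       # directory with an IA/Archive/Cold Archive policy
  name=$(basename "$local_file")
  # Step 1: create the object in the Standard directory.
  echo "hadoop fs -put $local_file $standard_dir/$name"
  # Step 2: move it into the cold directory by using the rename operation.
  echo "hadoop fs -mv $standard_dir/$name $cold_dir/$name"
}

stage_then_rename ./data.csv oss://examplebucket/standard-dir oss://examplebucket/dir1
```

With JindoSDK 6.8.0 or later this detour is not needed, because objects can be created directly in the cold directory.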

Billing rules

Data retrieval fees

You are charged data retrieval fees when you read IA, Archive, Cold Archive, or Deep Cold Archive objects in OSS-HDFS. We recommend that you do not store frequently accessed data as IA, Archive, Cold Archive, or Deep Cold Archive objects. For more information, see Data processing fees.

Tag fees

When you configure a storage policy for data in OSS-HDFS, you must add tags to data blocks. You are charged for the tags based on object tagging rules. For more information, see Object tagging fees.

Storage usage of objects that are stored for less than the minimum storage duration

The minimum storage duration is 30 days for IA objects, 60 days for Archive objects, and 180 days for Cold Archive and Deep Cold Archive objects. To avoid unnecessary costs, make sure that objects meet the minimum storage duration requirements when you use jindofs to convert the storage class of the objects. The following table provides examples.

| Storage class conversion | Example | Calculation method of the minimum storage duration | Actual storage usage | Method to prevent storage usage of an object that is stored for less than the minimum storage duration |
| --- | --- | --- | --- | --- |
| Hot to cold | From Standard (stored for 10 days) to IA | OSS-HDFS does not recalculate the storage duration when the storage class of the object changes. The number of days in which the object is stored in the Standard storage class before the storage class conversion is included in the minimum storage duration of the IA object. | Storage usage of the Standard object for 10 days | Continue storing the IA object for 20 days |
| Hot to cold | From IA (stored for 10 days) to Archive | Same as the preceding row: the storage duration is not recalculated. | Storage usage of the IA object for 10 days | Continue storing the Archive object for 50 days |
| Hot to cold | From Standard (stored for 10 days) to Cold Archive | OSS-HDFS recalculates the storage duration when the storage class of the object changes. The number of days in which the object is stored in the Standard storage class before the storage class conversion is not included in the minimum storage duration of the Cold Archive object. | Storage usage of the Standard object for 10 days | Continue storing the Cold Archive object for 180 days |
| Hot to cold | From Standard (stored for 10 days) to Deep Cold Archive | Same as the preceding row: the storage duration is recalculated. | Storage usage of the Standard object for 10 days | Continue storing the Deep Cold Archive object for 180 days |
| Cold to hot | From Cold Archive (stored for 10 days) to IA | Same as the preceding row: the storage duration is recalculated. | Storage usage of the Cold Archive object for 10 days | Continue storing the Cold Archive object for 170 days. Continue storing the IA object for 30 days after the storage class conversion. |
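The arithmetic behind the examples above can be sketched as a small helper. This is a minimal sketch, not part of jindofs: the function name remaining_days and the carry and reset mode names are hypothetical. Pass carry when the days already stored count toward the new minimum storage duration, and reset when OSS-HDFS recalculates the storage duration.

```shell
#!/bin/sh
# Sketch (hypothetical helper): days an object must still be stored to meet
# the minimum storage duration of its new storage class.
#   $1 = minimum storage duration of the target class (30, 60, or 180)
#   $2 = days already stored before the conversion
#   $3 = carry (earlier days count) or reset (duration is recalculated)
remaining_days() {
  min_days=$1
  stored_days=$2
  mode=$3
  if [ "$mode" = "carry" ]; then
    remaining=$((min_days - stored_days))
  else
    remaining=$min_days
  fi
  # The minimum is already met if the result would be negative.
  if [ "$remaining" -lt 0 ]; then
    remaining=0
  fi
  echo "$remaining"
}

remaining_days 30 10 carry    # Standard (10 days) to IA: prints 20
remaining_days 60 10 carry    # IA (10 days) to Archive: prints 50
remaining_days 180 10 reset   # Standard (10 days) to Cold Archive: prints 180
```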

Procedure

Connect to an Elastic Compute Service (ECS) instance. For more information, see Connect to an instance.

Download the JindoFS SDK JAR package.

Configure the AccessKey pair and environment variables.

Go to the bin directory of the installed JindoFS SDK JAR package.

The following sample command goes to the bin directory of the jindofs-sdk-x.x.x-linux JAR package. If you use a different version of JindoFS SDK, replace the package name with the name of the corresponding JindoFS SDK JAR package.

    cd jindofs-sdk-x.x.x-linux/bin/

Create a configuration file named jindosdk.cfg in the bin directory, and then add the following parameters to the configuration file. Specify the AccessKey ID and AccessKey secret that are used to access OSS-HDFS. In this example, the endpoint of the China (Hangzhou) region is used. Specify your actual endpoint.

    [client]
    fs.oss.accessKeyId = yourAccessKeyId
    fs.oss.accessKeySecret = yourAccessKeySecret
    fs.oss.endpoint = cn-hangzhou.oss-dls.aliyuncs.com

Configure environment variables.

    export JINDOSDK_CONF_DIR=<JINDOSDK_CONF_DIR>

Note: Set <JINDOSDK_CONF_DIR> to the absolute path of the jindosdk.cfg configuration file.

Specify a storage policy for the data that is written to OSS-HDFS. The following table describes the storage policy.

| Scenario | Command | Result |
| --- | --- | --- |
| IA | ./jindofs fs -setStoragePolicy -path oss://examplebucket/dir1 -policy CLOUD_IA | The objects in the dir1/ directory have a tag whose key is transition-storage-class and whose value is IA. |
| Archive | ./jindofs fs -setStoragePolicy -path oss://examplebucket/dir2 -policy CLOUD_AR | The objects in the dir2/ directory have a tag whose key is transition-storage-class and whose value is Archive. |
| Cold Archive | ./jindofs fs -setStoragePolicy -path oss://examplebucket/dir3 -policy CLOUD_COLD_AR | The objects in the dir3/ directory have a tag whose key is transition-storage-class and whose value is ColdArchive. |
| Deep Cold Archive | ./jindofs fs -setStoragePolicy -path oss://examplebucket/dir4 -policy CLOUD_DEEP_COLD_AR | The objects in the dir4/ directory have a tag whose key is transition-storage-class and whose value is DeepColdArchive. |
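When several directories need different policies, the mapping above can be wrapped in a small script. The following is a minimal sketch: the helper names policy_for and set_policy_cmd are hypothetical, and only the -setStoragePolicy command and the four policy names come from the table above. The script prints the commands for review instead of running them.

```shell
#!/bin/sh
# Sketch (hypothetical helpers): map a target storage class to the policy
# name used by ./jindofs fs -setStoragePolicy.
policy_for() {
  case "$1" in
    IA)              echo CLOUD_IA ;;
    Archive)         echo CLOUD_AR ;;
    ColdArchive)     echo CLOUD_COLD_AR ;;
    DeepColdArchive) echo CLOUD_DEEP_COLD_AR ;;
    *) echo "unknown storage class: $1" >&2; return 1 ;;
  esac
}

# Build the command for one path ($1) and one target storage class ($2).
set_policy_cmd() {
  policy=$(policy_for "$2") || return 1
  echo "./jindofs fs -setStoragePolicy -path $1 -policy $policy"
}

# Dry run for the four example directories.
set_policy_cmd oss://examplebucket/dir1 IA
set_policy_cmd oss://examplebucket/dir2 Archive
set_policy_cmd oss://examplebucket/dir3 ColdArchive
set_policy_cmd oss://examplebucket/dir4 DeepColdArchive
```

To apply the policies, run the printed commands from the bin directory of the JindoFS SDK package.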

Enable the automatic storage tiering feature.

Log on to the OSS console.

In the left-side navigation pane, click Buckets. On the Buckets page, click the name of the bucket for which you want to enable the automatic storage tiering feature.

In the left-side navigation tree, choose .

On the OSS-HDFS tab, click Configure.

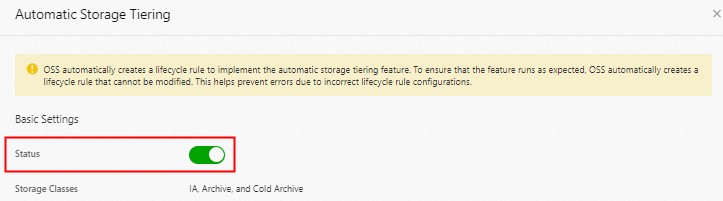

In the Basic Settings section of the Automatic Storage Tiering panel, turn on Status.

To prevent the automatic storage tiering feature from failing to run as expected due to incorrect configurations, OSS automatically creates a lifecycle rule to convert the storage class of data in OSS-HDFS with a specific tag:

Objects in the .dlsdata/ directory that have a tag whose key is transition-storage-class and whose value is IA are converted to IA one day after they are last modified.

Objects in the .dlsdata/ directory that have a tag whose key is transition-storage-class and whose value is Archive are converted to Archive one day after they are last modified.

Objects in the .dlsdata/ directory that have a tag whose key is transition-storage-class and whose value is ColdArchive are converted to Cold Archive one day after they are last modified.

Objects in the .dlsdata/ directory that have a tag whose key is transition-storage-class and whose value is DeepColdArchive are converted to Deep Cold Archive one day after they are last modified.

Important: After the automatic storage tiering feature is enabled, OSS automatically creates a lifecycle rule to convert the storage class of objects to IA, Archive, Cold Archive, or Deep Cold Archive. Do not modify the lifecycle rule. Otherwise, data or OSS-HDFS exceptions may occur.

Click OK.

OSS-HDFS converts the storage class of objects based on the configured storage policy.

OSS loads a lifecycle rule within 24 hours after the lifecycle rule is created. After the lifecycle rule is loaded, OSS starts to execute the lifecycle rule at 08:00 (UTC+8) every day. The specific time varies based on the number of objects. The objects are converted to the specified storage class within at least 48 hours.

Related commands

| Syntax | Description |
| --- | --- |
|  | Specifies a storage policy for objects in a path. |
|  | Queries the storage policy of data in a specific path. |
|  | Deletes the storage policy of data in a specific path. |
|  | Queries the status of the conversion task for objects in a specific path based on the storage policy. Note: This command is only used to query the status of OSS-HDFS metadata conversion tasks. You cannot use this command to query the processing status of tasks that were submitted to OSS. |
|  | Temporarily restores Archive or Cold Archive objects in a specific path. |