This topic describes how to use Filebeat to collect Apache log data, filter the data with Alibaba Cloud Logstash, and then send it to an Elasticsearch instance for analysis.

Procedure

Step 1: Preparations

Create an Alibaba Cloud Elasticsearch instance and a Logstash instance. The instances must be of the same version and in the same virtual private cloud (VPC).

For more information, see Create an Alibaba Cloud Elasticsearch instance and Create an Alibaba Cloud Logstash instance.

Enable Auto Indexing for the Alibaba Cloud Elasticsearch instance.

The Auto Indexing feature is disabled by default in Alibaba Cloud Elasticsearch for security reasons. However, this feature is required by Beats. Therefore, you must enable auto indexing if you set Collector Output to Elasticsearch. For more information, see Configure YML parameters.

Create an Alibaba Cloud ECS instance in the same VPC as the Elasticsearch and Logstash instances.

For more information, see Create an instance using the wizard.

Important: Beats supports only Alibaba Cloud Linux, Red Hat, and CentOS operating systems.

Alibaba Cloud Filebeat can collect logs only from an ECS instance that is in the same region and VPC as the Alibaba Cloud Elasticsearch or Logstash instance. Log collection from the Internet is not supported.

Set up the httpd service on the ECS instance.

To simplify log analysis and visualization, you can define the Apache log format as JSON in the httpd.conf file. For more information, see Manually build a Magento 2 e-commerce website (Ubuntu). The following configuration is used as an example in this topic.

    LogFormat "{\"@timestamp\":\"%{%Y-%m-%dT%H:%M:%S%z}t\",\"client_ip\":\"%{X-Forwarded-For}i\",\"direct_ip\": \"%a\",\"request_time\":%T,\"status\":%>s,\"url\":\"%U%q\",\"method\":\"%m\",\"http_host\":\"%{Host}i\",\"server_ip\":\"%A\",\"http_referer\":\"%{Referer}i\",\"http_user_agent\":\"%{User-agent}i\",\"body_bytes_sent\":\"%B\",\"total_bytes_sent\":\"%O\"}" access_log_json
    # Comment out the original CustomLog and replace it with
    CustomLog "logs/access_log" access_log_json

Install Cloud Assistant Agent and Docker on the target ECS instance.

For more information, see Install Cloud Assistant Agent and Install Docker.
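To illustrate why the JSON log format configured for httpd in this step simplifies later analysis, the following minimal Python sketch decodes one access-log line into named fields. The sample line, its values, and the helper name `parse_access_line` are assumptions made for illustration; they are not output captured from a real server.

```python
import json

# Hypothetical access-log line in the shape that the JSON LogFormat would emit.
# All values are invented for illustration.
SAMPLE_LINE = (
    '{"@timestamp":"2024-01-01T12:00:00+0800","client_ip":"-",'
    '"direct_ip": "192.0.2.10","request_time":0,"status":200,'
    '"url":"/index.html","method":"GET","http_host":"example.com",'
    '"server_ip":"192.0.2.1","http_referer":"-",'
    '"http_user_agent":"curl/7.61.1","body_bytes_sent":"1024",'
    '"total_bytes_sent":"1300"}'
)


def parse_access_line(line):
    """Decode one JSON access-log line and convert the quoted byte counters to int."""
    record = json.loads(line)
    # body_bytes_sent and total_bytes_sent are quoted in the LogFormat,
    # so they arrive as strings and need an explicit conversion.
    for key in ("body_bytes_sent", "total_bytes_sent"):
        record[key] = int(record[key])
    return record


record = parse_access_line(SAMPLE_LINE)
print(record["status"], record["method"], record["url"], record["body_bytes_sent"])
```

Because `status` and `request_time` are unquoted in the LogFormat, they decode directly as numbers, whereas the two byte counters are quoted and decode as strings.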

Step 2: Configure and install a Filebeat collector

Log on to the Alibaba Cloud Elasticsearch console.

Navigate to the Beats Data Shippers page.

In the top navigation bar, select a region.

In the left-side navigation pane, click Beats Data Shippers.

Optional: If this is the first time you go to the Beats Data Shippers page, view the information displayed in the message that appears and click OK to authorize the system to create a service-linked role for your account.

Note: When Beats collects data from various data sources, it depends on the service-linked role and the rules specified for the role. Do not delete the service-linked role. Otherwise, Beats cannot work as expected. For more information, see Elasticsearch service-linked roles.

In the Create Collector section, click ECS Logs.

Configure and install the collector.

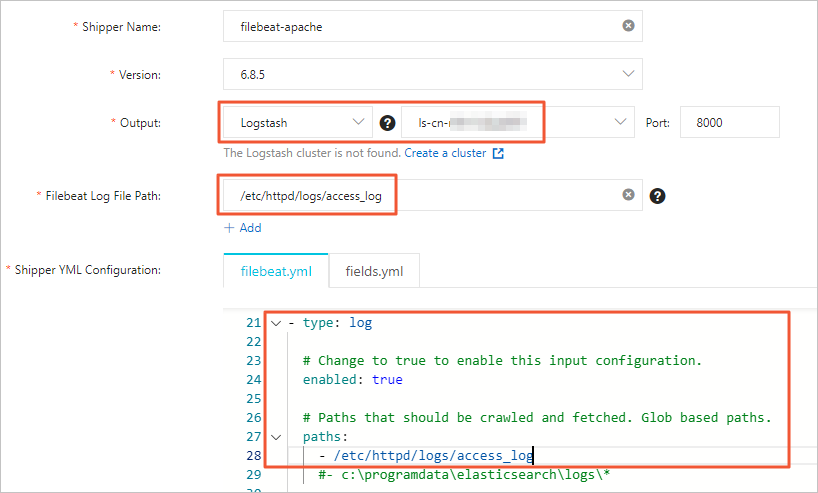

For more information, see Collect ECS service logs and Collector YML configuration. The following configuration is used as an example in this topic.

Note: For the collector Output, you must specify the instance ID of the target Alibaba Cloud Logstash instance. You do not need to specify the Output again in the YML configuration.

For the Filebeat file path, enter the directory of the data source. You must also enable log data collection and configure the log path in the YML configuration.

Click Next.

In the Collector Installation wizard, select the ECS instance where you want to install the collector.

Note: Select the ECS instance that you created and configured in Step 1.

Start the collector and check its installation status.

Click Start.

After the collector starts, the Start Successful dialog box is displayed.

Click Go To Collector Center to return to the Beats Data Collector page. In the Collector Management section, find the Filebeat collector that you started.

Wait until the Collector Status is Enabled 1/1. Then, in the Actions column, click View Running Instances.

On the View Running Instances page, check the Collector Installation Status. A Normal Heartbeat status indicates a successful installation.

Step 3: Configure a Logstash pipeline to filter and sync data

In the left navigation pane of the Alibaba Cloud Elasticsearch console, click Logstash Instances.

Click Pipeline Management in the Actions column of the target Logstash instance.

On the Pipeline Management page, click Create Pipeline.

Configure the pipeline.

Configure the pipeline based on the following example. For more information about how to configure a pipeline, see Manage pipelines using configuration files.

    input {
      beats {
        port => 8000
      }
    }
    filter {
      json {
        source => "message"
        remove_field => "@version"
        remove_field => "prospector"
        remove_field => "beat"
        remove_field => "source"
        remove_field => "input"
        remove_field => "offset"
        remove_field => "fields"
        remove_field => "host"
        remove_field => "message"
      }
    }
    output {
      elasticsearch {
        hosts => ["http://es-cn-mp91cbxsm00******.elasticsearch.aliyuncs.com:9200"]
        user => "elastic"
        password => "<YOUR_PASSWORD>"
        index => "<YOUR_INDEX>"
      }
    }

Parameter

Description

input

Receives data collected by Beats.

filter

Filters the collected data. The JSON plug-in is used to decode the message data. The remove_field parameter is used to delete specified fields.

Note: The filter configuration in this topic applies only to this test scenario and may not be suitable for all business scenarios. Modify the filter configuration as needed. For more information about the supported filter plug-ins and their usage, see filter plugin.

output

Transfers data to your Alibaba Cloud Elasticsearch instance. The parameters are described as follows:

hosts: Replace this with the endpoint of your Alibaba Cloud Elasticsearch instance. You can obtain the endpoint on the basic information page of the instance. For more information, see View the basic information of an instance.

<YOUR_PASSWORD>: Replace this with the password for your Alibaba Cloud Elasticsearch instance.

<YOUR_INDEX>: Replace this with the name of your index.
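The effect of the filter stage described above can be approximated in plain Python. This is a simplified sketch rather than Logstash itself: it assumes the usual behavior of the JSON plug-in when no target is set (decoded keys are placed at the top level of the event, `remove_field` is applied only after a successful decode, and events that fail to decode are tagged `_jsonparsefailure`), and it ignores the plug-in's special handling of `@timestamp`. The function name `apply_json_filter` and the sample events are hypothetical.

```python
import json

# Fields that the pipeline example removes after a successful decode.
REMOVE_FIELDS = [
    "@version", "prospector", "beat", "source", "input",
    "offset", "fields", "host", "message",
]


def apply_json_filter(event):
    """Approximate the json filter: decode `message`, merge it, drop metadata fields."""
    result = dict(event)
    try:
        parsed = json.loads(result["message"])
        if not isinstance(parsed, dict):
            raise ValueError("decoded JSON is not an object")
    except (KeyError, TypeError, ValueError):
        # Assumed failure behavior: keep the event unchanged and tag it.
        result["tags"] = list(result.get("tags", [])) + ["_jsonparsefailure"]
        return result
    # Decoded keys are merged into the top level of the event.
    result.update(parsed)
    for name in REMOVE_FIELDS:
        result.pop(name, None)
    return result


# Hypothetical event as Filebeat might deliver it to the beats input.
event = {
    "message": '{"status":200,"url":"/index.html","method":"GET"}',
    "@version": "1",
    "offset": 128,
    "host": {"name": "ecs-demo"},
    "source": "/var/log/httpd/access_log",
}
print(apply_json_filter(event))
print(apply_json_filter({"message": "not json", "offset": 0}))
```

In this sketch, a line that is not valid JSON keeps its `message` and metadata fields, which makes such events easy to find in the index by their tag.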

Step 4: View the data collection results

Log on to the Kibana console of your Elasticsearch cluster and go to the homepage of the Kibana console as prompted.

For more information about how to log on to the Kibana console, see Log on to the Kibana console.

Note: In this example, an Elasticsearch V6.7.0 cluster is used. Operations on clusters of other versions may differ. The actual operations in the console prevail.

In the left-side navigation pane of the page that appears, click Dev Tools.

In the Console, you can run the following command to view the collected data.

    GET <YOUR_INDEX>/_search

Note: Replace <YOUR_INDEX> with the index name that you specified in the output section of the Logstash pipeline configuration.

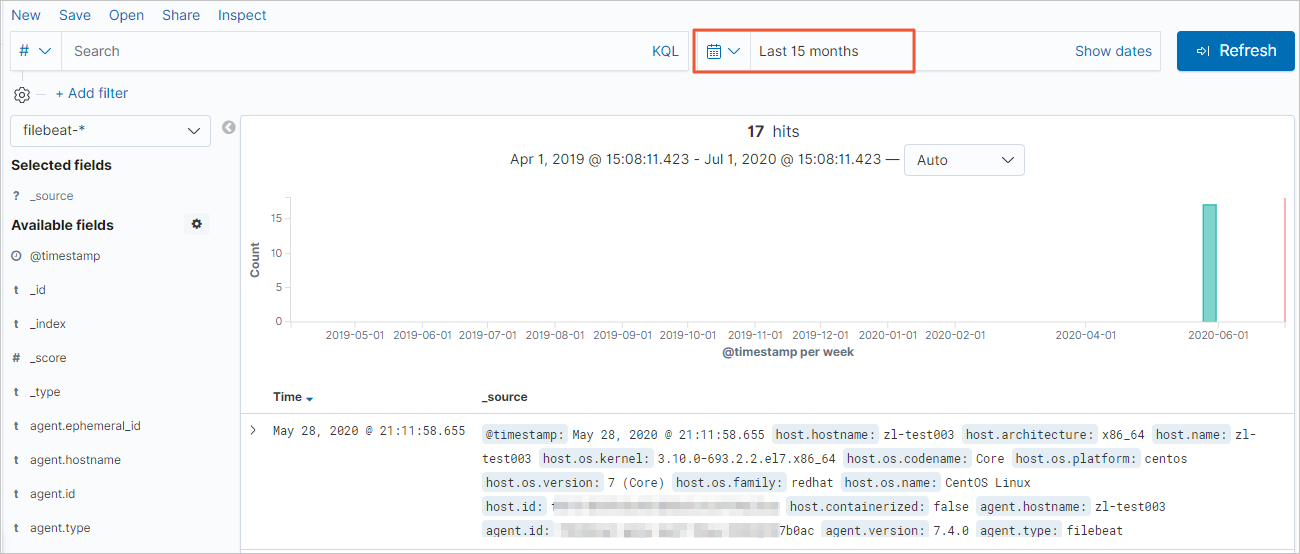
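The same search can also be issued outside Kibana as a plain HTTP request. The following Python sketch only builds the request. It assumes HTTP basic authentication with the `elastic` user on port 9200, as in the pipeline output configuration, and a client that runs in the same VPC as the Elasticsearch instance. The endpoint, index name, password, and function names are placeholders invented for illustration.

```python
import base64
import json
import urllib.request


def build_search_request(endpoint, index, user, password, size=5):
    """Build a GET <index>/_search request with a basic-auth header."""
    url = f"{endpoint.rstrip('/')}/{index}/_search?size={size}"
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    request = urllib.request.Request(url, method="GET")
    request.add_header("Authorization", f"Basic {token}")
    return request


def run_search(request, timeout=10):
    """Send the request and return the decoded JSON response (requires network access)."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)


# Placeholder values; replace them with your own endpoint, index, and password.
request = build_search_request(
    "http://es.example.internal:9200", "apache-access", "elastic", "changeme"
)
print(request.get_method(), request.full_url)
```

Calling `run_search(request)` from a host that can reach the instance would return the same JSON document that the Kibana console displays.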

In the left navigation pane, click Discover. Select a time range to view the details of the collected data.

Note: Before you run the query, make sure that you have created an index pattern for <YOUR_INDEX>. If you have not, click Management in the Kibana console. In the Kibana section, click Index Patterns and follow the on-screen instructions.