This topic describes how to upgrade the EMR-HOOK component in an E-MapReduce (EMR) gateway.

Background information

Scenario: If you deploy a gateway by using EMR-CLI and run computing jobs on that gateway, and you use the access frequency metrics displayed on the Data Overview tab and the data access frequency rules on the Lifecycle Management page of the Data Lake Formation (DLF) console, you must manually upgrade the EMR-HOOK component on the gateway.

Running computing tasks are not affected during the upgrade. Tasks that are submitted after the upgrade automatically use the upgraded component.

Limits

The EMR cluster must use DLF to manage metadata.

The operations described in this topic take effect only for gateways that are deployed by using EMR-CLI.

Upgrade procedure

EMR-5.10.1 or later, and EMR-3.44.1 or later

Step 1: Upgrade the JAR package

Log on to the gateway by using SSH and execute the following script. You must have root permissions. Note that you must replace ${region} with the current region ID, such as cn-hangzhou.
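The download URL used in this step contains the region ID twice, so it can help to build it from a single value instead of editing the URL by hand. The following is a minimal sketch, not part of the official procedure; the function name `emrhook_url` is hypothetical, and the URL pattern is copied from the script in this step.

```shell
# Hypothetical helper: build the EMR-HOOK package URL for a given region ID.
emrhook_url() {
  local region="${1:-}"
  # Reject an empty region so that a malformed URL is never produced.
  if [ -z "$region" ]; then
    echo "usage: emrhook_url <region-id>" >&2
    return 1
  fi
  printf 'https://dlf-repo-%s.oss-%s-internal.aliyuncs.com/emrhook/latest/emrhook.tar.gz\n' \
    "$region" "$region"
}

# Print the URL for the example region used in this topic.
emrhook_url cn-hangzhou
```

The printed URL can then be passed to wget in place of the hand-edited one.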

    sudo mkdir -p /opt/apps/EMRHOOK/upgrade/
    sudo wget https://dlf-repo-${region}.oss-${region}-internal.aliyuncs.com/emrhook/latest/emrhook.tar.gz -P /opt/apps/EMRHOOK/upgrade
    sudo tar -p -zxf /opt/apps/EMRHOOK/upgrade/emrhook.tar.gz -C /opt/apps/EMRHOOK/upgrade/
    sudo cp -p /opt/apps/EMRHOOK/upgrade/emrhook/* /opt/apps/EMRHOOK/emrhook-current/

Step 2: Modify Hive configurations

${hive-jar} varies depending on the Hive version. For Hive 2, set ${hive-jar} to hive-hook-hive23.jar. For Hive 3, set ${hive-jar} to hive-hook-hive31.jar.
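The mapping above can be sketched as a small lookup. This is an illustration only: `hive_hook_jar` is a hypothetical helper name, and the JAR names are the ones listed in this step.

```shell
# Hypothetical helper: map a Hive major version to the hook JAR named in this step.
hive_hook_jar() {
  case "${1:-}" in
    2) echo "hive-hook-hive23.jar" ;;
    3) echo "hive-hook-hive31.jar" ;;
    *) echo "unsupported Hive major version: ${1:-}" >&2; return 1 ;;
  esac
}

# Example: resolve the JAR name for Hive 3.
hive_jar="$(hive_hook_jar 3)"
echo "$hive_jar"
```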

| Configuration file | Configuration item | Configuration value |
| --- | --- | --- |
| hive-site.xml (/etc/taihao-apps/hive-conf/hive-site.xml) | | Append. Note: The separator is a comma (,). |
| | | Add |
| hive-env.sh (/etc/taihao-apps/hive-conf/hive-env.sh) | | Append. Note: The separator is a comma (,). |
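Appending to a separator-delimited value by hand can introduce duplicate entries, or a stray leading separator when the existing value is empty. The following is a minimal sketch of a hypothetical helper, `append_unique`, that takes the existing value, the entry to append, and the separator. The example entry assumes that the value to append is the hook JAR under /opt/apps/EMRHOOK/emrhook-current/, which this topic does not state explicitly, and the existing value is made up.

```shell
# Hypothetical helper: append ITEM to LIST (joined by SEP) unless it is already present.
append_unique() {
  local list="$1" item="$2" sep="$3"
  # An empty list becomes just the item, with no leading separator.
  if [ -z "$list" ]; then
    printf '%s\n' "$item"
    return 0
  fi
  # Wrap both sides in separators so only whole entries match.
  case "${sep}${list}${sep}" in
    *"${sep}${item}${sep}"*) printf '%s\n' "$list" ;;
    *) printf '%s%s%s\n' "$list" "$sep" "$item" ;;
  esac
}

# Example with a made-up existing value and an assumed hook JAR path.
append_unique "/path/to/existing.jar" \
  "/opt/apps/EMRHOOK/emrhook-current/hive-hook-hive23.jar" ","
```

The same helper works for colon-separated values by passing ":" as the third argument.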

Step 3: Modify Spark configurations

${spark-jar} varies depending on the Spark version. For Spark 2, set ${spark-jar} to spark-hook-spark24.jar. For Spark 3, set ${spark-jar} to spark-hook-spark30.jar.
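If only a full Spark version string such as 3.3.1 is at hand, the JAR can be chosen from its major version. The following is a sketch under that assumption; `spark_hook_jar` is a hypothetical helper name, and the JAR names are the ones listed in this step.

```shell
# Hypothetical helper: pick the hook JAR from a Spark version string such as "3.3.1".
spark_hook_jar() {
  local version="${1:-}"
  local major="${version%%.*}"   # keep everything before the first dot
  case "$major" in
    2) echo "spark-hook-spark24.jar" ;;
    3) echo "spark-hook-spark30.jar" ;;
    *) echo "unsupported Spark version: $version" >&2; return 1 ;;
  esac
}

# Example: resolve the JAR name for a Spark 2 release.
spark_jar="$(spark_hook_jar 2.4.8)"
echo "$spark_jar"
```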

| Configuration file | Configuration item | Configuration value |
| --- | --- | --- |
| spark-defaults.conf (/etc/taihao-apps/spark-conf/spark-defaults.conf) | | Append. Note: The separator is a colon (:). |
| | | Append. Note: The separator is a colon (:). |
| | | Add |

Versions earlier than EMR-5.10.1 or EMR-3.44.1

Step 1: Upgrade the JAR package

Log on to the gateway by using SSH and execute the following script. You must have root permissions. Replace ${region} with the current region ID, such as cn-hangzhou.

The following script downloads and decompresses the latest EMR-HOOK JAR package. After the package is decompressed, proceed with the subsequent steps to rename and copy the decompressed JAR packages.

    sudo mkdir -p /opt/apps/EMRHOOK/upgrade/
    sudo wget https://dlf-repo-${region}.oss-${region}-internal.aliyuncs.com/emrhook/latest/emrhook.tar.gz -P /opt/apps/EMRHOOK/upgrade
    sudo tar -p -zxf /opt/apps/EMRHOOK/upgrade/emrhook.tar.gz -C /opt/apps/EMRHOOK/upgrade/

Rename the decompressed JAR packages. The minor version of the EMR-HOOK component varies based on the EMR version. Therefore, before you copy the new JAR packages over the original ones, you must manually rename each JAR package so that its name contains the current EMR-HOOK version.

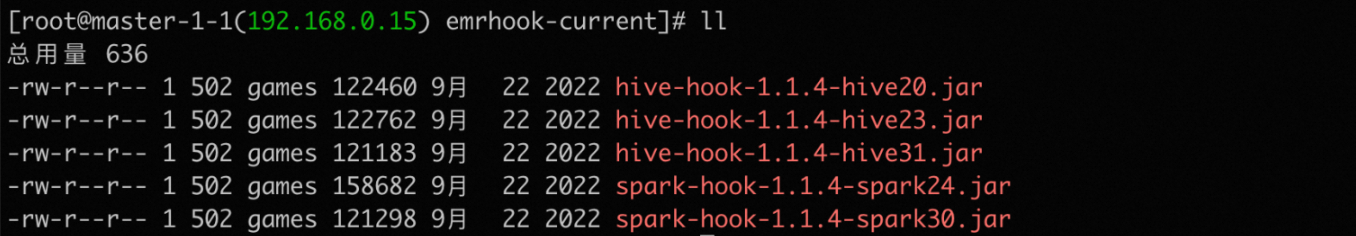

For example, in EMR-3.43.1, the minor version of the EMR-HOOK component is 1.1.4, and the naming convention of JAR packages is hive-hook-${version}-hive20.jar. In this case, you need to replace ${version} with the version of the current EMR-HOOK component.

    cd /opt/apps/EMRHOOK/upgrade/emrhook
    mv hive-hook-hive20.jar hive-hook-1.1.4-hive20.jar
    mv hive-hook-hive23.jar hive-hook-1.1.4-hive23.jar
    mv hive-hook-hive31.jar hive-hook-1.1.4-hive31.jar
    mv spark-hook-spark24.jar spark-hook-1.1.4-spark24.jar
    mv spark-hook-spark30.jar spark-hook-1.1.4-spark30.jar
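The renaming above can also be written as a loop so that the version appears only once. The following is a sketch, not part of the official procedure: `rename_hook_jars` is a hypothetical helper, and the demo runs against a temporary directory with empty placeholder files instead of the real upgrade directory.

```shell
# Hypothetical helper: insert VERSION after the "hive-hook" or "spark-hook"
# prefix of every matching JAR in DIR.
rename_hook_jars() {
  local dir="$1" version="$2" jar base prefix suffix
  for jar in "$dir"/hive-hook-*.jar "$dir"/spark-hook-*.jar; do
    [ -e "$jar" ] || continue     # the glob matched nothing
    base="${jar##*/}"             # for example hive-hook-hive23.jar
    prefix="${base%-*}"           # hive-hook
    suffix="${base##*-}"          # hive23.jar
    case "$prefix" in
      # Names whose prefix already carries a version do not match and are skipped.
      hive-hook|spark-hook) mv "$jar" "$dir/$prefix-$version-$suffix" ;;
    esac
  done
}

# Demo on placeholder files in a temporary directory.
demo_dir="$(mktemp -d)"
touch "$demo_dir/hive-hook-hive23.jar" "$demo_dir/spark-hook-spark30.jar"
rename_hook_jars "$demo_dir" 1.1.4
ls "$demo_dir"
```

Against the real directory, the call would be rename_hook_jars /opt/apps/EMRHOOK/upgrade/emrhook 1.1.4, run with sufficient permissions to rename the files.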

After you rename the JAR packages, run the following command to copy them to the EMR-HOOK directory:

sudo cp -p /opt/apps/EMRHOOK/upgrade/emrhook/* /opt/apps/EMRHOOK/emrhook-current/

Step 2: Modify Hive configurations

${hive-jar} varies depending on the Hive version. For Hive 2, set ${hive-jar} to hive-hook-${emrhook-version}-hive23.jar. For Hive 3, set ${hive-jar} to hive-hook-${emrhook-version}-hive31.jar. Set ${emrhook-version} to the version of the EMR-HOOK component. For example, if the version is 1.1.4, the JAR package for Hive 2 is hive-hook-1.1.4-hive23.jar.

| Configuration file | Configuration item | Configuration value |
| --- | --- | --- |
| hive-site.xml (/etc/taihao-apps/hive-conf/hive-site.xml) | | Append. Note: The separator is a comma (,). |
| | | Add |
| hive-env.sh (/etc/taihao-apps/hive-conf/hive-env.sh) | | Append. Note: The separator is a comma (,). |

Step 3: Modify Spark configurations

${spark-jar} varies depending on the Spark version. For Spark 2, set ${spark-jar} to spark-hook-${emrhook-version}-spark24.jar. For Spark 3, set ${spark-jar} to spark-hook-${emrhook-version}-spark30.jar. Set ${emrhook-version} to the version of the EMR-HOOK component. For example, if the version is 1.1.4, the JAR package for Spark 2 is spark-hook-1.1.4-spark24.jar.
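The versioned names for ${hive-jar} and ${spark-jar} follow the same pattern, which can be sketched as one function. `hook_jar_name` is a hypothetical helper, and 1.1.4 is the example version used in this topic; substitute the EMR-HOOK version of your gateway.

```shell
# Hypothetical helper: build a versioned hook JAR name.
#   $1 = engine  (hive or spark)
#   $2 = variant (for example hive23, hive31, spark24, spark30)
#   $3 = EMR-HOOK version (for example 1.1.4)
hook_jar_name() {
  local engine="$1" variant="$2" version="$3"
  printf '%s-hook-%s-%s.jar\n' "$engine" "$version" "$variant"
}

# Example values for Hive 2 and Spark 2 with EMR-HOOK 1.1.4.
hive_jar="$(hook_jar_name hive hive23 1.1.4)"
spark_jar="$(hook_jar_name spark spark24 1.1.4)"
echo "$hive_jar"
echo "$spark_jar"
```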

| Configuration file | Configuration item | Configuration value |
| --- | --- | --- |
| spark-defaults.conf (/etc/taihao-apps/spark-conf/spark-defaults.conf) | | Append. Note: The separator is a colon (:). |
| | | Append. Note: The separator is a colon (:). |
| | | Add |