Pointblank takes a different approach to data quality. It doesn't have to be a tedious technical task. Rather, it can become a process focused on clear communication between team members. While other validation libraries focus solely on catching errors, Pointblank is great at both finding issues and sharing insights. Our beautiful, customizable reports turn validation results into conversations with stakeholders, making data quality issues immediately understandable and actionable for everyone on your team.

Get started in minutes, not hours. Pointblank's AI-powered DraftValidation feature analyzes your data and suggests intelligent validation rules automatically. So there's no need to stare at an empty validation script wondering where to begin. Pointblank can kickstart your data quality journey so you can focus on what matters most.

Whether you're a data scientist who needs to quickly communicate data quality findings, a data engineer building robust pipelines, or an analyst presenting data quality results to business stakeholders, Pointblank helps you turn data quality from an afterthought into a competitive advantage.

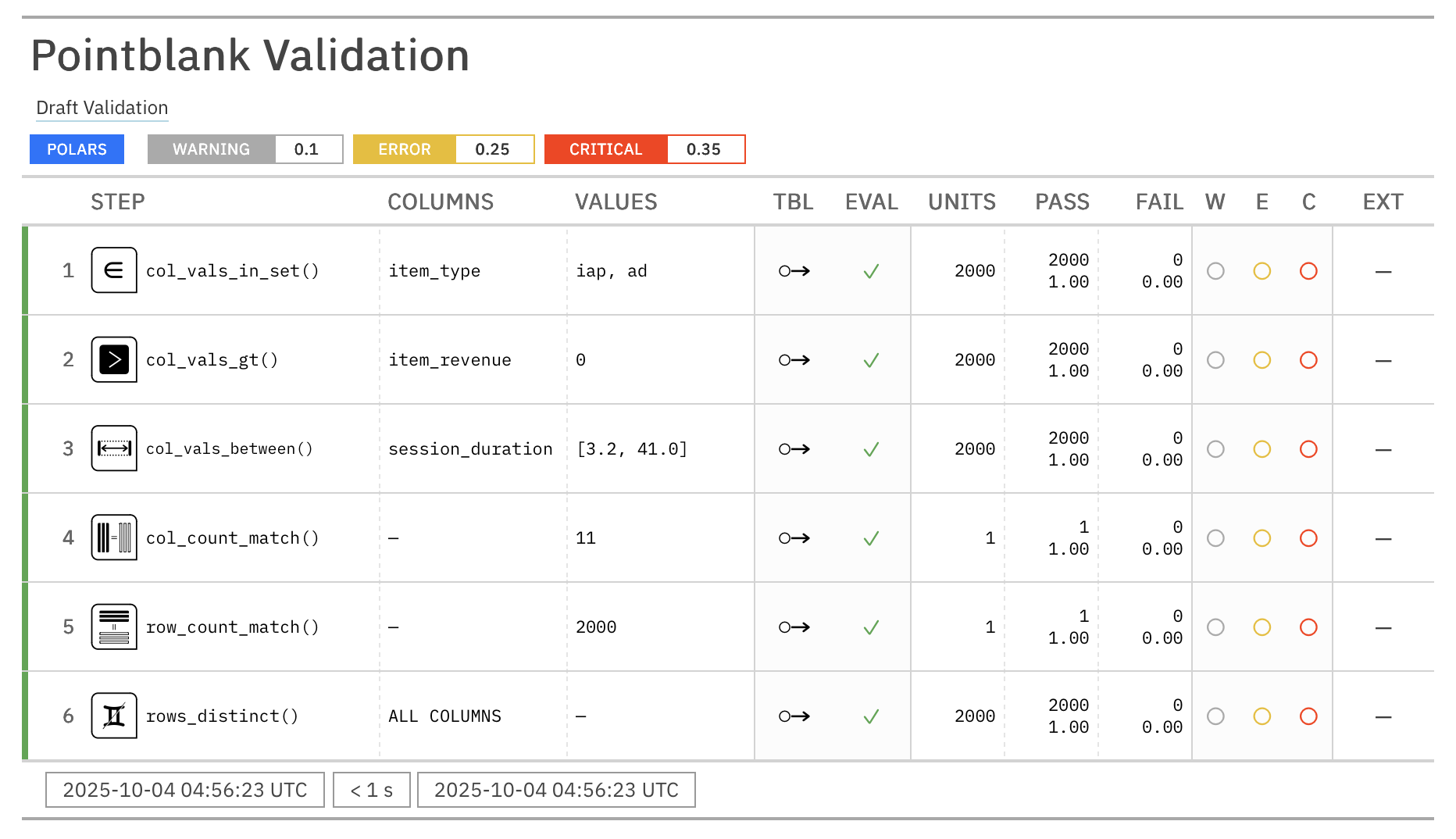

The DraftValidation class uses LLMs to analyze your data and generate a complete validation plan with intelligent suggestions. This helps you quickly get started with data validation or jumpstart a new project.

```python
import pointblank as pb

# Load your data
data = pb.load_dataset("game_revenue")  # A sample dataset

# Use DraftValidation to generate a validation plan
pb.DraftValidation(data=data, model="anthropic:claude-sonnet-4-5")
```

The output is a complete validation plan with intelligent suggestions based on your data:

```python
import pointblank as pb

# The validation plan
validation = (
    pb.Validate(
        data=data,
        label="Draft Validation",
        thresholds=pb.Thresholds(warning=0.10, error=0.25, critical=0.35)
    )
    .col_vals_in_set(columns="item_type", set=["iap", "ad"])
    .col_vals_gt(columns="item_revenue", value=0)
    .col_vals_between(columns="session_duration", left=3.2, right=41.0)
    .col_count_match(count=11)
    .row_count_match(count=2000)
    .rows_distinct()
    .interrogate()
)

validation
```

Copy, paste, and customize the generated validation plan for your needs.

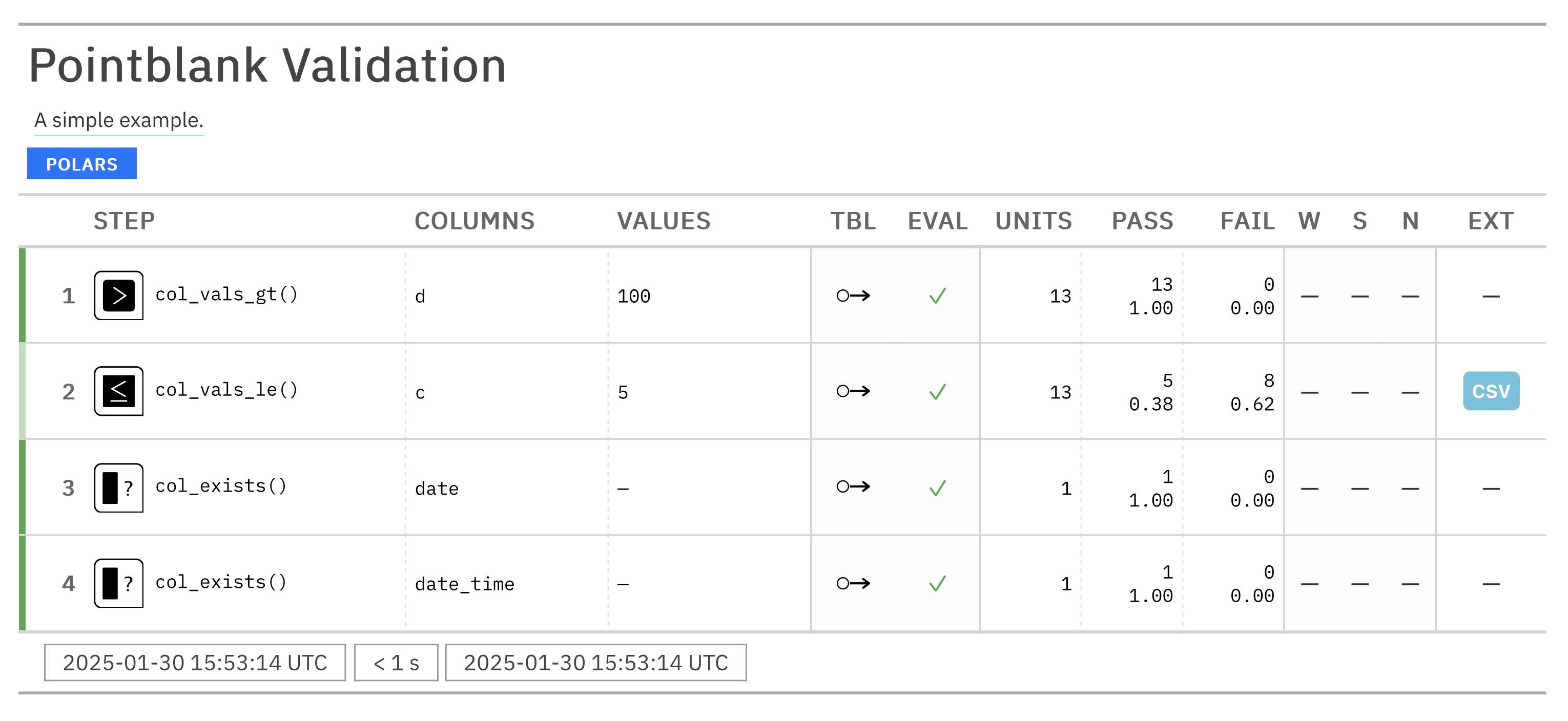

Pointblank's chainable API makes validation simple and readable. The same pattern always applies: (1) start with Validate, (2) add validation steps, and (3) finish with interrogate().

```python
import pointblank as pb

validation = (
    pb.Validate(data=pb.load_dataset(dataset="small_table"))
    .col_vals_gt(columns="d", value=100)        # Validate values > 100
    .col_vals_le(columns="c", value=5)          # Validate values <= 5
    .col_exists(columns=["date", "date_time"])  # Check columns exist
    .interrogate()                              # Execute and collect results
)

# Get the validation report from the REPL with:
validation.get_tabular_report().show()

# From a notebook simply use:
validation
```

Once you have an interrogated validation object, you can leverage a variety of methods to extract insights like:

- getting detailed reports for single steps to see what went wrong

- filtering tables based on validation results

- extracting problematic data for debugging

- Works with your existing stack: Seamlessly integrates with Polars, Pandas, DuckDB, MySQL, PostgreSQL, SQLite, Parquet, PySpark, Snowflake, and more!

- Beautiful, interactive reports: Crystal-clear validation results that highlight issues and help communicate data quality

- Composable validation pipeline: Chain validation steps into a complete data quality workflow

- Threshold-based alerts: Set 'warning', 'error', and 'critical' thresholds with custom actions

- Practical outputs: Use validation results to filter tables, extract problematic data, or trigger downstream processes
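To make the idea of threshold-based alerts concrete, here is a small standard-library sketch of how a failure fraction could be mapped to a 'warning', 'error', or 'critical' level. It is an illustration of the concept only, not Pointblank's implementation: the names `ThresholdSketch` and `highest_level` are hypothetical, the sketch handles only proportional thresholds, and it assumes a level is reached when the failing fraction is at or above its threshold.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ThresholdSketch:
    """Hypothetical stand-in for a set of proportional thresholds."""
    warning: Optional[float] = None
    error: Optional[float] = None
    critical: Optional[float] = None


def highest_level(n_failed: int, n_total: int, t: ThresholdSketch) -> Optional[str]:
    """Return the most severe level reached, or None if no threshold is met."""
    if n_total <= 0:
        return None
    fraction = n_failed / n_total
    # Check from most to least severe so the first match is the highest level
    for level in ("critical", "error", "warning"):
        limit = getattr(t, level)
        if limit is not None and fraction >= limit:  # assumed "at or above"
            return level
    return None


thresholds = ThresholdSketch(warning=0.10, error=0.25, critical=0.35)
print(highest_level(150, 2000, thresholds))  # 7.5% failing -> None
print(highest_level(600, 2000, thresholds))  # 30% failing -> error
```

A custom action would then be attached to whichever level is returned, which is the role the `actions=` argument plays in a real validation.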

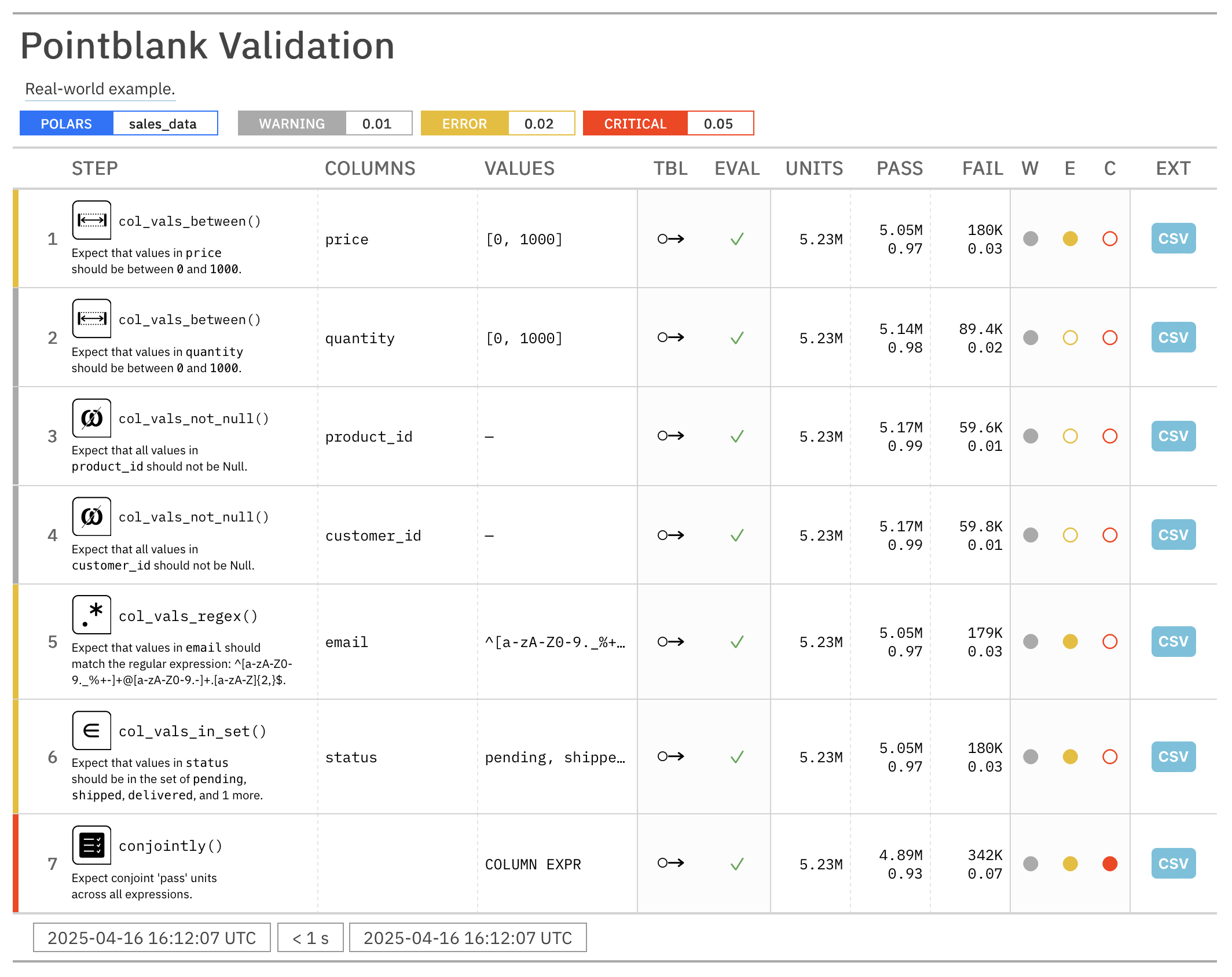

Here's how Pointblank handles complex, real-world scenarios with advanced features like threshold management, automated alerts, and comprehensive business rule validation:

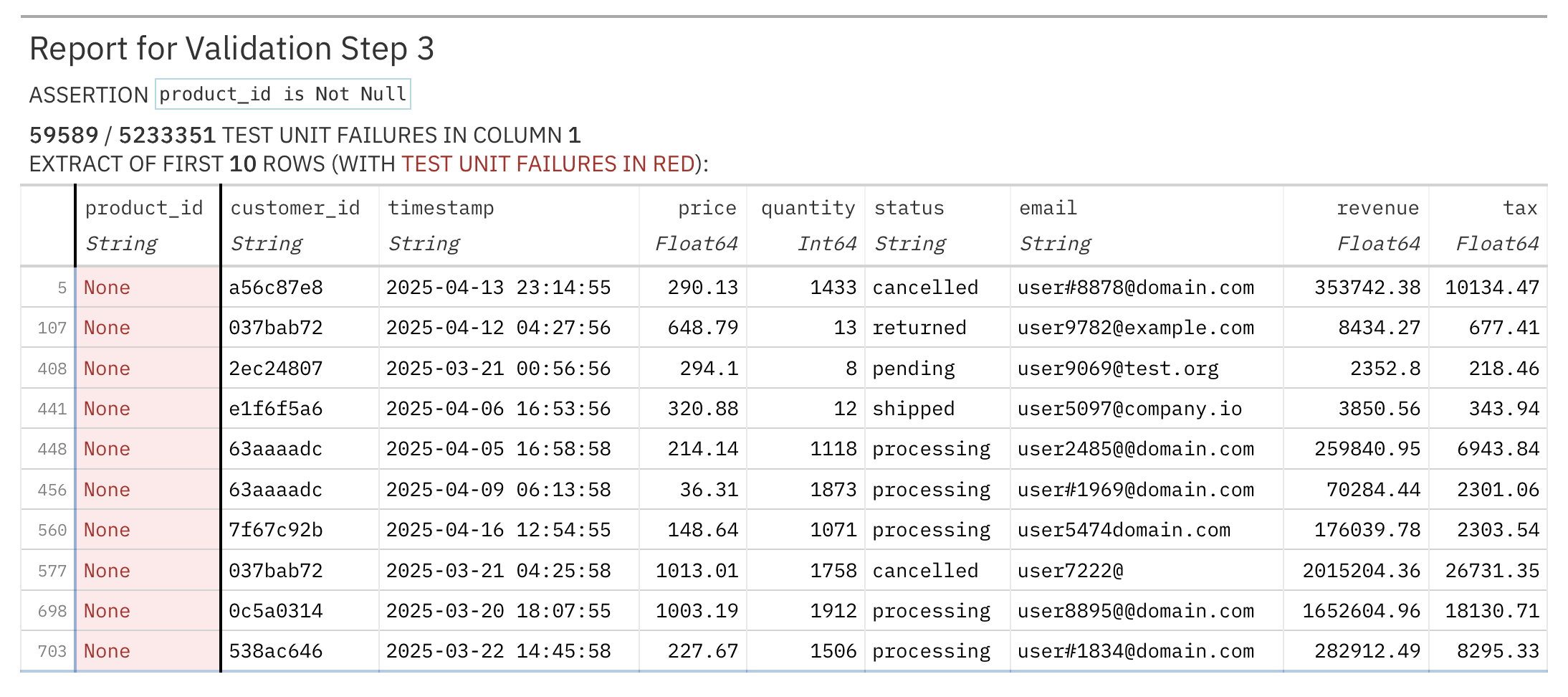

```python
import pointblank as pb
import polars as pl

# Load your data
sales_data = pl.read_csv("sales_data.csv")

# Create a comprehensive validation
validation = (
    pb.Validate(
        data=sales_data,
        tbl_name="sales_data",           # Name of the table for reporting
        label="Real-world example.",     # Label for the validation, appears in reports
        thresholds=(0.01, 0.02, 0.05),   # Set thresholds for warnings, errors, and critical issues
        actions=pb.Actions(              # Define actions for any threshold exceedance
            critical="Major data quality issue found in step {step} ({time})."
        ),
        final_actions=pb.FinalActions(   # Define final actions for the entire validation
            pb.send_slack_notification(
                webhook_url="https://hooks.slack.com/services/your/webhook/url"
            )
        ),
        brief=True,                      # Add automatically-generated briefs for each step
    )
    .col_vals_between(            # Check numeric ranges with precision
        columns=["price", "quantity"],
        left=0, right=1000
    )
    .col_vals_not_null(           # Ensure that columns ending with '_id' don't have null values
        columns=pb.ends_with("_id")
    )
    .col_vals_regex(              # Validate patterns with regex
        columns="email",
        pattern="^[a-zA-Z0-9._%+-]+@[a-zA-Z0-9.-]+\\.[a-zA-Z]{2,}$"
    )
    .col_vals_in_set(             # Check categorical values
        columns="status",
        set=["pending", "shipped", "delivered", "returned"]
    )
    .conjointly(                  # Combine multiple conditions
        lambda df: pb.expr_col("revenue") == pb.expr_col("price") * pb.expr_col("quantity"),
        lambda df: pb.expr_col("tax") >= pb.expr_col("revenue") * 0.05
    )
    .interrogate()
)
```

```
Major data quality issue found in step 7 (2025-04-16 15:03:04.685612+00:00).
```

```python
# Get an HTML report you can share with your team
validation.get_tabular_report().show("browser")
```

```python
# Get a report of failing records from a specific step
validation.get_step_report(i=3).show("browser")  # Get failing records from step 3
```

For teams that need portable, version-controlled validation workflows, Pointblank supports YAML configuration files. This makes it easy to share validation logic across different environments and team members, ensuring everyone is on the same page.

validation.yaml

```yaml
validate:
  data: small_table
  tbl_name: "small_table"
  label: "Getting started validation"

steps:
  - col_vals_gt:
      columns: "d"
      value: 100
  - col_vals_le:
      columns: "c"
      value: 5
  - col_exists:
      columns: ["date", "date_time"]
```

Execute the YAML validation

```python
import pointblank as pb

# Run validation from YAML configuration
validation = pb.yaml_interrogate("validation.yaml")

# Get the results just like any other validation
validation.get_tabular_report().show()
```

This approach is suitable for:

- CI/CD pipelines: Store validation rules alongside your code

- Team collaboration: Share validation logic in a readable format

- Environment consistency: Use the same validation across dev, staging, and production

- Documentation: YAML files serve as living documentation of your data quality requirements

Pointblank includes a powerful CLI utility called pb that lets you run data validation workflows directly from the command line. Perfect for CI/CD pipelines, scheduled data quality checks, or quick validation tasks.

Explore Your Data

```bash
# Get a quick preview of your data
pb preview small_table

# Preview data from GitHub URLs
pb preview "https://github.com/user/repo/blob/main/data.csv"

# Check for missing values in Parquet files
pb missing data.parquet

# Generate column summaries from database connections
pb scan "duckdb:///data/sales.ddb::customers"
```

Run Essential Validations

```bash
# Run validation from YAML configuration file
pb run validation.yaml

# Run validation from Python file
pb run validation.py

# Check for duplicate rows
pb validate small_table --check rows-distinct

# Validate data directly from GitHub
pb validate "https://github.com/user/repo/blob/main/sales.csv" --check col-vals-not-null --column customer_id

# Verify no null values in Parquet datasets
pb validate "data/*.parquet" --check col-vals-not-null --column a

# Extract failing data for debugging
pb validate small_table --check col-vals-gt --column a --value 5 --show-extract
```

Integrate with CI/CD

```bash
# Use exit codes for automation in one-liner validations (0 = pass, 1 = fail)
pb validate small_table --check rows-distinct --exit-code

# Run validation workflows with exit codes
pb run validation.yaml --exit-code
pb run validation.py --exit-code
```
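If your pipeline is orchestrated from Python rather than a shell script, the same exit-code convention (0 = pass, non-zero = fail) can be consumed with the standard library. The sketch below is only an illustration: `run_gate` is a hypothetical helper, and stand-in commands are used so the example runs without Pointblank installed.

```python
import subprocess
import sys
from typing import Sequence


def run_gate(command: Sequence[str]) -> bool:
    """Run a command and report whether it exited with status 0."""
    completed = subprocess.run(command, check=False)
    return completed.returncode == 0


# Stand-in commands that simply exit with a fixed status. In a real pipeline
# the command would be something like:
#   ["pb", "run", "validation.yaml", "--exit-code"]
passing = [sys.executable, "-c", "raise SystemExit(0)"]
failing = [sys.executable, "-c", "raise SystemExit(1)"]

print(run_gate(passing))  # True
print(run_gate(failing))  # False
```

A CI job could call `sys.exit(0 if run_gate(...) else 1)` to propagate the validation result to the surrounding workflow.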

- Complete validation workflow: From data access to validation to reporting in a single pipeline

- Built for collaboration: Share results with colleagues through beautiful interactive reports

- Practical outputs: Get exactly what you need: counts, extracts, summaries, or full reports

- Flexible deployment: Use in notebooks, scripts, or data pipelines

- Customizable: Tailor validation steps and reporting to your specific needs

- Internationalization: Reports can be generated in 40 languages, including English, Spanish, French, and German

Visit our documentation site to learn more.

We'd love to hear from you! Connect with us:

- GitHub Issues for bug reports and feature requests

- Discord server for discussions and help

- Contributing guidelines if you'd like to help improve Pointblank

You can install Pointblank using pip:

```bash
pip install pointblank
```

You can also install Pointblank from Conda-Forge by using:

```bash
conda install conda-forge::pointblank
```

If you don't have Polars or Pandas installed, you'll need to install one of them to use Pointblank.

```bash
pip install "pointblank[pl]" # Install Pointblank with Polars
pip install "pointblank[pd]" # Install Pointblank with Pandas
```

To use Pointblank with DuckDB, MySQL, PostgreSQL, or SQLite, install Ibis with the appropriate backend:

```bash
pip install "pointblank[duckdb]"   # Install Pointblank with Ibis + DuckDB
pip install "pointblank[mysql]"    # Install Pointblank with Ibis + MySQL
pip install "pointblank[postgres]" # Install Pointblank with Ibis + PostgreSQL
pip install "pointblank[sqlite]"   # Install Pointblank with Ibis + SQLite
```

Pointblank uses Narwhals to work with Polars and Pandas DataFrames, and integrates with Ibis for database and file format support. This architecture provides a consistent API for validating tabular data from various sources.

There are many ways to contribute to the ongoing development of Pointblank. Some contributions can be simple (like fixing typos, improving documentation, filing issues for feature requests or problems, etc.) and others might take more time and care (like answering questions and submitting PRs with code changes). Just know that anything you can do to help would be very much appreciated!

Please read over the contributing guidelines for information on how to get started.

There's also a version of Pointblank for R, which has been around since 2017 and is widely used in the R community. You can find it at https://github.com/rstudio/pointblank.

We're actively working on enhancing Pointblank with:

- Additional validation methods for comprehensive data quality checks

- Advanced logging capabilities

- Messaging actions (Slack, email) for threshold exceedances

- LLM-powered validation suggestions and data dictionary generation

- JSON/YAML configuration for pipeline portability

- CLI utility for validation from the command line

- Expanded backend support and certification

- High-quality documentation and examples

If you have any ideas for features or improvements, don't hesitate to share them with us! We are always looking for ways to make Pointblank better.

Please note that the Pointblank project is released with a contributor code of conduct.

By participating in this project you agree to abide by its terms.

Pointblank is licensed under the MIT license.

© Posit Software, PBC.

This project is primarily maintained by Rich Iannone. Other authors may occasionally assist with some of these duties.