Add Web Chat UI for any agent that can be launched using clai web or Agent.to_web() #3456

| | ||

| self._get_toolset().apply(_set_sampling_model) | ||

| | ||

| def to_web(self) -> Any: |

We're gonna need some args here -- have a look at the to_a2a and to_ag_ui methods. Not saying we need all of those args, but some may be useful

I just pushed an update that removes the AST aspect and (hopefully) fixes the tests so they pass in CI. I haven't addressed the comments yet, so it isn't reviewable yet.

| | ||

| @app.get('/') | ||

| @app.get('/{id}') | ||

| async def index(request: Request, version: str | None = Query(None)): # pyright: ignore[reportUnusedFunction] |

I'm not sure I understand the need for a version arg. An older version than the default is worse, and a newer version may not work with the API data model. I think they should develop in tandem, with a pinned version on this side.

What we could do is add a frontend_url argument to the to_web method to allow the entire thing to be overridden easily?

`frontend_url` remains relevant; I haven't included logic for it yet.

| streaming_response = await VercelAIAdapter.dispatch_request( | ||

| request, | ||

| agent=agent, | ||

| model=extra_data.model, |

I think the only way for the user to send a model different from the one they got from the server is to "hack" the API, but if you deem it relevant we can add validation.
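For illustration, the validation discussed here could be a lookup against the model IDs the server advertised. This is only a sketch under assumptions, not the PR's code: `resolve_model`, `UnknownModelError`, and the `allowed_models` mapping are hypothetical names.

```python
from typing import Dict, Optional


class UnknownModelError(ValueError):
    """Hypothetical error for a model ID the server never offered."""


def resolve_model(requested_id: Optional[str], allowed: Dict[str, str], default_id: str) -> str:
    """Return the model reference for a requested ID, rejecting unlisted IDs."""
    if requested_id is None:
        # No model sent by the client: fall back to the server-side default.
        return allowed[default_id]
    try:
        return allowed[requested_id]
    except KeyError:
        raise UnknownModelError(
            f'Model {requested_id!r} is not available; choose one of {sorted(allowed)}'
        ) from None


# Hypothetical mapping of advertised model IDs to the references used server-side.
allowed_models = {
    'openai:gpt-4.1-mini': 'openai:gpt-4.1-mini',
    'anthropic:claude-haiku-4-5': 'anthropic:claude-haiku-4-5',
}
```

With a mapping like this, a hand-crafted request naming an unlisted model fails with a clear error instead of silently reaching the adapter.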

| with TestClient(app) as client: | ||

| response = client.get('/') | ||

| assert response.status_code == 200 | ||

| assert response.headers['content-type'] == 'text/html; charset=utf-8' | ||

| assert 'cache-control' in response.headers | ||

| assert response.headers['cache-control'] == 'public, max-age=3600' | ||

| assert len(response.content) > 0 |

this can stay as assertions right?

| model_id = f'{model.system}:{model.model_name}' | ||

| display_name = label or format_model_display_name(model.model_name) | ||

| model_supported_tools = model.supported_builtin_tools() | ||

| supported_tool_ids = list(model_supported_tools & builtin_tool_ids) |

I think I suggested putting this in Model.profile so that we can always trust model.profile.supported_builtin_tools. Then we can also override it on OpenAIChatModel to remove web_search if appropriate

Been having some fun testing the combinations, for anyone who wants to try them.

Combinations to try out the agent UI:

1. Generic agent with explicit model: `source .env && uv run --with . clai web -m openai:gpt-4.1-mini`
2. Agent with model (uses agent's configured model): `source .env && uv run --with . clai web -a clai.clai._test_agents:chat_agent`
3. Agent without model + CLI model: `source .env && uv run --with . clai web -a clai.clai._test_agents:agent_no_model -m anthropic:claude-haiku-4-5`
4. Multiple models (first is default, others are options in UI): `source .env && uv run --with . clai web -m google-gla:gemini-2.5-flash-lite -m openai:gpt-4.1-mini -m anthropic:claude-haiku-4-5`
5. Override agent's model with different one: `source .env && uv run --with . clai web -a clai.clai._test_agents:chat_agent -m google-gla:gemini-2.5-flash-lite`
6. Single tool - web search enabled: `source .env && uv run --with . clai web -m openai:gpt-4.1-mini -t web_search`
7. Multiple tools: `source .env && uv run --with . clai web -m anthropic:claude-haiku-4-5 -t web_search -t code_execution`
8. Agent with builtin_tools configured: `source .env && uv run --with . clai web -a clai.clai._test_agents:agent_with_tools`
9. Roleplay waiter instructions: `source .env && uv run --with . clai web -m openai:gpt-4.1-mini -i "You're a grumpy Parisian waiter at a trendy bistro frequented by American tourists. You're secretly proud of the food but act annoyed by every question. Pepper your …`
10. With MCP config: `source .env && uv run --with . clai web -m google-gla:gemini-2.5-flash-lite --mcp mcp_servers.json`
11. ERROR: No model, no agent (should error): `source .env && uv run --with . clai web`
12. ERROR: Agent without model, no CLI model (should error): `source .env && uv run --with . clai web -a clai.clai._test_agents:agent_no_model`
13. WARNING: Unknown tool (should warn): `source .env && uv run --with . clai web -m openai:gpt-4.1-mini -t definitely_not_a_real_tool`

Some fun alternative instructions you could swap in for #9:

- Pirate customer service: `source .env && uv run --with . clai web -m anthropic:claude-haiku-4-5 -i "You're a pirate who somehow ended up working tech support. Answer questions helpfully but can't stop using nautical terms and saying 'arrr'."`
- Overly enthusiastic fitness coach: `source .env && uv run --with . clai web -m google-gla:gemini-2.5-flash-lite -i "You're an extremely enthusiastic fitness coach who relates EVERYTHING back to exercise and healthy living. Even coding questions get workout analogies."`
- Noir detective: `source .env && uv run --with . clai web -m openai:gpt-4.1-mini -i "You're a 1940s noir detective narrating your investigation. Every question is a 'case' and every answer is delivered in hard-boiled prose with lots of rain metaphors."`

When we publish it should naturally just run as |

clai/README.md Outdated

| | ||

| - `--agent`, `-a`: Agent to serve in `module:variable` format | ||

| - `--models`, `-m`: Comma-separated models to make available (e.g., `gpt-5,sonnet-4-5`) | ||

| - `--tools`, `-t`: Comma-separated builtin tool IDs to enable (e.g., `web_search,code_execution`) |

Link to builtin tools docs please. We may also need to list all the IDs as I don't think they're documented anywhere

Is "to enable" correct? Are they all enabled by default or just available as options?

The most correct wording would probably be "offer" or "put at the disposal", I guess, but "enable" seems good enough: it enables them for the user.

| if tool_cls is None or tool_id in ('url_context', 'mcp_server'): | ||

| console.print(f'[yellow]Warning: Unknown tool "{tool_id}", skipping[/yellow]') | ||

| continue | ||

| if tool_id == 'memory': |

I'd rather have a constant set of unsupported builtin tool IDs, and then have a generic error like "X is not supported in the web UI because it requires configuration" or something like that.
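A minimal sketch of that suggestion, assuming plain string tool IDs. The constant names, the contents of both sets, and `select_tools` are hypothetical and not taken from the PR:

```python
from typing import List, Tuple

# Hypothetical constant: tool IDs the web UI cannot offer because they need extra configuration.
UNSUPPORTED_WEB_TOOL_IDS = frozenset({'memory', 'mcp_server'})

# Hypothetical set of all tool IDs known to the CLI (illustrative values only).
KNOWN_TOOL_IDS = frozenset(
    {'web_search', 'code_execution', 'image_generation', 'memory', 'mcp_server'}
)


def select_tools(requested: List[str]) -> Tuple[List[str], List[str]]:
    """Split requested tool IDs into usable IDs and human-readable warnings."""
    usable: List[str] = []
    warnings: List[str] = []
    for tool_id in requested:
        if tool_id in UNSUPPORTED_WEB_TOOL_IDS:
            # One generic message covers every tool in the constant set.
            warnings.append(
                f'"{tool_id}" is not supported in the web UI because it requires configuration'
            )
        elif tool_id not in KNOWN_TOOL_IDS:
            warnings.append(f'Unknown tool "{tool_id}", skipping')
        else:
            usable.append(tool_id)
    return usable, warnings
```

Keeping the unsupported IDs in one constant avoids a per-tool `if tool_id == ...` branch for each new case.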

| for tool in params.builtin_tools: | ||

| if not isinstance(tool, tuple(supported_types)): | ||

| raise UserError( | ||

| f'Builtin tool {type(tool).__name__} is not supported by this model. ' |

If multiple tools are unsupported, we'd now get separate exceptions and only see the first. I'd rather have one exception that lists all the unsupported types. So we can do effectively if len(params.builtin_tools - self.profile.supported_builtin_tools) > 0 (with the correct types of course)
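The single-exception idea can be sketched with a set difference over tool types. The classes below are hypothetical placeholders rather than the library's real tool or error classes, and `check_builtin_tools` is an illustrative name:

```python
from typing import FrozenSet, List


class UserError(RuntimeError):
    """Stand-in for the library's user-facing error type."""


# Hypothetical placeholder tool classes, used only for this illustration.
class WebSearchTool: ...
class CodeExecutionTool: ...
class ImageGenerationTool: ...


def check_builtin_tools(tools: List[object], supported_types: FrozenSet[type]) -> None:
    """Raise one error naming every requested tool type the model does not support."""
    unsupported = {type(tool) for tool in tools} - supported_types
    if unsupported:
        # Sort the names so the message is deterministic.
        names = ', '.join(sorted(cls.__name__ for cls in unsupported))
        raise UserError(f'Builtin tool(s) not supported by this model: {names}')
```

Because the difference is computed once, a user who passes several unsupported tools sees all of them in a single message instead of fixing them one exception at a time.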

| def add_api_routes( | ||

| app: Starlette, | ||

| agent: Agent, | ||

| models: list[ModelInfo] | None = None, |

The comment on line `model=extra_data.model` makes me think we should be passing the raw model instances/names into this method, not the pre-processed `ModelInfo`. And we should generate the `ModelInfo` inside this method / inside the configure endpoint.

| | ||

| app = Starlette() | ||

| | ||

| add_api_routes( |

I think we can refactor this to return a Starlette router for just the API that can then be mounted into the main Starlette app. That way it doesn't need to take `app`, and the API is more cleanly separate from the UI.

| working on removing mcp support and the remaining 4 comments |

| model_id = model_ref if isinstance(model_ref, str) else f'{model.system}:{model.model_name}' | ||

| display_name = label or model.label | ||

| model_supported_tools = model.profile.supported_builtin_tools | ||

| supported_tool_ids = [t.kind for t in (model_supported_tools & builtin_tool_types)] |

Shouldn't this also be t.unique_id? Or at least, I think those are the values that should show up in ModelInfo. Have you tested the entire feature with multiple MCPServerTools?

| # Build model ID → original reference mapping and ModelInfo list for frontend | ||

| model_id_to_ref: dict[str, Model | str] = {} | ||

| model_infos: list[ModelInfo] = [] | ||

| builtin_tools = builtin_tools or [] |

I mentioned above that we should not send agent._builtin_tools into this method, but what we should do is check if any of the provided new builtin_tools are already in agent._builtin_tools, so that we filter them out and don't show them in the UI as a checkbox, if they're hard-coded on the agent and are always going to be included anyway.
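A rough sketch of that filtering step, assuming each tool exposes a `unique_id` that distinguishes multiple instances of the same kind. `FakeBuiltinTool` and `selectable_tools` are hypothetical stand-ins, not the library's classes:

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class FakeBuiltinTool:
    """Hypothetical stand-in for a builtin tool with a kind and an optional instance ID."""

    kind: str
    id: str = ''

    @property
    def unique_id(self) -> str:
        # Tools that can appear more than once are told apart by their instance ID.
        return f'{self.kind}:{self.id}' if self.id else self.kind


def selectable_tools(
    agent_tools: List[FakeBuiltinTool], extra_tools: List[FakeBuiltinTool]
) -> List[FakeBuiltinTool]:
    """Drop tools already hard-coded on the agent, so the UI shows no checkbox for them."""
    fixed_ids = {tool.unique_id for tool in agent_tools}
    return [tool for tool in extra_tools if tool.unique_id not in fixed_ids]
```

Tools already configured on the agent are always included in a run, so only the remaining ones need to be offered as optional choices.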

docs/cli.md Outdated

| | ||

| We plan to continue adding new features, such as interaction with MCP servers, access to tools, and more. | ||

| | ||

| ## Usage |

The introductory section of the page should already mention that it has a CLI, as well as a web UI

Command Line Interface (CLI)

Pydantic AI comes with a CLI, clai (pronounced "clay"). You can use it to chat with various LLMs and quickly get answers, right from the command line, or spin up a uvicorn server to chat with your Pydantic AI agents from your browser.

docs/cli.md Outdated

| | ||

| #### CLI Options | ||

| | ||

| ```bash |

This is more like Usage, right? And then a separate section that lists and explains the CLI options.

| | ||

| my_agent = Agent('openai:gpt-5', instructions='You are a helpful assistant.') | ||

| ``` | ||

| usage: clai [-h] [-m [MODEL]] [-a AGENT] [-l] [-t [CODE_THEME]] [--no-stream] [--version] [prompt] |

Now that this section is gone, I think the main CLI docs need a table of available non-web CLI options, similar to the table we have in the web section

Ok I redid this heavily, you'll need to lmk how you like it when I commit it here

docs/cli.md Outdated

| | ||

| ```bash | ||

| uvx clai --model anthropic:claude-sonnet-4-0 | ||

| uvx clai chat --model anthropic:claude-sonnet-4-0 |

chat is not required here right? I'd prefer for the non-web usage to just be clai as before

Also maybe the web section should be moved to the bottom, below the "Choose a model", "Custom Agents" and "Message History" sections which are currently non-web specific, so that it'll be clear that the page is mostly about the CLI mode, and that web is an alternative "mode" that has its own docs.

I removed the `chat` thing completely, but had to move the prompt from a positional to a named argument so it doesn't collide with `web`.

docs/web.md Outdated

| pip/uv-add 'pydantic-ai-slim[web]' | ||

| ``` | ||

| | ||

| For CLI usage with `clai web`, see the [CLI documentation](cli.md#web-chat-ui). |

Should be in the first section or Usage, not under "Installation".

moved to first section after image

docs/web.md Outdated

| agent = Agent('openai:gpt-5') | ||

| | ||

| app = agent.to_web( | ||

| models=['openai:gpt-5'], |

Let's drop this line as it's not necessary for what we're trying to show here

removed models=['openai:gpt-5'],

| ) | ||

| return streaming_response | ||

| | ||

| return [ |

Wouldn't it be nicer to return a Starlette app that has these endpoints without `/api/`, and then to mount that app into the bigger app at `/api/`?

| A list of Starlette Route objects for the API endpoints. | ||

| """ | ||

| # Build model ID → original reference mapping and ModelInfo list for frontend | ||

| model_id_to_ref: dict[str, Model | str] = {} |

As mentioned above, I think adding in default: agent.model belongs here, just like we handle agent._builtin_tools here

| from typing import TypeVar | ||

| | ||

| import httpx | ||

| from starlette.applications import Starlette |

We should raise a pretty import error if this fails to import, saying to add the web optional group. There are various examples of except ImportError: in the codebase

used a2a as an example
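As a rough illustration of the "pretty import error" idea, the same behaviour can be wrapped in a small helper. The thread describes an `except ImportError:` guard at import time; `import_optional` below is a hypothetical helper written for this sketch, and the exact message wording is an assumption:

```python
import importlib
from types import ModuleType


def import_optional(module_name: str, extra: str) -> ModuleType:
    """Import a module, or raise an ImportError that names the optional group to install."""
    try:
        return importlib.import_module(module_name)
    except ImportError as import_error:
        # Chain the original error so the underlying cause stays visible in the traceback.
        raise ImportError(
            f'Please install the `{module_name}` package to use this feature, '
            f'you can use the `{extra}` optional group: '
            f'`pip install "pydantic-ai-slim[{extra}]"`'
        ) from import_error
```

A call such as `import_optional('starlette.applications', 'web')` would then fail with an actionable message when the `web` group is missing, rather than a bare `ModuleNotFoundError`.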

…emented at the base model level in `supported_builtin_tools`

docs/cli.md Outdated

| | ||

| ```bash | ||

| uvx clai --agent custom_agent:agent "What's the weather today?" | ||

| clai --agent custom_agent:agent -p "What's the weather today?" |

Is this -p arg required now? It shouldn't be (because that'd be backward incompatible), and if it is still optional, I'd rather not have it in the example

It's not optional, it's required now: we need it so as not to clash with `web`. That was why I had moved the bare `clai` to `clai chat`, because it's weird having the program be the command when it can also have subcommands.

@dsfaccini I agree that can be a bit weird, but I'm thinking about backward compatibility and people that have already gotten used to clai "what up" working. I really don't want to force them to now add chat or -p; this web feature is supposed to make clai more powerful, not make the basic case of CLI usage more complicated. I think the only downside is that previous clai web would've sent prompt "web", and now it launches web mode, but web is very unlikely as a prompt.
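One way to keep `clai "what up"` working while adding a `web` mode is to inspect the first argument before choosing a parser. This is only a sketch using `argparse`, not clai's actual implementation; `parse_cli` and the reduced option set are assumptions:

```python
import argparse
from typing import Sequence


def parse_cli(argv: Sequence[str]) -> argparse.Namespace:
    """Dispatch to web mode only when the first argument is literally 'web'."""
    if argv and argv[0] == 'web':
        parser = argparse.ArgumentParser(prog='clai web')
        parser.add_argument('-a', '--agent')
        # Repeating -m collects several models, as in the test commands in this thread.
        parser.add_argument('-m', '--model', action='append')
        args = parser.parse_args(argv[1:])
        args.mode = 'web'
        return args

    # Default mode: the prompt stays an optional positional argument, as before.
    parser = argparse.ArgumentParser(prog='clai')
    parser.add_argument('prompt', nargs='?')
    parser.add_argument('-a', '--agent')
    parser.add_argument('-m', '--model')
    args = parser.parse_args(argv)
    args.mode = 'chat'
    return args
```

The trade-off is the one named in the comment: the literal prompt `web` can no longer be sent as a bare positional argument, while every other existing invocation keeps its meaning.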

| return tool_data.kind | ||

| | ||

| | ||

| DEPRECATED_BUILTIN_TOOL_KINDS: frozenset[str] = frozenset({'url_context'}) |

Minor thing, but for consistency what do you think about making this one and TOOL_KINDS_THAT_REQUIRE_CONFIG below sets of type[AbstractBuiltinTool], similar to Model.supported_builtin_tools? That way we only deal in types, not kinds

that's fine

| instructions=instructions, | ||

| ) | ||

| | ||

| routes = [Mount('/api', routes=api_routes)] |

Can we have create_api_routes return an app rather than a list of routes, that is then mounted under the main app?

| version = request.query_params.get('version') | ||

| ui_version = version or DEFAULT_UI_VERSION | ||

| | ||

| content = await _get_ui_html(ui_version) |

This will raise if the version doesn't exist; do we show that error message properly or just a 500 error?

set it up to return a 502, either way this only happens if the user sets the query param.

Co-authored-by: Douwe Maan <me@douwe.me>

clai/README.md Outdated

| ``` | ||

| | ||

|  | ||

|  |

Hmm I worry that this won't render correctly on https://pypi.org/project/clai/. So a full URL to ai.pydantic.dev is likely better

right I wasn't sure how this is gonna bundle. in theory the github link is fine bc if github is down the readme is also down lol, but if pypi uses its own bundle then that makes a difference.

should I wait until this is published to change the link?

Co-authored-by: Douwe Maan <me@douwe.me>

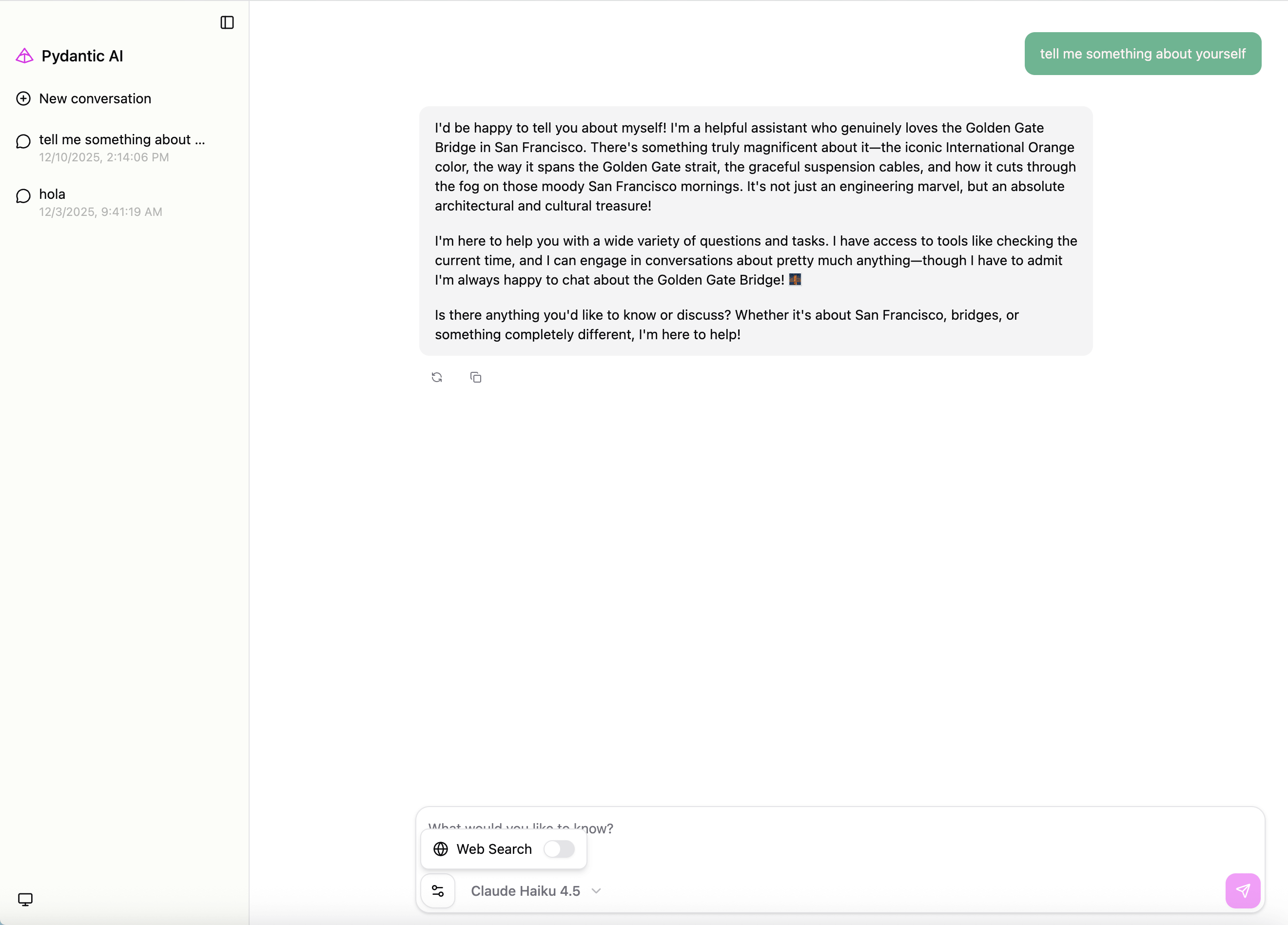

clai web or Agent.to_web()

Web-based chat interface for Pydantic AI agents.

- `pydantic_ai.ui.web`
- `Agent.to_web()`
- `fastapi`

`app = create_chat_app(agent)`

The following endpoints come preconfigured:

- `GET /` and `/:id` - serve the chat UI
- `POST /api/chat` - Main chat endpoint using `VercelAIAdapter`
- `GET /api/configure` - Returns available models and builtin tools
- `GET /api/health` - Health check

options and example

NOTE: the module for options is currently `pydantic_ai.ui.web`.

Pre-configured model options:

- `anthropic:claude-sonnet-4-5`
- `openai-responses:gpt-5`
- `google-gla:gemini-2.5-pro`

Supported builtin tools:

- `web_search`
- `code_execution`
- `image_generation`

testing

- `tests/test_ui_web.py`

notes

- `@pydantic/ai-chat-ui@0.0.2`
- `clai web` command to launch from the CLI (as in `uvx pydantic-work` without the whole URL magic)
- `docs/ui/to_web.md`? I'd also reference this in `docs/ui/overview.md` and `docs/agents.md`

EDIT: if you try it out, it's worth noting that the current hosted UI doesn't handle `ErrorChunk`s, so you will get no spinner and no response when there's a model-level error, and fastapi will return a 200 anyway. This will happen, for instance, when you use a model for which you don't have a valid API key in your environment.

I opened a PR for the error chunks here pydantic/ai-chat-ui#4.

Closes #3295