ControlNet++: Improving Conditional Controls with Efficient Consistency Feedback

We provide a demo website for you to play with our ControlNet++ models and generate images interactively. To run it locally, use the following commands:

    git clone https://github.com/liming-ai/ControlNet_Plus_Plus.git
    cd ControlNet_Plus_Plus
    git checkout inference
    pip3 install -r requirements.txt

Please download the model weights and place each one in its corresponding subfolder of `checkpoints`:

| model | HF weights🤗 |
|---|---|
| LineArt | model |
| Depth | model |
| Segmentation | model |
| Hed (SoftEdge) | model |
| Canny | model |
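Before launching the demo, it can help to confirm that every subfolder of `checkpoints` actually received its weights. The following is a minimal sketch, not part of the repository: the helper name `find_empty_subsets` is hypothetical, and it only assumes that `checkpoints` sits in the current working directory with one subfolder per model.

```python
from pathlib import Path


def find_empty_subsets(root):
    """Return the names of sub-folders under `root` that contain no files."""
    root = Path(root)
    empty = []
    for sub in sorted(p for p in root.iterdir() if p.is_dir()):
        # Search recursively, since weights may sit in nested folders.
        if not any(f.is_file() for f in sub.rglob("*")):
            empty.append(sub.name)
    return empty


# Assumption: the script is run from the repository root.
ckpt_root = Path("checkpoints")
if ckpt_root.is_dir():
    print("Sub-folders still missing weights:", find_empty_subsets(ckpt_root))
else:
    print("No checkpoints folder found in the current directory")
```

An empty list means every subfolder holds at least one file; it does not verify that the files are the correct weights.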

And then run:

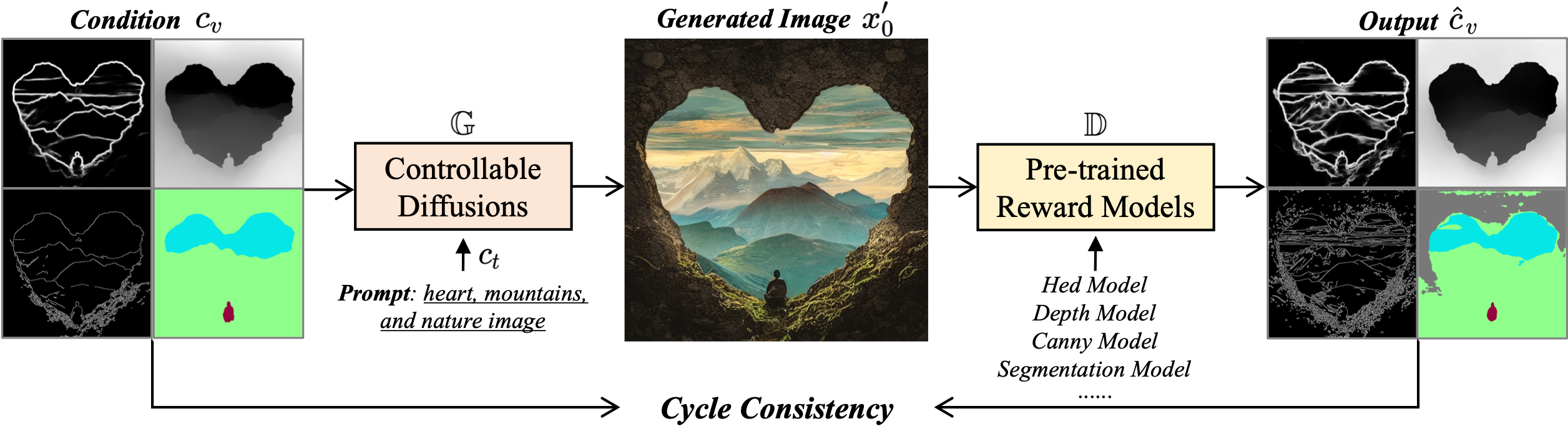

python3 app.pyWe model image-based controllable generation as an image translation task from input conditional controls

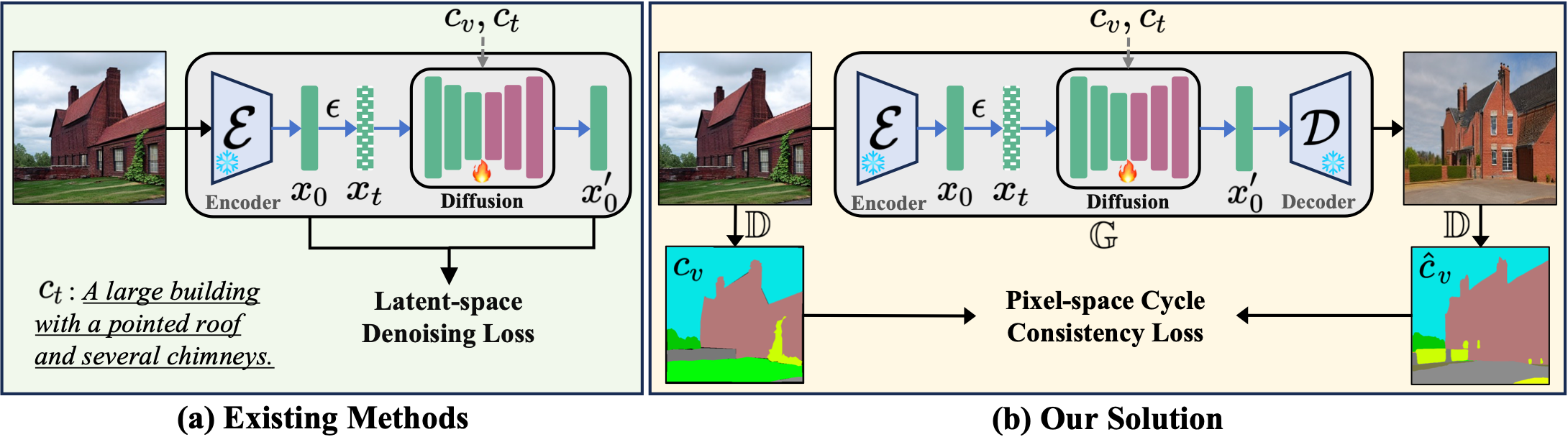

(a) Existing methods achieve implicit controllability by introducing image-based conditional control

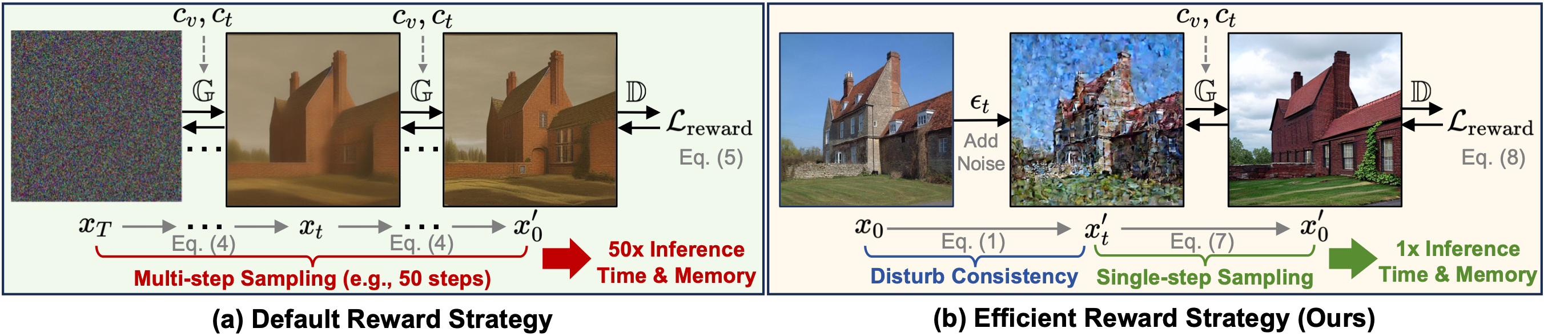

(a) Pipeline of the default reward fine-tuning strategy. Reward fine-tuning requires sampling all the way to the full image, so gradients must be kept for every timestep, and the memory this requires exceeds what current GPUs can provide. (b) We add a small noise (
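To make the memory argument concrete, here is a toy sketch of how a clean image can be estimated in a single step from a lightly noised one, using the standard DDPM relation between `x_t`, `x_0`, and the noise. It is an illustration under stated assumptions, not the repository's training code: the function names, the value of `alpha_bar_t`, and the use of the true noise in place of a model prediction are all hypothetical.

```python
import math
import random


def add_noise(x0, alpha_bar_t, rng):
    """Forward diffusion: scale the image and mix in Gaussian noise."""
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    a, b = math.sqrt(alpha_bar_t), math.sqrt(1.0 - alpha_bar_t)
    x_t = [a * x + b * e for x, e in zip(x0, eps)]
    return x_t, eps


def predict_x0(x_t, eps_pred, alpha_bar_t):
    """Single-step estimate of the clean image from x_t and a noise prediction."""
    a, b = math.sqrt(alpha_bar_t), math.sqrt(1.0 - alpha_bar_t)
    return [(x - b * e) / a for x, e in zip(x_t, eps_pred)]


rng = random.Random(0)
x0 = [0.2, -0.5, 0.9, 0.0]   # toy "image" as a flat list of pixel values
alpha_bar_t = 0.98           # assumed value: close to 1 means only a small noise

x_t, eps = add_noise(x0, alpha_bar_t, rng)

# Stand-in for the model: reuse the true noise as the "prediction".
x0_hat = predict_x0(x_t, eps, alpha_bar_t)
max_err = max(abs(u - v) for u, v in zip(x0, x0_hat))
print("max reconstruction error:", max_err)
```

In a real setup the noise prediction would come from the diffusion model, and a loss computed on `x0_hat` would need gradients through one denoising step only, instead of through the whole sampling chain described in (a).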

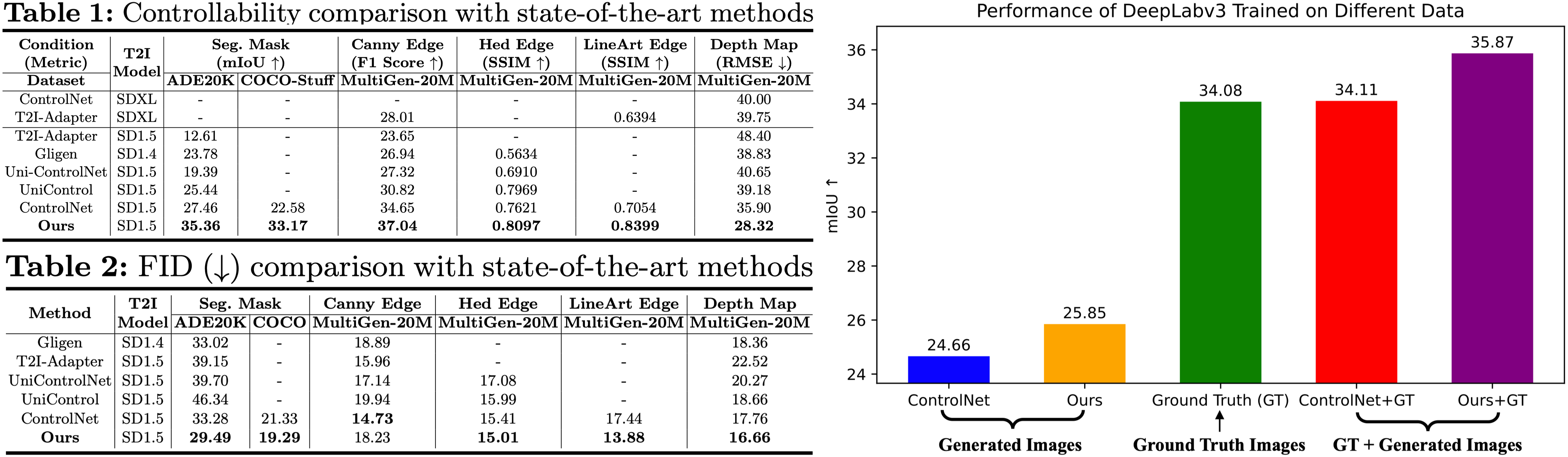

For a deep dive into our analyses, discussions, and evaluations, check out our paper.

This project is licensed under the MIT License - see the LICENSE file for details.

If our work assists your research, feel free to give us a star ⭐ or cite us using:

    @inproceedings{controlnet_plus_plus,
        author    = {Ming Li and Taojiannan Yang and Huafeng Kuang and Jie Wu and Zhaoning Wang and Xuefeng Xiao and Chen Chen},
        title     = {ControlNet++: Improving Conditional Controls with Efficient Consistency Feedback},
        booktitle = {European Conference on Computer Vision (ECCV)},
        year      = {2024},
    }