- Notifications

You must be signed in to change notification settings - Fork 4.9k

🎉 New Source: Twilio Taskrouter API [low-code cdk] #18685

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

| @Alcadeus0 Thanks for your contribution. I will help to review it, will get back to you EOD. |

| Sure! |

YiyangLi left a comment

YiyangLi left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I think it's a good start. The source connector only returns one single workspace. I would encourage you to implement more streams under Taskrouter. Thanks.

...grations/connectors/source-twilio-taskrouter/source_twilio_taskrouter/twilio_taskrouter.yaml Show resolved Hide resolved

...grations/connectors/source-twilio-taskrouter/source_twilio_taskrouter/twilio_taskrouter.yaml Outdated Show resolved Hide resolved

airbyte-integrations/connectors/source-twilio-taskrouter/source_twilio_taskrouter/spec.yaml Outdated Show resolved Hide resolved

| record_selector: | ||

| $ref: "*ref(definitions.selector)" | ||

| paginator: | ||

| type: NoPagination |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I understand that only one workspace is fetched, so there's no need to paginate. But if sid is offered, you can fetch other resources under the workspace. Can you implement one that requires a paginator?

And the Twilio's paginator is applied across all APIs, including taskrouter. Can you leverage codes in the existing source-twilio?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Do you want me to create another workspace stream and have the user enter the resource name? Because certain resources have indicated to avoid use of Page query in the taskrouter api

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

A paginator would be needed when the response is too long, or the resource set is huge. Consequently, the requester has to include the page number or another form of cursor to get the remaining parts.

For workspaces, the API "will return the first 50 Workspaces. Supply a PageSize parameter to fetch more than 50 Workspaces." Maybe you can't create multiple workspaces, but I guess the resource set is huge on other streams, for example, activities

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Sure that works, so for the workspaces stream I'll have to add another optional parameter for the Page Size. Can you point me to a cdk doc on how to implement it? It would be great

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

"will return the first 50 Workspaces. Supply a PageSize parameter to fetch more than 50 Workspaces."

It comes from Twilio's API doc, you may supply the parameter to get more than 50. And you may pick or implement a paginator in order to get other pages. For example, the PageSize is 100, and there are 500 entities, there will be 5 pages in total. Airbyte needs to send 5 requests to complete a full import.

My suggestion is to have a workspaces stream that fetches all workspaces, and add another stream that depends on the required field workspaceId.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This is the doc for the paginator

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I'm having some trouble implementing the paginator, so I've set it up so that the user can enter a page_size input and page_size reads it as page_size: "{{config['number']}}" but how do i convert it into an integer from a string

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

number is not part of spec.yaml. When you add it to the spec, you could set the type of an attribute to integer.

My understanding of page_size is it defines the number of entities in a page. You could set a constant number, for example, 50. Even a customer is allowed to use a large number, say 65535, the server-side on twilio may cap it to another number, say 200. And if there are thousands of entities, you need to turn the next page by including the page number.

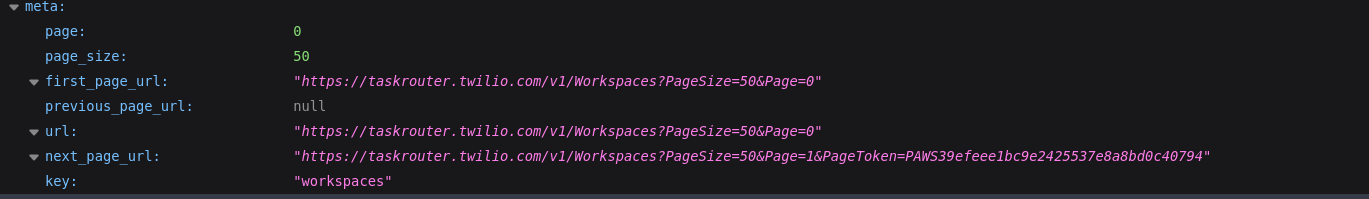

source-greenhouse is a good example, the greenhouse includes the page number in the headers of a response. It's an industry standard called RFC 8288. Twilio envelopes the entities, and set page_size, page_number and even the link to next page, called next_page_uri in the response body. Something like the following:

{ "page": 0, "page_size": 50, "uri": "\/2010-04-01\/Accounts\/ACXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX\/Calls.json", "first_page_uri": "\/2010-04-01\/Accounts\/ACXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX\/Calls.json?Page=0&PageSize=50", "previous_page_uri": null, "next_page_uri": "\/2010-04-01\/Accounts\/ACXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX\/Calls.json?Page=1&PageSize=50&AfterSid=CA228399228abecca920de212121", "calls": [ To test your pagination strategy, you don't need to create excessive resources. You could simply set page_size to a small number. For example, you have 6 workers, and the page size is 2, you need to paginate 3 times. After you run the read command, airbyte will tell you how many workers are read.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

source-greenhouse is a good example, the greenhouse includes the page number in the headers of a response. It's an industry standard called RFC 8288. Twilio envelopes the entities, and set page_size, page_number and even the link to next page, called next_page_uri in the response body. Something like the following:

Yes, I was confused at first when the response header did not indicate the page_size and number but as you explained it enveloping it makes sense. I'll add the paginator source to the workers stream with the example values you provided and see how it goes.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@YiyangLi could you take a look at the changes and see if I need anything else?

airbyte-integrations/connectors/source-twilio-taskrouter/source_twilio_taskrouter/spec.yaml Outdated Show resolved Hide resolved

YiyangLi left a comment

YiyangLi left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

It's good to see the progression. Can you share how to create

| | ||

| check: | ||

| stream_names: | ||

| - "allworkspaces" |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I think you only need one stream to verify the check

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

sure I'll remove the other two streams

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

it still requires fetching all 3 streams to verify the connectivity. Can you simplify it? Thanks.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Yeah i seemed to have made the new changes in only my topic branch. I'll push the changes to this branch

| path: "/v1/Workspaces" | ||

| primary_key: "sid" | ||

| retriever: | ||

| $ref: "*ref(definitions.base_stream.retriever)" |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

do you need the paginator for allworkspaces? I understand that in your test account, there's only one workspace, but for others, there might be more than 50, and a paginator is required. And to avoid a duplicated codes, you can have an incremental_stream that extends base_stream, and you can move the paginator from workers to the incremental stream. workers and allworkspaces extends the incremental_stream, and incremental_stream extends base_stream.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Is the incremental stream implemented using stream slicers? If so, the date-updated property of a workspace can be used

airbyte-integrations/connectors/source-twilio-taskrouter/source_twilio_taskrouter/spec.yaml Outdated Show resolved Hide resolved

| | ||

| 1. Navigate to the Airbyte Open Source dashboard. | ||

| 2. Set the name for your source. | ||

| 3. Enter your `account_sid`. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

can you provide more twilio links to tell users where to find the account_sid, auth_token and sid? Users don't see sid in the UI, the label text is the description, which is workspace Id.

| I just realized that you create the PR based on the master branch in the forked repository, can you use a topic branch? |

| I run the command to fetch data, including workers, the read never stops. I guess it's the problem on the pagination, you need to tell airbyte to stop when it reaches to the last page. It happens when the next_page_url is null. Take the following payload, it means the page size is 2, and the next page is page 1 (0-index based): while for the next response, the next_page_url is null, meaning it reaches to the end. You could reproduce the problem by setting a small page_size. |

I created a new branch twilio-taskrouter but is there a way to edit the pull request to point to it instead of master branch? or should i create a new pr for it? |

| I fixed the endless read error when paginating by implementing CursorPagination as it has a stop condition parameter and giving it a value of null ends when no more records are to be read |

It's okay, just leave it as is. In order to be qualified for the Octoberfest bounty, you need to create the PR before Nov 4th. Please make sure you share the edit permissions with us. https://docs.github.com/en/pull-requests/collaborating-with-pull-requests/working-with-forks/allowing-changes-to-a-pull-request-branch-created-from-a-fork Me or the CI will push commits in order to get your codes published. |

Not sure if you pushed your local commits correctly, the read in my local environment never ends. The version I am running is 2c6a104. You could try it by the following commands. Build Run In my Twilio account, I have 34 workers. I didn't set the page_size in the secrets/config.json. My config is similar to the sample_config.json you provide. |

| extractor: | ||

| field_pointer: ["workers"] | ||

| paginator: | ||

| type: "DefaultPaginator" |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I don't see the CursorPagination you refer to, did you forget to push your commits?

| I got the error. |

| field_pointer: ["workers"] | ||

| | ||

| streams: | ||

| - "*ref(definitions.allworkspaces_stream)" |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

you need to list other streams if they are configured in integration_tests/configured_catalog.json.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Yeah I listed the other streams

| inject_into: "request_parameter" | ||

| field_name: "page_size" | ||

| pagination_strategy: | ||

| type: "CursorPagination" |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

the paginator doesn't turn to the next page. In my test account, I have 57 workers, I would expect the connector firstly fetches the first 50 workers as 50 is the default page size, and it increases the page to 1 and use page=1 to get the remaining 7. But only the first 50 are read.

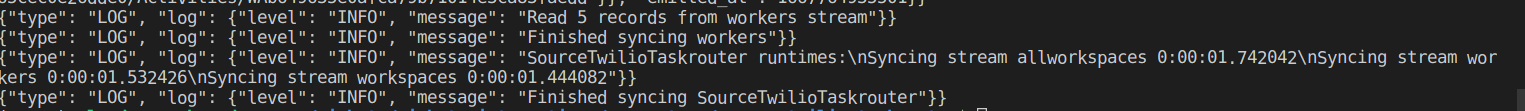

{"type": "LOG", "log": {"level": "INFO", "message": "Read 50 records from workers stream"}} {"type": "LOG", "log": {"level": "INFO", "message": "Finished syncing workers"}} {"type": "LOG", "log": {"level": "INFO", "message": "SourceTwilioTaskrouter runtimes:\nSyncing stream allworkspaces 0:00:00.364470\nSyncing stream workers 0:00:00.780400\nSyncing stream workspaces 0:00:00.384402"}} {"type": "LOG", "log": {"level": "INFO", "message": "Finished syncing SourceTwilioTaskrouter"}} There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I've made sure the edit access for maintainers is checked, just need to fix the paginator. What could be the reason for it to be unable to read the remaining records. I'll try testing it out by creating more on my test acc

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

can you run the test again and see if it works?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

What could be the reason for it to be unable to read the remaining records

The reason is that you need to parse response.body.meta, get the page number in the next_page_url, and use it to send the request again, until next_age_url is null.

request_url = "base_url/v1/workers"; do { response = request.get(request_url); request_url = response.body.meta.next_page_url; } while (request_url != null) The pseudo-code looks like above.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

where would i need to enter the code? in which file specifically? Is it by done by defining custom components?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

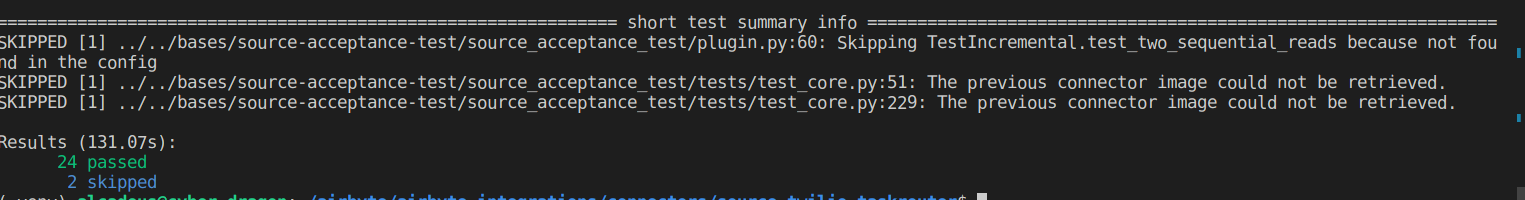

Since a new paginator is introduced, please run unit tests, integration tests, and acceptance test in your local environment and provide the logs again. The one in the PR description is out-dated. Thanks.

https://docs.airbyte.com/connector-development/testing-connectors/source-acceptance-tests-reference

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

You are able to fetch 5 workers because the page_size of 2 is not passed to the request parameters. The property in the config.json plays no effects.

I still don't get 57 workers. When I use a different page_size, the result is the same, meaning only the first page is fetched.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

where would i need to enter the code? in which file specifically? Is it by done by defining custom components?

I just offer you a pseudo-code to explain the algorithm behind, you need to set up the paginator correctly so that it works like what the algorithm describes. According to your yaml file, your paginator doesn't parse the response at all. Check greenhouse.yaml for an example.

| field_name: "page_size" | ||

| pagination_strategy: | ||

| type: "CursorPagination" | ||

| cursor_value: "{{ headers['url']['next'] }}" |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

you try to tell the paginator to get the cursor value from the headers. Are you sure the headers contain the value you want?

The following is an example response header when I try to GET /Workers

Date: Mon, 07 Nov 2022 04:10:22 GMT Content-Type: application/json Content-Length: 4151 Connection: keep-alive x-rate-limit-remaining: 50 x-rate-limit-limit: 50, 50;window=1 Twilio-Concurrent-Requests: 1 x-rate-limit-config: Worker-List Twilio-Request-Id: RQ5982d0470f1e214bfd748a8eb699f817 Twilio-Request-Duration: 0.021 Access-Control-Allow-Origin: * Access-Control-Allow-Headers: Accept, Authorization, Content-Type, If-Match, If-Modified-Since, If-None-Match, If-Unmodified-Since, Idempotency-Key Access-Control-Allow-Methods: GET, POST, DELETE, OPTIONS Access-Control-Expose-Headers: ETag Access-Control-Allow-Credentials: true X-Powered-By: AT-5000 X-Shenanigans: none X-Home-Region: us1 X-API-Domain: taskrouter.twilio.com Strict-Transport-Security: max-age=31536000 There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Okay, I'll figure out the appropriate cursor value to solve the response header

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

the cursor value doesn't come from the header. It's part of the response body, it's body["meta"]["next_page_uri"]

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I had tried that but it shows that body is not defined

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I'm trying with response['meta']['next_page_url'] but it shows the JSON Decode Error at line 1 col 1 char(0)

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

there's a case that next_page_url is null, which indicates you have reached the last page. And maybe that's the error reason of JSON deserializer.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

or maybe it's because I am using a record extractor to filter out the meta json respone?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

When i try checking the API, it does show the next page url. Could it maybe related to the page_token injecting the path?

The next_page_url is the URL for the next page, you will get another next_page_url in the meta with this URL, until the value is null. This is the pseudo-code I provided you above. The right pagination strategy or paginator in the YAML works like the pseudo-code if it's configured correctly. You can also manually change the URL in a tool like postman to understand how it works.

request_url = "base_url/v1/workers"; do { response = request.get(request_url); request_url = response.body.meta.next_page_url; } while (request_url != null) There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

YiyangLi left a comment

YiyangLi left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

[ ] pass the page_size to request parameters correctly since you put it under the spec.json

[ ] parse the cursor value correctly from the response, and use it for the next request if not null.

| /test connector=connectors/source-twilio-taskrouter

Build PassedTest summary info: |

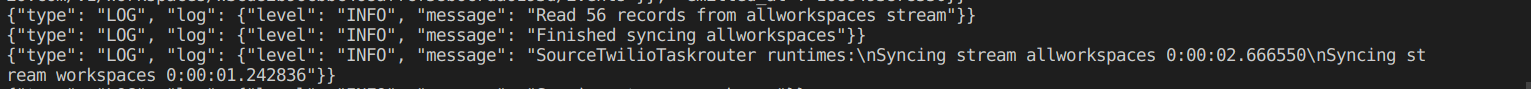

| I added a SubStreamSlicer to read all workspaces and read all other endpoints with the stream slicer. Also make pagination to work, now reads the 57 records in worker stream. |

| /publish connector=connectors/source-twilio-taskrouter

if you have connectors that successfully published but failed definition generation, follow step 4 here |

marcosmarxm left a comment

marcosmarxm left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thanks @Alcadeus0 and @YiyangLi for the review.

| Thanks @marcosmarxm and @YiyangLi for reviewing and helping me with this PR. |

| @Alcadeus0 can you please update your profile with your email? :) If you prefer, you can DM me instead with the following:

Thanks! |

| @RealChrisSean I've added my email to my profile. |

What

It's useful for twilio users to keep track of their workspaces. This connector adds workspace data from a user's twilio account using the workspace ID

How

This connector adds the following stream

Recommended reading order

🚨 User Impact 🚨

No changes to existing code

Pre-merge Checklist

New Connector

Community member or Airbyter

airbyte_secret./gradlew :airbyte-integrations:connectors:<name>:integrationTest.README.mdbootstrap.md. See description and examplesdocs/integrations/<source or destination>/<name>.mdincluding changelog. See changelog exampledocs/integrations/README.mdairbyte-integrations/builds.mdAirbyter

If this is a community PR, the Airbyte engineer reviewing this PR is responsible for the below items.

/test connector=connectors/<name>command is passing/publishcommand described hereTests

Unit

None

Integration

Acceptance