- Notifications

You must be signed in to change notification settings - Fork 2.3k

Description

Environment:

TensorRT Version: 7.2.1

CUDA Version: 11.1

CUDNN Version: 8.0.4

Operating System + Version: ubuntu18.04

Python Version: 3.6.10

PyTorch Version: 1.7.0

##Description:

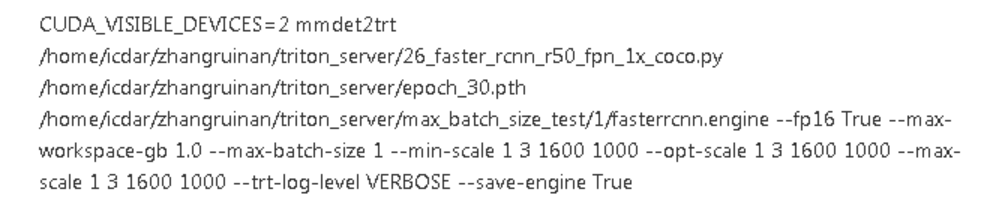

First, using the project mmdetection-to-tensorrt(link:https://github.com/grimoire/mmdetection-to-tensorrt) to convert our faster rcnn model(.pth file) directly convert to trtmodel(max batch size =1), the convert command is as follows:

Then, using same command but set max batch size =2, the convert command is as follows:

Third, using above converted trtmodels to infer with same image respectively, for trtmodel (with max batch size =2) the image repeated twice, adding the below code to record the layer time, then summarising the top20 layer time consuming for above two trtmodels as following table, from the table, for the network layers (with green identified) time consuming for the model (with max batch size =2) are almost double compared to the model(with max batch size =1), that seems unreasonable, because the network layers (with green identified) is convolution layer with tensor operation which can be considered parallel. Can you give me some suggestions? Thank you.