The OpenAI Agents SDK is a lightweight yet powerful framework for building multi-agent workflows. It is provider-agnostic, supporting the OpenAI Responses and Chat Completions APIs, as well as 100+ other LLMs.

Note

Looking for the JavaScript/TypeScript version? Check out Agents SDK JS/TS.

- Agents: LLMs configured with instructions, tools, guardrails, and handoffs

- Handoffs: A specialized tool call used by the Agents SDK for transferring control between agents

- Guardrails: Configurable safety checks for input and output validation

- Sessions: Automatic conversation history management across agent runs

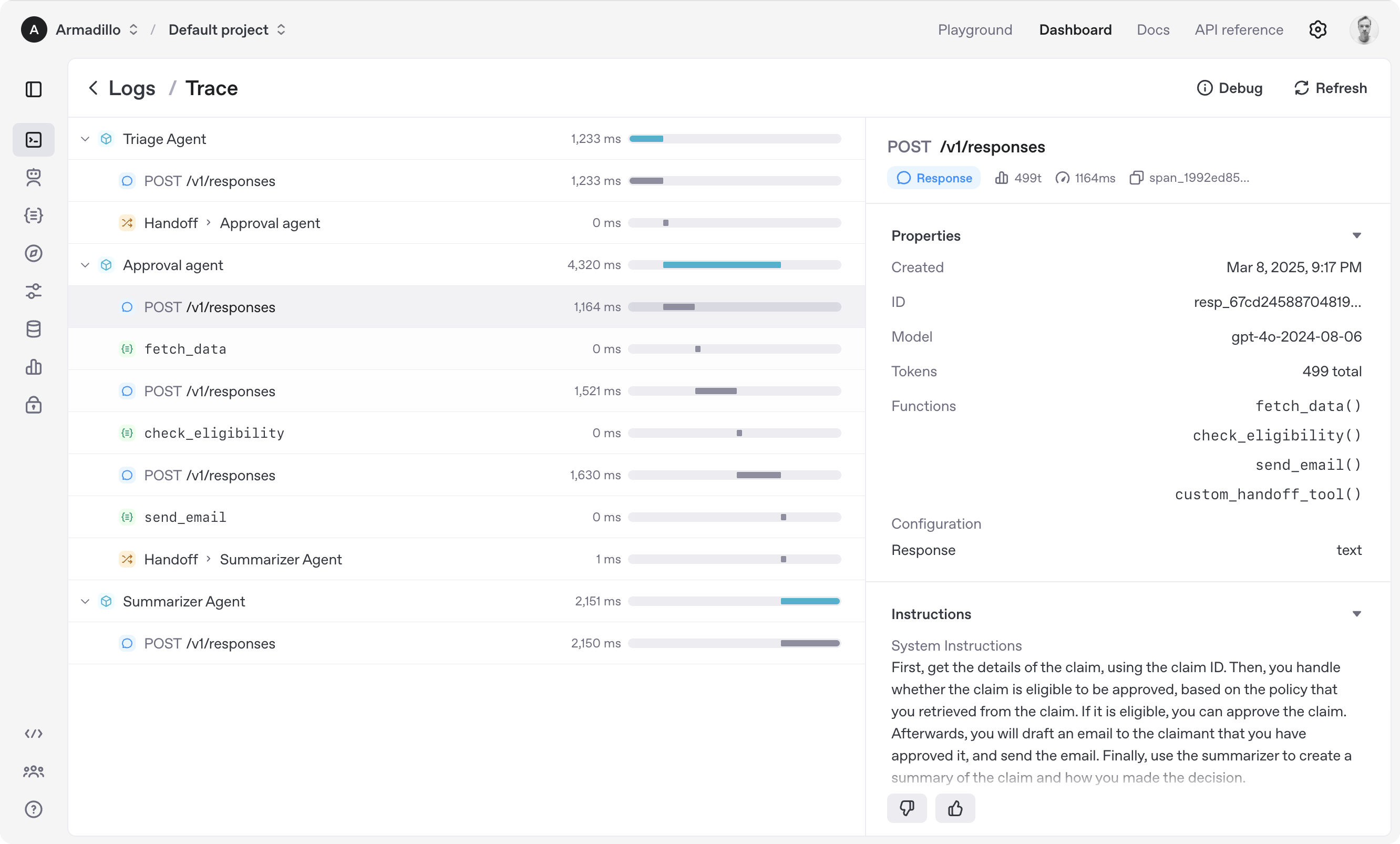

- Tracing: Built-in tracking of agent runs, allowing you to view, debug and optimize your workflows

Explore the examples directory to see the SDK in action, and read our documentation for more details.

- Set up your Python environment

- Option A: Using venv (traditional method)

```bash
python -m venv env
source env/bin/activate  # On Windows: env\Scripts\activate
```

- Option B: Using uv (recommended)

```bash
uv venv
source .venv/bin/activate  # On Windows: .venv\Scripts\activate
```

- Install Agents SDK

```bash
pip install openai-agents
```

For voice support, install with the optional `voice` group: `pip install 'openai-agents[voice]'`.

```python
from agents import Agent, Runner

agent = Agent(name="Assistant", instructions="You are a helpful assistant")

result = Runner.run_sync(agent, "Write a haiku about recursion in programming.")
print(result.final_output)

# Code within the code,
# Functions calling themselves,
# Infinite loop's dance.
```

(If running this, ensure you set the `OPENAI_API_KEY` environment variable)
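Because the example above depends on `OPENAI_API_KEY`, a small preflight check can fail early with a clearer message than a rejected API call. This is a minimal sketch; `require_api_key` is a hypothetical helper written for illustration and is not part of the SDK.

```python
import os


def require_api_key(env=None, name="OPENAI_API_KEY"):
    """Return the key stored under `name`, or raise a descriptive error.

    Illustrative helper only (not an SDK function). `env` defaults to
    os.environ and is injectable so the check can be tested.
    """
    env = os.environ if env is None else env
    key = env.get(name, "").strip()
    if not key:
        raise RuntimeError(f"{name} is not set; export it before running the agent.")
    return key
```

Calling `require_api_key()` at the top of a script, before `Runner.run_sync(...)`, is one way to use it.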

(For Jupyter notebook users, see hello_world_jupyter.ipynb)

```python
# Handoffs: the triage agent routes the request to a language-specific agent.
from agents import Agent, Runner
import asyncio

spanish_agent = Agent(
    name="Spanish agent",
    instructions="You only speak Spanish.",
)

english_agent = Agent(
    name="English agent",
    instructions="You only speak English",
)

triage_agent = Agent(
    name="Triage agent",
    instructions="Handoff to the appropriate agent based on the language of the request.",
    handoffs=[spanish_agent, english_agent],
)


async def main():
    result = await Runner.run(triage_agent, input="Hola, ¿cómo estás?")
    print(result.final_output)
    # ¡Hola! Estoy bien, gracias por preguntar. ¿Y tú, cómo estás?


if __name__ == "__main__":
    asyncio.run(main())
```

```python
# Function tools: the agent can call get_weather to answer the question.
import asyncio

from agents import Agent, Runner, function_tool


@function_tool
def get_weather(city: str) -> str:
    return f"The weather in {city} is sunny."


agent = Agent(
    name="Hello world",
    instructions="You are a helpful agent.",
    tools=[get_weather],
)


async def main():
    result = await Runner.run(agent, input="What's the weather in Tokyo?")
    print(result.final_output)
    # The weather in Tokyo is sunny.


if __name__ == "__main__":
    asyncio.run(main())
```

When you call `Runner.run()`, we run a loop until we get a final output.

1. We call the LLM, using the model and settings on the agent, and the message history.

2. The LLM returns a response, which may include tool calls.

3. If the response has a final output (see below for more on this), we return it and end the loop.

4. If the response has a handoff, we set the agent to the new agent and go back to step 1.

5. We process the tool calls (if any) and append the tool response messages. Then we go to step 1.

There is a max_turns parameter that you can use to limit the number of times the loop executes.
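The loop above can be sketched in plain Python without the SDK. This is only an illustration of the control flow, not the SDK's implementation: `Response`, `run_loop`, `MaxTurnsReached`, and the scripted agent functions are hypothetical names, each "agent" is a function standing in for an LLM call, and raising an exception at the turn limit is an assumption of this sketch.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Response:
    """One scripted model response (illustrative, not an SDK type)."""
    final_output: Optional[str] = None
    handoff_to: Optional[str] = None
    tool_calls: list = field(default_factory=list)  # (tool_name, argument) pairs


class MaxTurnsReached(Exception):
    """Raised by this sketch when no final output arrives within max_turns."""


def run_loop(agents, start, user_input, tools, max_turns=10):
    """Drive the loop; `agents` maps a name to a function(history) -> Response."""
    current = start
    history = [("user", user_input)]
    for _ in range(max_turns):
        response = agents[current](history)        # steps 1-2: call the model
        if response.final_output is not None:      # step 3: final output ends the loop
            return current, response.final_output
        if response.handoff_to is not None:        # step 4: switch agent, start over
            current = response.handoff_to
            continue
        for name, arg in response.tool_calls:      # step 5: run tools, record results
            history.append(("tool", tools[name](arg)))
    raise MaxTurnsReached(f"no final output after {max_turns} turns")


def triage(history):
    # Always hands off to the weather agent.
    return Response(handoff_to="weather")


def weather(history):
    # Calls the tool once, then answers with the tool result.
    results = [content for role, content in history if role == "tool"]
    if not results:
        return Response(tool_calls=[("get_weather", "Tokyo")])
    return Response(final_output=results[-1])


agents = {"triage": triage, "weather": weather}
tools = {"get_weather": lambda city: f"The weather in {city} is sunny."}

agent_name, output = run_loop(agents, "triage", "What's the weather in Tokyo?", tools)
print(agent_name, output)
```

This run takes three turns (handoff, tool call, final answer), so the same call with `max_turns=2` hits the limit before a final output is produced.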

Final output is the last thing the agent produces in the loop.

- If you set an `output_type` on the agent, the final output is when the LLM returns something of that type. We use structured outputs for this.

- If there's no `output_type` (i.e. plain text responses), then the first LLM response without any tool calls or handoffs is considered the final output.

As a result, the mental model for the agent loop is:

- If the current agent has an `output_type`, the loop runs until the agent produces structured output matching that type.

- If the current agent does not have an `output_type`, the loop runs until the current agent produces a message without any tool calls or handoffs.
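The two stopping rules can be written as a single predicate. This is a sketch under simplifying assumptions: `ModelTurn`, `WeatherReport`, and `is_final_output` are hypothetical names, and a plain `isinstance` check stands in for the structured-output matching the SDK performs.

```python
from dataclasses import dataclass
from typing import Any, Optional


@dataclass
class WeatherReport:
    """Example structured output type (hypothetical)."""
    city: str
    condition: str


@dataclass
class ModelTurn:
    """Stand-in for one LLM response; not an SDK class."""
    content: Any = None
    has_tool_calls: bool = False
    has_handoff: bool = False


def is_final_output(turn: ModelTurn, output_type: Optional[type] = None) -> bool:
    """Decide whether the loop should stop after this turn."""
    if output_type is not None:
        # Structured case: stop only when the content matches the declared type.
        return isinstance(turn.content, output_type)
    # Plain-text case: stop at the first message with no tool calls or handoffs.
    return (
        isinstance(turn.content, str)
        and not turn.has_tool_calls
        and not turn.has_handoff
    )
```

For example, a plain-text turn is final for an agent with no `output_type`, but the same turn is not final for an agent declared with `output_type=WeatherReport`.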

The Agents SDK is designed to be highly flexible, allowing you to model a wide range of LLM workflows including deterministic flows, iterative loops, and more. See examples in examples/agent_patterns.

The Agents SDK automatically traces your agent runs, making it easy to track and debug the behavior of your agents. Tracing is extensible by design, supporting custom spans and a wide variety of external destinations, including Logfire, AgentOps, Braintrust, Scorecard, and Keywords AI. For more details about how to customize or disable tracing, see Tracing, which also includes a larger list of external tracing processors.

You can use the Agents SDK Temporal integration to run durable, long-running workflows, including human-in-the-loop tasks. View a demo of Temporal and the Agents SDK working in action to complete long-running tasks in this video, and view docs here.

The Agents SDK provides built-in session memory to automatically maintain conversation history across multiple agent runs, eliminating the need to manually handle .to_input_list() between turns.

```python
from agents import Agent, Runner, SQLiteSession

# Note: the `await` calls below assume an async context
# (for example, inside an `async def` function or a notebook cell).

# Create agent
agent = Agent(
    name="Assistant",
    instructions="Reply very concisely.",
)

# Create a session instance
session = SQLiteSession("conversation_123")

# First turn
result = await Runner.run(
    agent,
    "What city is the Golden Gate Bridge in?",
    session=session
)
print(result.final_output)  # "San Francisco"

# Second turn - agent automatically remembers previous context
result = await Runner.run(
    agent,
    "What state is it in?",
    session=session
)
print(result.final_output)  # "California"

# Also works with synchronous runner (call this from non-async code)
result = Runner.run_sync(
    agent,
    "What's the population?",
    session=session
)
print(result.final_output)  # "Approximately 39 million"
```

- No memory (default): No session memory when the `session` parameter is omitted

- `session: Session = DatabaseSession(...)`: Use a Session instance to manage conversation history
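To make concrete what a session does between turns, here is a minimal list-backed sketch. It borrows the method names of the Session protocol shown later in this section (`get_items`, `add_items`, `pop_item`, `clear_session`); `InMemorySession` and `run_turn` are hypothetical names, and the "agent" is a fake that only echoes, so this shows the bookkeeping rather than the SDK's own behavior.

```python
import asyncio
from typing import Optional


class InMemorySession:
    """Illustrative list-backed session; not an SDK class."""

    def __init__(self, session_id: str):
        self.session_id = session_id
        self._items = []

    async def get_items(self, limit: Optional[int] = None) -> list:
        # Return a copy of the history, or only the most recent `limit` items.
        if limit is None:
            return list(self._items)
        if limit <= 0:
            return []
        return self._items[-limit:]

    async def add_items(self, items: list) -> None:
        self._items.extend(items)

    async def pop_item(self) -> Optional[dict]:
        # Remove and return the most recent item, if any.
        return self._items.pop() if self._items else None

    async def clear_session(self) -> None:
        self._items.clear()


async def run_turn(session: InMemorySession, user_text: str) -> str:
    """Fake agent turn: load history, produce a reply, persist both messages."""
    history = await session.get_items()
    reply = f"(reply #{len(history) // 2 + 1} to: {user_text})"
    await session.add_items([
        {"role": "user", "content": user_text},
        {"role": "assistant", "content": reply},
    ])
    return reply


async def demo() -> list:
    session = InMemorySession("conversation_123")
    await run_turn(session, "What city is the Golden Gate Bridge in?")
    await run_turn(session, "What state is it in?")
    return await session.get_items()


items = asyncio.run(demo())
print(len(items))  # 4: two user messages and two assistant replies
```

Because the second turn reads the stored items before replying, the caller never passes earlier messages by hand, which is the bookkeeping the `session` parameter takes over.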

```python
from agents import Agent, Runner, SQLiteSession

# The `await` calls assume an async context (e.g. inside an `async def`).

# Custom SQLite database file
session = SQLiteSession("user_123", "conversations.db")
agent = Agent(name="Assistant")

# Different session IDs maintain separate conversation histories
result1 = await Runner.run(
    agent,
    "Hello",
    session=session
)
result2 = await Runner.run(
    agent,
    "Hello",
    session=SQLiteSession("user_456", "conversations.db")
)
```

You can implement your own session memory by creating a class that follows the `Session` protocol:

```python
from agents import Agent, Runner
from agents.memory import Session
from typing import List


class MyCustomSession:
    """Custom session implementation following the Session protocol."""

    def __init__(self, session_id: str):
        self.session_id = session_id
        # Your initialization here

    async def get_items(self, limit: int | None = None) -> List[dict]:
        # Retrieve conversation history for the session
        pass

    async def add_items(self, items: List[dict]) -> None:
        # Store new items for the session
        pass

    async def pop_item(self) -> dict | None:
        # Remove and return the most recent item from the session
        pass

    async def clear_session(self) -> None:
        # Clear all items for the session
        pass


# Use your custom session (the `await` assumes an async context)
agent = Agent(name="Assistant")
result = await Runner.run(
    agent,
    "Hello",
    session=MyCustomSession("my_session")
)
```

- Ensure you have `uv` installed.

```bash
uv --version
```

- Install dependencies

```bash
make sync
```

- (After making changes) lint/test

```bash
make check # run tests, linter, and typechecker
```

Or to run them individually:

```bash
make tests  # run tests
make mypy   # run typechecker
make lint   # run linter
make format-check # run style checker
```

We'd like to acknowledge the excellent work of the open-source community, especially:

- Pydantic (data validation) and PydanticAI (advanced agent framework)

- LiteLLM (unified interface for 100+ LLMs)

- MkDocs

- Griffe

- uv and ruff

We're committed to continuing to build the Agents SDK as an open source framework so others in the community can expand on our approach.