- Notifications

You must be signed in to change notification settings - Fork 179

Feature: Adding support for processing and searching pdfs inside google drive #94

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Feature: Adding support for processing and searching pdfs inside google drive #94

Conversation

app/indexing/index_documents.py Outdated

| | ||

| | ||

| @staticmethod | ||

| def _split_PDF_into_paragraphs(texts:List[PDFDocument],minimum_length=256): |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

please move this to pdf_parser, class BasicDocument's content should stay :str

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@Roey7 i refactored , should be now resolved , moved it into pdf parser file and refactored the logic

| type: DocumentType | ||

| title: str | ||

| content: str | ||

| content: Union[str, List[PDFDocument]] |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

should be str.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@Roey7 reverted and changed back to as it was str

| loader = PyPDFLoader(input_filename) | ||

| documents = loader.load() | ||

| text_split = CharacterTextSplitter(chunk_size=100, chunk_overlap=0) | ||

| texts = text_split.split_documents(documents) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

add here the pdf->text, this func should just return str

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@Roey7 refactored and now pdf_to_text function returns a str

| python-dateutil | ||

| httplib2 | ||

| pypdf | ||

| pycryptodome |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

are you sure all of these needed?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@Roey7 yes they are required by pypdf and langchain

app/indexing/index_documents.py Outdated

| if document.file_type != FileType.PDF: | ||

| paragraphs = Indexer._split_into_paragraphs(document.content) | ||

| else: | ||

| paragraphs = split_PDF_into_paragraphs(document.content) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

now when content is always simple str, is this needed?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@Roey7 yes because the way i split documents is different than the other one , split_PDF_into_paragraphs is different than this Indexer._split_into_paragraphs so i have to check the file type

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@Roey7 with your above suggestion to move the split_PDF_into_paragraphs function to the pdf parser module it is important to do this check

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@Roey7 reverted back after discussions

…n works after changing the delimeter

Summary of Changes:

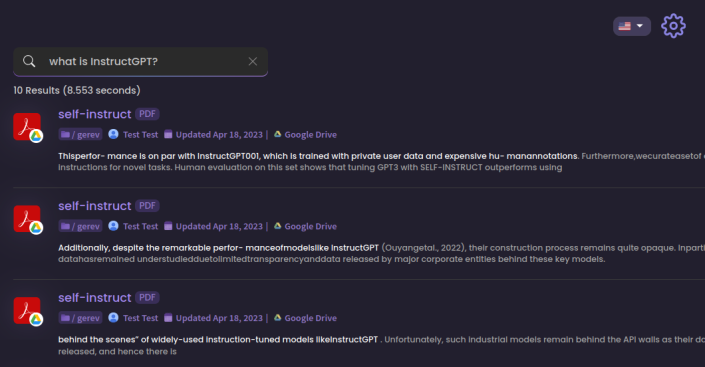

What it should look like: