1. Three officially supported deployment methods

minikube

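Minikube is a tool that quickly runs a single-node Kubernetes cluster locally. It is intended only for users who want to try Kubernetes or use it for day-to-day development. Deployment guide: https://kubernetes.io/docs/setup/minikube/

kubeadm

Kubeadm is also a tool. It provides kubeadm init and kubeadm join for quickly deploying a Kubernetes cluster. Deployment guide: https://kubernetes.io/docs/reference/setup-tools/kubeadm/kubeadm/

Binary packages

Recommended. Download the release binary packages from the official repository and deploy each component by hand to form a Kubernetes cluster. Download address: https://github.com/kubernetes/kubernetes/releases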

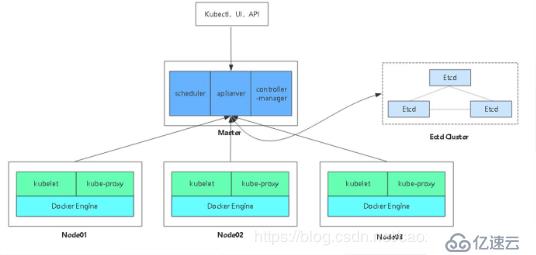

Single-master cluster architecture diagram

| Component | Certificates used |
|---|---|
| etcd | ca.pem, server.pem, server-key.pem |
| flannel | ca.pem, server.pem, server-key.pem |
| kube-apiserver | ca.pem, server.pem, server-key.pem |
| kubelet | ca.pem, ca-key.pem |
| kube-proxy | ca.pem, kube-proxy.pem, kube-proxy-key.pem |
| kubectl | ca.pem, admin.pem, admin-key.pem |
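Before starting a component it can be useful to confirm that the certificate files listed for it in the table are actually in place. The following is a minimal sketch, not part of the original procedure: `missing_certs` is a hypothetical helper name, and `/opt/kubernetes/ssl` is the certificate directory used later in this walkthrough.

```shell
#!/usr/bin/env bash
# missing_certs DIR FILE... prints every FILE that does not exist in DIR.
# Hypothetical helper for illustration; it is not part of any Kubernetes tool.
missing_certs() {
  local dir=$1
  shift
  local f
  for f in "$@"; do
    [ -f "$dir/$f" ] || echo "$f"
  done
}

# Example: the kube-apiserver row of the table.
# Prints nothing when all three files are present.
missing_certs /opt/kubernetes/ssl ca.pem server.pem server-key.pem
```

An empty result means every listed file exists; each printed name is a certificate that still has to be generated or copied.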

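etcd is an open-source project started by the CoreOS team in June 2013. Its goal is to build a highly available distributed key-value store. Internally etcd uses the Raft protocol as its consensus algorithm, and it is implemented in Go.

As a service discovery system, etcd has the following characteristics:

Simple: it is easy to install and configure, and it exposes an HTTP API, so it is also easy to use

Secure: it supports SSL certificate verification

Fast: according to the official benchmark data, a single instance supports 2k+ reads per second

Reliable: it uses the Raft algorithm to provide availability and consistency for data in a distributed system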
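The reliability point rests on Raft's majority rule: a write is committed once more than half of the members have accepted it, so a cluster keeps working as long as a majority is alive. The arithmetic can be sketched as follows; `raft_quorum` and `raft_fault_tolerance` are hypothetical helper names used only for illustration.

```shell
#!/usr/bin/env bash
# Majority needed to commit a write in a cluster of N members.
raft_quorum() {
  echo $(( $1 / 2 + 1 ))
}

# Number of members that can fail while a majority still remains.
raft_fault_tolerance() {
  echo $(( ($1 - 1) / 2 ))
}

for n in 1 3 5; do
  echo "members=$n quorum=$(raft_quorum "$n") tolerated_failures=$(raft_fault_tolerance "$n")"
done
```

For the three-member etcd cluster built in this article the quorum is 2, so one member can be lost. An even member count adds no tolerance (4 members still tolerate only 1 failure), which is why odd sizes are the usual choice.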

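A strongly consistent, highly available service storage directory.

Because it is built on the Raft algorithm, etcd is by nature exactly such a strongly consistent, highly available service storage directory.

A mechanism for registering services and monitoring their health.

Users can register services in etcd and set a key TTL on each registered service. The service refreshes its key periodically as a heartbeat, which gives the effect of health monitoring.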
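The TTL heartbeat described above can be sketched with the v2 `etcdctl set --ttl` command (the etcd 3.3 release used in this article defaults to the v2 API). This is an illustration under assumptions, not the author's procedure: `register_service`, `heartbeat`, and the key `/services/web` are hypothetical names, and against the TLS-enabled cluster built here `ETCDCTL` would also need the certificate and `--endpoints` flags.

```shell
#!/usr/bin/env bash
# ETCDCTL may carry extra flags (for example TLS and --endpoints flags),
# so it is expanded unquoted on purpose.
ETCDCTL=${ETCDCTL:-/opt/etcd/bin/etcdctl}

# Write the service key with a TTL; etcd removes it once the TTL expires.
register_service() {
  local key=$1 value=$2 ttl=$3
  $ETCDCTL set --ttl "$ttl" "$key" "$value"
}

# Re-register the key BEATS times, sleeping INTERVAL seconds in between.
# Choosing INTERVAL smaller than TTL keeps the key alive while the
# service is healthy; when the service stops, the key simply expires.
heartbeat() {
  local key=$1 value=$2 ttl=$3 beats=$4 interval=$5
  local i=0
  while [ "$i" -lt "$beats" ]; do
    register_service "$key" "$value" "$ttl" || return 1
    i=$((i + 1))
    if [ "$i" -lt "$beats" ]; then
      sleep "$interval"
    fi
  done
  return 0
}

# Usage (hypothetical key and address):
#   heartbeat /services/web 192.168.142.130:80 30 10 10
```

A watcher that no longer finds the key can treat the service as down, since only a live service keeps refreshing it.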

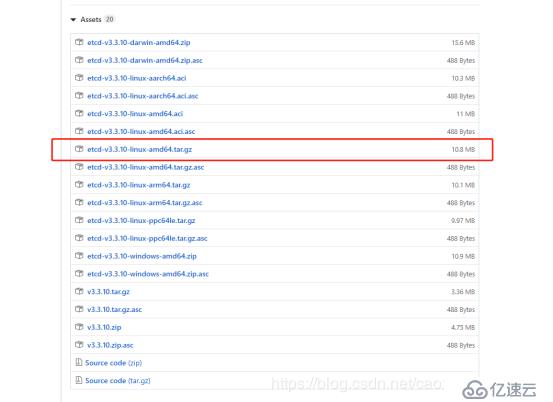

Binary package download address

https://github.com/etcd-io/etcd/releases

Command for checking cluster health (replace each x with a node address):

    /opt/etcd/bin/etcdctl \
      --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem \
      --endpoints="https://192.168.0.x:2379,https://192.168.0.x:2379,https://192.168.0.x:2379" \
      cluster-health
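The `--endpoints` value is a comma-separated list with one `https://IP:2379` entry per etcd member, and it is easy to mistype by hand. A small sketch of building it from the member addresses follows; `etcd_endpoints` is a hypothetical helper name, and the three addresses are the hosts used later in this article.

```shell
#!/usr/bin/env bash
# etcd_endpoints PORT IP... prints "https://IP:PORT" entries joined by commas.
etcd_endpoints() {
  local port=$1
  shift
  local out="" ip
  for ip in "$@"; do
    # ${out:+$out,} adds a separating comma only after the first entry.
    out="${out:+$out,}https://$ip:$port"
  done
  echo "$out"
}

etcd_endpoints 2379 192.168.142.129 192.168.142.130 192.168.142.131
```

The result can be passed directly, for example `--endpoints="$(etcd_endpoints 2379 ...)"`. Calling it with port 2380 gives the peer URLs in the same format.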

| Host | Software to install |
|---|---|
| master (192.168.142.129/24) | kube-apiserver, kube-controller-manager, kube-scheduler, etcd |
| node01 (192.168.142.130/24) | kubelet, kube-proxy, docker, flannel, etcd |
| node02 (192.168.142.131/24) | kubelet, kube-proxy, docker, flannel, etcd |

Kubernetes official site: https://kubernetes.io/

etcd binary packages: https://github.com/etcd-io/etcd/releases

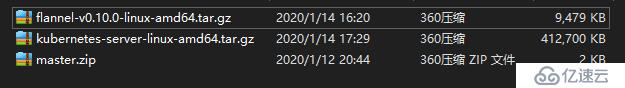

Copy the archives above into the k8s directory on CentOS 7 that is created in the steps below.

Resource package link:

https://pan.baidu.com/s/1QGvhsAVmv2SmbrWMGc3Bng (extraction code: mlh5)

Create the working directory and move the etcd certificate script into it:

    mkdir k8s
    cd k8s/
    mkdir etcd-cert
    mv etcd-cert.sh etcd-cert

Write cfssl.sh, which downloads the cfssl tools, then run it:

    vim cfssl.sh

    curl -L https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 -o /usr/local/bin/cfssl
    curl -L https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 -o /usr/local/bin/cfssljson
    curl -L https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64 -o /usr/local/bin/cfssl-certinfo
    chmod +x /usr/local/bin/cfssl /usr/local/bin/cfssljson /usr/local/bin/cfssl-certinfo

    bash cfssl.sh

cfssl is the certificate generation tool, cfssljson generates certificates from the JSON passed to it, and cfssl-certinfo displays certificate information.

Define and generate the CA certificate:

    cd etcd-cert/

    cat > ca-config.json <<EOF
    {
      "signing": {
        "default": {
          "expiry": "87600h"
        },
        "profiles": {
          "www": {
            "expiry": "87600h",
            "usages": [
              "signing",
              "key encipherment",
              "server auth",
              "client auth"
            ]
          }
        }
      }
    }
    EOF

    cat > ca-csr.json <<EOF
    {
      "CN": "etcd CA",
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "L": "Beijing",
          "ST": "Beijing"
        }
      ]
    }
    EOF

    cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

Define and generate the etcd server certificate; hosts lists the three etcd members:

    cat > server-csr.json <<EOF
    {
      "CN": "etcd",
      "hosts": [
        "192.168.142.129",
        "192.168.142.130",
        "192.168.142.131"
      ],
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "L": "BeiJing",
          "ST": "BeiJing"
        }
      ]
    }
    EOF

    cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=www server-csr.json | cfssljson -bare server

Unpack etcd and install the binaries and certificates under /opt/etcd:

    tar zxvf etcd-v3.3.10-linux-amd64.tar.gz
    mkdir /opt/etcd/{cfg,bin,ssl} -p
    mv etcd-v3.3.10-linux-amd64/etcd etcd-v3.3.10-linux-amd64/etcdctl /opt/etcd/bin/
    cp etcd-cert/*.pem /opt/etcd/ssl/

Start the first member on the master and check the process:

    bash etcd.sh etcd01 192.168.142.129 etcd02=https://192.168.142.130:2380,etcd03=https://192.168.142.131:2380
    ps -ef | grep etcd

Copy the etcd directory and the systemd unit to both nodes:

    scp -r /opt/etcd/ root@192.168.142.130:/opt/
    scp -r /opt/etcd/ root@192.168.142.131:/opt/
    scp /usr/lib/systemd/system/etcd.service root@192.168.142.130:/usr/lib/systemd/system/
    scp /usr/lib/systemd/system/etcd.service root@192.168.142.131:/usr/lib/systemd/system/

On node01, edit the configuration and start etcd:

    vim /opt/etcd/cfg/etcd

    #[Member]
    ETCD_NAME="etcd02"
    ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
    ETCD_LISTEN_PEER_URLS="https://192.168.142.130:2380"
    ETCD_LISTEN_CLIENT_URLS="https://192.168.142.130:2379"

    #[Clustering]
    ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.142.130:2380"
    ETCD_ADVERTISE_CLIENT_URLS="https://192.168.142.130:2379"
    ETCD_INITIAL_CLUSTER="etcd01=https://192.168.142.129:2380,etcd02=https://192.168.142.130:2380,etcd03=https://192.168.142.131:2380"
    ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
    ETCD_INITIAL_CLUSTER_STATE="new"

    systemctl start etcd
    systemctl status etcd

On node02, do the same:

    vim /opt/etcd/cfg/etcd

    #[Member]
    ETCD_NAME="etcd03"
    ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
    ETCD_LISTEN_PEER_URLS="https://192.168.142.131:2380"
    ETCD_LISTEN_CLIENT_URLS="https://192.168.142.131:2379"

    #[Clustering]
    ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.142.131:2380"
    ETCD_ADVERTISE_CLIENT_URLS="https://192.168.142.131:2379"
    ETCD_INITIAL_CLUSTER="etcd01=https://192.168.142.129:2380,etcd02=https://192.168.142.130:2380,etcd03=https://192.168.142.131:2380"
    ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
    ETCD_INITIAL_CLUSTER_STATE="new"

    systemctl start etcd
    systemctl status etcd

Check cluster health:

    /opt/etcd/bin/etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints="https://192.168.142.129:2379,https://192.168.142.130:2379,https://192.168.142.131:2379" cluster-health

    member 3eae9a550e2e3ec is healthy: got healthy result from https://192.168.142.129:2379
    member 26cd4dcf17bc5cbd is healthy: got healthy result from https://192.168.142.130:2379
    member 2fcd2df8a9411750 is healthy: got healthy result from https://192.168.142.131:2379
    cluster is healthy

Install Docker on the nodes:

    # Install dependencies
    yum install yum-utils device-mapper-persistent-data lvm2 -y
    # Add the Aliyun mirror repository
    yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
    # Install docker-ce
    yum install -y docker-ce
    # Stop the firewall and set SELinux to permissive mode
    systemctl stop firewalld.service
    setenforce 0
    # Start Docker and enable it at boot
    systemctl start docker.service
    systemctl enable docker.service
    # Check that the processes are running
    ps aux | grep docker
    # Reload the systemd daemon
    systemctl daemon-reload
    # Restart the service
    systemctl restart docker

Write the flannel network configuration into etcd and read it back:

    /opt/etcd/bin/etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints="https://192.168.142.129:2379,https://192.168.142.130:2379,https://192.168.142.131:2379" set /coreos.com/network/config '{ "Network": "172.17.0.0/16", "Backend": {"Type": "vxlan"}}'

    /opt/etcd/bin/etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints="https://192.168.142.129:2379,https://192.168.142.130:2379,https://192.168.142.131:2379" get /coreos.com/network/config

Copy the flannel package to both nodes:

    cd /root/k8s
    scp flannel-v0.10.0-linux-amd64.tar.gz root@192.168.142.130:/root
    scp flannel-v0.10.0-linux-amd64.tar.gz root@192.168.142.131:/root

On each node, unpack flannel and write flannel.sh:

    tar zxvf flannel-v0.10.0-linux-amd64.tar.gz
    mkdir /opt/kubernetes/{cfg,bin,ssl} -p
    mv mk-docker-opts.sh flanneld /opt/kubernetes/bin/

    vim flannel.sh

    #!/bin/bash
    ETCD_ENDPOINTS=${1:-"http://127.0.0.1:2379"}

    cat <<EOF >/opt/kubernetes/cfg/flanneld
    FLANNEL_OPTIONS="--etcd-endpoints=${ETCD_ENDPOINTS} \
    -etcd-cafile=/opt/etcd/ssl/ca.pem \
    -etcd-certfile=/opt/etcd/ssl/server.pem \
    -etcd-keyfile=/opt/etcd/ssl/server-key.pem"
    EOF

    cat <<EOF >/usr/lib/systemd/system/flanneld.service
    [Unit]
    Description=Flanneld overlay address etcd agent
    After=network-online.target network.target
    Before=docker.service

    [Service]
    Type=notify
    EnvironmentFile=/opt/kubernetes/cfg/flanneld
    ExecStart=/opt/kubernetes/bin/flanneld --ip-masq \$FLANNEL_OPTIONS
    ExecStartPost=/opt/kubernetes/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/subnet.env
    Restart=on-failure

    [Install]
    WantedBy=multi-user.target
    EOF

    systemctl daemon-reload
    systemctl enable flanneld
    systemctl restart flanneld

Run the script with the etcd client endpoints:

    bash flannel.sh https://192.168.142.129:2379,https://192.168.142.130:2379,https://192.168.142.131:2379

Edit the Docker unit so that dockerd picks up the flannel subnet:

    vim /usr/lib/systemd/system/docker.service

    [Service]
    Type=notify
    # the default is not to use systemd for cgroups because the delegate issues still
    # exists and systemd currently does not support the cgroup feature set required
    # for containers run by docker
    # Insert the following entry before the ExecStart line (line 14)
    EnvironmentFile=/run/flannel/subnet.env
    # Reference the $DOCKER_NETWORK_OPTIONS parameter
    ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS -H fd:// --containerd=/run/containerd/containerd.sock
    ExecReload=/bin/kill -s HUP $MAINPID
    TimeoutSec=0
    RestartSec=2
    Restart=always

View the network information (bip specifies the subnet used at startup):

    cat /run/flannel/subnet.env

    DOCKER_OPT_BIP="--bip=172.17.15.1/24"
    DOCKER_OPT_IPMASQ="--ip-masq=false"
    DOCKER_OPT_MTU="--mtu=1450"
    DOCKER_NETWORK_OPTIONS=" --bip=172.17.15.1/24 --ip-masq=false --mtu=1450"

Restart Docker and check the interfaces:

    systemctl daemon-reload
    systemctl restart docker

    [root@localhost ~]# ifconfig
    docker0: flags=4099<UP,BROADCAST,MULTICAST>  mtu 1500
            inet 172.17.56.1  netmask 255.255.255.0  broadcast 172.17.56.255
            ether 02:42:74:32:33:e3  txqueuelen 0  (Ethernet)
            RX packets 0  bytes 0 (0.0 B)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 0  bytes 0 (0.0 B)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

    ens33: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
            inet 192.168.142.130  netmask 255.255.255.0  broadcast 192.168.142.255
            inet6 fe80::8cb8:16f4:91a1:28d5  prefixlen 64  scopeid 0x20<link>
            ether 00:0c:29:04:f1:1f  txqueuelen 1000  (Ethernet)
            RX packets 436817  bytes 153162687 (146.0 MiB)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 375079  bytes 47462997 (45.2 MiB)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

    flannel.1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1450
            inet 172.17.56.0  netmask 255.255.255.255  broadcast 0.0.0.0
            inet6 fe80::249c:c8ff:fec0:4baf  prefixlen 64  scopeid 0x20<link>
            ether 26:9c:c8:c0:4b:af  txqueuelen 0  (Ethernet)
            RX packets 0  bytes 0 (0.0 B)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 0  bytes 0 (0.0 B)
            TX errors 0  dropped 26 overruns 0  carrier 0  collisions 0

    lo: flags=73<UP,LOOPBACK,RUNNING>  mtu 65536
            inet 127.0.0.1  netmask 255.0.0.0
            inet6 ::1  prefixlen 128  scopeid 0x10<host>
            loop  txqueuelen 1  (Local Loopback)
            RX packets 1915  bytes 117267 (114.5 KiB)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 1915  bytes 117267 (114.5 KiB)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

    virbr0: flags=4099<UP,BROADCAST,MULTICAST>  mtu 1500
            inet 192.168.122.1  netmask 255.255.255.0  broadcast 192.168.122.255
            ether 52:54:00:61:63:f2  txqueuelen 1000  (Ethernet)
            RX packets 0  bytes 0 (0.0 B)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 0  bytes 0 (0.0 B)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

Start a container on each node and test connectivity between the containers:

    docker run -it centos:7 /bin/bash
    yum install net-tools -y

    [root@5f9a65565b53 /]# ifconfig
    eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1450
            inet 172.17.56.2  netmask 255.255.255.0  broadcast 172.17.56.255
            ether 02:42:ac:11:38:02  txqueuelen 0  (Ethernet)
            RX packets 15632  bytes 13894772 (13.2 MiB)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 7987  bytes 435819 (425.6 KiB)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

    lo: flags=73<UP,LOOPBACK,RUNNING>  mtu 65536
            inet 127.0.0.1  netmask 255.0.0.0
            loop  txqueuelen 1  (Local Loopback)
            RX packets 0  bytes 0 (0.0 B)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 0  bytes 0 (0.0 B)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

    [root@f1e937618b50 /]# ping 172.17.15.2
    PING 172.17.15.2 (172.17.15.2) 56(84) bytes of data.
    64 bytes from 172.17.15.2: icmp_seq=1 ttl=62 time=0.420 ms
    64 bytes from 172.17.15.2: icmp_seq=2 ttl=62 time=0.302 ms
    64 bytes from 172.17.15.2: icmp_seq=3 ttl=62 time=0.420 ms
    64 bytes from 172.17.15.2: icmp_seq=4 ttl=62 time=0.364 ms
    64 bytes from 172.17.15.2: icmp_seq=5 ttl=62 time=0.114 ms

1. Self-signing the API server certificates

Unpack the master scripts and create the certificate directory:

    cd k8s/
    unzip master.zip
    mkdir /opt/kubernetes/{cfg,bin,ssl} -p
    # Directory for the self-signed apiserver certificates
    mkdir apiserver
    cd apiserver/

Define the CA certificate:

    # Generate the CA configuration file
    cat > ca-config.json <<EOF
    {
      "signing": {
        "default": {
          "expiry": "87600h"
        },
        "profiles": {
          "kubernetes": {
            "expiry": "87600h",
            "usages": [
              "signing",
              "key encipherment",
              "server auth",
              "client auth"
            ]
          }
        }
      }
    }
    EOF

    # Generate the certificate signing request file
    cat > ca-csr.json <<EOF
    {
      "CN": "kubernetes",
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "L": "Beijing",
          "ST": "Beijing",
          "O": "k8s",
          "OU": "System"
        }
      ]
    }
    EOF

    # Sign the certificate (generates ca.pem and ca-key.pem)
    cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

Define the apiserver certificate. JSON has no comment syntax, so the meaning of each host address is listed here rather than inside the file: 192.168.142.129 is master1, 192.168.142.120 is master2 (for a later two-master setup), 192.168.142.20 is the VIP, 192.168.142.140 is the nginx load balancer (master), and 192.168.142.150 is the load balancer (backup).

    # Generate the apiserver certificate request file
    cat > server-csr.json <<EOF
    {
      "CN": "kubernetes",
      "hosts": [
        "10.0.0.1",
        "127.0.0.1",
        "192.168.142.129",
        "192.168.142.120",
        "192.168.142.20",
        "192.168.142.140",
        "192.168.142.150",
        "kubernetes",
        "kubernetes.default",
        "kubernetes.default.svc",
        "kubernetes.default.svc.cluster",
        "kubernetes.default.svc.cluster.local"
      ],
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "L": "BeiJing",
          "ST": "BeiJing",
          "O": "k8s",
          "OU": "System"
        }
      ]
    }
    EOF

    # Sign the certificate (generates server.pem and server-key.pem)
    cfssl gencert -ca=ca.pem \
      -ca-key=ca-key.pem \
      -config=ca-config.json \
      -profile=kubernetes server-csr.json | cfssljson -bare server

Define the admin certificate:

    cat > admin-csr.json <<EOF
    {
      "CN": "admin",
      "hosts": [],
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "L": "BeiJing",
          "ST": "BeiJing",
          "O": "system:masters",
          "OU": "System"
        }
      ]
    }
    EOF

    # Sign the certificate (generates admin.pem and admin-key.pem)
    cfssl gencert -ca=ca.pem \
      -ca-key=ca-key.pem \
      -config=ca-config.json \
      -profile=kubernetes admin-csr.json | cfssljson -bare admin

Define the kube-proxy certificate:

    cat > kube-proxy-csr.json <<EOF
    {
      "CN": "system:kube-proxy",
      "hosts": [],
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "L": "BeiJing",
          "ST": "BeiJing",
          "O": "k8s",
          "OU": "System"
        }
      ]
    }
    EOF

    # Sign the certificate (generates kube-proxy.pem and kube-proxy-key.pem)
    cfssl gencert -ca=ca.pem \
      -ca-key=ca-key.pem \
      -config=ca-config.json \
      -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

Generate the certificates and copy them into place:

    bash k8s-cert.sh
    cp -p *.pem /opt/kubernetes/ssl/

Unpack the Kubernetes server package and install the master binaries:

    cd ..
    tar zxvf kubernetes-server-linux-amd64.tar.gz
    cd kubernetes/server/bin/
    cp -p kube-apiserver kubectl kube-controller-manager kube-scheduler /opt/kubernetes/bin/

Create the bootstrap token file:

    cd /opt/kubernetes/cfg
    # Generate a random token
    export BOOTSTRAP_TOKEN=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' ')
    cat > token.csv << EOF
    ${BOOTSTRAP_TOKEN},kubelet-bootstrap,10001,"system:kubelet-bootstrap"
    EOF

Write apiserver.sh. The dollar sign in the unit file is escaped so that the variable is expanded by systemd rather than by the heredoc:

    vim apiserver.sh

    #!/bin/bash
    MASTER_ADDRESS=$1
    ETCD_SERVERS=$2

    cat <<EOF >/opt/kubernetes/cfg/kube-apiserver
    KUBE_APISERVER_OPTS="--logtostderr=true \\
    --v=4 \\
    --etcd-servers=${ETCD_SERVERS} \\
    --bind-address=${MASTER_ADDRESS} \\
    --secure-port=6443 \\
    --advertise-address=${MASTER_ADDRESS} \\
    --allow-privileged=true \\
    --service-cluster-ip-range=10.0.0.0/24 \\
    --enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \\
    --authorization-mode=RBAC,Node \\
    --kubelet-https=true \\
    --enable-bootstrap-token-auth \\
    --token-auth-file=/opt/kubernetes/cfg/token.csv \\
    --service-node-port-range=30000-50000 \\
    --tls-cert-file=/opt/kubernetes/ssl/server.pem \\
    --tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \\
    --client-ca-file=/opt/kubernetes/ssl/ca.pem \\
    --service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \\
    --etcd-cafile=/opt/etcd/ssl/ca.pem \\
    --etcd-certfile=/opt/etcd/ssl/server.pem \\
    --etcd-keyfile=/opt/etcd/ssl/server-key.pem"
    EOF

    cat <<EOF >/usr/lib/systemd/system/kube-apiserver.service
    [Unit]
    Description=Kubernetes API Server
    Documentation=https://github.com/GoogleCloudPlatform/kubernetes

    [Service]
    EnvironmentFile=/opt/kubernetes/cfg/kube-apiserver
    ExecStart=/opt/kubernetes/bin/kube-apiserver \$KUBE_APISERVER_OPTS
    Restart=on-failure

    [Install]
    WantedBy=multi-user.target
    EOF

Run the script with the master address and the etcd endpoints:

    bash apiserver.sh 192.168.142.129 https://192.168.142.129:2379,https://192.168.142.130:2379,https://192.168.142.131:2379

The generated /opt/kubernetes/cfg/kube-apiserver then contains:

    KUBE_APISERVER_OPTS="--logtostderr=true \
    --v=4 \
    --etcd-servers=https://192.168.142.129:2379,https://192.168.142.130:2379,https://192.168.142.131:2379 \
    --bind-address=192.168.142.129 \
    --secure-port=6443 \
    --advertise-address=192.168.142.129 \
    --allow-privileged=true \
    --service-cluster-ip-range=10.0.0.0/24 \
    --enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \
    --authorization-mode=RBAC,Node \
    --kubelet-https=true \
    --enable-bootstrap-token-auth \
    --token-auth-file=/opt/kubernetes/cfg/token.csv \
    --service-node-port-range=30000-50000 \
    --tls-cert-file=/opt/kubernetes/ssl/server.pem \
    --tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \
    --client-ca-file=/opt/kubernetes/ssl/ca.pem \
    --service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \
    --etcd-cafile=/opt/etcd/ssl/ca.pem \
    --etcd-certfile=/opt/etcd/ssl/server.pem \
    --etcd-keyfile=/opt/etcd/ssl/server-key.pem"

Start the service:

    systemctl daemon-reload
    systemctl start kube-apiserver
    systemctl status kube-apiserver
    systemctl enable kube-apiserver
    # Check the service processes
    ps aux | grep kube

2. Deploying the Controller-Manager service

Install the binary and write the options file:

    cd /k8s/kubernetes/server/bin
    # Copy the binary
    cp -p kube-controller-manager /opt/kubernetes/bin/

    cat <<EOF >/opt/kubernetes/cfg/kube-controller-manager
    KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=true \
    --v=4 \
    --master=127.0.0.1:8080 \
    --leader-elect=true \
    --address=127.0.0.1 \
    --service-cluster-ip-range=10.0.0.0/24 \
    --cluster-name=kubernetes \
    --cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem \
    --cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem \
    --root-ca-file=/opt/kubernetes/ssl/ca.pem \
    --service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem"
    EOF

Create the systemd unit:

    cat <<EOF >/usr/lib/systemd/system/kube-controller-manager.service
    [Unit]
    Description=Kubernetes Controller Manager
    Documentation=https://github.com/kubernetes/kubernetes

    [Service]
    EnvironmentFile=/opt/kubernetes/cfg/kube-controller-manager
    ExecStart=/opt/kubernetes/bin/kube-controller-manager \$KUBE_CONTROLLER_MANAGER_OPTS
    Restart=on-failure

    [Install]
    WantedBy=multi-user.target
    EOF

Start the service:

    chmod +x /usr/lib/systemd/system/kube-controller-manager.service
    # Reload systemd so that it picks up the new unit file
    systemctl daemon-reload
    systemctl start kube-controller-manager
    systemctl status kube-controller-manager
    systemctl enable kube-controller-manager
    # Check the service ports
    netstat -atnp | grep kube-controll

3. Deploying the Scheduler service

Install the binary and write the options file:

    cd /k8s/kubernetes/server/bin
    # Copy the binary
    cp -p kube-scheduler /opt/kubernetes/bin/

    cat <<EOF >/opt/kubernetes/cfg/kube-scheduler
    KUBE_SCHEDULER_OPTS="--logtostderr=true \
    --v=4 \
    --master=127.0.0.1:8080 \
    --leader-elect"
    EOF

Create the systemd unit:

    cat <<EOF >/usr/lib/systemd/system/kube-scheduler.service
    [Unit]
    Description=Kubernetes Scheduler
    Documentation=https://github.com/kubernetes/kubernetes

    [Service]
    EnvironmentFile=-/opt/kubernetes/cfg/kube-scheduler
    ExecStart=/opt/kubernetes/bin/kube-scheduler \$KUBE_SCHEDULER_OPTS
    Restart=on-failure

    [Install]
    WantedBy=multi-user.target
    EOF

Start the service:

    chmod +x /usr/lib/systemd/system/kube-scheduler.service
    systemctl daemon-reload
    systemctl start kube-scheduler
    systemctl status kube-scheduler
    systemctl enable kube-scheduler
    # Check the service ports
    netstat -atnp | grep schedule

Check the component status. After a successful deployment every entry should be Healthy:

    /opt/kubernetes/bin/kubectl get cs

    NAME                 STATUS    MESSAGE              ERROR
    scheduler            Healthy   ok
    controller-manager   Healthy   ok
    etcd-0               Healthy   {"health":"true"}
    etcd-1               Healthy   {"health":"true"}
    etcd-2               Healthy   {"health":"true"}

4. Deploying kubelet and kube-proxy

On the master, push the node binaries to both nodes:

    cd kubernetes/server/bin
    scp -p kubelet kube-proxy root@192.168.142.130:/opt/kubernetes/bin/
    scp -p kubelet kube-proxy root@192.168.142.131:/opt/kubernetes/bin/

Create bootstrap.kubeconfig:

    cd /root/k8s/kubernetes/
    # API entry point; point it at this host (kube-apiserver must be installed here)
    export KUBE_APISERVER="https://192.168.142.129:6443"

    # Set the cluster
    /opt/kubernetes/bin/kubectl config set-cluster kubernetes \
      --certificate-authority=/opt/kubernetes/ssl/ca.pem \
      --embed-certs=true \
      --server=${KUBE_APISERVER} \
      --kubeconfig=/k8s/kubeconfig/bootstrap.kubeconfig

    # Set the client credentials
    /opt/kubernetes/bin/kubectl config set-credentials kubelet-bootstrap \
      --token=${BOOTSTRAP_TOKEN} \
      --kubeconfig=/k8s/kubeconfig/bootstrap.kubeconfig

    # Set the context parameters
    /opt/kubernetes/bin/kubectl config set-context default \
      --cluster=kubernetes \
      --user=kubelet-bootstrap \
      --kubeconfig=/k8s/kubeconfig/bootstrap.kubeconfig

    # Set the default context
    /opt/kubernetes/bin/kubectl config use-context default \
      --kubeconfig=/k8s/kubeconfig/bootstrap.kubeconfig

Create kube-proxy.kubeconfig. The cluster CA here is the Kubernetes CA that signed the apiserver certificate (the original text pointed at /opt/etcd/ssl/ca.pem, which is the etcd CA):

    # Set the cluster
    /opt/kubernetes/bin/kubectl config set-cluster kubernetes \
      --certificate-authority=/opt/kubernetes/ssl/ca.pem \
      --embed-certs=true \
      --server=${KUBE_APISERVER} \
      --kubeconfig=/k8s/kubeconfig/kube-proxy.kubeconfig

    # Set the client credentials
    /opt/kubernetes/bin/kubectl config set-credentials kube-proxy \
      --client-certificate=/opt/kubernetes/ssl/kube-proxy.pem \
      --client-key=/opt/kubernetes/ssl/kube-proxy-key.pem \
      --embed-certs=true \
      --kubeconfig=/k8s/kubeconfig/kube-proxy.kubeconfig

    # Set the context parameters
    /opt/kubernetes/bin/kubectl config set-context default \
      --cluster=kubernetes \
      --user=kube-proxy \
      --kubeconfig=/k8s/kubeconfig/kube-proxy.kubeconfig

    # Set the default context
    /opt/kubernetes/bin/kubectl config use-context default \
      --kubeconfig=/k8s/kubeconfig/kube-proxy.kubeconfig

Copy both kubeconfig files to the nodes:

    cd /k8s/kubeconfig
    scp bootstrap.kubeconfig kube-proxy.kubeconfig root@192.168.142.130:/opt/kubernetes/cfg/
    scp bootstrap.kubeconfig kube-proxy.kubeconfig root@192.168.142.131:/opt/kubernetes/cfg/

Add kubectl to PATH and bind the bootstrap user to the node-bootstrapper role:

    echo "export PATH=\$PATH:/opt/kubernetes/bin/" >> /etc/profile
    source /etc/profile

    kubectl create clusterrolebinding kubelet-bootstrap \
      --clusterrole=system:node-bootstrapper \
      --user=kubelet-bootstrap

1. Installing kubelet

On each node, write the kubelet options and configuration files. The two variables are not defined in the original text, so they are set explicitly here:

    # This node's own address (192.168.142.131 on node02)
    NODE_ADDRESS=192.168.142.130
    # Assumption: cluster DNS address inside the 10.0.0.0/24 service range; the source does not give a value
    DNS_SERVER_IP=10.0.0.2

    cat <<EOF >/opt/kubernetes/cfg/kubelet
    KUBELET_OPTS="--logtostderr=true \\
    --v=4 \\
    --hostname-override=${NODE_ADDRESS} \\
    --kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \\
    --bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig \\
    --config=/opt/kubernetes/cfg/kubelet.config \\
    --cert-dir=/opt/kubernetes/ssl \\
    --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0"
    EOF

    cat <<EOF >/opt/kubernetes/cfg/kubelet.config
    kind: KubeletConfiguration
    apiVersion: kubelet.config.k8s.io/v1beta1
    address: ${NODE_ADDRESS}
    port: 10250
    readOnlyPort: 10255
    cgroupDriver: cgroupfs
    clusterDNS:
    - ${DNS_SERVER_IP}
    clusterDomain: cluster.local.
    failSwapOn: false
    authentication:
      anonymous:
        enabled: true
    EOF

Create the systemd unit:

    cat <<EOF >/usr/lib/systemd/system/kubelet.service
    [Unit]
    Description=Kubernetes Kubelet
    After=docker.service
    Requires=docker.service

    [Service]
    EnvironmentFile=/opt/kubernetes/cfg/kubelet
    ExecStart=/opt/kubernetes/bin/kubelet \$KUBELET_OPTS
    Restart=on-failure
    KillMode=process

    [Install]
    WantedBy=multi-user.target
    EOF

Start the service:

    chmod +x /usr/lib/systemd/system/kubelet.service
    systemctl daemon-reload
    systemctl enable kubelet
    systemctl restart kubelet

On the master, check the signing request, approve it, and list the nodes:

    # Check the certificate signing requests
    kubectl get csr

    NAME                                                   AGE   REQUESTOR           CONDITION
    node-csr-NOI-9vufTLIqJgMWq4fHPNPHKbjCXlDGHptj7FqTa8A   55s   kubelet-bootstrap   Approved,Issued

    kubectl certificate approve node-csr-NOI-9vufTLIqJgMWq4fHPNPHKbjCXlDGHptj7FqTa8A

    kubectl get nodes

2. Installing kube-proxy

On each node, write the kube-proxy options file (use the node's own address for --hostname-override):

    cat <<EOF >/opt/kubernetes/cfg/kube-proxy
    KUBE_PROXY_OPTS="--logtostderr=true \\
    --v=4 \\
    --hostname-override=192.168.142.130 \\
    --cluster-cidr=10.0.0.0/24 \\
    --proxy-mode=ipvs \\
    --kubeconfig=/opt/kubernetes/cfg/kube-proxy.kubeconfig"
    EOF

Create the systemd unit:

    cat <<EOF >/usr/lib/systemd/system/kube-proxy.service
    [Unit]
    Description=Kubernetes Proxy
    After=network.target

    [Service]
    EnvironmentFile=-/opt/kubernetes/cfg/kube-proxy
    ExecStart=/opt/kubernetes/bin/kube-proxy \$KUBE_PROXY_OPTS
    Restart=on-failure

    [Install]
    WantedBy=multi-user.target
    EOF

Start the service:

    chmod +x /usr/lib/systemd/system/kube-proxy.service
    systemctl daemon-reload
    systemctl enable kube-proxy
    systemctl restart kube-proxy
    # Check the service ports
    netstat -atnp | grep proxy
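The kube-proxy options file hard-codes 192.168.142.130 as the hostname override, so it has to be edited by hand on the second node. One way to avoid that is to render the file from a parameter. This is a sketch under assumptions: `render_kube_proxy_opts` is a hypothetical helper name, and the remaining options are copied unchanged from the options file in this section.

```shell
#!/usr/bin/env bash
# render_kube_proxy_opts NODE_IP OUT_FILE
# Writes the kube-proxy options file with NODE_IP as the hostname override.
render_kube_proxy_opts() {
  local node_ip=$1 out_file=$2
  # In an unquoted heredoc "\\" becomes a single backslash, which systemd
  # reads as a line continuation inside the EnvironmentFile.
  cat > "$out_file" <<EOF
KUBE_PROXY_OPTS="--logtostderr=true \\
--v=4 \\
--hostname-override=${node_ip} \\
--cluster-cidr=10.0.0.0/24 \\
--proxy-mode=ipvs \\
--kubeconfig=/opt/kubernetes/cfg/kube-proxy.kubeconfig"
EOF
}

# Usage on node02:
#   render_kube_proxy_opts 192.168.142.131 /opt/kubernetes/cfg/kube-proxy
```

After rendering, the same daemon-reload and restart commands apply on that node.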