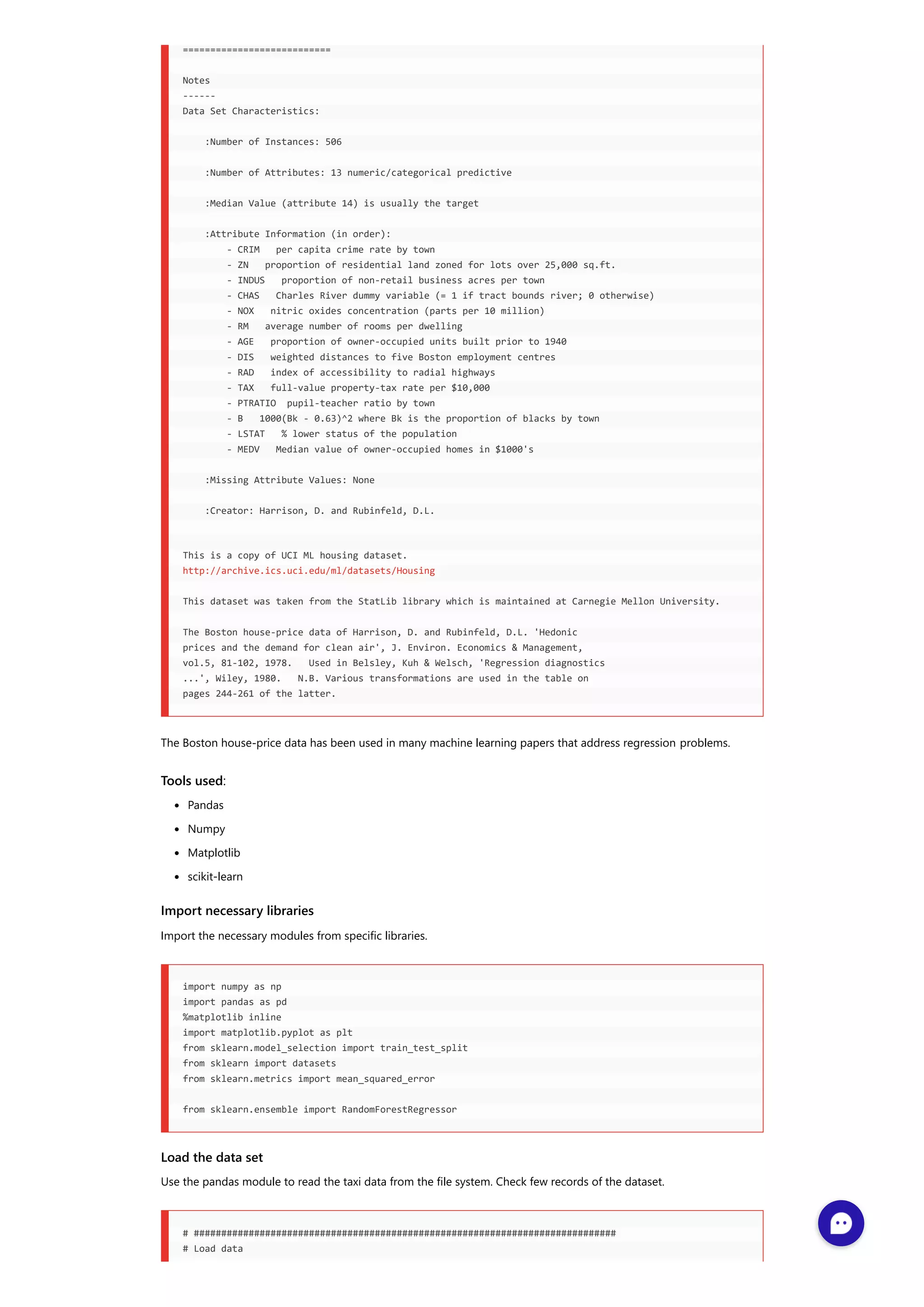

The document discusses using the random forest algorithm for regression problems. It begins by introducing random forest and ensemble methods, which combine predictions from multiple models. For regression problems, random forest uses bagging to train decision trees on random subsets of data and averages their predictions. The document then applies random forest to the Boston housing dataset, evaluating performance on test data and concluding it is a well-fitting model for predicting house prices.

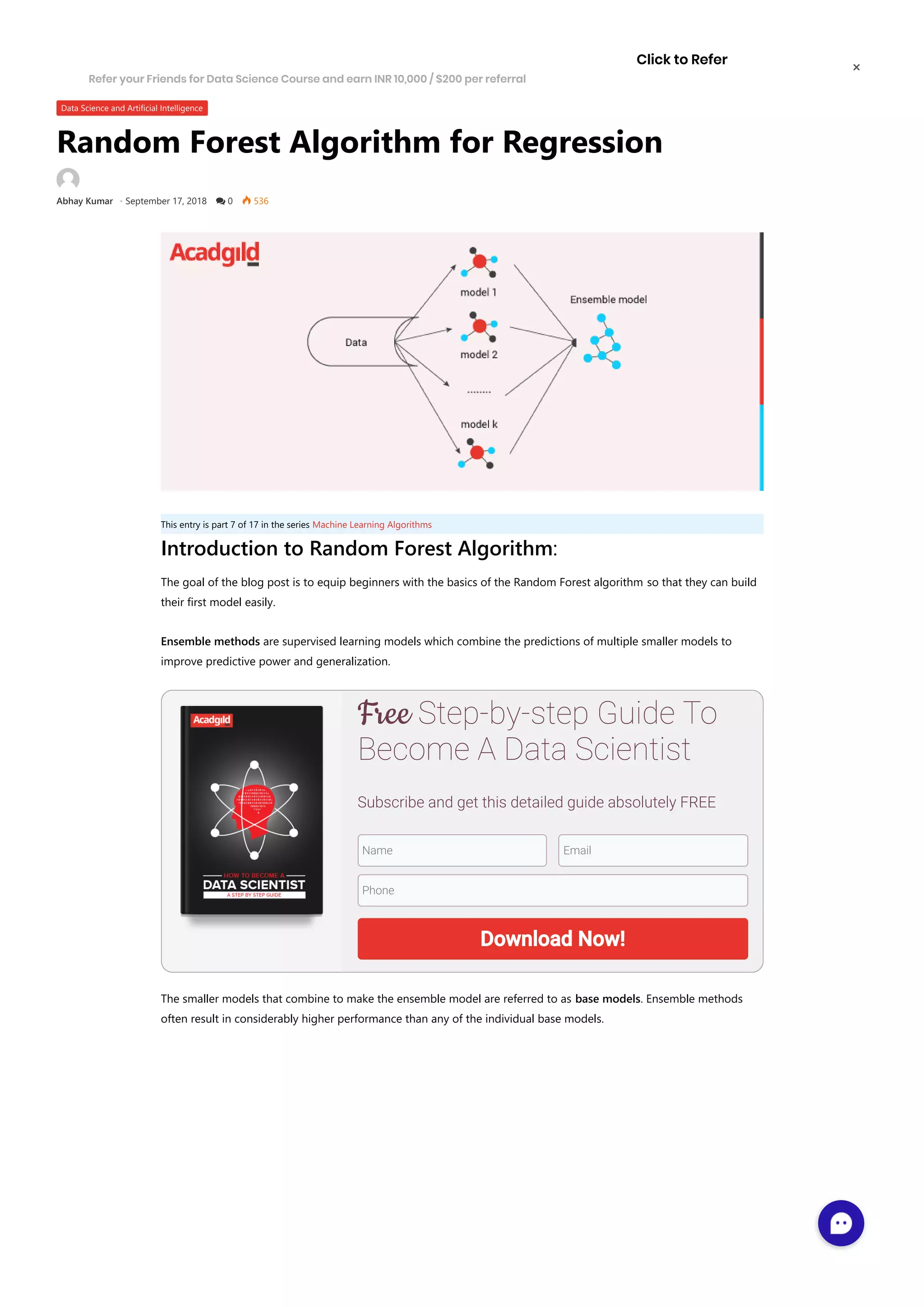

![boston = datasets.load_boston() print(boston.data.shape, boston.target.shape) print(boston.feature_names) (506, 13) (506,) ['CRIM' 'ZN' 'INDUS' 'CHAS' 'NOX' 'RM' 'AGE' 'DIS' 'RAD' 'TAX' 'PTRATIO' 'B' 'LSTAT'] data = pd.DataFrame(boston.data,columns=boston.feature_names) data = pd.concat([data,pd.Series(boston.target,name='MEDV')],axis=1) data.head() CRIM ZN INDUS CHAS NOX RM AGE DIS RAD TAX PTRATIO B LSTAT MEDV 0 0.00632 18.0 2.31 0.0 0.538 6.575 65.2 4.0900 1.0 296.0 15.3 396.90 4.98 24.0 1 0.02731 0.0 7.07 0.0 0.469 6.421 78.9 4.9671 2.0 242.0 17.8 396.90 9.14 21.6 2 0.02729 0.0 7.07 0.0 0.469 7.185 61.1 4.9671 2.0 242.0 17.8 392.83 4.03 34.7 3 0.03237 0.0 2.18 0.0 0.458 6.998 45.8 6.0622 3.0 222.0 18.7 394.63 2.94 33.4 4 0.06905 0.0 2.18 0.0 0.458 7.147 54.2 6.0622 3.0 222.0 18.7 396.90 5.33 36.2 Select the predictor and target variables X = data.iloc[:,:-1] y = data.iloc[:,-1] Train test split : x_training_set, x_test_set, y_training_set, y_test_set = train_test_split(X,y,test_size=0.10, random_state=42, shuffle=True) Training/model fitting: Fit the model to selected supervised data n_estimators=100 # Fit regression model # Estimate the score on the entire dataset, with no missing values model = RandomForestRegressor(random_state=0, n_estimators=n_estimators) model.fit(x_training_set, y_training_set) Model parameters study : The coefficient R^2 is defined as (1 – u/v), where u is the residual sum of squares ((y_true – y_pred) ** 2).sum() and v is the total sum of squares ((y_true – y_true.mean()) ** 2).sum(). from sklearn.m etrics import mean_squared_error, r2_score model_score = model.score(x_training_set,y_training_set) # Have a look at R sq to give an idea of the fit , # Explained variance score: 1 is perfect prediction print(“ coefficient of determination R^2 of the prediction.: ',model_score) y_predicted = model.predict(x_test_set) # The mean squared error print("Mean squared error: %.2f"% mean_squared_error(y_test_set, y_predicted)) # Explained variance score: 1 is perfect prediction print('Test Variance score: %.2f' % r2_score(y_test_set, y_predicted)) Coefficient of determination R^2 of the prediction : 0.982022598521334 Mean squared error: 7.73 Test Variance score: 0.88 Accuracy report with test data : Let’s visualize the goodness of the fit with the predictions being visualized by a line # So let's run the model against the test data](https://image.slidesharecdn.com/randomforestalgorithmforregressionabeginnersguide-181009110530/75/Random-forest-algorithm-for-regression-a-beginner-s-guide-4-2048.jpg)

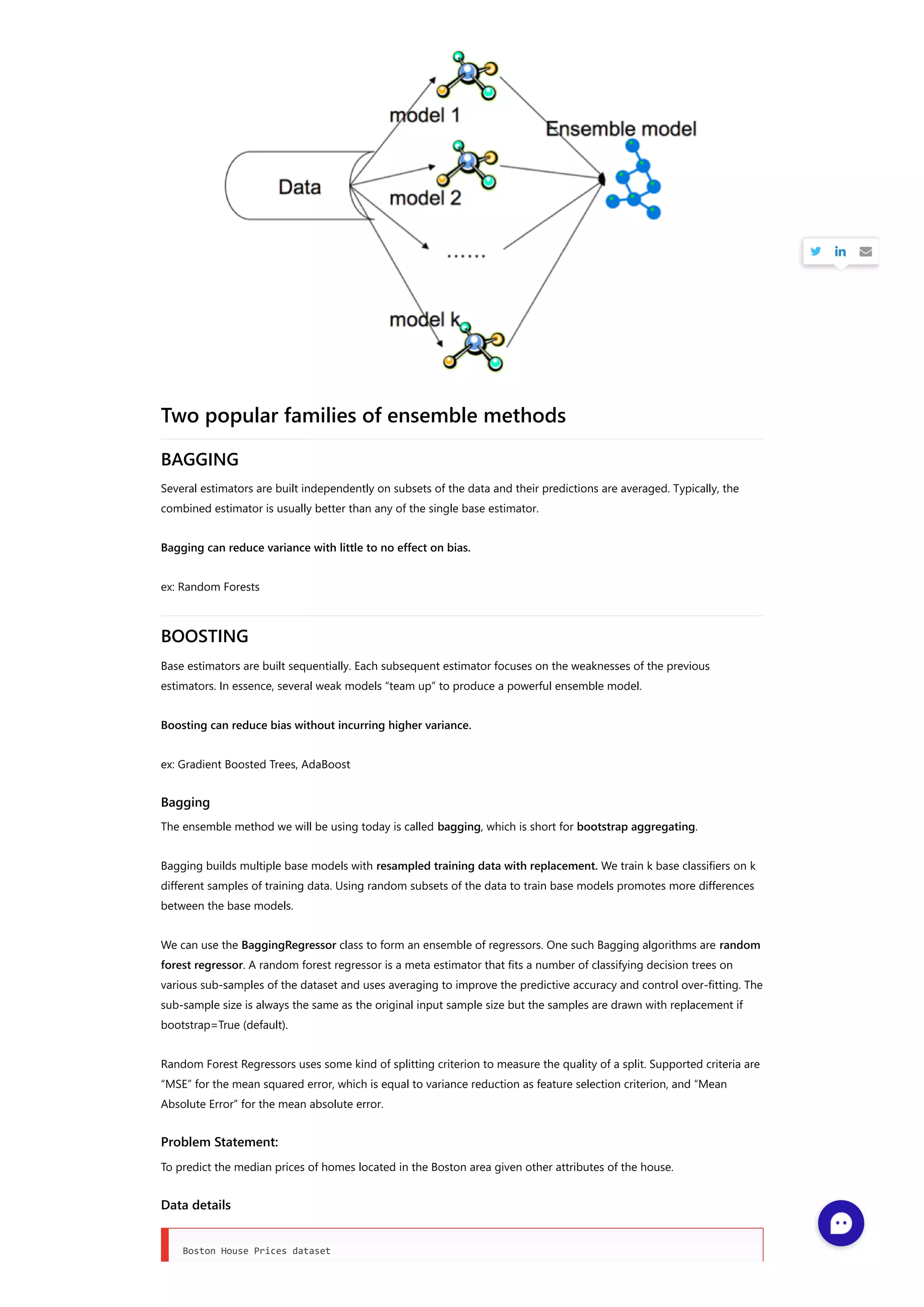

![This site uses Akismet to reduce spam. Learn how your comment data is processed. << Using Gradient Boosting for Regression Problems Linear Regression >> Edit Post from sklearn.model_selection import cross_val_predict fig, ax = plt.subplots() ax.scatter(y_test_set, y_predicted, edgecolors=(0, 0, 0)) ax.plot([y_test_set.min(), y_test_set.max()], [y_test_set.min(), y_test_set.max()], 'k--', lw=4) ax.set_xlabel('Actual') ax.set_ylabel('Predicted') ax.set_title("Ground Truth vs Predicted") plt.show() Conclusion: We can see that our R2 score and MSE are both very good. This means that we have found a well-fitting model to predict the median price value of a house. There can be a further improvement to the metric by doing some preprocessing before fitting the data. Series Navigation Related Random Forest July 12, 2018 In "Data Science and Artificial Intelligence" Introduction to Machine Learning Using Spark January 2, 2017 In "Big Data Hadoop & Spark" Data Science Glossary- Machine Learning Tools and Terminologies May 3, 2018 In "All Categories"](https://image.slidesharecdn.com/randomforestalgorithmforregressionabeginnersguide-181009110530/75/Random-forest-algorithm-for-regression-a-beginner-s-guide-5-2048.jpg)