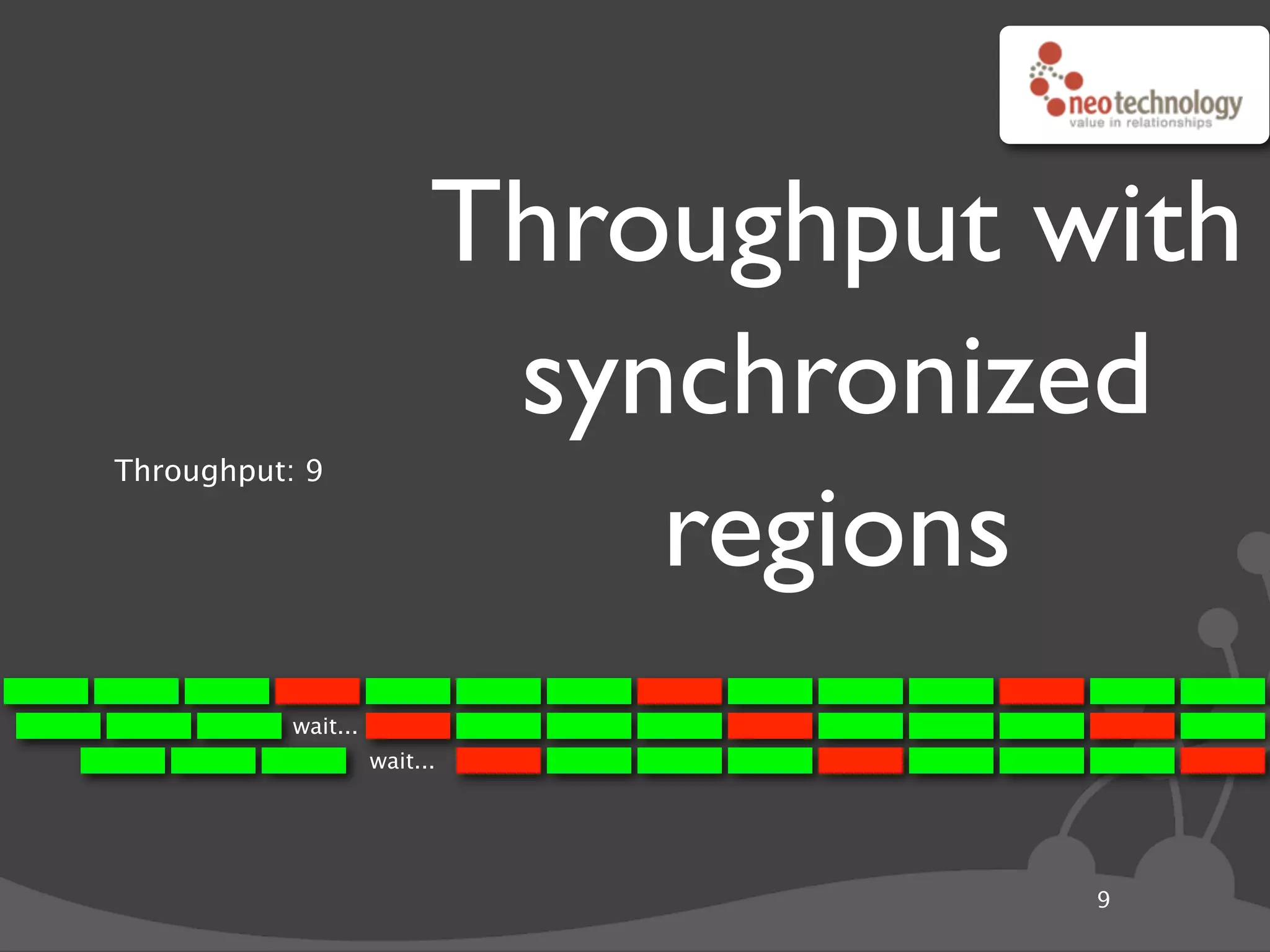

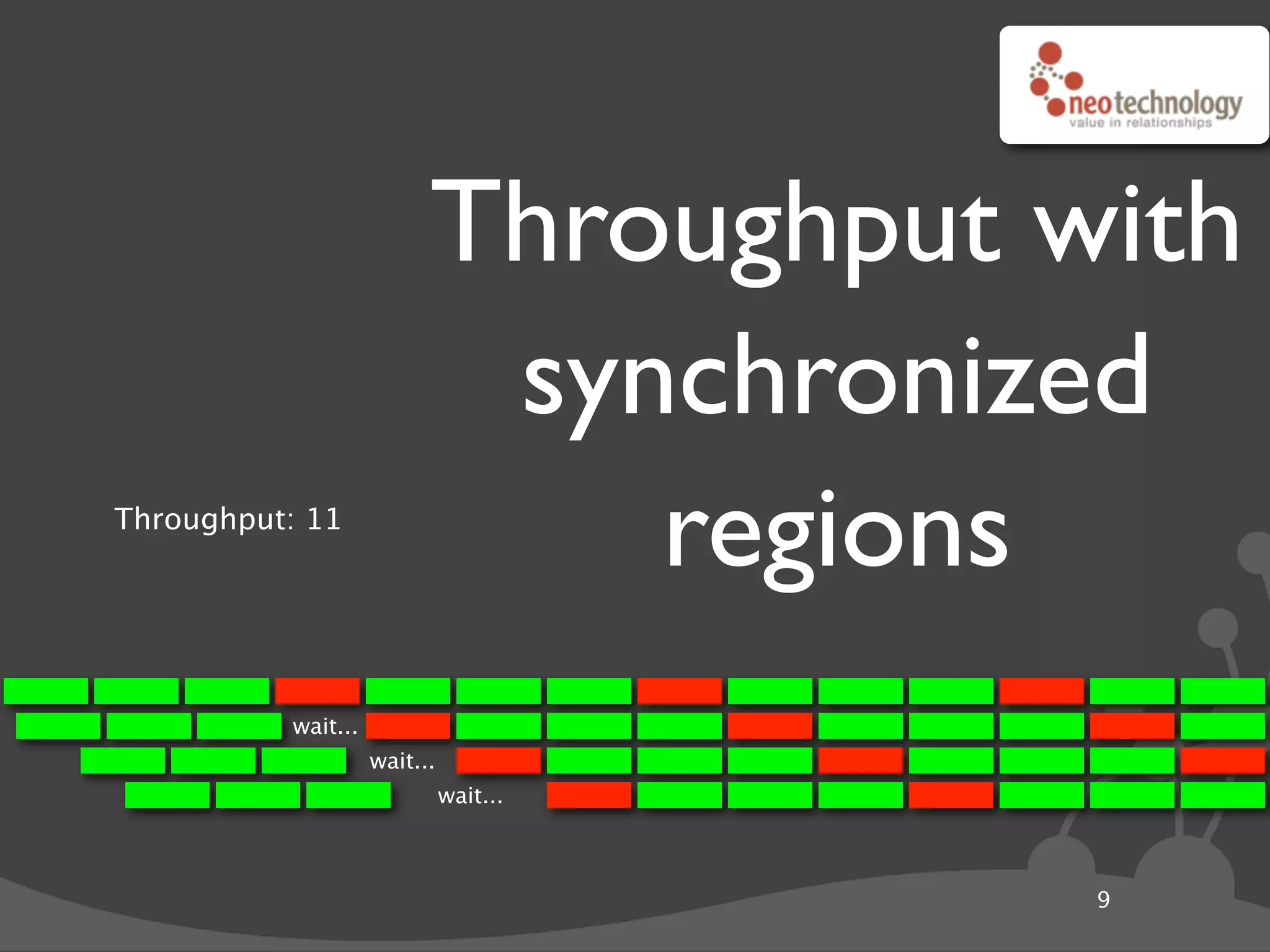

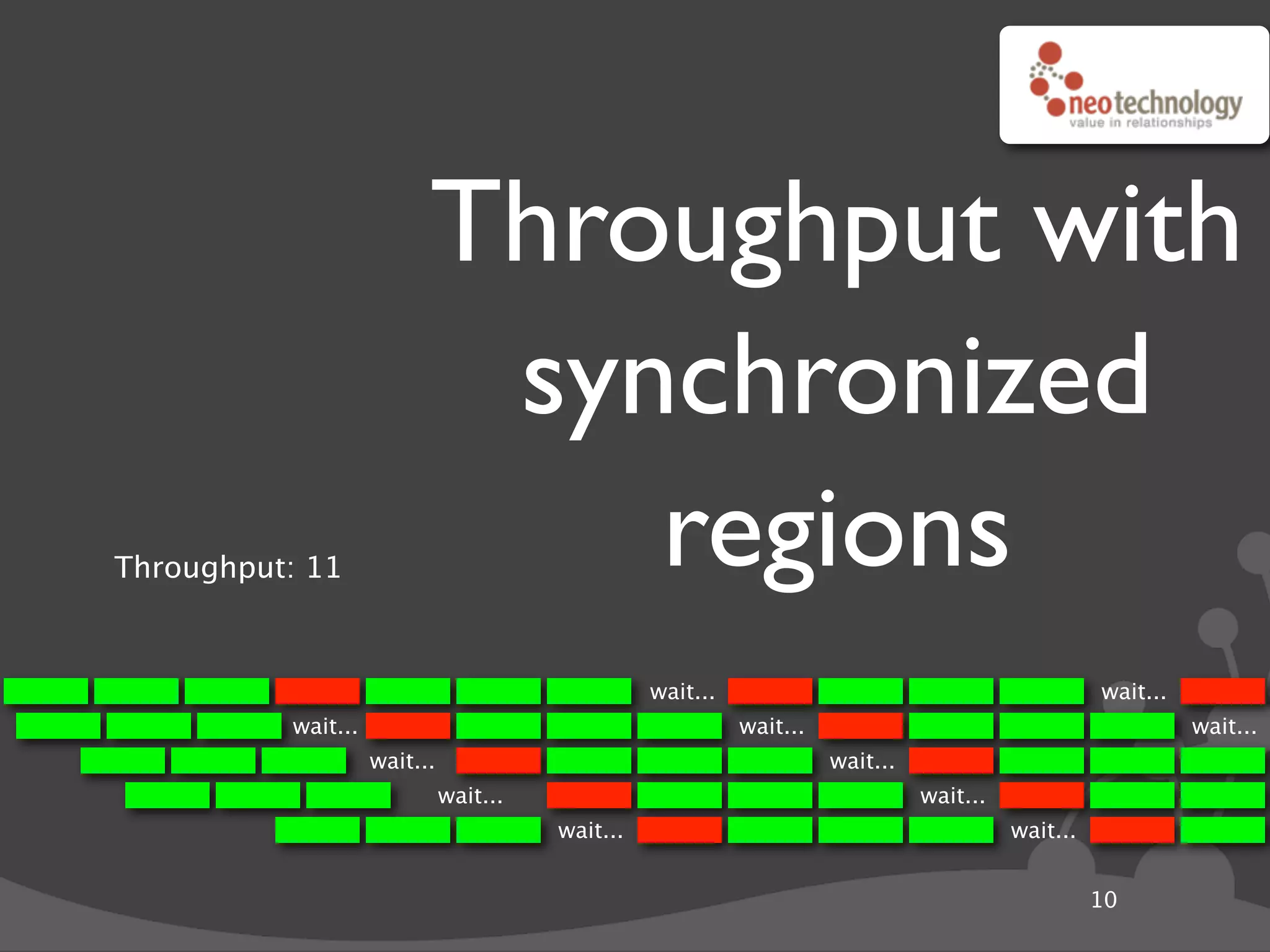

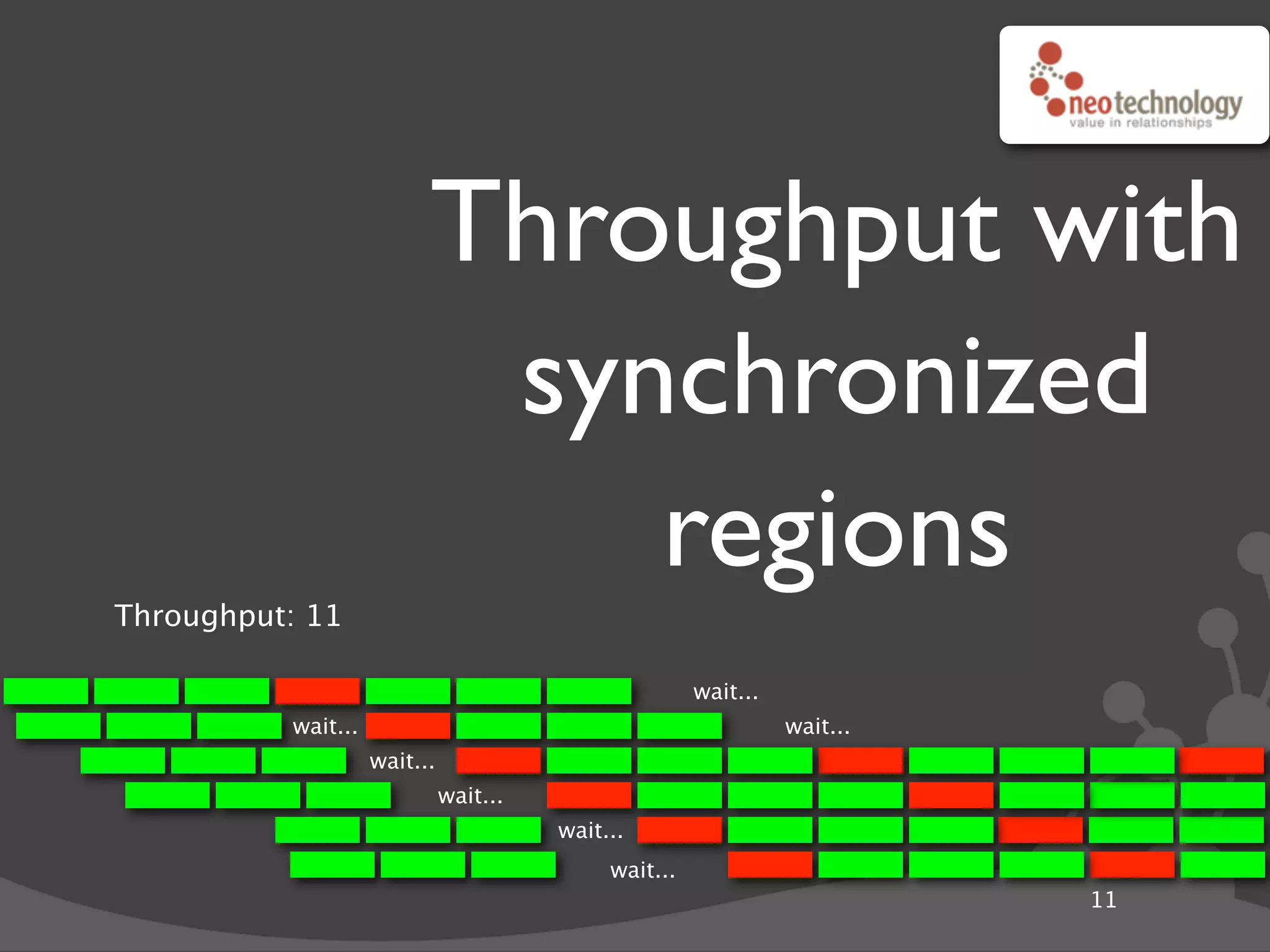

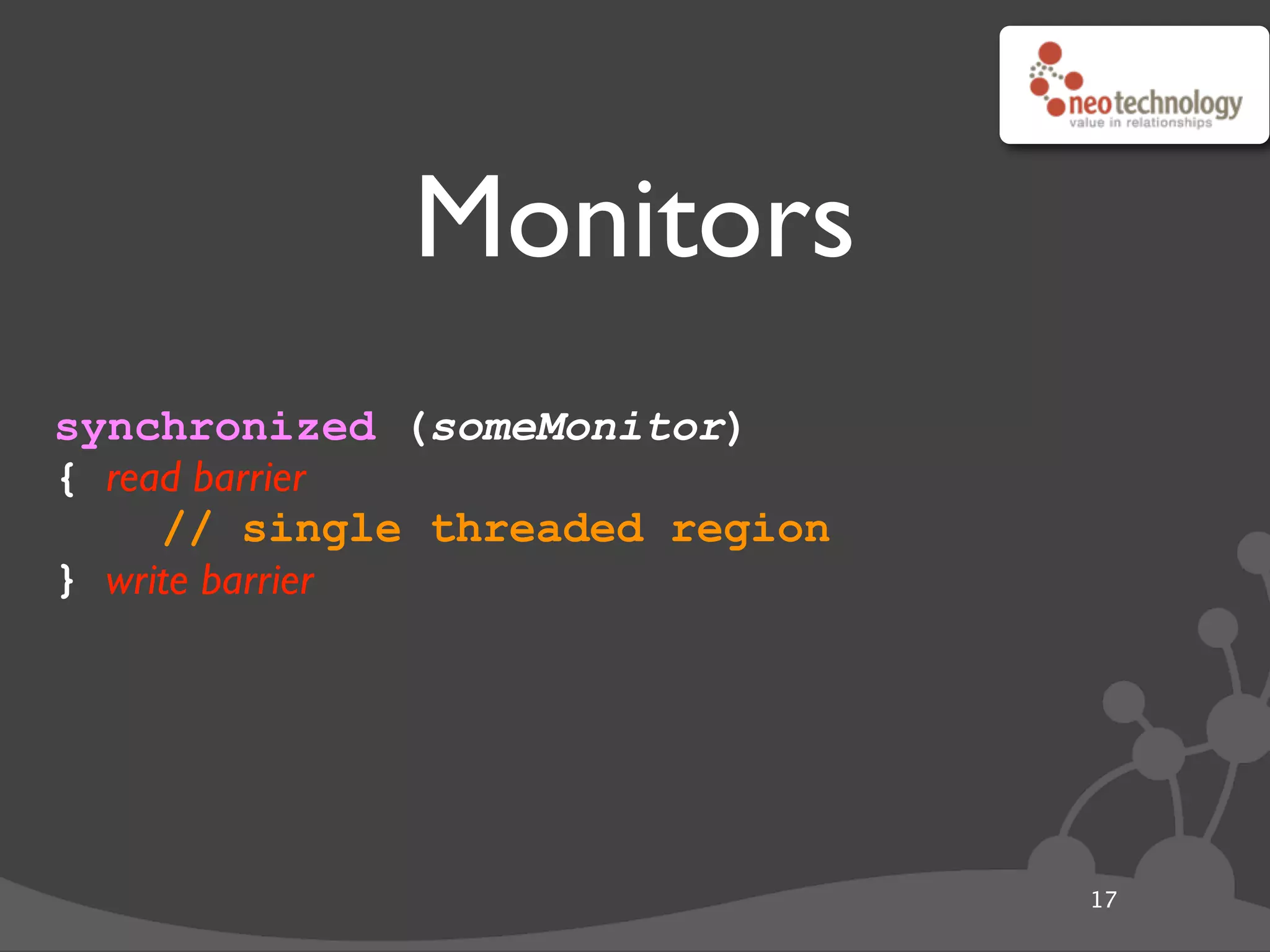

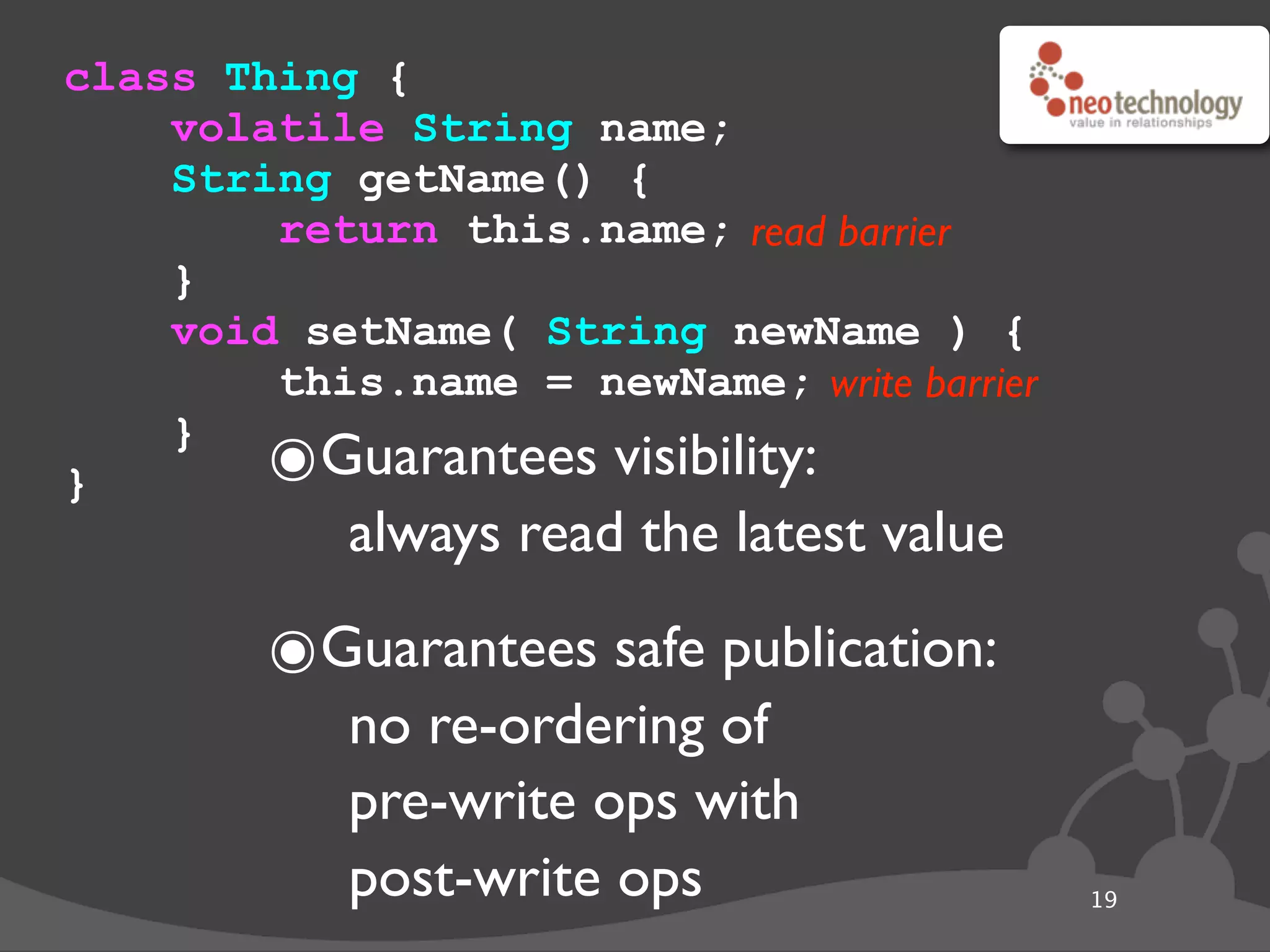

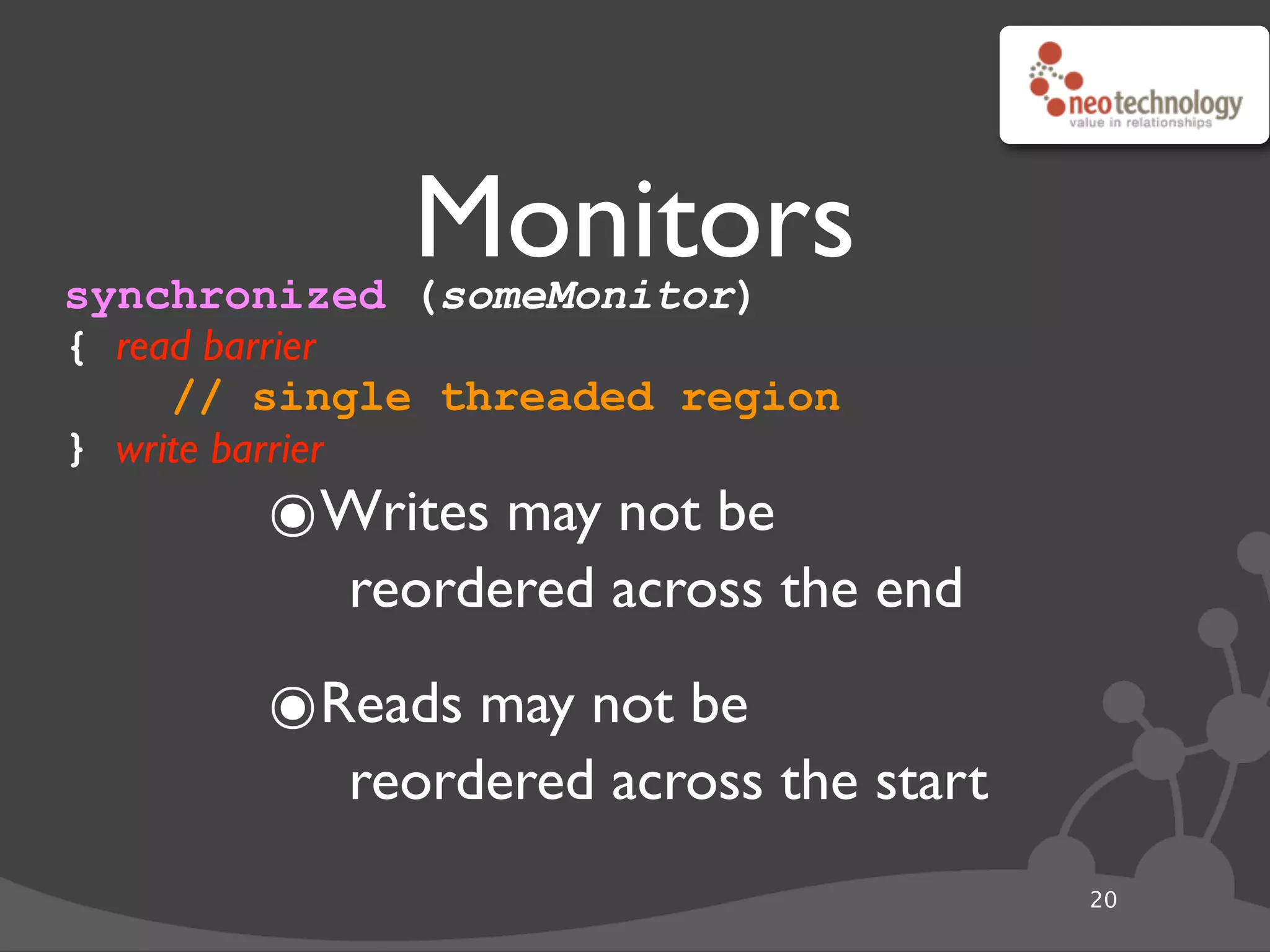

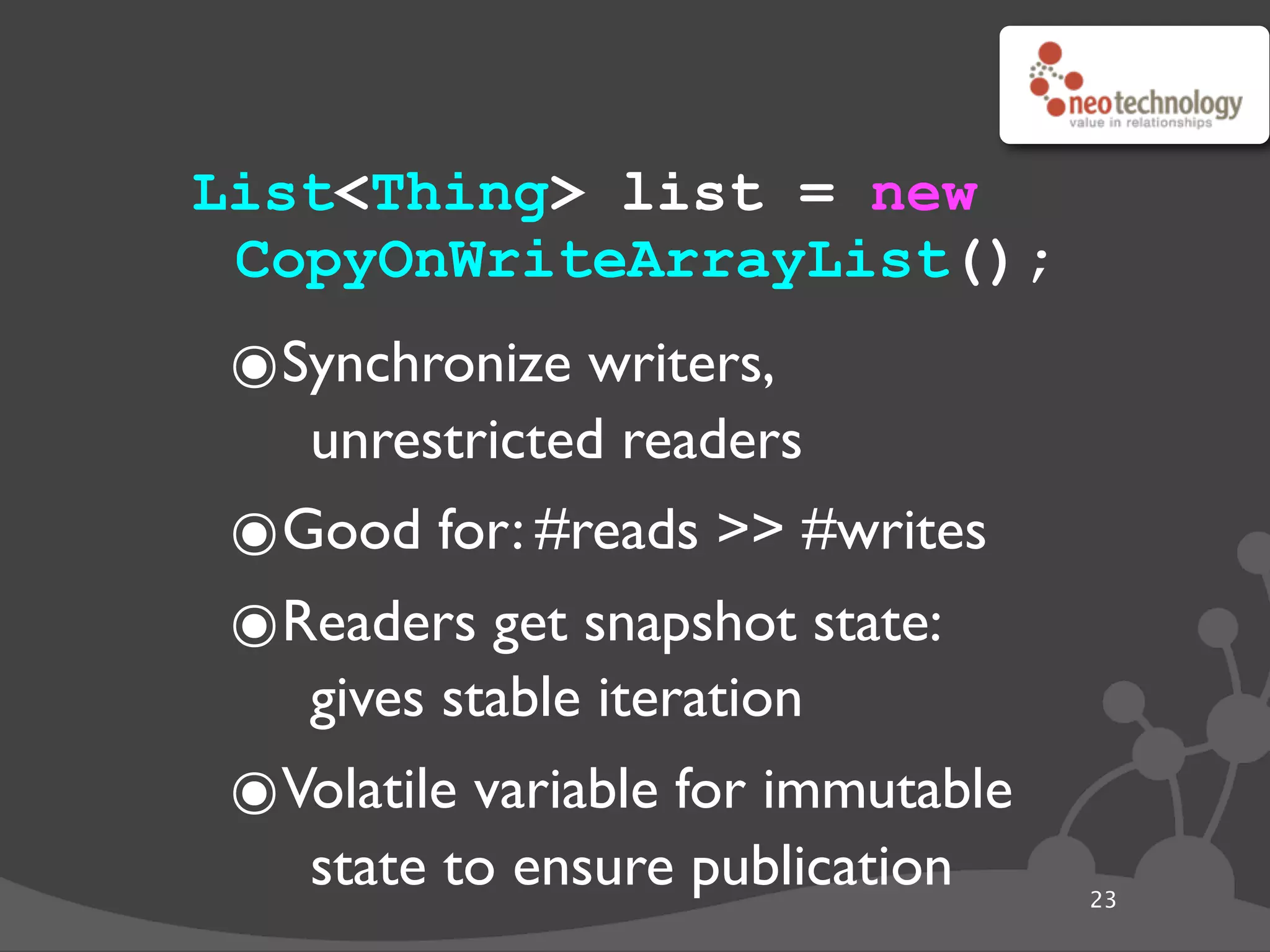

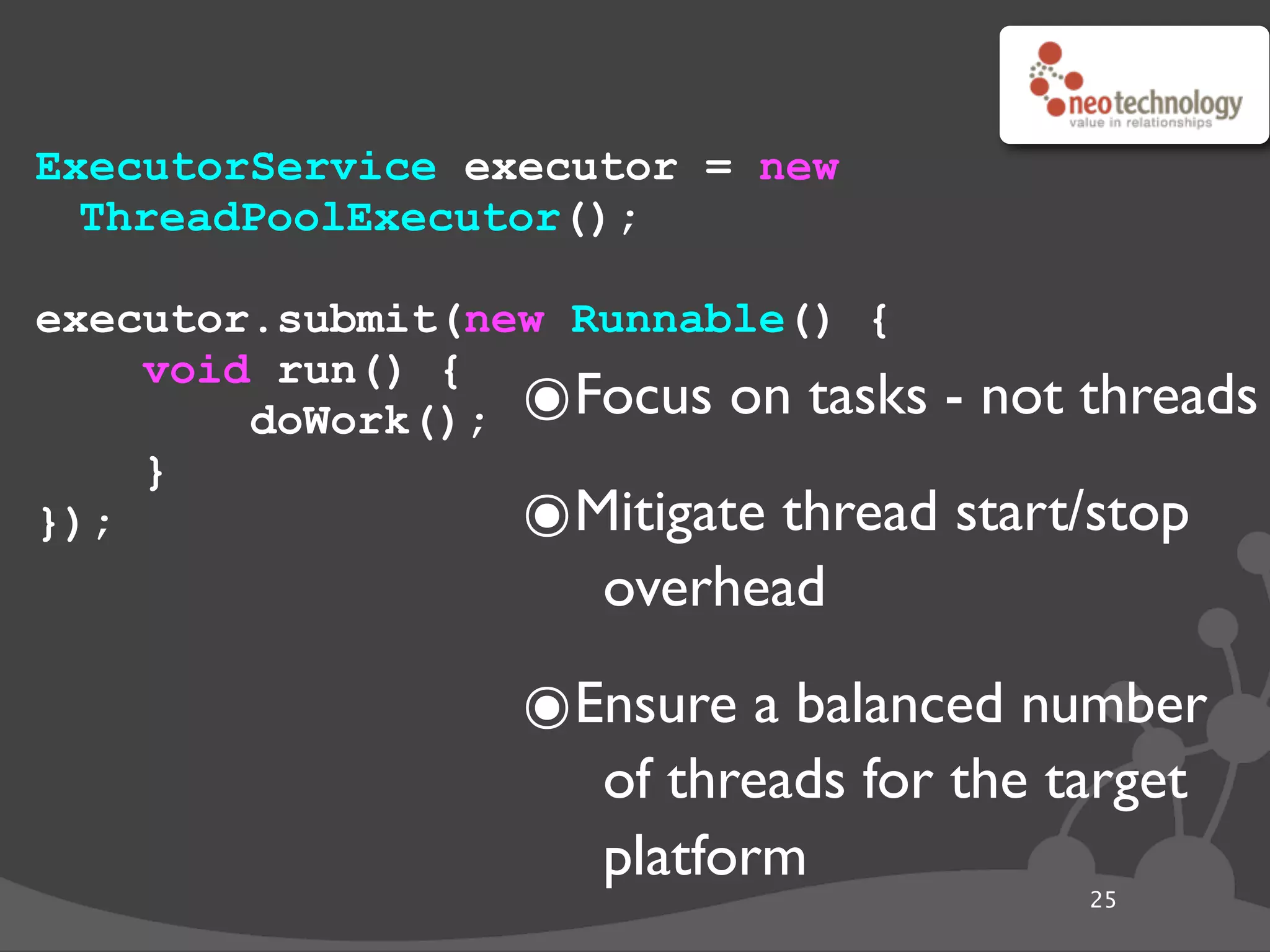

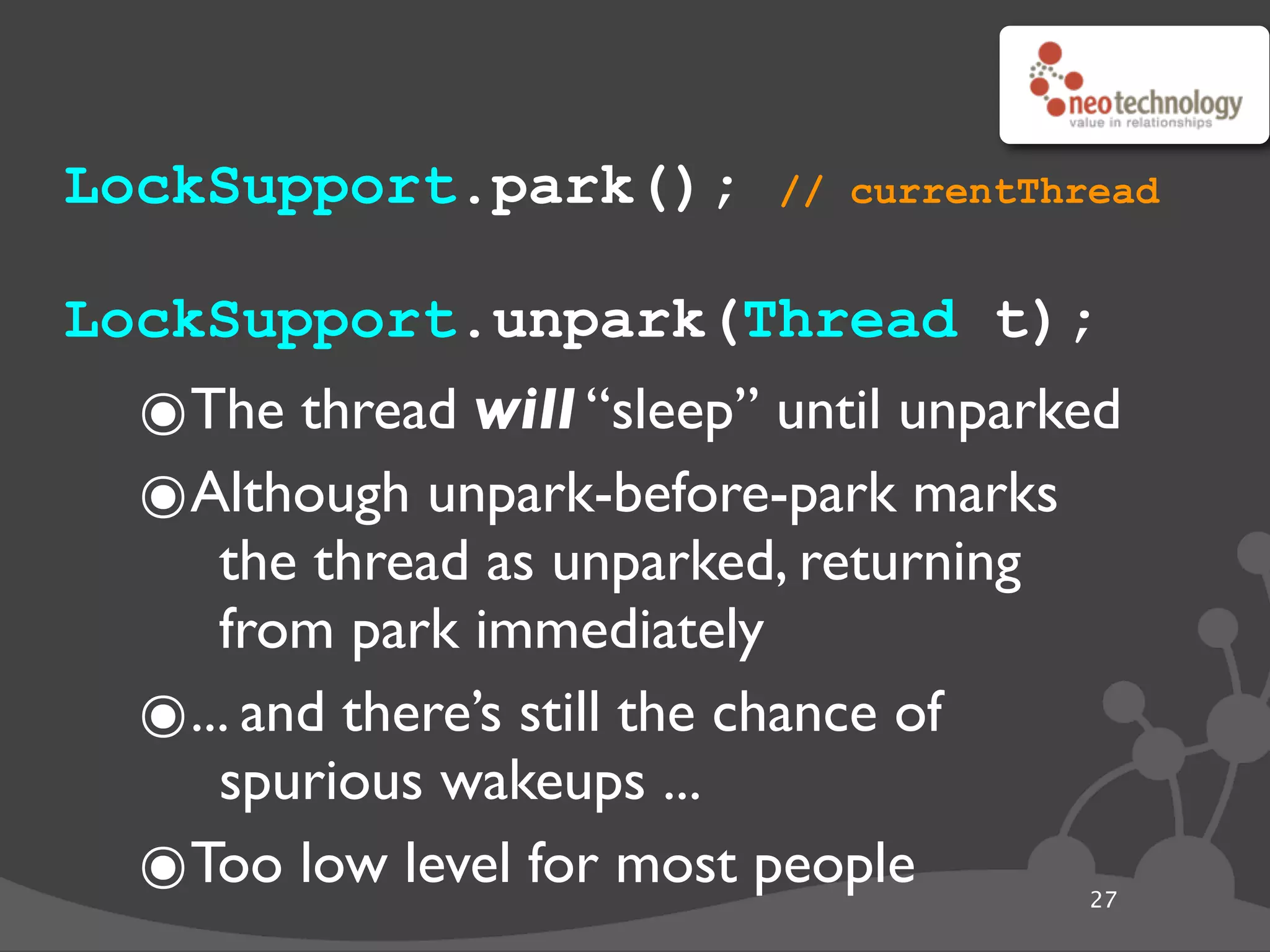

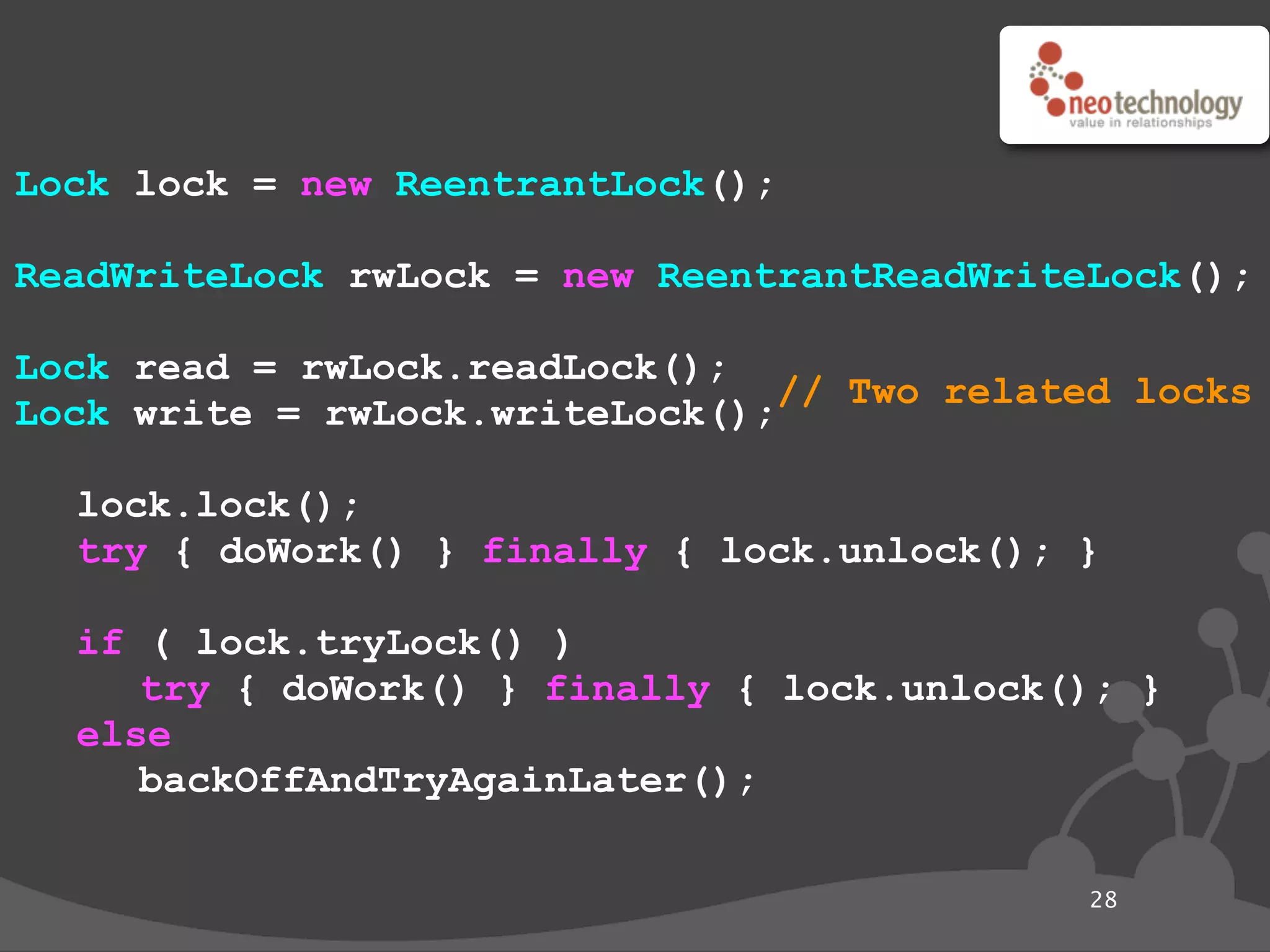

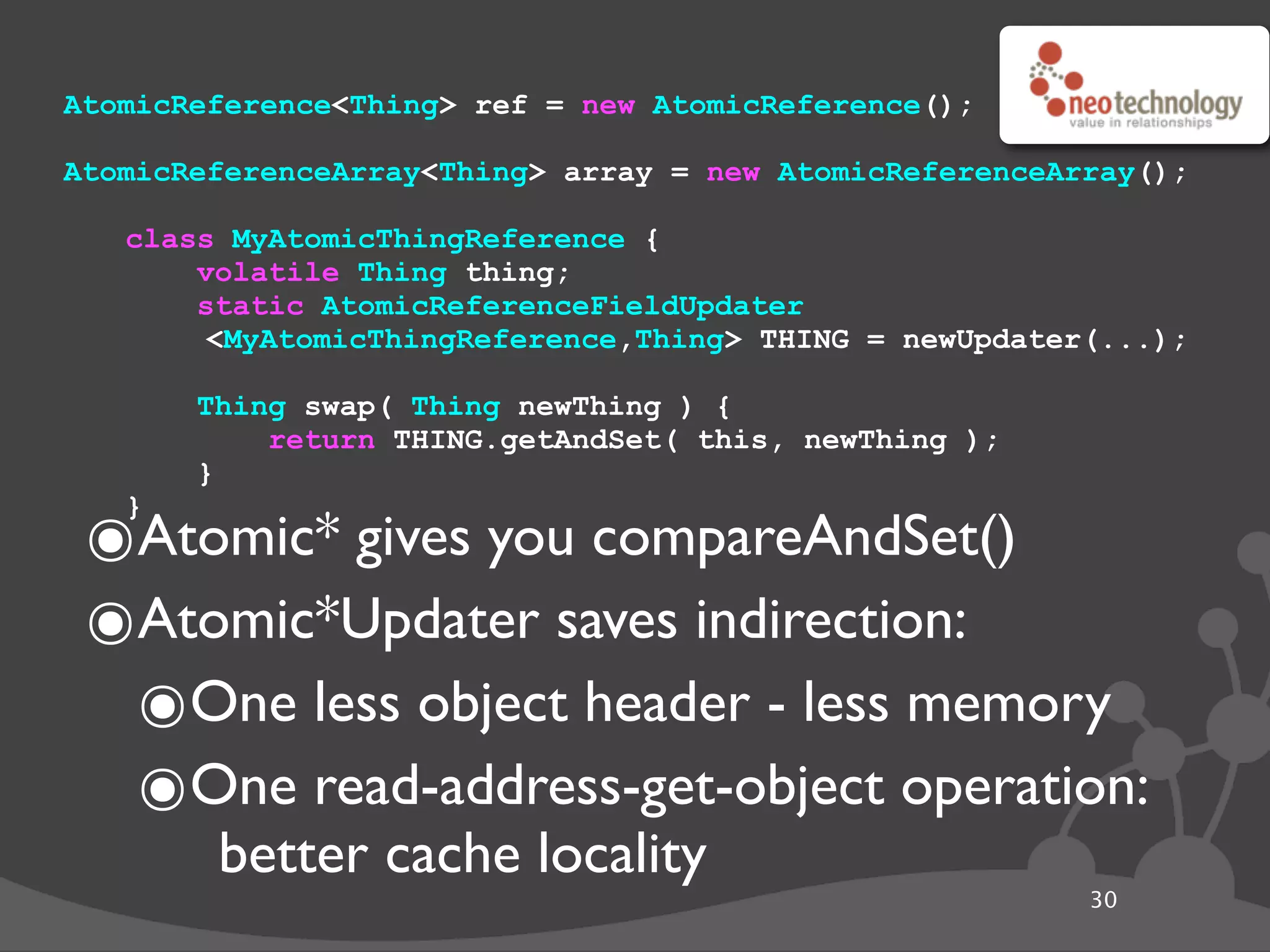

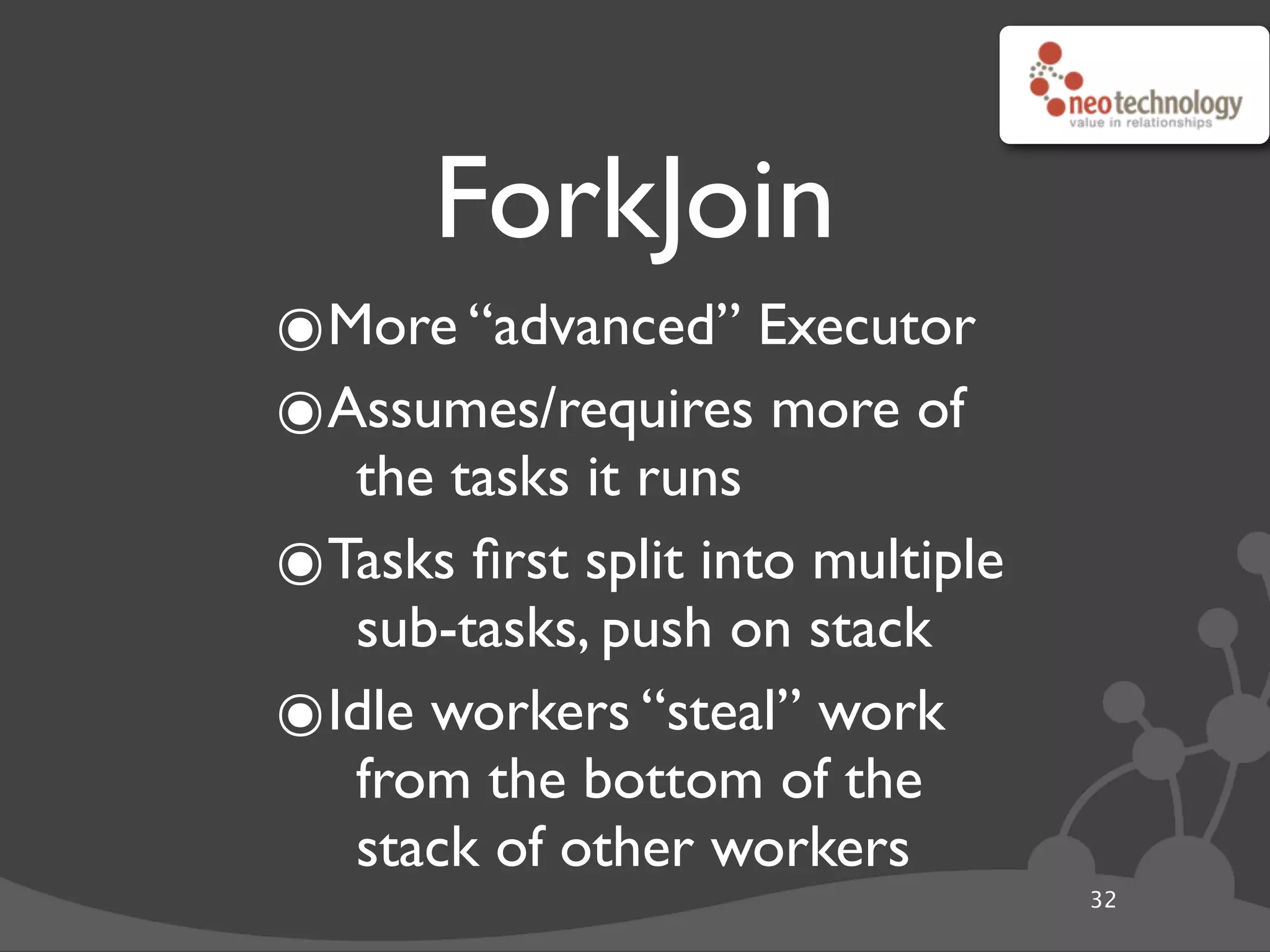

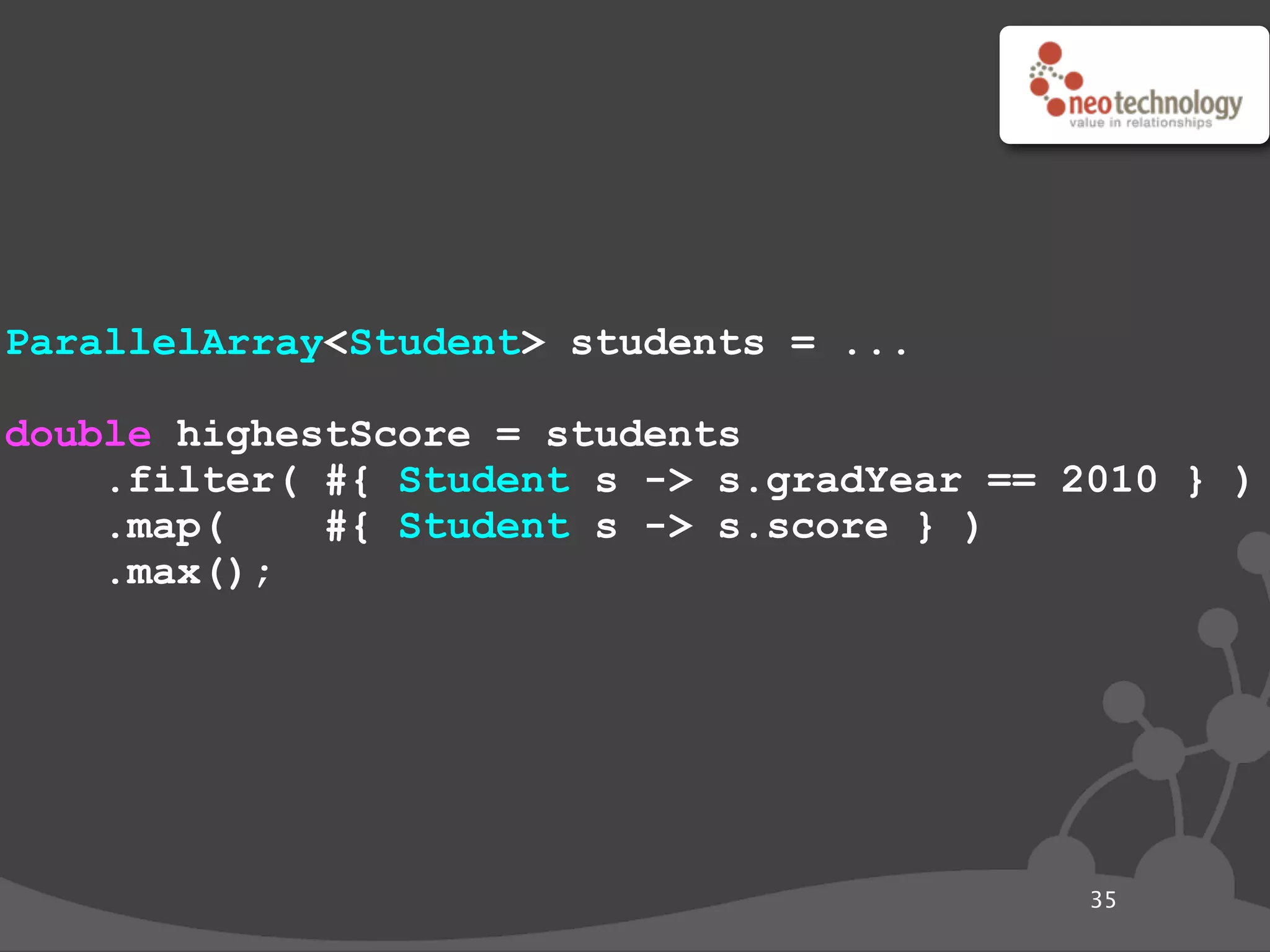

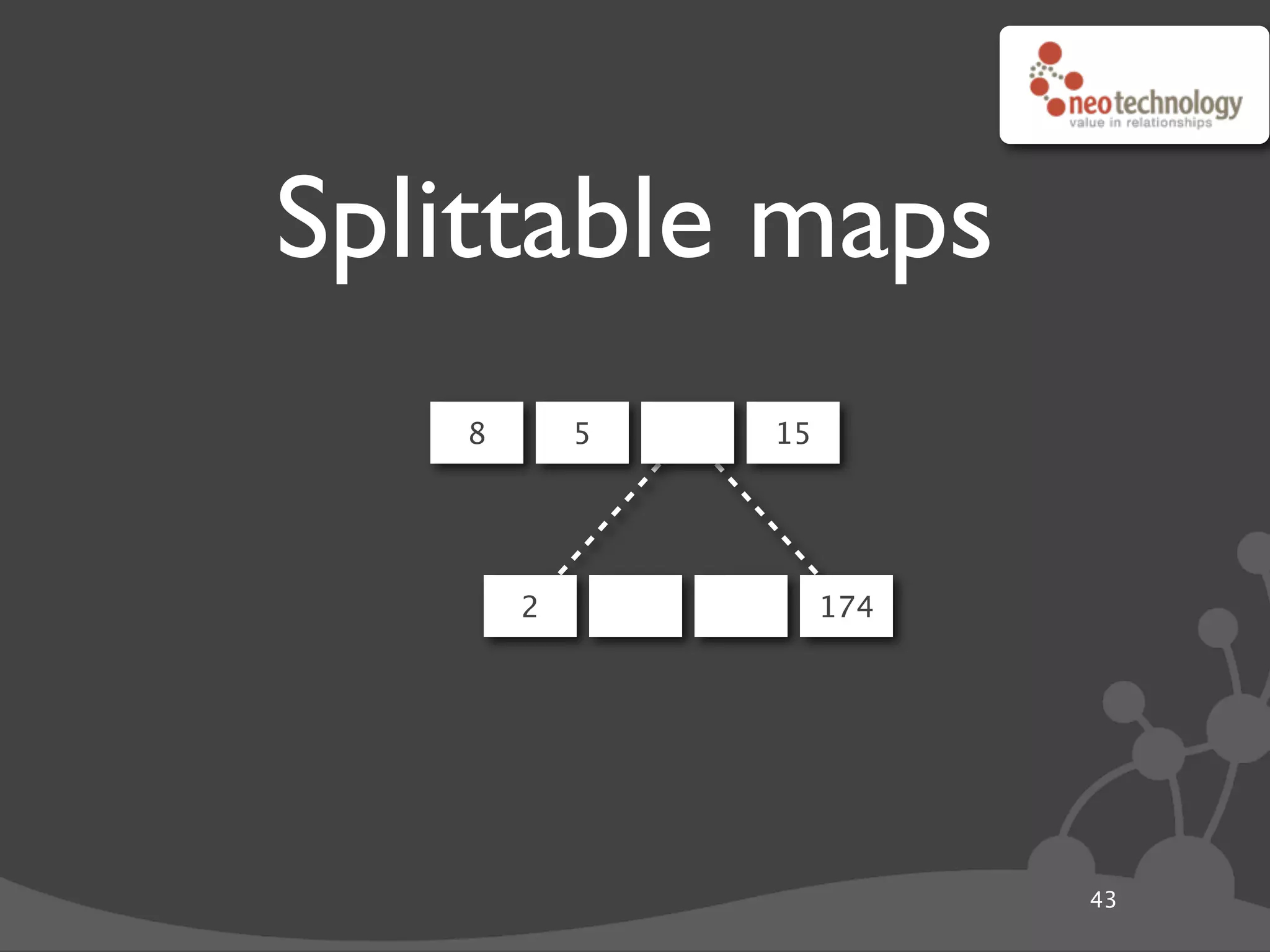

The slides survey models for concurrent programming. They review common misconceptions about threads and concurrency, then outline the core abstractions and tools Java offers for writing concurrent programs: threads, monitors, volatile variables, java.util.concurrent classes such as ConcurrentHashMap, and java.util.concurrent.locks classes such as ReentrantLock. They also cover models that Java did not support at the time of the talk (JavaOne 2011), including parallel arrays, transactional memory, and actors, as well as Clojure's approach to concurrency, which combines immutable data structures with vars, refs, atoms, and agents.
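As a point of reference for the lock-based tools named above, here is a minimal sketch of a counter guarded by a ReentrantLock. The class `LockedCounter` and its `runDemo` helper are hypothetical names made up for illustration; they do not come from the slides.

```java
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical example class, not taken from the slides.
class LockedCounter {
    private final ReentrantLock lock = new ReentrantLock();
    private long count = 0; // guarded by lock

    void increment() {
        lock.lock();
        try {
            count++;
        } finally {
            lock.unlock(); // always release, even if the body throws
        }
    }

    long get() {
        lock.lock();
        try {
            return count;
        } finally {
            lock.unlock();
        }
    }

    // Starts `threads` threads that each call increment() `perThread` times,
    // waits for all of them, and returns the final count.
    static long runDemo(int threads, int perThread) {
        LockedCounter counter = new LockedCounter();
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < perThread; j++) {
                    counter.increment();
                }
            });
            workers[i].start();
        }
        try {
            for (Thread worker : workers) {
                worker.join();
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return counter.get();
    }
}
```

Without the lock, `count++` is a read-modify-write that can lose updates when threads interleave; with it, `LockedCounter.runDemo(4, 1000)` should always return 4000.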

![ConcurrentMap<String,Thing> map = new ConcurrentHashMap(); • Adds atomic operations to the Map interface: - putIfAbsent(key, value) - remove(key, value) - replace(key,[oldVal,]newVal) - but not yet putIfAbsent(key, #{makeValue()}) • CHM is lock striped, i.e. synchronized on regions 22](https://image.slidesharecdn.com/24881-modelsforconcurrentprogramming-111019034625-phpapp02/75/JavaOne-2011-Models-for-Concurrent-Programming-25-2048.jpg)
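The atomic operations listed on this slide can be exercised with a short sketch. The class name `ConcurrentMapSketch` and the `Integer` values are placeholders chosen for illustration (the slide uses a `Thing` type that is not defined here). The last call uses `computeIfAbsent`, which was added in Java 8, after this talk, and covers the lazily computed case the slide marks as "not yet".

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;

// Hypothetical example class, not taken from the slides.
class ConcurrentMapSketch {
    // Returns the result of each call, separated by spaces.
    static String demo() {
        ConcurrentMap<String, Integer> map = new ConcurrentHashMap<>();
        StringBuilder log = new StringBuilder();

        // putIfAbsent returns the previous value, or null if there was none.
        log.append(map.putIfAbsent("a", 1)).append(' ');
        log.append(map.putIfAbsent("a", 2)).append(' ');

        // replace(key, oldVal, newVal) succeeds only if the current value equals oldVal.
        log.append(map.replace("a", 1, 10)).append(' ');
        log.append(map.replace("a", 1, 20)).append(' ');

        // remove(key, value) removes only if the key currently maps to that value.
        log.append(map.remove("a", 99)).append(' ');

        // Java 8+: the value is computed only when the key is absent.
        log.append(map.computeIfAbsent("b", key -> key.length())).append(' ');

        log.append(map.get("a"));
        return log.toString();
    }
}
```

Each of these calls is a single atomic check-and-act step, so callers do not need an external lock around a separate `containsKey` and `put`.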

![public class CopyOnWriteArrayList<T> private volatile Object[] array = new Object[0]; public synchronized boolean add(T value) { Object[] newArray = Arrays.copyOf(array, array.length+1); newArray[array.length] = value; array = newArray; // write barrier return true; } // write barrier public Iterator<T> iterator() { return new ArrayIterator<T>(this.array); // read barrier } private static class ArrayIterator<T> implements Iterator<T> { // I’ve written too many of these to be amused any more ... } 24](https://image.slidesharecdn.com/24881-modelsforconcurrentprogramming-111019034625-phpapp02/75/JavaOne-2011-Models-for-Concurrent-Programming-27-2048.jpg)
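The slide shows a hand-written copy-on-write list; the JDK ships its own `java.util.concurrent.CopyOnWriteArrayList` with the same snapshot behaviour. The sketch below (with the hypothetical class name `SnapshotIterationSketch`) illustrates the consequence of that design: an iterator keeps reading the array that existed when it was created, so later writes neither appear in it nor cause a `ConcurrentModificationException`.

```java
import java.util.Iterator;
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;

// Hypothetical example class, not taken from the slides.
class SnapshotIterationSketch {
    // Returns { elements seen by the iterator, final list size }.
    static int[] demo() {
        List<String> list = new CopyOnWriteArrayList<>();
        list.add("a");
        list.add("b");

        // The iterator captures a reference to the current backing array.
        Iterator<String> snapshot = list.iterator();

        // This add copies the array; the iterator still points at the old one.
        list.add("c");

        int seen = 0;
        while (snapshot.hasNext()) {
            snapshot.next();
            seen++;
        }
        return new int[] { seen, list.size() };
    }
}
```

The trade-off is that every write copies the whole array, so this structure suits lists that are read far more often than they are modified.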

![(def x 10) ; create a root binding (def x 42) ; redefine the root binding (binding [x 13] ...) ; create a thread local override 48](https://image.slidesharecdn.com/24881-modelsforconcurrentprogramming-111019034625-phpapp02/75/JavaOne-2011-Models-for-Concurrent-Programming-55-2048.jpg)
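For readers coming from Java, the closest standard-library analogue to a thread-local override of a root binding is probably `ThreadLocal`. The sketch below is only a rough analogy, not an equivalent of Clojure vars: the class name and constant are hypothetical, and unlike `binding`, a `ThreadLocal` override is not scoped to a block and has to be cleared by hand.

```java
// Hypothetical example class, not taken from the slides.
class ThreadLocalOverrideSketch {
    // Plays the role of the root binding from (def x 42).
    static final int ROOT = 42;

    // Each thread sees ROOT until it sets its own value.
    static final ThreadLocal<Integer> X = ThreadLocal.withInitial(() -> ROOT);

    // Returns { value seen inside the worker thread, value seen by the caller }.
    static int[] demo() {
        int[] seen = new int[2];
        Thread worker = new Thread(() -> {
            X.set(13); // thread-local override, similar in spirit to (binding [x 13] ...)
            try {
                seen[0] = X.get();
            } finally {
                X.remove(); // undo the override manually
            }
        });
        worker.start();
        try {
            worker.join(); // join makes the worker's write to seen[0] visible here
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        seen[1] = X.get(); // the calling thread never set X, so it gets ROOT
        return seen;
    }
}
```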

![(def street (ref)) (def city (ref)) (defn move [new-street new-city] (dosync ; synchronize the changes (ref-set street new-street) (ref-set city new-city))) ; will retry if txn fails due to concurrent change (defn print-address [] (dosync ; get a snapshot state of the refs (printf "I live on %s in %s%n" @street @city))) 50](https://image.slidesharecdn.com/24881-modelsforconcurrentprogramming-111019034625-phpapp02/75/JavaOne-2011-Models-for-Concurrent-Programming-57-2048.jpg)
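Java has no built-in software transactional memory, so the refs example has no direct translation. What can be approximated is its consistency guarantee: street and city always change together, and a reader never sees one updated without the other. The sketch below swaps in a different technique, a single monitor guarding both fields, and does not reproduce the optimistic retry behaviour of `dosync`. The class `LockedAddress` and its empty-string initial values are assumptions made for illustration.

```java
// Hypothetical example class, not taken from the slides.
// Uses one lock instead of transactions: a substitute technique, not STM.
class LockedAddress {
    private final Object lock = new Object();
    private String street = ""; // guarded by lock
    private String city = "";   // guarded by lock

    // Both fields change inside one critical section, so no reader
    // can observe the new street paired with the old city.
    void move(String newStreet, String newCity) {
        synchronized (lock) {
            street = newStreet;
            city = newCity;
        }
    }

    // Reads both fields under the same lock to get a consistent pair.
    String describe() {
        synchronized (lock) {
            return String.format("I live on %s in %s", street, city);
        }
    }
}
```

The difference from the transactional version is that readers and writers block one another here, whereas with refs a reader works from a snapshot and a conflicting writer is retried.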

![(defstruct address-t :street :city) (def address (atom)) (defn move [new-street new-city] (set address (struct address-t new-street new-city))) (defn print-address [] (let [addr @address] ; get snapshot (printf "I live on %s in %s%n" (get addr :street) (get addr :city)))) 52](https://image.slidesharecdn.com/24881-modelsforconcurrentprogramming-111019034625-phpapp02/75/JavaOne-2011-Models-for-Concurrent-Programming-59-2048.jpg)
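The atom version maps fairly directly onto Java: an `AtomicReference` holding an immutable value object. The sketch below assumes hypothetical names (`AtomAddressSketch`, `Address`) and an empty initial address. Note that in actual Clojure an atom needs an initial value, for example `(atom nil)`, and the replace operation is spelled `reset!`; the slide abbreviates both.

```java
import java.util.concurrent.atomic.AtomicReference;

// Hypothetical example class, not taken from the slides.
class AtomAddressSketch {
    // Immutable value: both fields are final and set once.
    static final class Address {
        final String street;
        final String city;

        Address(String street, String city) {
            this.street = street;
            this.city = city;
        }
    }

    private final AtomicReference<Address> address =
            new AtomicReference<>(new Address("", ""));

    // Swaps in a whole new Address in one atomic step.
    void move(String newStreet, String newCity) {
        address.set(new Address(newStreet, newCity));
    }

    // A single get() yields a snapshot; street and city always belong together.
    String describe() {
        Address snapshot = address.get();
        return String.format("I live on %s in %s", snapshot.street, snapshot.city);
    }
}
```

Because the two fields travel inside one immutable object, a single reference swap updates them together and no lock or transaction is needed.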

![(def account (agent)) (defn deposit [balance amount] (+ balance amount)) (send account deposit 1000) 55](https://image.slidesharecdn.com/24881-modelsforconcurrentprogramming-111019034625-phpapp02/75/JavaOne-2011-Models-for-Concurrent-Programming-62-2048.jpg)
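An agent owns a piece of state and applies the functions sent to it one at a time, asynchronously. A rough Java approximation, under the assumption that a single-threaded executor is an acceptable stand-in for the agent's action queue, is sketched below. `AgentSketch` and its methods are hypothetical names; this is not how Clojure implements agents, and it omits error handling and `await`.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.function.UnaryOperator;

// Hypothetical example class, not taken from the slides.
class AgentSketch<T> {
    // One worker thread, so actions run sequentially in submission order.
    private final ExecutorService queue = Executors.newSingleThreadExecutor();
    private volatile T state; // written only by the worker thread

    AgentSketch(T initial) {
        this.state = initial;
    }

    // Like (send agent f): returns immediately; the action runs later.
    void send(UnaryOperator<T> action) {
        queue.execute(() -> state = action.apply(state));
    }

    // Like @agent: returns whatever state is current right now.
    T deref() {
        return state;
    }

    // Stops accepting actions and waits for the queued ones to finish.
    boolean shutdownAndAwait(long timeoutMillis) {
        queue.shutdown();
        try {
            return queue.awaitTermination(timeoutMillis, TimeUnit.MILLISECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }

    static long demo() {
        AgentSketch<Long> account = new AgentSketch<>(0L);
        account.send(balance -> balance + 1000); // deposit 1000
        account.send(balance -> balance + 500);  // deposit 500
        account.shutdownAndAwait(5000);
        return account.deref();
    }
}
```

Since only the worker thread ever writes `state`, the read-modify-write inside each action cannot race with another action; callers that need the result of a send have to wait for the queue to drain, as `demo` does.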