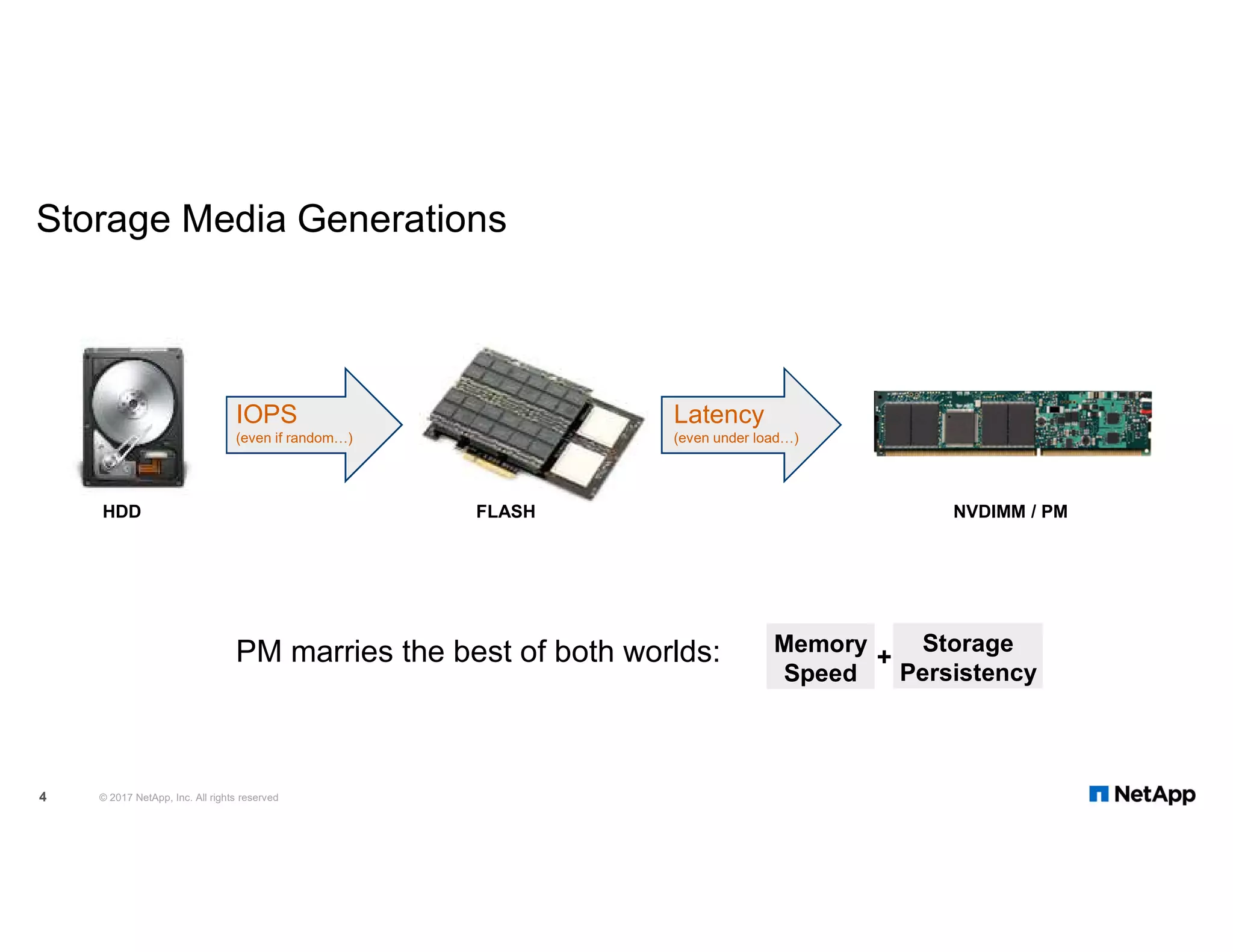

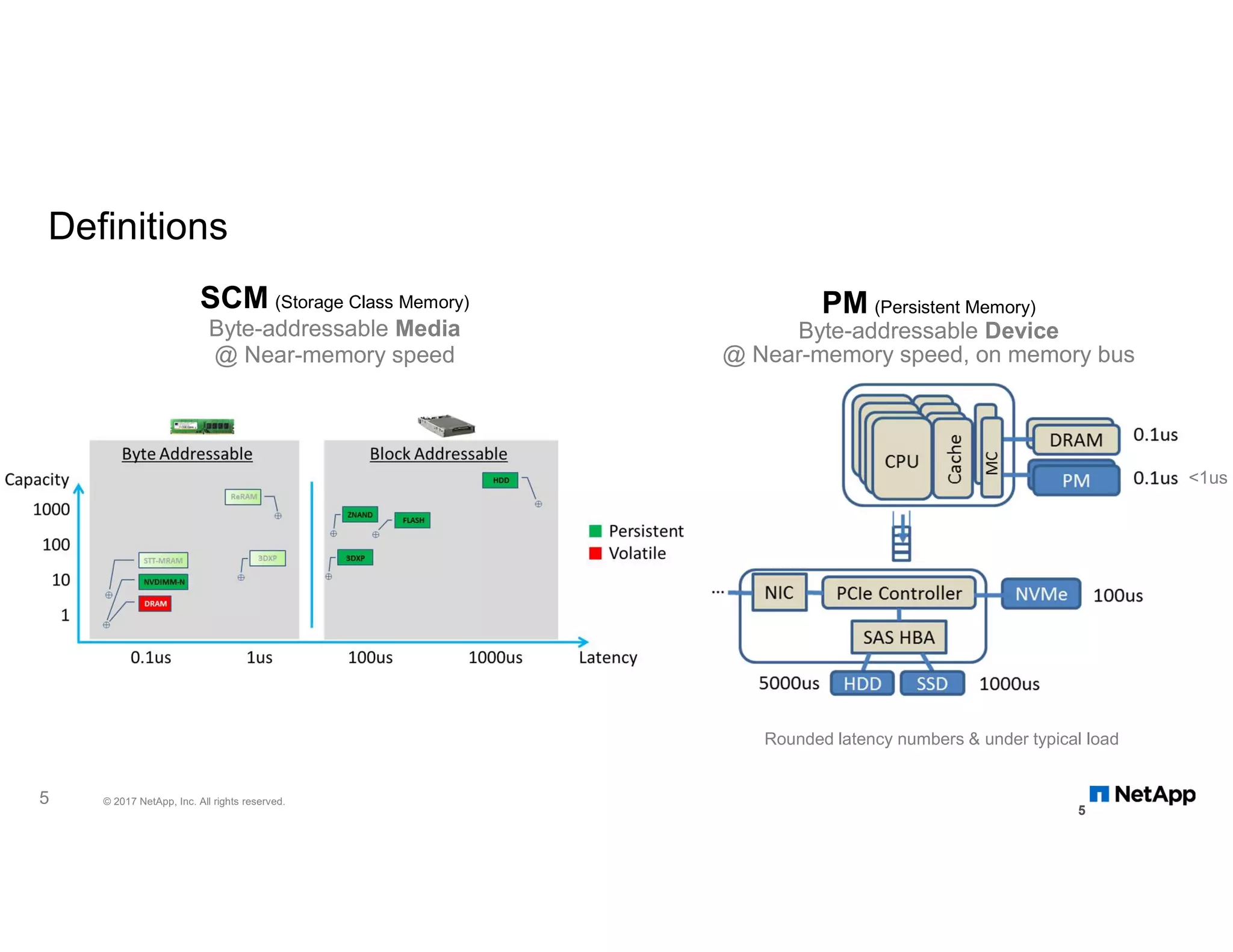

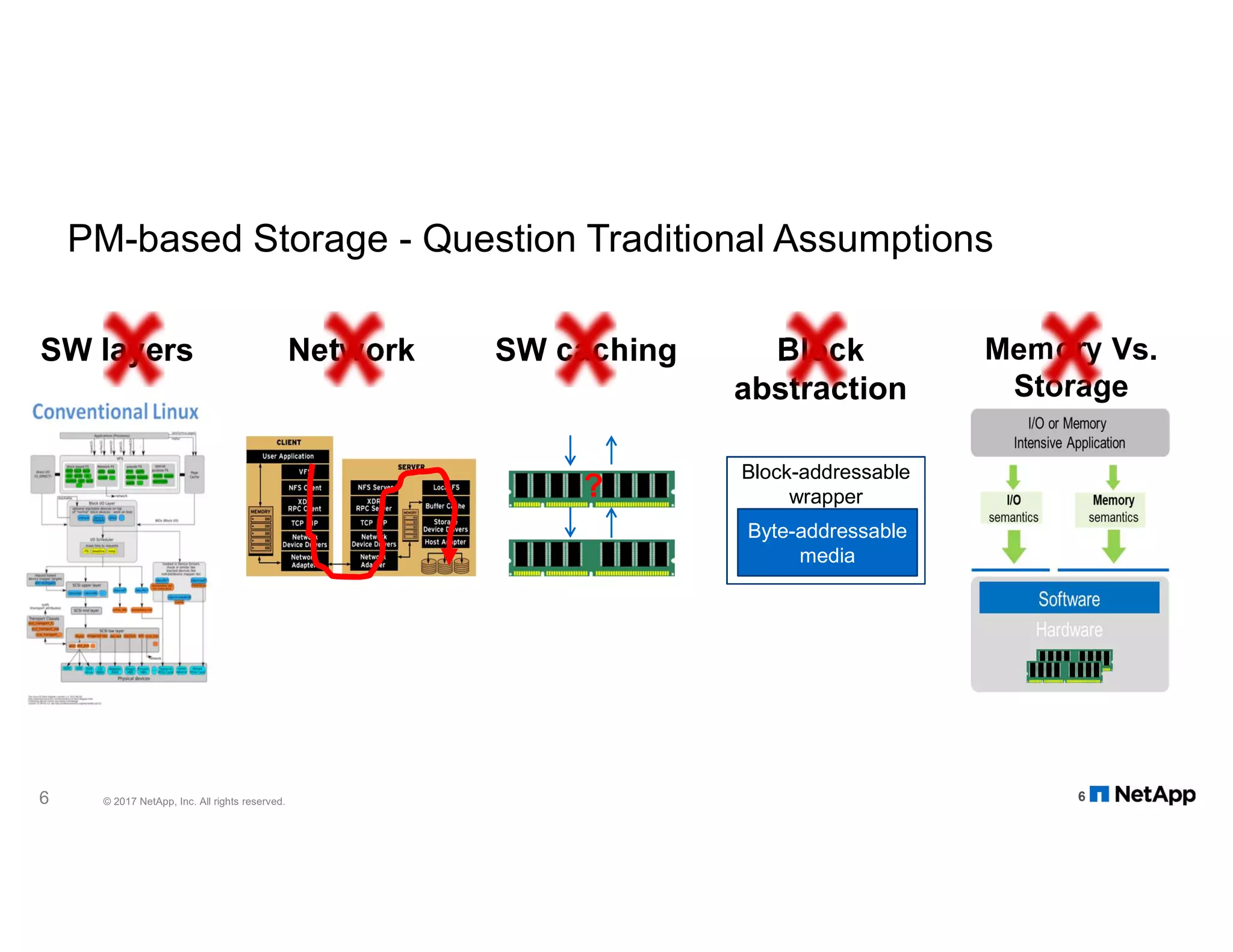

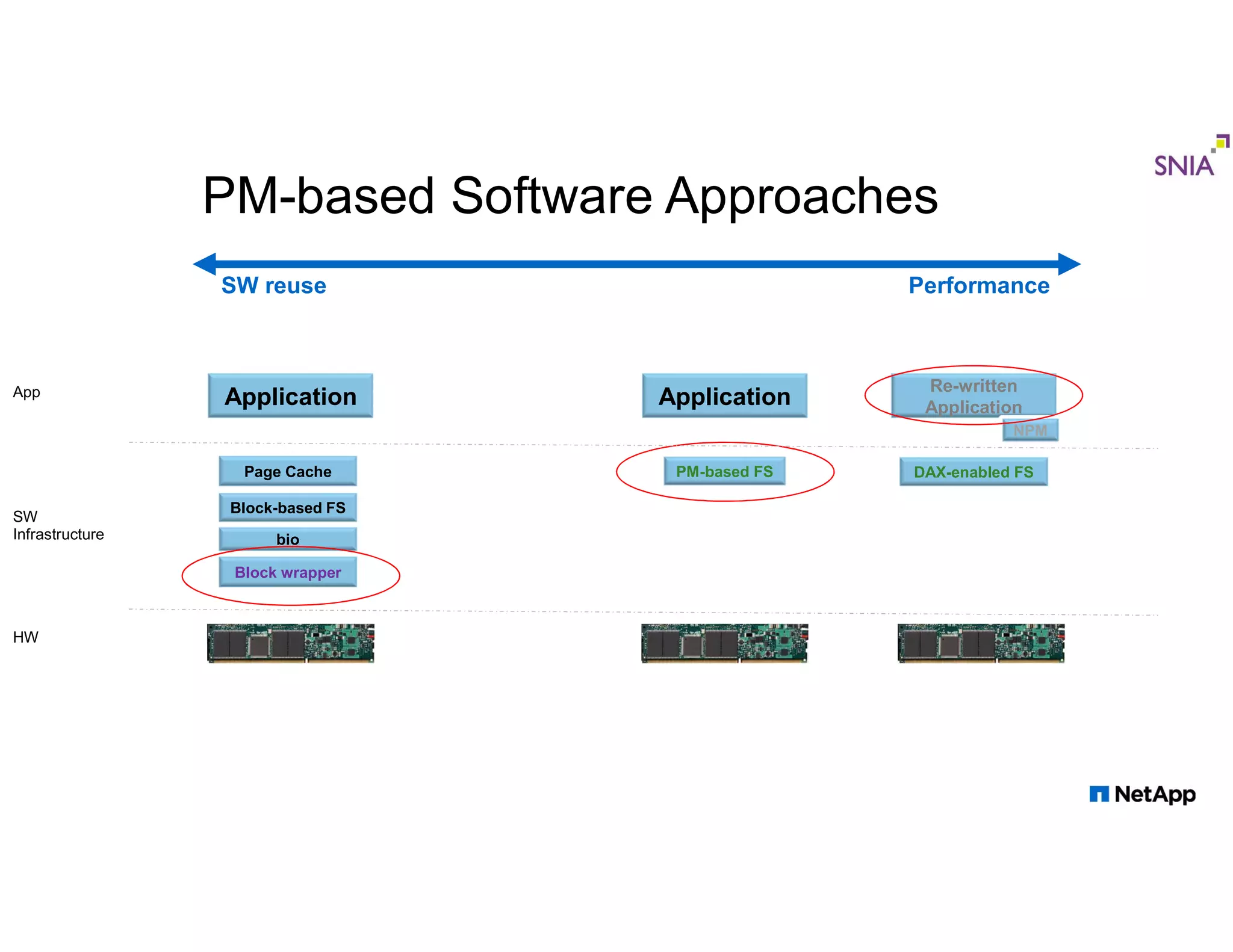

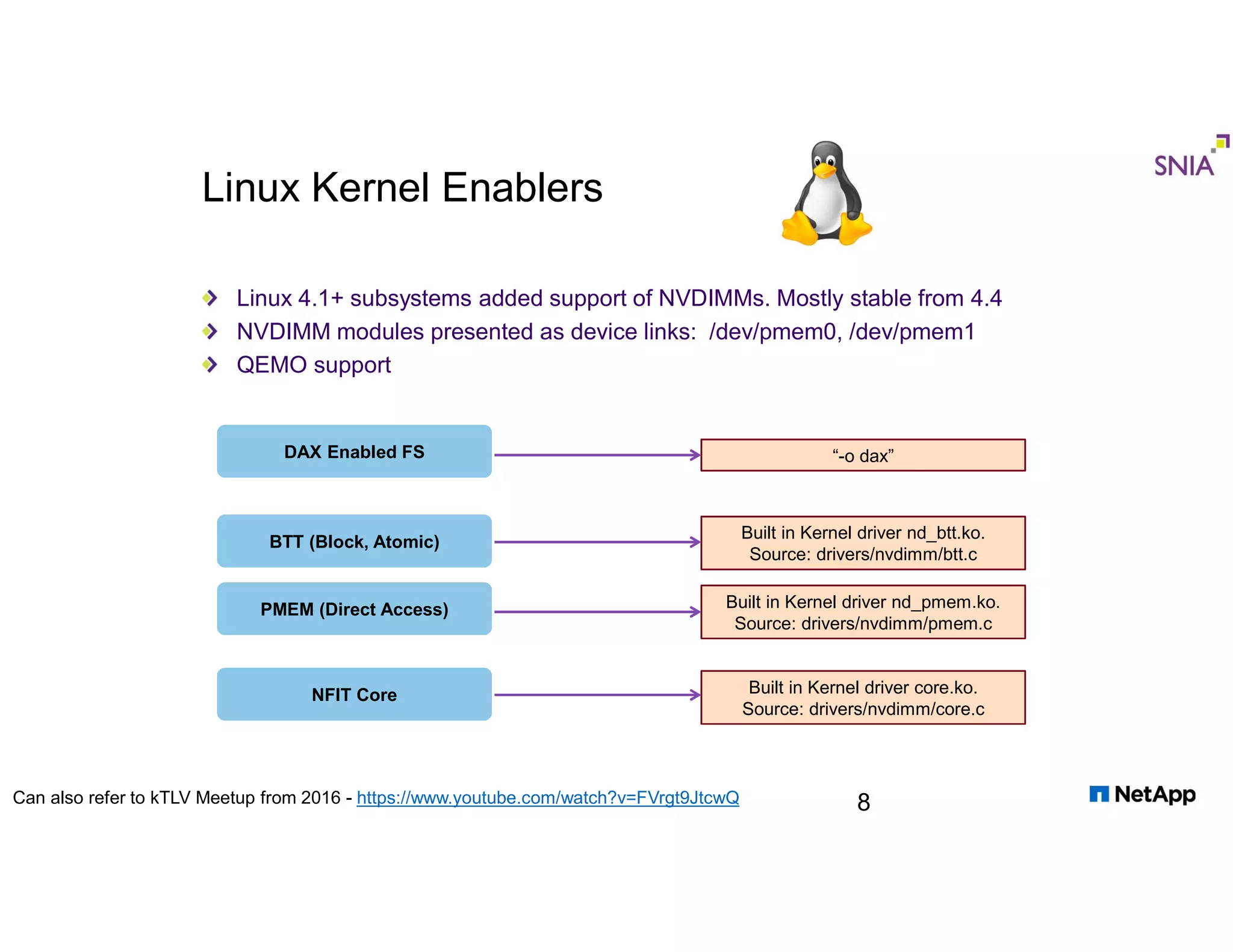

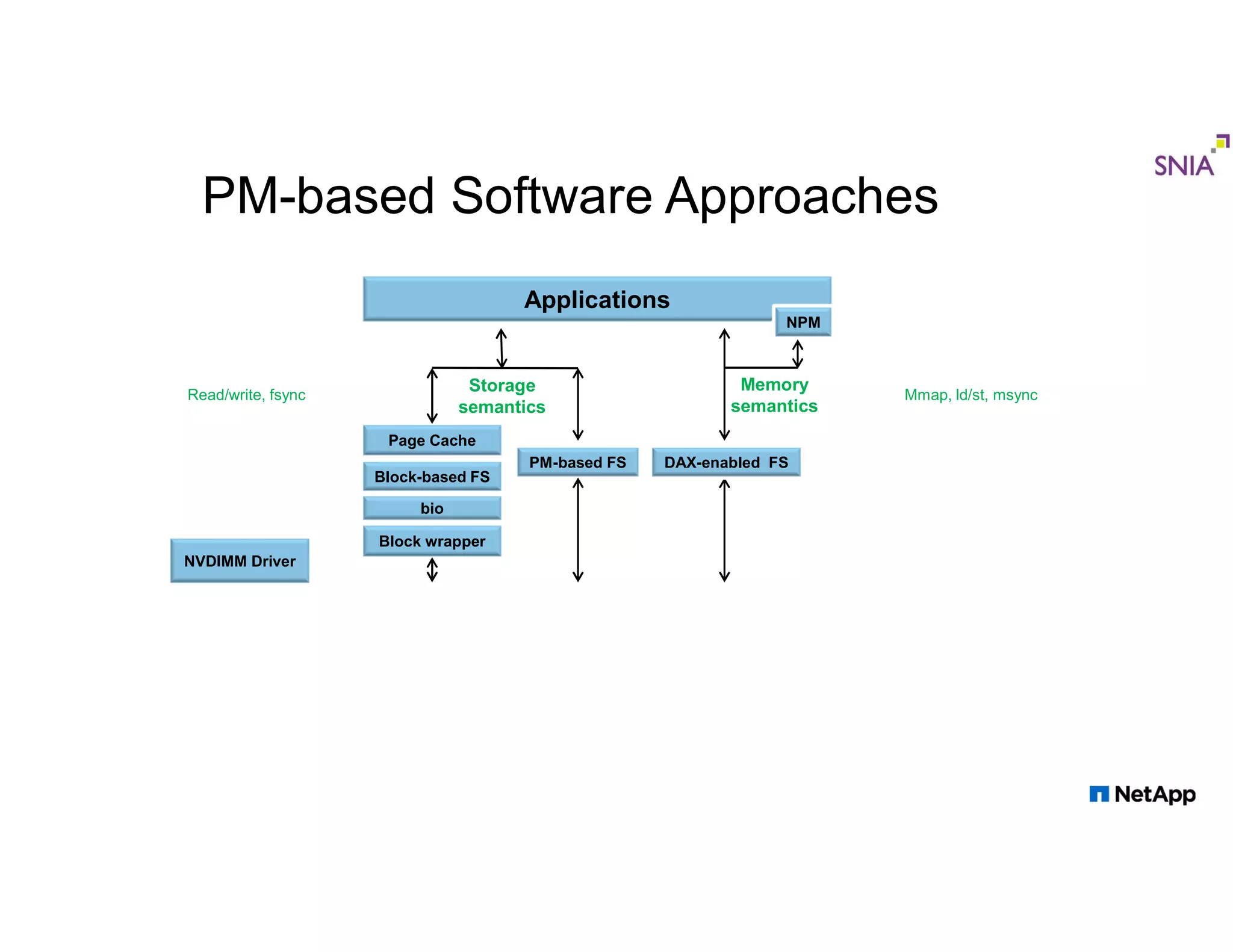

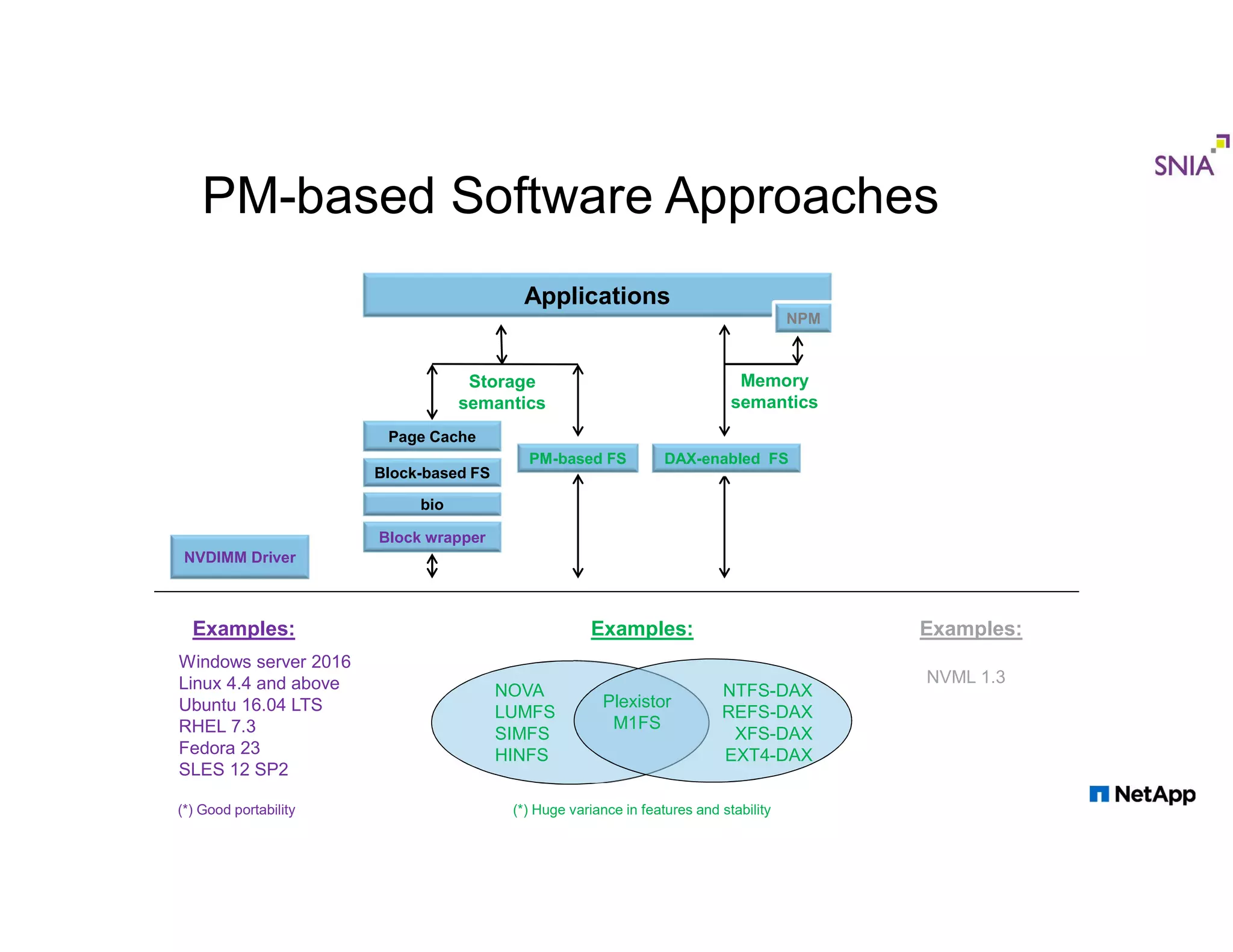

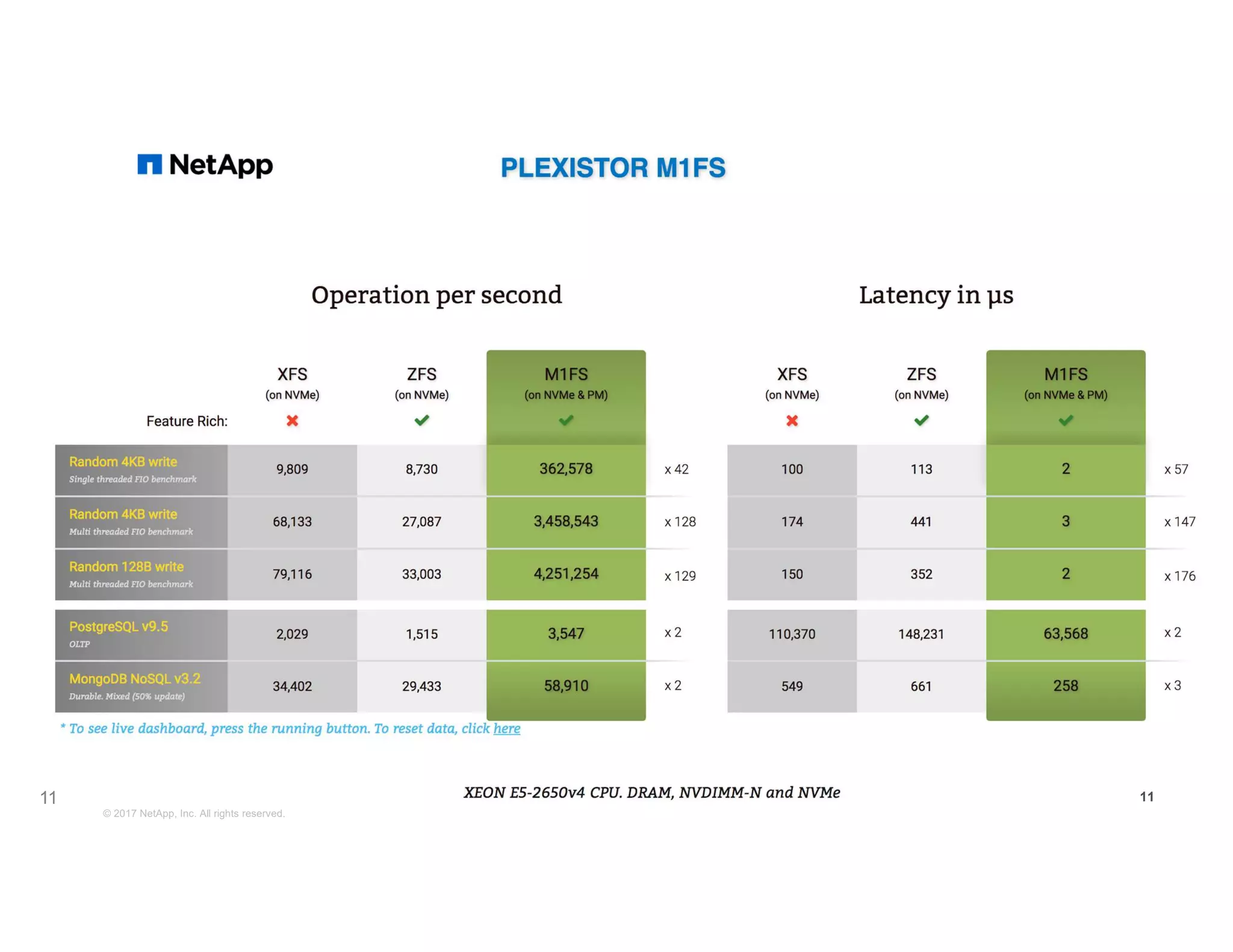

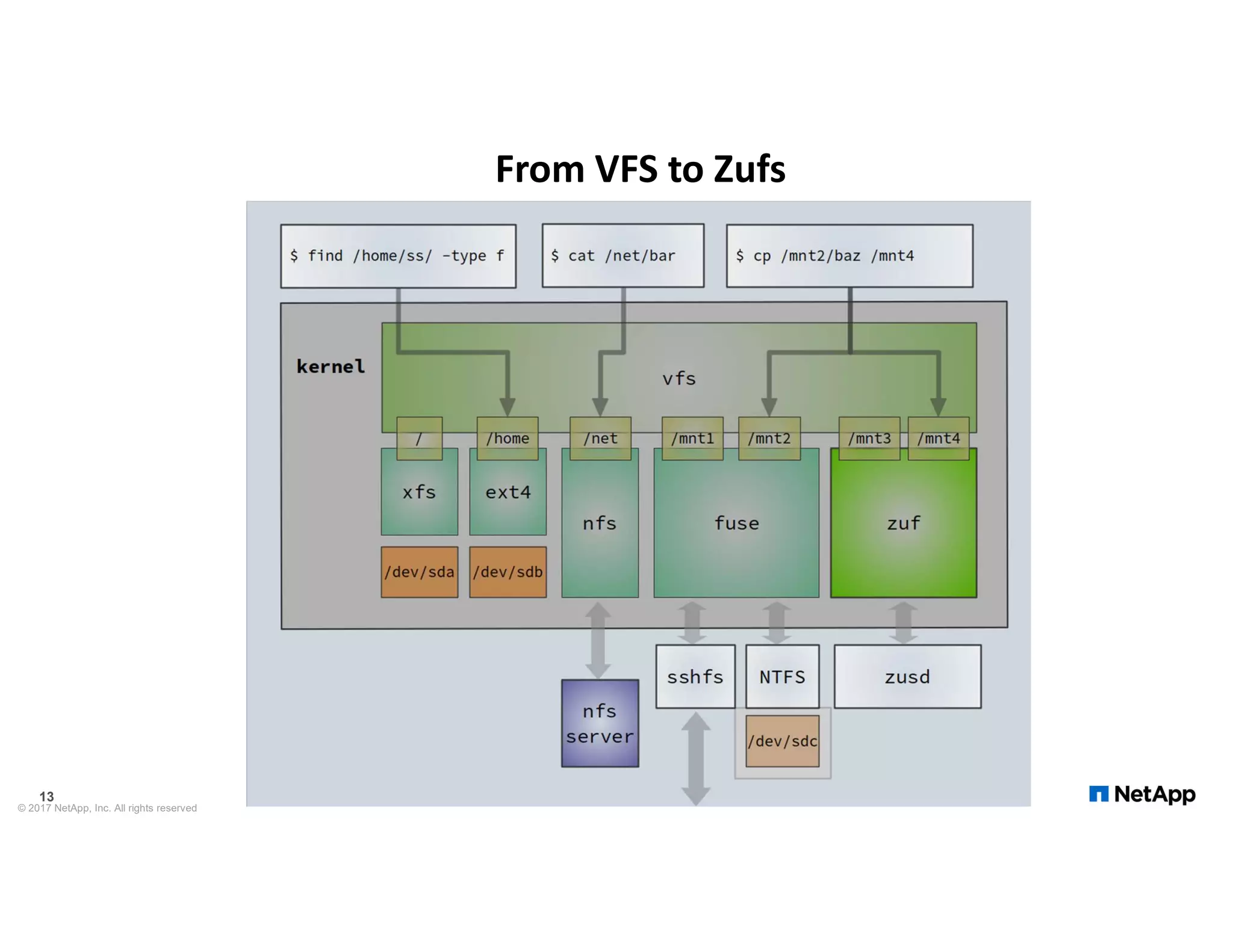

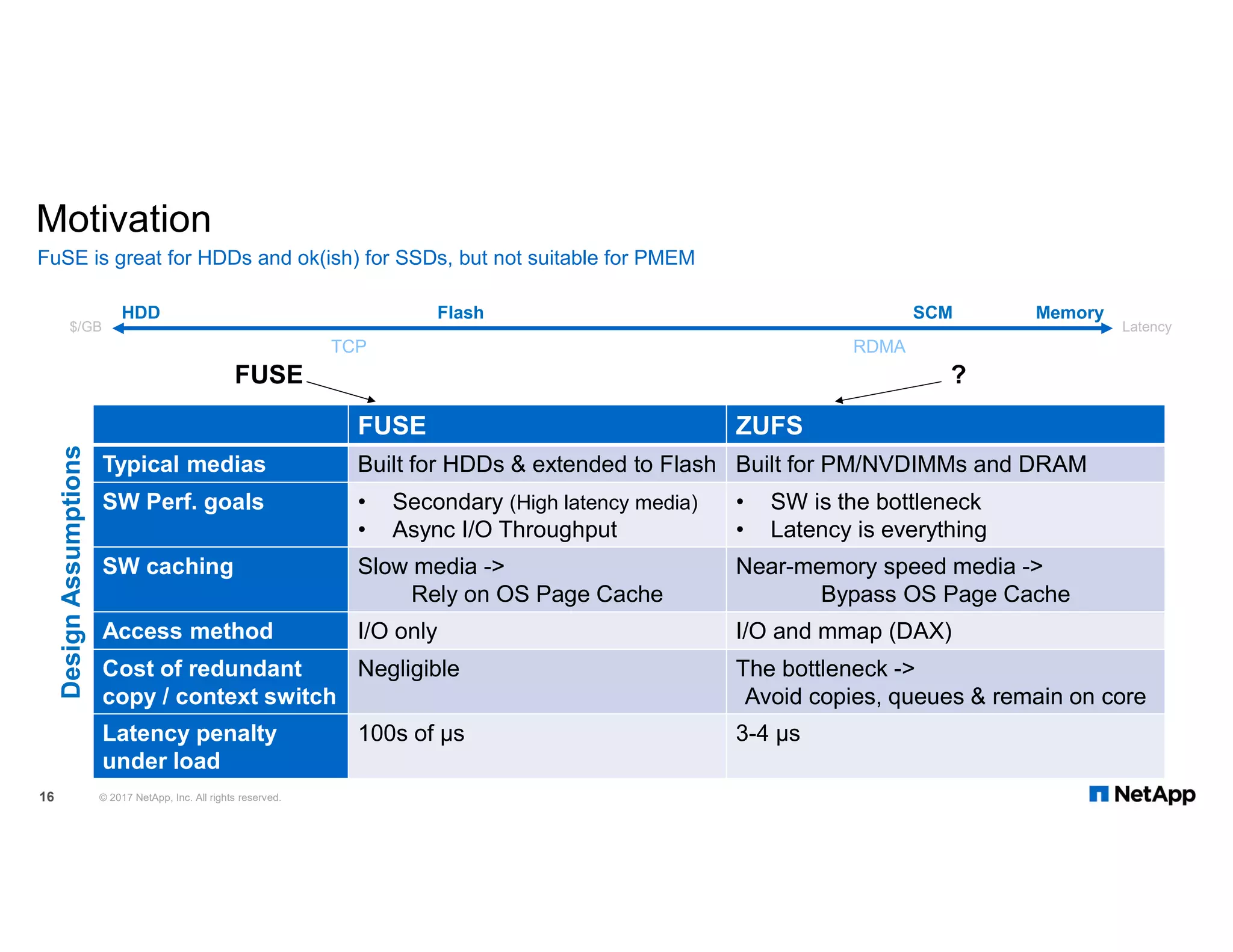

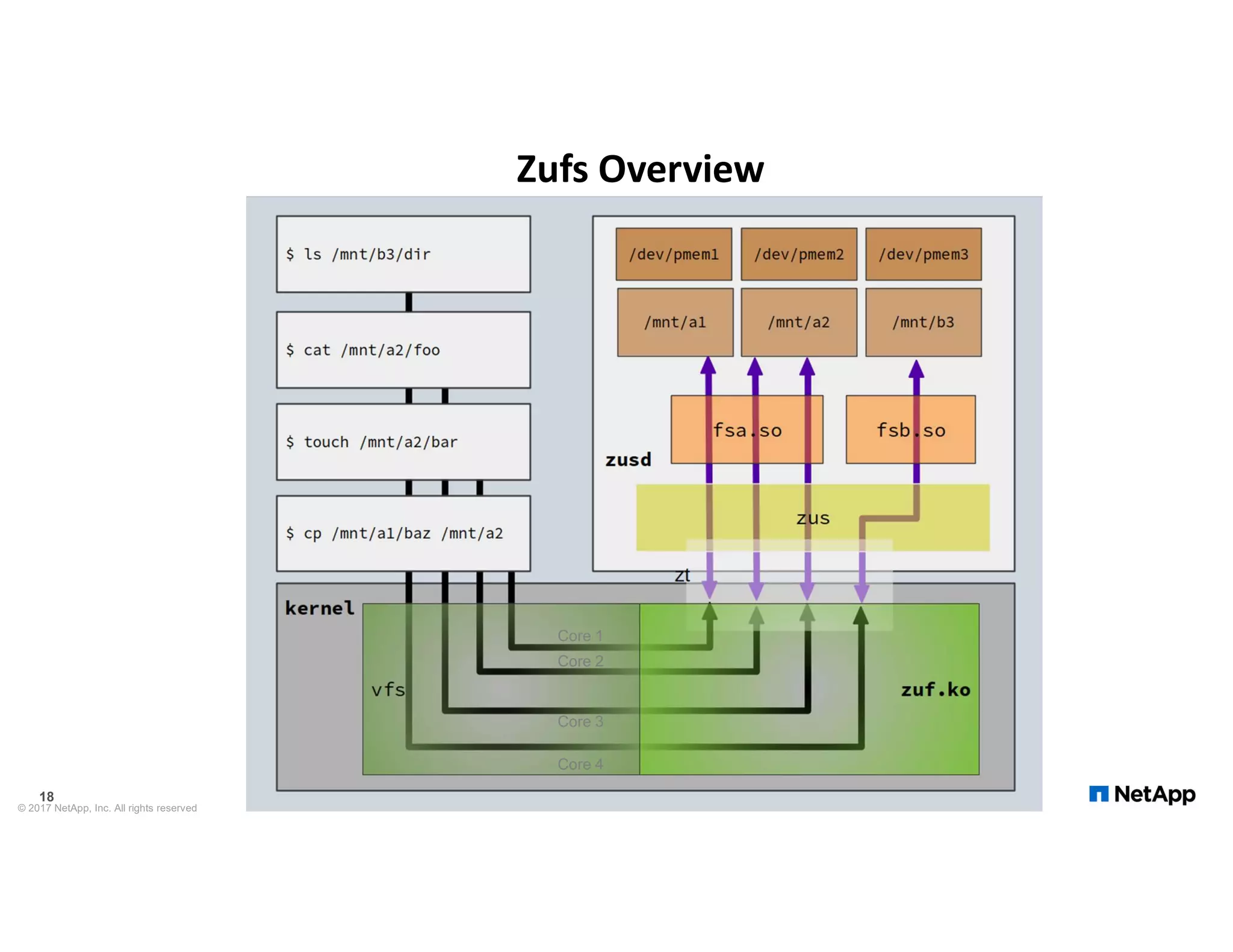

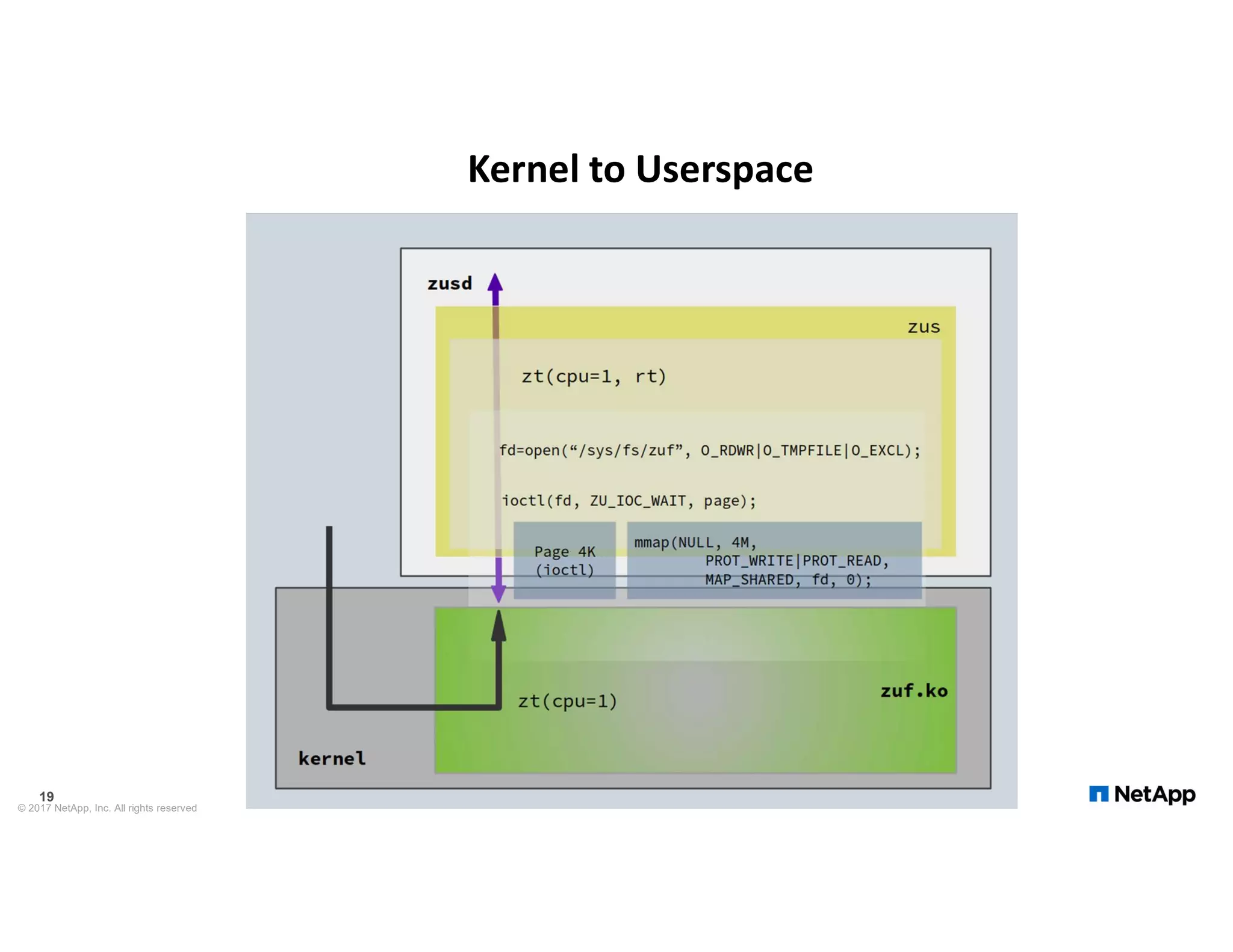

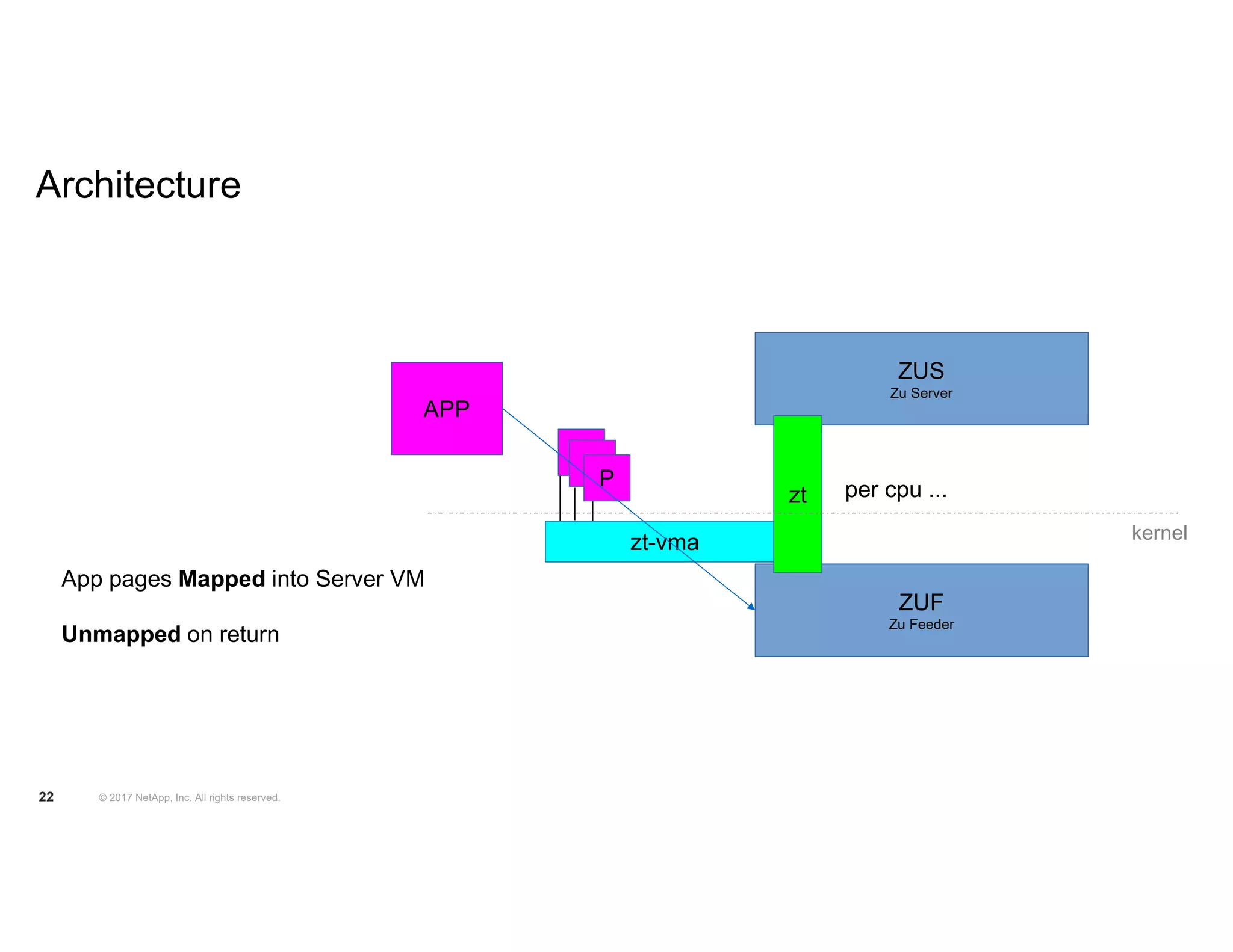

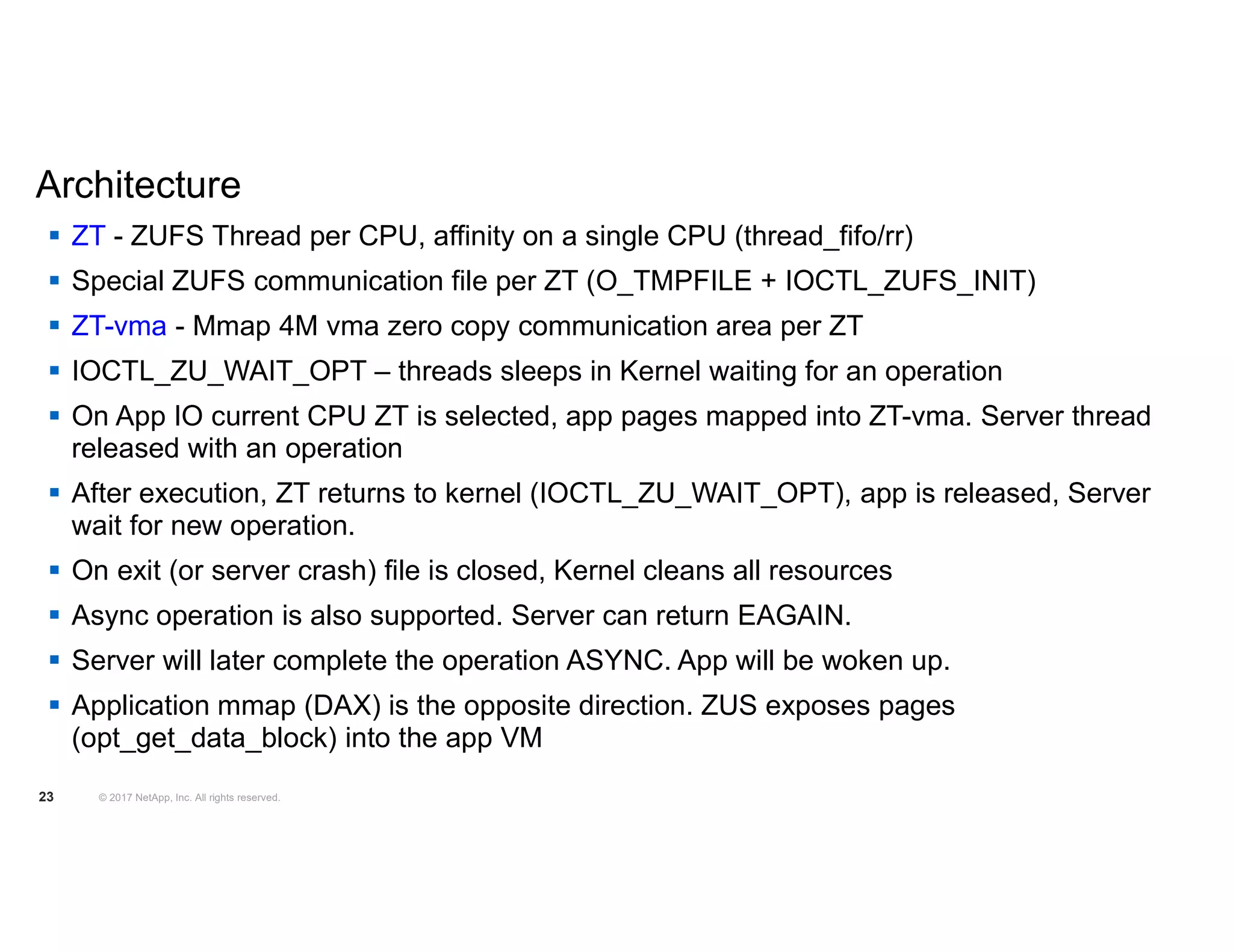

The document discusses the implications of persistent memory (PM) technologies for storage systems, highlighting the main advantage of PM-based filesystems: they combine the persistence of storage with the speed of memory. It covers advancements in the Linux kernel and emerging user-mode filesystems such as the Zero-copy User-mode File System (ZUFS), which aim to reduce latency and improve performance. It also covers the architectural optimizations needed to use PM effectively in applications.
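The following is a minimal sketch, not taken from the slides, of the load/store access model that lets PM-based filesystems pair persistence with memory speed. The application maps a file and updates it with ordinary memory writes instead of `write(2)` calls, then flushes the range. It uses only Python's standard `mmap` module. The helper name `store_persistent` and the temporary file are illustrative assumptions. On a DAX-capable filesystem backed by PM, the mapping reaches the persistent media directly. On an ordinary filesystem, the same code goes through the page cache.

```python
import mmap
import os
import tempfile

PAGE = mmap.PAGESIZE  # mapping granularity on this platform


def store_persistent(path: str, offset: int, data: bytes) -> None:
    """Hypothetical helper: write `data` into a file through a shared
    memory mapping, then flush the mapping so the update is durable."""
    with open(path, "r+b") as f:
        # Length 0 maps the whole file; ACCESS_WRITE gives a shared, writable view.
        with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_WRITE) as m:
            m[offset:offset + len(data)] = data  # plain memory stores, no write() call
            m.flush()  # msync(MS_SYNC) on POSIX; FlushViewOfFile on Windows


# mmap cannot map an empty file, so size the scratch file to one page first.
fd, path = tempfile.mkstemp()
os.ftruncate(fd, PAGE)
os.close(fd)

store_persistent(path, 0, b"hello, PM")

# Read back through the regular file API to confirm the stores reached the file.
with open(path, "rb") as f:
    on_disk = f.read(9)
os.remove(path)
print(on_disk)  # b'hello, PM'
```

The explicit flush is the important step. Stores into a shared mapping are not guaranteed to be durable until the range is synced, so the flush marks the point after which the update can be relied on following a crash.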

![Perf. Optimizations - 1: MMAP_LOCAL_CPU](https://image.slidesharecdn.com/pmandzufsktlvmeetup14nov2017-171115095650/75/Emerging-Persistent-Memory-Hardware-and-ZUFS-PM-based-File-Systems-in-User-Space-23-2048.jpg)

Perf. Optimizations - 1: MMAP_LOCAL_CPU (© 2017 NetApp, Inc. All rights reserved.) An mm patch allows a single-core TLB invalidate in the common case. Chart "ZUFS w/wo mm patch": latency [µs] vs. IOPS for ZUFS_unpatched_mm and ZUFS_patched_mm.
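The MMAP_LOCAL_CPU slide names an mm patch for single-core TLB invalidation but does not show the mechanism. A rough, hypothetical cost model may help. When a page mapping is torn down, every core that may have cached its translation must invalidate its TLB entry, and remote cores are normally reached with inter-processor interrupts (IPIs). If the kernel can rely on the mapping having been used only on the current core, a local invalidate is enough.

The function `shootdown_ipis` and its parameters are illustrative assumptions. This is not the actual patch or the Linux TLB-flush code. It also ignores details such as lazy-TLB mode and batched flushes.

```python
def shootdown_ipis(cpus_in_mm, current_cpu, local_cpu_only):
    """Toy count of inter-processor interrupts (IPIs) needed to invalidate one
    stale TLB entry after a page is unmapped. Hypothetical model, not kernel code.

    cpus_in_mm:     cores that may have cached translations for this address space
    current_cpu:    core performing the unmap; it always flushes its own TLB locally
    local_cpu_only: True if the mapping is known to be used only on current_cpu,
                    which is the premise of a single-core invalidate
    """
    if local_cpu_only:
        return 0  # local flush only, no cross-core shootdown
    # Every other core that may hold the entry must be interrupted and flush it.
    return len(set(cpus_in_mm) - {current_cpu})


# Compare shootdown fan-out for a server process whose threads ran on N cores.
for n_cores in (2, 8, 32):
    cpus = range(n_cores)
    print(n_cores, shootdown_ipis(cpus, 0, False), shootdown_ipis(cpus, 0, True))
# prints: "2 1 0", "8 7 0", "32 31 0" (one line each)
```

In this toy model, the baseline cost grows with the number of cores in the address space, while the local-only path stays constant. That is the intuition behind avoiding cross-core invalidation in the common case.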