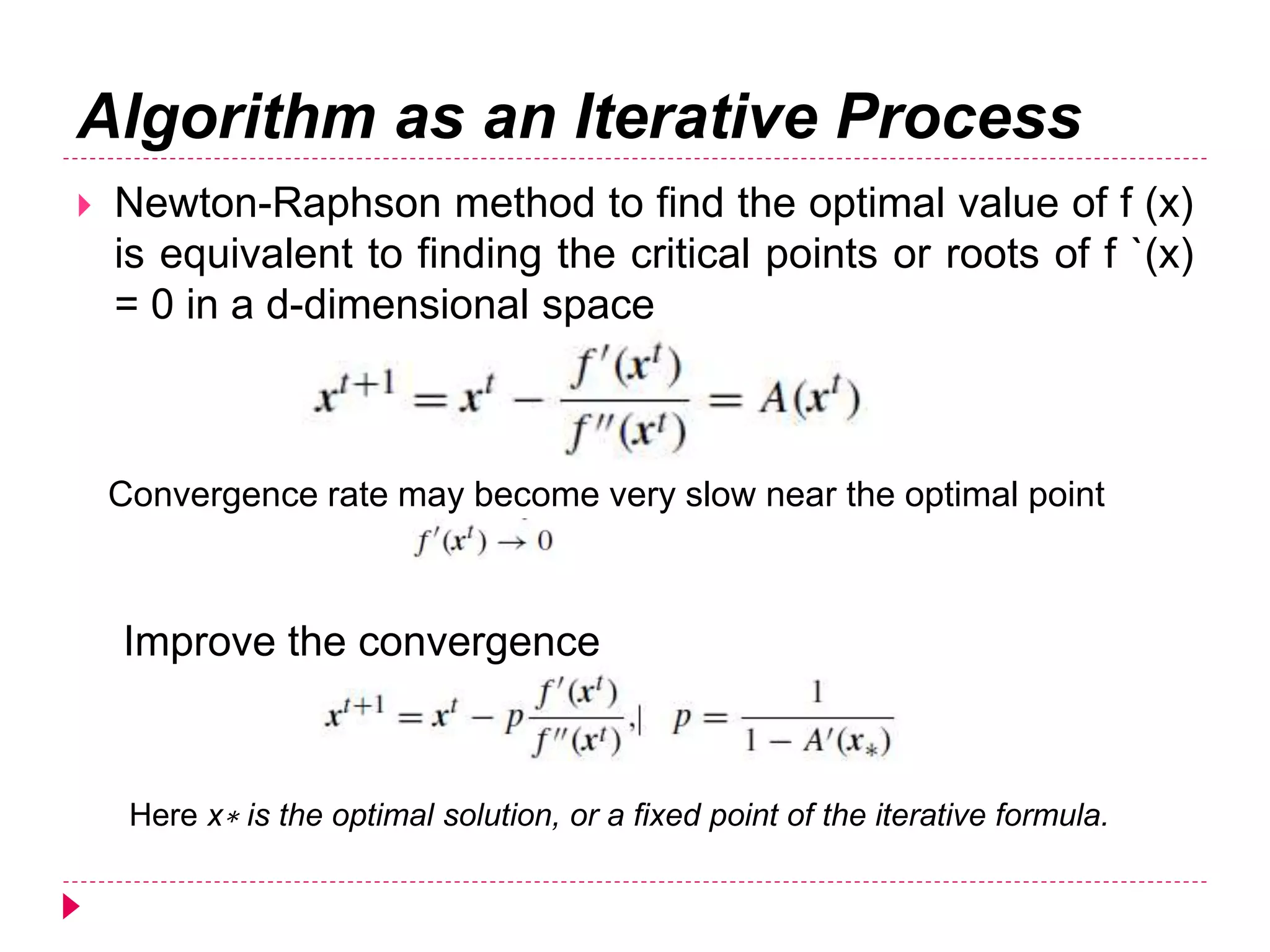

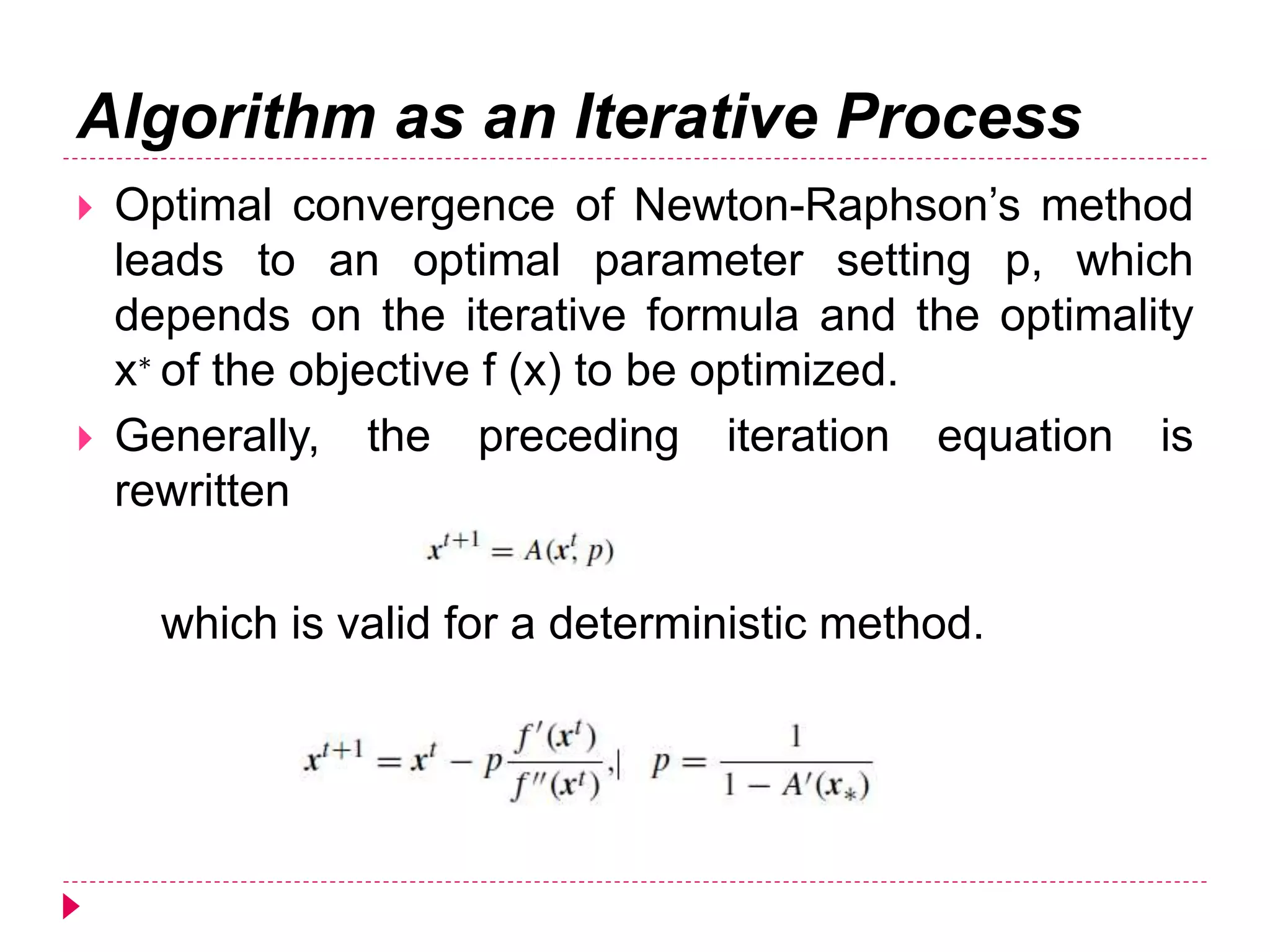

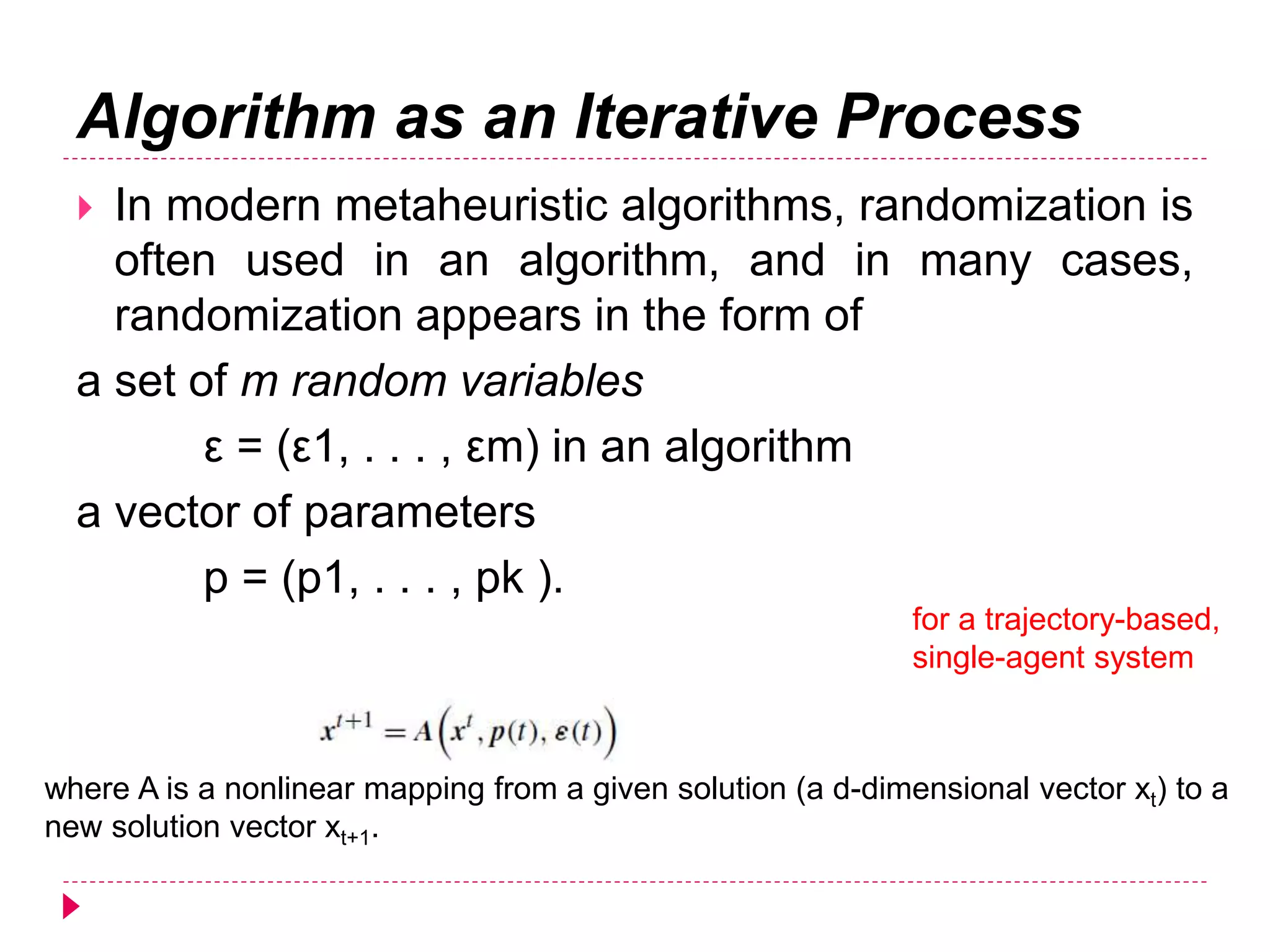

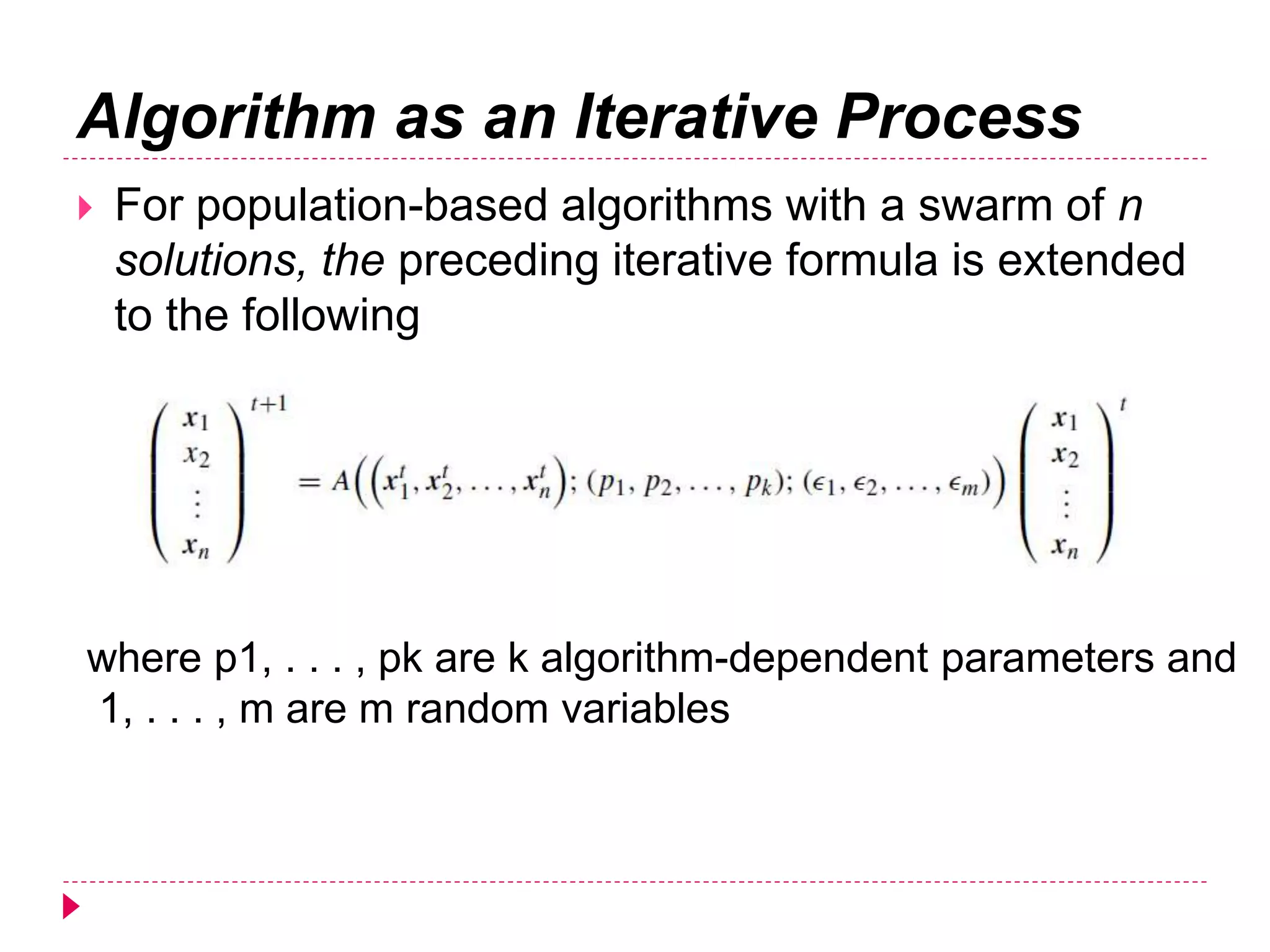

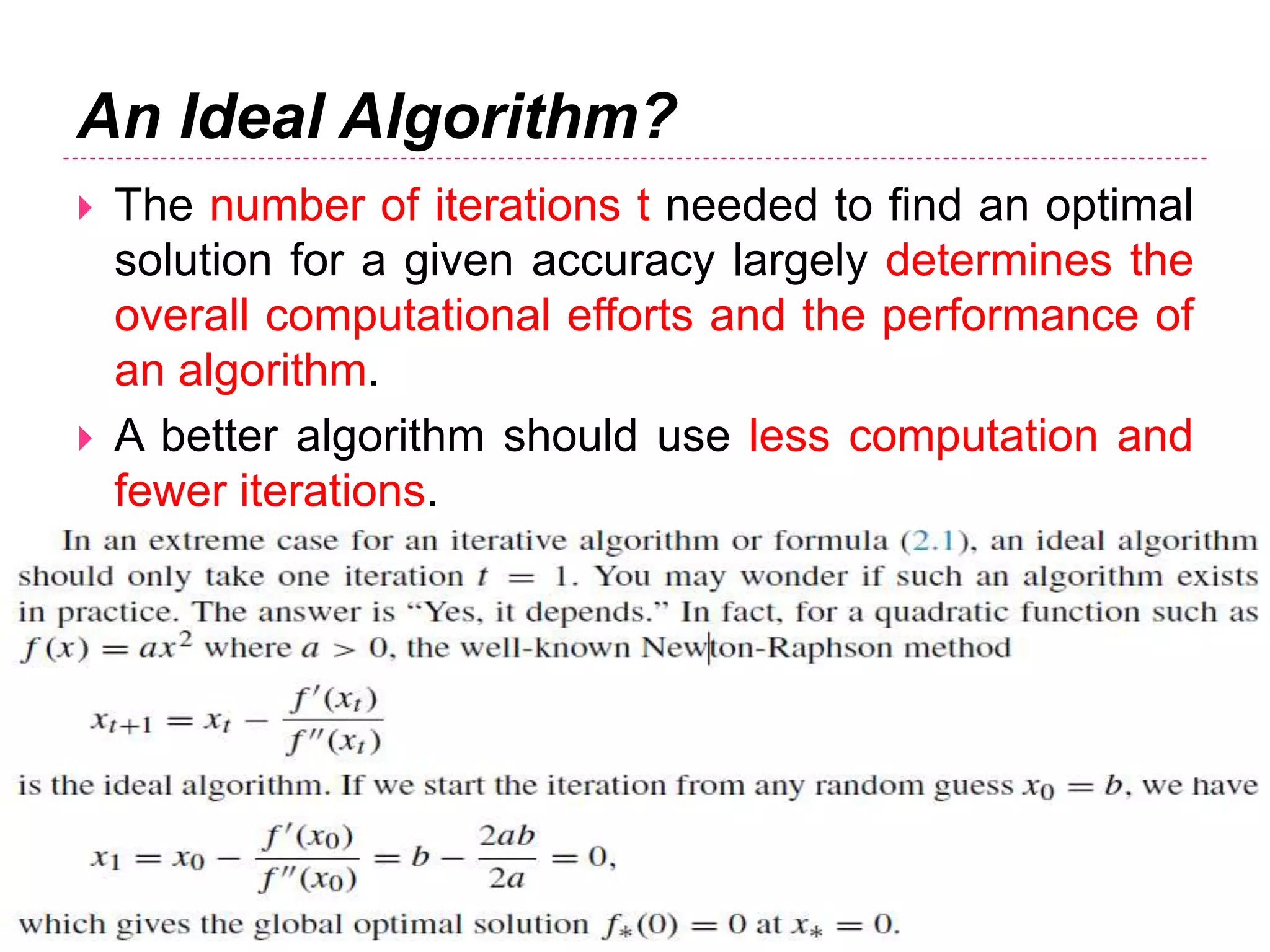

This document discusses four key concepts related to optimization algorithms:

1. Algorithms are iterative processes that aim to generate improved solutions over time.
2. Algorithms can be modeled as self-organizing systems with exploration and exploitation.
3. Exploration and exploitation are two conflicting components that require balancing.
4. Genetic algorithms use three main evolutionary operators: crossover, mutation, and selection.
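The genetic-algorithm operators named above (crossover, mutation, selection) can be sketched as a small iterative loop. The following is a minimal illustration under stated assumptions, not the document's own implementation. The bit-counting objective (often called OneMax), tournament selection, one-point crossover, bit-flip mutation, elitism, and every function name and parameter value are choices made here for illustration only. Selection plays the exploitation role by favoring fitter individuals, while mutation supplies exploration; the `rate` and `k` parameters are one simple way to shift the balance between the two.

```python
import random


def fitness(bits):
    """Toy objective (assumed for illustration): number of 1-bits."""
    return sum(bits)


def select(population, rng, k=3):
    """Tournament selection: the fittest of k randomly drawn individuals."""
    return max(rng.sample(population, k), key=fitness)


def crossover(a, b, rng):
    """One-point crossover: prefix taken from a, suffix taken from b."""
    point = rng.randrange(1, len(a))
    return a[:point] + b[point:]


def mutate(bits, rng, rate):
    """Flip each bit independently with probability `rate`."""
    return [1 - b if rng.random() < rate else b for b in bits]


def run_ga(n_bits=20, pop_size=30, generations=40, rate=0.02, seed=0):
    """Run the loop and return (best individual, best fitness per generation)."""
    rng = random.Random(seed)
    population = [
        [rng.randint(0, 1) for _ in range(n_bits)] for _ in range(pop_size)
    ]
    history = []
    for _ in range(generations):
        best = max(population, key=fitness)
        history.append(fitness(best))
        # Elitism: keep the current best unchanged so quality never regresses.
        next_population = [best]
        while len(next_population) < pop_size:
            parent_a = select(population, rng)
            parent_b = select(population, rng)
            child = crossover(parent_a, parent_b, rng)
            next_population.append(mutate(child, rng, rate))
        population = next_population
    best = max(population, key=fitness)
    history.append(fitness(best))
    return best, history


if __name__ == "__main__":
    best, history = run_ga()
    print("best fitness per generation:", history)
```

Because the best individual is carried over each generation, the recorded best fitness cannot decrease from one iteration to the next, which mirrors the first point about iterative improvement. Raising `rate` pushes the search toward exploration, while raising the tournament size `k` pushes it toward exploitation.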