4/2/2024

EMÜ322

Simulation Modeling and Analysis

Random Number & Random Variate

Generation

Banu Yuksel Ozkaya

Hacettepe University

Department of Industrial Engineering

1

Random Number Generators

• Random numbers are necessary ingredients in

the simulation of almost all discrete systems.

• A simulation of any system or process in which

there are inherently random components

requires a method of generating or obtaining

numbers that are random in some sense.

1

4/2/2024

Random Number Generators

• Example:

– Inter-arrival and service times in the single server

queuing system

– Travel times, loading times, weighing times in the

truck load system

– Newsday type and number of demands in each

newsday in the newsboy problem

Random Number Generators

• Generating Random Variates

– Uniform distribution on the interval [0 1], U(0,1)

– Random variates generated from the U(0,1)

distribution are called random numbers.

– Random variates from all other distributions

(normal, Poisson, gamma, binomial, geometric, ….)

can be obtained by transforming independent and

identically distributed random numbers

2

4/2/2024

Random Number Generators

• Properties of Random Numbers:

– A random number must be independently drawn

from a uniform distribution with pdf:

1, 0 x 1

f ( x) =

0, otherwise

1

1 x2

1

E ( R) = xdx = =

0 2 2

0

– Two important statistical properties:

• Uniformity

• Independence.

6

3

4/2/2024

Random Number Generators

• Mid-square method

– Start with a four-digit positive integer Z0 and find the square

of it to obtain an integer with up to eight digits. If necessary,

append zeros to the left to make it exactly 8 digits. Take the

middle four digits as the next four digit number, Z1 and etc.

i Zi Ui Zi2 i Zi Ui Zi2

0 7182 - 51581124 7 2176 0.2176 04734976

1 5811 0.5811 33767721 8 7349 0.7349 54007801

2 7677 0.7677 58936329 9 78 0.0078 00006084

3 9363 0.9363 87665769 10 60 0.0060 00003600

4 6657 0.6657 44315649 11 36 0.0036 00001296

5 3156 0.3156 09960336 12 12 0.0012 00000144

6 9603 0.9603 92217609 13 1 0.0001 00000001

7

4

4/2/2024

Random Number Generators

• Mid-square method

– One serious problem is that it has a strong

tendency to degenerate rapidly to zero and stay

there forever.

– A more fundamental objection to the mid-square

method is that it is not random, at all in the sense

of being unpredictable (This objection will apply to

all arithmetic generators)

Random Number Generators

• Generation of Pseudo-Random Numbers:

– Pseudo means false!!!

– False random numbers!!!

– If the method is known, the set of random

numbers can be replicated. Then, the numbers are

not truly random!

– The goal is to produce a sequence of numbers

between 0 and 1 that simulates, imitates the ideal

properties of uniform distribution and

independence as closely as possible.

10

5

4/2/2024

11

Random Number Generators

• Possible errors or departures from randomness

– The generated numbers might not be uniformly

distributed.

– The mean and/or the variance of the generated

numbers might be too high or too low.

– There might be dependence

• Autocorrelation between numbers

• Numbers successively higher or lower than adjacent

numbers

• Several numbers above the mean followed by several

numbers below the mean

12

6

4/2/2024

13

Random Number Generators

• A good arithmetic random number generator

should possess several properties:

– The numbers produced should appear to be

distributed uniformly on [0 1] (histogram) and

should not exhibit any correlation with each other.

– Fast and not requiring a lot of storage.

– Reproduce a given stream of random numbers

• Verification of the computer program

• Identical random numbers might be needed to compare

alternatives.

14

7

4/2/2024

Random Number Generators

• A good arithmetic random number generator

should possess several properties:

– Generators should be portable, e.g. to produce the

same sequence of random numbers on different

computers and compilers.

– Generators should have sufficiently long cycles

15

Random Number Generators

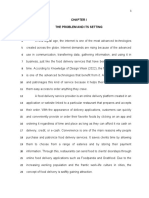

• Linear Congruential Generator (Lehmer 1951):

– A sequence of integers Z1,Z2,….. is defined by the

recursive formula

Z i +1 = (aZ i + c) mod m, i = 0,1,2,...

m: modulus, a: multiplier, c: increment, Z0: Seed or

starting value are all non-negative integers.

– What values can Zi‘s can take?

Zi

Ui = , i = 1,2,...

m

– What values can Ui‘s can take? 16

8

4/2/2024

17

Random Number Generators

• Linear Congruential Generator (Lehmer 1951):

– Z i +1 = (5Z i + 3) mod 16, i = 0,1,2,...

i Z i Ui i Zi Ui i Zi Ui

0 7 - 7 12 0.750 14 13 0.813

1 6 0.375 8 15 0.938 15 4 0.250

2 1 0.063 9 14 0.875 16 7 0.438

3 8 0.500 10 9 0.563 17 6 0.375

4 11 0.688 11 0 0.000 18 1 0.063

5 10 0.625 12 3 0.188 19 8 0.500

6 5 0.313 13 2 0.125 20 11 0.688

18

9

4/2/2024

19

Random Number Generators

• Linear Congruential Generator (Lehmer 1951):

– The looping behavior is obvious when Zi takes on a

value that it has had previously.

– The cycle repeats itself endlessly.

– The length of a cycle is the period of a generator.

– What is the maximum period of LCG?

20

10

4/2/2024

Random Number Generators

• Linear Congruential Generator (Lehmer 1951):

– If the period is m, LCG is said to have full period.

– Large scale simulations can use millions of random

numbers and hence, it is desirable to have LCG’s

with long periods.

– LCG has full period if and only if

• The only positive integer that exactly divides both m and

c is 1

• If q is a prime number that divides m, then q divides a-1.

• If 4 divides m, then 4 divides a-1.

21

Random Number Generators

• Linear Congruential Generator (Lehmer 1951):

1 1200

0.9

1000

0.8

0.7

800

0.6

i+1

0.5 600

U

0.4

400

0.3

0.2

200

0.1

0 0

0 0.1 0.2 0.3 0.4 0.5 0.6 0.7 0.8 0.9 1 0 0.1 0.2 0.3 0.4 0.5 0.6 0.7 0.8 0.9 1

Ui

a=16807, m=231-1, c=0, Z0=123457

22

11

4/2/2024

Random Number Generators

• Linear Congruential Generator (Lehmer 1951):

– Mixed Generator (c>0)

• Able to obtain full period

• Parameter choice➔ m=2b, c is odd, a-1 is divisible by 4.

Z0 is any integer between 0 and m-1

23

Random Number Generators

• Tests for Random Numbers

– The most direct way to test a generator is to use it

to generate some Ui’s, which are then examined

statistically to see how closely they resemble IID

U(0,1) random variates.

– The desirable properties are

• Uniformity

• Independence

24

12

4/2/2024

Random Number Generators

• Tests for Random Numbers

– Frequency Tests: Compare the distribution of the

set of numbers generated to a uniform distribution.

• Kolmogrov-Smirnov

• Chi-square test

– Autocorrelation Tests: Test the correlation

between numbers and compares the sample

correlation to the expected correlation (which is

zero)

25

Random Number Generators

• Tests for Uniformity

H0: Ri ~U[0,1]

H1: Ri ~U[0,1]

with significance level a=P(reject H0 | H0 is true)

– Rejecting H0 at a means that the numbers

generated do not follow a uniform distribution.

– Failing to reject H0 means that evidence of non-

uniformity has not been detected. This does not

imply that further testing for uniformity is

unnecessary.

26

13

4/2/2024

Random Number Generators

• Tests for Independence

H0: Ri ~Independently

H1: Ri ~Independently

with significance level a=P(reject H0 | H0 is true)

– Rejecting H0 at a means that the numbers

generated are not independent.

– Failing to reject H0 means that evidence of

dependence has not been detected by the test.

This does not imply that further testing for

independence is unnecessary.

27

tests

28

14

4/2/2024

Random Number Generators

• Tests for Uniformity

• Chi-Square Goodness of Fit Test:

– Suppose you have a sample of n generated

numbers between 0 and 1, U1,U2,….Un.

– n numbers are arranged in a frequency histogram

having k bins or class intervals.

– Let Oi be the frequency in the ith class interval and

Ei be the expected frequency in the ith class

interval.

29

30

15

4/2/2024

Random Number Generators

• Chi-Square Goodness of Fit Test:

– If the class intervals (n=10) are of equal length

(0.1), what is Ei ?

– If U1,U2,….Un are uniformly distributed on [0,1], the

test statistics c02 has a Chi-Square distribution with

k-1 degrees of freedom if n is large enough.

k

c 02 =

(Oi − Ei )2

i =1 Ei

31

Random Number Generators

• Chi-Square Goodness of Fit Test:

– H0: Ri ~U[0,1]

H1: Ri ~U[0,1]

– Reject H0 if c02 > c2a,k-1 where c2a,k-1 is the (1-a)th

percentile of a Chi-Square distribution with k-1

degrees of freedom.

32

16

4/2/2024

33

Random Number Generators

• Chi-Square Goodness of Fit Test:

34

17

4/2/2024

Random Number Generators

• Chi-Square Goodness of Fit Test:

– Example: n=100 numbers are generated.

10 intervals: [0,0.1),[0.1,0.2),…..[0.9,1]

Interval Oi Ei (Oi –Ei)2/Ei Interval Oi Ei (Oi –Ei)2/Ei

1 8 10 0.4 6 12 10 0.4

2 8 10 0.4 7 10 10 0.0

3 10 10 0.0 8 14 10 1.6

4 9 10 0.1 9 10 10 0.0

5 8 10 0.4 10 11 10 0.1

c02=3.4 < c20.05,9=16.9➔ fail to reject H0.

35

Random Number Generators

36

18

4/2/2024

Random Number Generators

• Chi-Square Goodness of Fit Test:

– How do we determine the class intervals?

• If the expected frequencies are too small, the test

statistic will not reflect the departure of observed

frequencies from expected frequencies.

• The minimum value of 5 are widely used as minimal

value of expected frequencies.

• If an expected frequency is too small, it can be

combined with the expected frequency in an adjacent

class interval.

• Class intervals are not required to be of equal width.

37

cent interval

38

19

4/2/2024

Random Number Generators

• Tests for Uniformity

• Kolmogrov-Smirnov Test (K-S test):

– The test compares the continuous cumulative

distribution function, F(x) of the uniform

distribution with the empirical cumulative

distribution function, Sn(x) of the sample of n

observations.

39

Random Number Generators

• Kolmogrov-Smirnov Test (K-S test):

– F(x)=x for 0≤x ≤1.

– The empirical cumulative distribution function,

Sn(x) is defined by

number of U1 , U 2 ,...., U n which are x

S n ( x) =

n

– As n becomes larger, Sn(x) should become a better

approximation to F(x) provided that the null

hypothesis is true.

40

20

4/2/2024

41

Random Number Generators

• Kolmogrov-Smirnov Test (K-S test):

– The K-S Test is based on the largest absolute

deviation between F(x) and Sn(x) over the range of

the random variable of interest.

D = max F ( x) − S n ( x)

42

21

4/2/2024

Random Number Generators

• Kolmogrov-Smirnov Test (K-S test):

– Rank the data from smallest to largest.

U(1) ≤ U(2) ≤ ……. ≤ U(n)

– Compute

i i − 1

D + = max − U (i ) , D − = maxU (i ) −

1i n n

1i n

n

– Compute D=max(D+,D-)

– If D>Da,n , reject H0. If D≤Da,n , conclude that no

difference has been detected between the true distribution

of U1,U2… Un and the uniform distribution.

43

44

22

4/2/2024

K-S critical value at a significance level

and n sample size

45

Random Number Generators

46

23

4/2/2024

Random Number Generators

• Kolmogrov-Smirnov Test (K-S test):

– Example: Five numbers 0.44, 0.81, 0.14, 0.05, 0.93

are generated.

U(i) 0.05 0.14 0.44 0.81 0.93

i/n 0.20 0.40 0.60 0.80 1.00

i/n-U(i) 0.15 0.26 0.16 - 0.07

U(i) –(i-1)/n 0.05 - 0.04 0.21 0.13

– D+ is the largest deviation of Sn(x) above F(x) – 0.26.

– D- is the largest deviation of Sn(x) below F(x) – 0.21.

– D=0.26< D0.05,5=0.565 ➔ fail to reject H0.

47

48

24

4/2/2024

Random Number Generators

• Comparison of Chi-Square Goodness of Fit Test

and Kolmogrov-Smirnov Test:

– Both tests are acceptable for testing the uniformity

of a sample of data provided that the sample size is

large.

– K-S test is more powerful of the two and is

recommended.

– K-S Test can be applied to smaller sample sizes.

– Chi-square goodness of fit tests are valid only for

large samples, say N≥50.

49

Random Number Generators

Test for Independence

50

25

4/2/2024

Random Number Generators

• Test for Independence

• Autocorrelation Test:

Autocorrelation Function for C6

(with 5% significance limits for the autocorrelations)

1.0

0.8

0.6

0.4

Autocorrelation

0.2

0.0

-0.2

-0.4

-0.6

-0.8

-1.0

1 2 3 4 5 6 7 8

Lag

51

52

26

4/2/2024

Random Number Generators

• Test for Independence

• Autocorrelation Test: Linear congruential generator

with a=16807, m=231-1, c=0, Z0=123457

Autocorrelation Function for C1

(with 5% significance limits for the autocorrelations)

1.0

0.8

0.6

0.4

Autocorrelation

0.2

0.0

-0.2

-0.4

-0.6

-0.8

-1.0

1 10 20 30 40 50 60 70 80 90 100 110 120 130 140

Lag 53

Random Number Generators

• Test for Independence

• Runs Test - Several versions

• Runs Test 1

– Examine the Ui sequence for unbroken sequences of

maximal length in which the Ui’s increase

monotonically (such a sequence is called a run-up).

– Consider the following sequence:

0.86, 0.11, 0.23, 0.03, 0.13, 0.06, 0.55, 0.64, 0.87, 0.10

Runs up of length 1 – 2 – 2 – 4 – 1

54

27

4/2/2024

55

56

28

4/2/2024

Random Number Generators

• Runs Test - Several versions:

• Runs Test 2

– Define

number of runs up of length i i = 1,2,3,4,5

ri =

number of runs up of length 6 i=6

– Runs up of length 1 – 2 – 2 – 4 – 1➔ r1=2, r2=2, r3=0,

r4=1, r5=0, r6=0

57

58

29

4/2/2024

Random Number Generators

• Runs Test - Several versions

• Runs Test 3

0.86, 0.11, 0.23, 0.03, 0.13, 0.06, 0.55, 0.64, 0.87, 0.10

Runs above and below K = 0.358

The observed number of runs = 4

The expected number of runs = 5.8

4 observations above K, 6 below

P-value = 0.206

Linear congruential generator with a=16807, m=231-1, c=0, Z0=123457

Runs above and below K = 0.501290

The observed number of runs = 4979

The expected number of runs = 5000.91

5021 observations above K, 4979 below

P-value = 0.661

59

__

x

60

30

4/2/2024

61

Random-Variate Generation

• Generation of a random-variate refers to the

activity of obtaining an observation on a

random variable from the desired distribution.

• It is now assumed that a distribution has been

specified somehow (distribution fitting) and we

address the issue of how we can generate the

random-variates with this distribution in order

to run the simulation model.

62

31

4/2/2024

Random-Variate Generation

• Example:

– Inter-arrival and service time in the single-server

queuing system

– Inter-arrival, loading, weighing and travel times in

the truck-load system

were needed for simulation of the corresponding

system.

63

Random-Variate Generation

• The basic ingredient needed for every method

of generating random variates from any

distribution or random process is a source of

independent and identically distributed U(0,1)

random variates (random numbers).

• Assume that a good source of random numbers

is available for use.

64

32

4/2/2024

Random-Variate Generation

• Factors to choose an algorithm to use:

– Exactness:

• Result in random variates with exactly the desired

distribution (within the limits of the machine accuracy)

– Efficiency:

• Use less storage space and execution time.

– Complexity:

• Overall complexity of the algorithm such as the

conceptual and implementation factors.

– Robustness:

• Efficient for all parameter values

65

Random-Variate Generation

• General Approaches:

– Inverse Transform

– Composition

– Convolution

– Acceptance-Rejection

66

33

4/2/2024

Random-Variate Generation

• Inverse-Transform Technique: Algorithm for

generating a continuous random-variate X

having distribution function F() is as follows:

1. Generate U~ U(0,1).

2. Compute X= F-1(U) (X s.t. F(X)=U).

F(x)

U

X

67

68

34

4/2/2024

Random-Variate Generation

• Inverse-Transform Technique:

– Example: How to generate an exponential random-

variate with parameter l?

1 − e − lx x0

F (x ) =

0 o.w.

1. Generate U~ U(0,1).

2. Compute X s.t. U=F(X)=1-e-lX ➔ X=-ln(1-U)/l.

(or X= -ln(U)/l)

69

70

35

4/2/2024

Random-Variate Generation

• Inverse-Transform Technique:

– Example: Generate 10000 exponential random

variates with l=2.

1200 3500

3000

1000

2500

800

2000

Frequency

600

1500

400

1000

200

500

0 0

0 0.1 0.2 0.3 0.4 0.5 0.6 0.7 0.8 0.9 1 0 1 2 3 4 5 6

X

– mean=0.5032, variance=0.2540 71

Random-Variate Generation

• Inverse-Transform Technique: Why does the

Inverse Transform Technique work?

Exponential density (l=2) Exponential distribution function (l=2)

2 1

1.8 0.9

1.6 0.8

1.4 0.7

1.2 0.6

F(x)

f(x)

1 0.5

0.8 0.4

0.6 0.3

0.4 0.2

0.2 0.1

0 0

0 0.5 1 1.5 2 2.5 3 3.5 4 0 0.5 1 1.5 2 2.5 3 3.5 4

x x

72

36

4/2/2024

Random-Variate Generation

• Example: Consider a random variable X that

has the following probability density function:

x 0 x 1

f ( x ) = 2 − x 1 x 2

0

o.w.

How do you generate this random variable by

inverse-transform method?

73

f(x)

x

74

37

4/2/2024

75

76

38

4/2/2024

Random-Variate Generation

• Inverse-Transform Method: Algorithm for

generating a discrete random-variate X taking

values x1,x2,…. where x1<x2<… and having

distribution function F() is as follows:

1. Generate U~ U(0,1).

2. Determine the smallest positive integer i such that

U≤ F(xi) and return X= xi.

77

78

39

4/2/2024

Random-Variate Generation

• Inverse-Transform Method:

1

X

0 X X

79

Random-Variate Generation

• Inverse-Transform Method:

• The value X returned by this technique has the

desired distribution F.

– i=1 ➔ X=x1 ↔ U ≤ F(x1) = p(x1)

– i≥2 ➔ X=xi ↔ F(xi-1) < U ≤ F(xi) →

P(X=xi)=P(F(xi-1) < U ≤ F(xi))= F(xi) - F(xi-1)= p(xi)

80

40

4/2/2024

(Slide #54)

81

Random-Variate Generation

• Inverse-Transform Method:

– Example: Generate 10000 geometric random

variates with p=1/5.

1200 4000

3500

1000

3000

800

2500

Frequency

600 2000

1500

400

1000

200

500

0 0

0 0.1 0.2 0.3 0.4 0.5 0.6 0.7 0.8 0.9 1 0 5 10 15 20 25 30 35 40 45 50

X

– mean=5.0280, variance=20.3314 82

41

4/2/2024

Random-Variate Generation

• Inverse-Transform Method:

– Example: In an inventory system, the demand sizes

are random taking on the values 1,2,3, and 4 with

respective probabilities 1/6, 1/3, 1/3 and 1/6.

83

F(x)

x

84

42

4/2/2024

Random-Variate Generation

• Inverse-Transform Method:

– Example:

1200 3500

3000

1000

2500

800

2000

600

1500

400

1000

200

500

0 0

0 0.1 0.2 0.3 0.4 0.5 0.6 0.7 0.8 0.9 1 1 2 3 4

– mean=2.5035, variance=0.9219

85

Random-Variate Generation

• Advantages of the Inverse Transform Method

1. Both valid to generate continuous and discrete

random variables➔ valid to generate mixed random

variables

X = min x : F ( x ) U

86

43

4/2/2024

Random-Variate Generation

• Advantages of the Inverse Transform Method

2. Can be used to generate correlated random

variables

• Positive correlation

• Negative correlation.

87

88

44

4/2/2024

Random-Variate Generation

• Disadvantages of the Inverse Transform

Method

– The need to calculate F-1(U) or F(U).

• Normal, gamma, beta distribution

– Usually not the fastest way to generate a random

variate.

89

Random-Variate Generation

• Convolution Method:

– A random variable X can be expressed as a sum of other

random variables that are independent and identically

distributed.

X = Y1 + Y2 + ....Ym

• Gamma (Erlang) random variable

• Binomial random variable

• Negative Binomial random variable

– X is said to be a m-fold convolution of the distribution of

Yj’s.

– Rather than generating X directly, generate m Y’s and

then sum them up.

90

45

4/2/2024

91

Random-Variate Generation

• Composition Method:

– When the distribution function F from which we

wish to generate can be expressed as a convex

combination of other distribution functions, the

composition technique works.

– Suppose we assume that for all x, F(x) can be written

as:

F ( x ) = p j F j ( x)

j =1

where Spj=1. Then,

f ( x ) = p j f j ( x)

j =1

92

46

4/2/2024

93

(Slide #61)

94

47

4/2/2024

Random-Variate Generation

• Composition Method:

1. Generate a positive random integer J, such that

P(J = j ) = p j

for j = 1,2,.....

➔ Select the distribution to generate the random

variate

2. Return X with distribution function Fj or density

function fj

➔ Generate the random variate from the selected

distribution function

95

Random-Variate Generation

• Composition Method:

• Example: The double exponential (or Laplace)

distribution has density as follows:

f (x ) = 0.5e x I ( −,0) ( x) + 0.5e − x I [0, ) ( x)

where IA is the indicator function of the set A,

defined by:

1 x A

I A ( x) =

0 o.w.

96

48

4/2/2024

Random-Variate Generation

• Composition Method:

• Example:

– Generate U1,U2.

– If U1<0.5, X=ln(U2)

– If U1>0.5, X=-ln(U2)

97

98

49

4/2/2024

99

100

50

4/2/2024

101

Random-Variate Generation

• Composition Method:

• Example: For 0<a<1, the right-trapezoidal

distribution has density as follows:

a + 2(1 − a) x 0 x 1

f (x ) =

0 o.w.

Then f(x) can be written as:

f ( x ) = aI[ 0,1] ( x) + (1 − a )2 xI [ 0,1] ( x)

102

51

4/2/2024

Random-Variate Generation

• Composition Method:

• Example:

– Generate U1,U2.

– If U1<=a, X=U2

– If U1>a, X=sqrt(U2)

103

104

52

4/2/2024

105

106

53

4/2/2024

Random-Variate Generation

• Acceptance-Rejection Method:

– Other three methods are direct in the sense that

they deal directly with the distribution or random

variable desired.

– Acceptance-rejection region is useful when direct

methods fail or are inefficient.

– Aim is to generate a continuous random variable X

with distribution function F and density f.

107

108

54

4/2/2024

Random-Variate Generation

• Acceptance-Rejection Method:

– Specify a function t, which is called the majorizing function

such that t(x)>=f(x) for all x

109

110

55

4/2/2024

Random-Variate Generation

• Acceptance-Rejection Method:

– For the majorizing function such that t(x)>=f(x) for

all x

c = t ( x)dx f ( x)dx = 1

− −

– It is not necessary that t(x) is a density function

but r(x)=t(x)/c is a probability density function.

– Let Y be a random variable with density r.

111

Random-Variate Generation

• Acceptance-Rejection Method:

1. Generate Y having density r.

2. Generate U independent of Y.

3. If U<=f(Y)/t(Y), return X=Y (accept the random

variate Y as X).

Otherwise (reject the random variate Y) go to step

1 and try again.

112

56

4/2/2024

113

a b

more frequently

114

57

4/2/2024

Random-Variate Generation

• Acceptance-Rejection Method:

Example: Suppose we want to generate a beta

random variable with parameters 4 and 3.

60 x 3 (1 − x ) 2

0 x 1

f ( x) =

0 o.w.

Inverse-transform, convolution and composition

methods are not applicable.

115

Random-Variate Generation

• Acceptance-Rejection Method:

Example: The maximum value of f(x) occurs at

x=0.6 with f(0.6)=2.0736.

2.5

t(x)=2.0736

f(x)

1.5

r(x)=1

1

0.5

0

0 0.1 0.2 0.3 0.4 0.5 0.6 0.7 0.8 0.9 1

116

58

4/2/2024

Random-Variate Generation

• Acceptance-Rejection Method:

Example: Define t(x) as follows:

2.0736 0 x 1

t ( x) =

0 o.w.

c=2.0736. Then, r(x) is given by

1 0 x 1

r ( x) =

0 o.w.

117

Random-Variate Generation

• Acceptance-Rejection Method:

Example:

1. Generate Y with density r(x).

2. Generate U independent of Y.

60Y 3 (1 − Y )

2

3. If U , return X=Y.

2.0736

Otherwise go to step 1 again.

118

59

4/2/2024

119

0.28 0.84 0.53 0.72 0.90 0.21 0.63 0.54 120

60

4/2/2024

0.28 0.84 0.53 0.72 0.90 0.21 0.63 0.54

Y=0.28

U=0.84

121

0.28 0.84 0.53 0.72 0.90 0.21 0.63 0.54

122

61

4/2/2024

0.28 0.84 0.53 0.72 0.90 0.21 0.63 0.54

123

In this example,

700

600

500

10000 X values are generated.

400

Hence, approximately 10000/(1/c)=20736

300

Y values are generated

200

100

0

0 0.1 0.2 0.3 0.4 0.5 0.6 0.7 0.8 0.9 1

124

62

4/2/2024

Random-Variate Generation

• Acceptance-Rejection Method:

Example:

1200 700

600

1000

500

800

400

600

300

400

200

200

100

0 0

0 0.1 0.2 0.3 0.4 0.5 0.6 0.7 0.8 0.9 1 0 0.1 0.2 0.3 0.4 0.5 0.6 0.7 0.8 0.9 1

125

Random-Variate Generation

• Acceptance-Rejection Method:

– The method generates a random number with the

desired distribution regardless of the choice of the

majorizing function, t(x).

– The choice of the majorizing function is important

for the efficiency of the algorithm.

• Step 1 requires generating Y with density t(x)/c.

Therefore, we should choose so that this can be

accomplished rapidly.

• We want to have a majorizing function for which the

probability of rejection (acceptance) is very small (high).

126

63

4/2/2024

127

64