Instruction Level Pipelining

Uploaded by

GowriInstruction Level Pipelining

Uploaded by

GowriComputer Architecture

A Quantitative Approach, Fifth Edition

Chapter 3

Instruction-Level Parallelism

and Its Exploitation

Copyright © 2012, Elsevier Inc. All rights reserved. 1

Contents

1. Pipelining; hazards

2. ILP - data, name, and control dependence

3. Compiler techniques for exposing ILP: Pipeline scheduling,

Loop unrolling, Strip mining, Branch prediction

4. Register renaming

5. Multiple issue and static scheduling

6. Speculation

7. Energy efficiency

8. Multi-threading

9. Fallacies and pitfalls

10. Exercises

Copyright © 2012, Elsevier Inc. All rights reserved. 2

Introduction

Introduction

Pipelining become universal technique in 1985

Overlaps execution of instructions

Exploits “Instruction Level Parallelism (ILP)”

Two main approaches:

Dynamic hardware-based

Used in server and desktop processors

Not used as extensively in Parallel Multiprogrammed

Microprocessors (PMP)

Static approaches compiler-based

Not as successful outside of scientific applications

Copyright © 2012, Elsevier Inc. All rights reserved. 3

Review of basic concepts

Pipelining each instruction is split up into a sequence of

steps – different steps can be executed concurrently by

different circuitry.

A basic pipeline in a RISC processor

IF – Instruction Fetch

ID – Instruction Decode

EX – Instruction Execution

MEM – Memory Access

WB – Register Write Back

Two techniques:

Superscalar A superscalar processor executes more than one

instruction during a clock cycle.

VLIW very long instruction word – compiler packs multiple

independent operations into an instruction

dcm 4

Basic superscalar 5-stage pipeline

Superscalar a processor executes more than one instruction during a

clock cycle by simultaneously dispatching multiple instructions to

redundant functional units on the processor.

The hardware determines (statically/ dynamically) which one of a block on

n instructions will be executed next.

A single-core superscalar processor SISD

A multi-core superscalar MIMD.

Copyright © 2012, Elsevier Inc. All rights reserved. 5

Hazards pipelining could lead to incorrect results.

Data dependence “true dependence” Read after Write hazard (RAW)

i: sub R1, R2, R3 % sub d,s,t d =s-t

i+1: add R4, R1, R3 % add d,s t d = s+t

Instruction (i+1) reads operand (R1) before instruction (i) writes it.

Name dependence “anti dependence” two instructions use the same

register or memory location, but there is no data dependency between them.

Write after Read hazard (WAR) Example:

i: sub R4, R5, R3

i+1: add R5, R2, R3

i+2: mul R6, R5, R7

Instruction (i+1) writes operand (R5) before instruction (i) reads it.

Write after Write (WAW) (Output dependence) Example

i: sub R6, R5, R3

i+1: add R6, R2, R3

i+2: mul R1, R2, R7

Instruction (i+1) writes operand (R6) before instruction (i) writes it.

dcm 6

More about hazards

Data hazards RAW, WAR, WAW.

Structural hazard occurs when a part of the processor's hardware is

needed by two or more instructions at the same time. Example: a single

memory unit that is accessed both in the fetch stage where an instruction

is retrieved from memory, and the memory stage where data is written

and/or read from memory. They can often be resolved by separating the

component into orthogonal units (such as separate caches) or bubbling

the pipeline.

Control hazard (branch hazard) are due to branches. On many

instruction pipeline microarchitectures, the processor will not know the

outcome of the branch when it needs to insert a new instruction into the

pipeline (normally the fetch stage).

Copyright © 2012, Elsevier Inc. All rights reserved. 7

Introduction

Instruction-level parallelism (ILP)

When exploiting instruction-level parallelism, goal is to

maximize CPI

Pipeline CPI =

Ideal pipeline CPI +

Structural stalls +

Data hazard stalls +

Control stalls

Parallelism with basic block is limited

Typical size of basic block = 3-6 instructions

Must optimize across branches

Copyright © 2012, Elsevier Inc. All rights reserved. 8

Introduction

Data dependence

Loop-Level Parallelism

Unroll loop statically or dynamically

Use SIMD (vector processors and GPUs)

Challenges:

Data dependency

Instruction j is data dependent on instruction i if

Instruction i produces a result that may be used by instruction j

Instruction j is data dependent on instruction k and instruction k

is data dependent on instruction i

Dependent instructions cannot be executed

simultaneously

Copyright © 2012, Elsevier Inc. All rights reserved. 9

Introduction

Data dependence

Dependencies are a property of programs

Pipeline organization determines if dependence

is detected and if it is s causes a “stall.”

Data dependence conveys:

Possibility of a hazard

Order in which results must be calculated

Upper bound on exploitable instruction level

parallelism

Dependencies that flow through memory

locations are difficult to detect

Copyright © 2012, Elsevier Inc. All rights reserved. 10

Introduction

Name dependence

Two instructions use the same name but no flow

of information

Not a true data dependence, but is a problem when

reordering instructions

Antidependence: instruction j writes a register or

memory location that instruction i reads

Initial ordering (i before j) must be preserved

Output dependence: instruction i and instruction j

write the same register or memory location

Ordering must be preserved

To resolve, use renaming techniques

Copyright © 2012, Elsevier Inc. All rights reserved. 11

Introduction

Control dependence

Every instruction is control dependent on some set of

branches and, in general, the control dependencies must be

preserved to ensure program correctness.

Instruction control dependent on a branch cannot be moved before

the branch so that its execution is no longer controller by the branch.

An instruction not control dependent on a branch cannot be moved

after the branch so that its execution is controlled by the branch.

Example

if C1 {

S1;

};

if C2 {

S2;

};

S1 is control dependent on C1; S2 is control dependent on C2 but not on C1

Copyright © 2012, Elsevier Inc. All rights reserved. 12

Properties essential to program correctness

Preserving exception behavior any change in instruction order must not

change the order in which exceptions are raised. Example:

DADDU R1, R2, R3

BEQZ R1, L1

L1 LW R4, 0(R1)

Can we move LW before BEQZ ?

Preserving data flow the flow of data between instructions that produce

results and consumes them. Example:

DADDU R1, R2, R3

BEQZ R4, L1

DSUBU R1, R8, R9

L1 LW R5, 0(R2)

OR R7, R1, R8

Does OR depend on DADDU and DSUBU?

dcm 13

Introduction

Examples

• Example 1: OR instruction dependent

DADDU R1,R2,R3 on DADDU and DSUBU

BEQZ R4,L

DSUBU R1,R1,R6

L: …

OR R7,R1,R8

• Example 2: Assume R4 isn’t used after

DADDU R1,R2,R3 skip

BEQZ R12,skip

Possible to move DSUBU

DSUBU R4,R5,R6

before the branch

DADDU R5,R4,R9

skip:

OR R7,R8,R9

Copyright © 2012, Elsevier Inc. All rights reserved. 14

Compiler techniques for exposing ILP

Loop transformation technique to optimize a program's execution speed:

1. reduce or eliminate instructions that control the loop, e.g., pointer

arithmetic and "end of loop" tests on each iteration

2. hide latencies, e.g., the delay in reading data from memory

3. re-write loops as a repeated sequence of similar independent

statements space-time tradeoff

4. reduce branch penalties;

Methods

1. pipeline scheduling

2. loop unrolling

3. strip mining

4. branch prediction

Copyright © 2012, Elsevier Inc. All rights reserved. 15

Compiler Techniques

1. Pipeline scheduling

Pipeline stall delay in execution of an instruction in an instruction

pipeline in order to resolve a hazard. The compiler can reorder

instructions to reduce the number of pipeline stalls.

Pipeline scheduling Separate dependent instruction from the

source instruction by the pipeline latency of the source instruction

Example:

for (i=999; i>=0; i=i-1)

x[i] = x[i] + s;

Copyright © 2012, Elsevier Inc. All rights reserved. 16

Compiler Techniques

Pipeline stalls

Assumptions:

1. S F2

2. The element of the array with the highest address R1

3. The element of the array with the lowest address +8 R2

Loop: L.D F0,0(R1) % load array element in F0

stall % next instruction needs the result in F0

ADD.D F4,F0,F2 % increment array element

stall

stall % next instruction needs the result in F4

S.D F4,0(R1) % store array element

DADDUI R1,R1,#-8 % decrement pointer to array element

stall (assume integer load latency is 1) % next instruction needs result in R1

BNE R1,R2,Loop % loop if not the last element

Copyright © 2012, Elsevier Inc. All rights reserved. 17

Compiler Techniques

Pipeline scheduling

Scheduled code:

Loop: L.D F0,0(R1)

DADDUI R1,R1,#-8

ADD.D F4,F0,F2

stall

stall

S.D F 4,8(R1)

BNE R1,R2,Loop

Copyright © 2012, Elsevier Inc. All rights reserved. 18

2. Loop unrolling

Given the same code

Loop: L.D F0,0(R1)

DADDUI R1,R1,#-8

ADD.D F4,F0,F2

S.D F4,8(R1)

BNE R1,R2,Loop

Assume # elements of the array with starting address in R1 is

divisible by 4

Unroll by a factor of 4

Eliminate unnecessary instructions.

dcm 19

Compiler Techniques

Unrolled loop

Loop: L.D F0,0(R1)

ADD.D F4,F0,F2

S.D F4,0(R1) % drop DADDUI & BNE

L.D F6,-8(R1)

ADD.D F8,F6,F2

S.D F8,-8(R1) %drop DADDUI & BNE

L.D F10,-16(R1)

ADD.D F12,F10,F2

S.D F12,-16(R1) % drop DADDUI & BNE

L.D F14,-24(R1)

ADD.D F16,F14,F2

S.D F16,-24(R1)

DADDUI R1,R1,#-32

BNE R1,R2,Loop

Live registers: F0-1, F2-3, F4-5, F6-7, F8-9,F10-11, F12-13, F14-15,

and F16-15; also R1 and R2

In the original code: only F0-1, F2-3, and F4-5; also R1 and R2

Copyright © 2012, Elsevier Inc. All rights reserved. 20

Compiler Techniques

Pipeline schedule the unrolled loop

Loop: L.D F0,0(R1) Pipeline scheduling reduces

L.D F6,-8(R1) the number of stalls.

L.D F10,-16(R1) 1. The L.D instruction

L.D F14,-24(R1) requires only one cycle so

ADD.D F4,F0,F2 when ADD.D are issued F4,

ADD.D F8,F6,F2 F8, F12 , and F16 are already

ADD.D F12,F10,F2 loaded.

ADD.D F16,F14,F2 2. The ADD.D requires only

S.D F4,0(R1) two cycles so that two S.D

S.D F8,-8(R1) can proceed immediately

DADDUI R1,R1,#-32 3. The array pointer is

S.D F12,16(R1) updated after the first two S.D

S.D F16,8(R1)

so the loop control can

proceed immediately after the

BNE R1,R2,Loop

last two S.D

Copyright © 2012, Elsevier Inc. All rights reserved. 21

Loop unrolling & scheduling summary

Use different registers to avoid unnecessary constraints.

Adjust the loop termination and iteration code.

Find if the loop iterations are independent except the loop maintenance

code if so unroll the loop

Analyze memory addresses to determine if the load and store from

different iterations are independent if so interchange load and stores

in the unrolled loop

Schedule the code while ensuring correctness.

Limitations of loop unrolling

Decrease of the amount of overhead with each roll

Growth of the code size

Register pressure (shortage of registers) scheduling to increase ILP

increases the number of live values thus, the number of registers

Copyright © 2012, Elsevier Inc. All rights reserved. 22

More compiler-based loop transformations

fission/distribution break a loop into multiple loops over the same index range but each

taking only a part of the loop's body. Can improve locality of reference, both of the data

being accessed in the loop and the code in the loop's body.

fusion/combining when two adjacent loops would iterate the same number of times their

bodies can be combined as long as they make no reference to each other's data.

interchange/permutation exchange inner loops with outer loops. When the loop variables

index into an array, such a transformation can improve locality of reference.

inversion changes a standard while loop into a do/while (a.k.a. repeat/until) loop wrapped

in an if conditional, reducing the number of jumps by two for cases where the loop is

executed. Doing so duplicates the condition check (increasing the size of the code) but is

more efficient because jumps usually cause a pipeline stall.

reversal reverses the order in which values are assigned to the index variable. This is a

subtle optimization which can help eliminate dependencies and thus enable other

optimizations.

scheduling divide a loop into multiple parts that may be run concurrently on multiple

processors.

skewing take a nested loop iterating over a multidimensional array, where each iteration

of the inner loop depends on previous iterations, and rearranges its array accesses so that

the only dependencies are between iterations of the outer loop.

dcm 23

Compiler Techniques

3. Strip mining

The block size of the outer block loops is determined by

some characteristic of the target machine, such as the

vector register length or the cache memory size.

Number of iterations = n

Goal: make k copies of the loop body

Generate pair of loops:

First executes n mod k times

Second executes n / k times

“Strip mining”

Copyright © 2012, Elsevier Inc. All rights reserved. 24

4. Branch prediction

Branch prediction guess whether a conditional jump will be taken or not.

Goal improve the flow in the instruction pipeline.

Speculative execution the branch that is guessed to be the most likely

is then fetched and speculatively executed.

Penalty if it is later detected that the guess was wrong then the

speculatively executed or partially executed instructions are discarded and

the pipeline starts over with the correct branch, incurring a delay.

How? the branch predictor keeps records of whether branches are

taken or not taken. When it encounters a conditional jump that has been

seen several times before then it can base the prediction on the history.

The branch predictor may, for example, recognize that the conditional

jump is taken more often than not, or that it is taken every second time

Note: not to be confused with branch target prediction guess the target of a

taken conditional or unconditional jump before it is computed by decoding and

executing the instruction itself. Both are often combined into the same circuitry.

Copyright © 2012, Elsevier Inc. All rights reserved. 25

Branch Prediction

Predictors

Basic 2-bit predictor:

For each branch:

Predict taken or not taken

If the prediction is wrong two consecutive times, change prediction

Correlating predictor:

Multiple 2-bit predictors for each branch

One for each possible combination of outcomes of preceding n

branches

Local predictor:

Multiple 2-bit predictors for each branch

One for each possible combination of outcomes for the last n

occurrences of this branch

Tournament predictor:

Combine correlating predictor with local predictor

Copyright © 2012, Elsevier Inc. All rights reserved. 26

Branch Prediction

Branch prediction performance

Copyright © 2012, Elsevier Inc. All rights reserved. 27

Branch Prediction

Dynamic scheduling

Rearrange order of instructions to reduce stalls

while maintaining data flow

Advantages:

Compiler doesn’t need to have knowledge of

microarchitecture

Handles cases where dependencies are unknown at

compile time

Disadvantage:

Substantial increase in hardware complexity

Complicates exceptions

Copyright © 2012, Elsevier Inc. All rights reserved. 28

Branch Prediction

Dynamic scheduling

Dynamic scheduling implies:

Out-of-order execution

Out-of-order completion

Creates the possibility for WAR and WAW

hazards

Tomasulo’s Approach

Tracks when operands are available

Introduces register renaming in hardware

Minimizes WAW and WAR hazards

Copyright © 2012, Elsevier Inc. All rights reserved. 29

Branch Prediction

Register renaming

Example:

DIV.D F0,F2,F4

ADD.D F6,F0,F8

antidependence WAR - F8

S.D F6,0(R1)

SUB.D F8,F10,F14 antidependence WAW – F6

MUL.D F6,F10,F8

+ name dependence with F6

Copyright © 2012, Elsevier Inc. All rights reserved. 30

Branch Prediction

Register renaming

Example: add two temporary registers S and T

i: DIV.D F0,F2,F4

i+1: ADD.D S,F0,F8 (instead of ADD.D F6,F0,F8)

i+2 S.D S,0(R1) (instead of S.D F6,0(R1)

i+3 SUB.D T,F10,F14 (instead of SUB.D F8,F10,F14)

i+4 MUL.D F6,F10,T (instead of MUL.D F6,F10,F8)

Now only RAW hazards remain, which can be strictly

ordered

Copyright © 2012, Elsevier Inc. All rights reserved. 31

Branch Prediction

Register renaming

Register renaming is provided by reservation stations (RS)

Contains:

The instruction

Buffered operand values (when available)

Reservation station number of instruction providing the operand

values

RS fetches and buffers an operand as soon as it becomes available

(not necessarily involving register file)

Pending instructions designate the RS to which they will send their

output

Result values broadcast on a result bus, called the common data bus (CDB)

Only the last output updates the register file

As instructions are issued, the register specifiers are renamed with

the reservation station

May be more reservation stations than registers

Copyright © 2012, Elsevier Inc. All rights reserved. 32

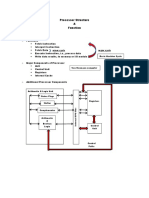

Branch Prediction

Tomasulo’s algorithm

Goal high performance without special compilers when

the hardware has only a small number of floating point

(FP) registers.

Designed in 1966 for IBM 360/91 with only 4 FP registers.

Used in modern processors: Pentium 2/3/4, Power PC 604,

Neehalem…..

Additional hardware needed

Load and store buffers contain data and addresses, act like

reservation station.

Reservation stations feed data to floating point arithmetic units.

Copyright © 2012, Elsevier Inc. All rights reserved. 33

dcm 34

Copyright © 2012, Elsevier Inc. All rights reserved. 35

Branch Prediction

Instruction execution steps

Issue

Get next instruction from FIFO queue

If available RS, issue the instruction to the RS with operand values if

available

If operand values not available, stall the instruction

Execute

When operand becomes available, store it in any reservation stations

waiting for it

When all operands are ready, issue the instruction

Loads and store maintained in program order through effective

address

No instruction allowed to initiate execution until all branches that

proceed it in program order have completed

Write result

Broadcast result on CDB into reservation stations and store buffers

(Stores must wait until address and value are received)

Copyright © 2012, Elsevier Inc. All rights reserved. 36

Branch Prediction

Example

Copyright © 2012, Elsevier Inc. All rights reserved. 37

Notations

David Patterson – class notes 38

Example of Tomasulo algorithm

3 – load buffers (Load1, Load 2, Load 3)

5 - reservation stations (Add1, Add2, Add3, Mult 1, Mult 2).

16 pairs of floating point registers F0-F1, F2-F3,…..F30-31.

Clock cycles: addition 2; multiplication 10; division 40;

David Patterson – class notes 39

Clock cycle 1

A load from memory location 34+(R2) is issued; data will be stored

later in the first load buffer (Load1)

David Patterson – class notes 40

Clock cycle 2

The Second load from memory loaction 45+(R3) is issued; data will be

stored in load buffer 2 (Load2).

Multiple loads can be outstanding.

David Patterson – class notes 41

Clock cycle 3

First load completed SUBD instruction is waiting for the data.

MULTD issued

Register names are removed/renamed in Reservation Stations

David Patterson – class notes 42

Clock cycle 4

Load2 is completed; MULTD waits for the results of it.

The results of Load 1 are available for the SUBD instruction.

David Patterson – class notes 43

Clock cycle 5

The result of Load2 available for MULTD executed by Mult1 and SUBD

executed by Add1. Both can now proceed as they have both operands.

Mult2 executes DIVD and cannot proceed yet as it waits for the results of

Add1.

David Patterson – class notes 44

Clock cycle 6

Issue ADDD

David Patterson – class notes 45

Clock cycle 7

The results of the SUBD produced by Add1 will be available in the next

cycle.

ADDD instruction executed by Add2 waits for them.

David Patterson – class notes 46

Clock cycle 8

The results of SUBD are deposited by the Add1 in F8-F9

David Patterson – class notes 47

Clock cycle 9

David Patterson – class notes 48

Clock cycle 10

ADDD executed by Add2 completes, it needed 2 cycles.

There are 5 more cycles for MULTD executed by Mult1.

David Patterson – class notes 49

Clock cycle 11

Only MULTD and DIVD instructions did not complete. DIVD is waiting for

the result of MULTD before moving to the execute stage.

David Patterson – class notes 50

Clock cycle 12

David Patterson – class notes 51

Clock cycle 13

David Patterson – class notes 52

Clock cycle 14

David Patterson – class notes 53

Clock cycle 15

MULTS instruction executed by Mult1 unit completed execution.

DIVD in instruction executed by the Mult2 unit is waiting for it.

David Patterson – class notes 54

Clock cycle 16

David Patterson – class notes 55

Clock cycle 55

DIVD will finish execution in cycle 56 and the result will be in F6-F7

in cycle 57.

David Patterson – class notes 56

Branch Prediction

Hardware-based speculation

Goal overcome control dependency by speculating.

Allow instructions to execute out of order but force them to

commit to avoid: (i) updating the state or (ii) taking an

exception

Instruction commit allow an instruction to update the

register file when instruction is no longer speculative

Key ideas:

1. Dynamic branch prediction.

2. Execute instructions along predicted execution paths, but only

commit the results if prediction was correct.

3. Dynamic scheduling to deal with different combination of basic

blocks

Copyright © 2012, Elsevier Inc. All rights reserved. 57

Branch Prediction

How speculative execution is done

Need additional hardware to prevent any irrevocable

action until an instruction commits.

Reorder buffer (ROB)

Modify functional units – operand source is ROB rather than

functional units

Register values and memory values are not written until

an instruction commits

On misprediction:

Speculated entries in ROB are cleared

Exceptions:

Not recognized until it is ready to commit

Copyright © 2012, Elsevier Inc. All rights reserved. 58

Extended floating point unit

FP using Tomasulo’s

algorithm extended to

handle speculation.

Reorder buffer now

holds the result of

instruction between

completion and

commit. Has 4 fields

Instruction type:

branch/store/register

Destination field:

register number

Value field: output

value

Ready field:

completed

execution?

Operand source is now

reorder buffer instead

of functional unit

dcm 59

Multiple Issue and Static Scheduling

Multiple issue and static scheduling

To achieve CPI < 1 complete multiple instructions per

clock cycle

Three flavors of multiple issue processors

1. Statically scheduled superscalar processors

a. Issue a varying number of instructions per clock cycle

b. Use in-order execution

2. VLIW (very long instruction word) processors

a. Issue a fixed number of instructions as one large instruction

b. Instructions are statically scheduled by the compiler

3. Dynamically scheduled superscalar processors

a. Issue a varying number of instructions per clock cycle

b. Use out-of-order execution

dcm 60

Multiple Issue and Static Scheduling

Multiple issue processors

Copyright © 2012, Elsevier Inc. All rights reserved. 61

VLIW Processors

Package multiple operations into one instruction

Must be enough parallelism in code to fill the available

slots,

Disadvantages:

Statically finding parallelism

Code size

No hazard detection hardware

Binary code compatibility

Copyright © 2012, Elsevier Inc. All rights reserved. 62

Multiple Issue and Static Scheduling

Example

Unroll the loop for x[i]= x[i] +s

to eliminate any stalls. Ignore

delayed branches. Loop: L.D F0,0(R1)

The code we had before L.D F6,-8(R1)

shown on the right

L.D F10,-16(R1)

Package in one VLIW L.D F14,-24(R1)

instruction :

ADD.D F4,F0,F2

One integer instruction (or

branch) ADD.D F8,F6,F2

ADD.D F12,F10,F2

Two independent floating-

point operations ADD.D F16,F14,F2

Two independent memory

S.D F4,0(R1)

references. S.D F8,-8(R1)

DADDUI R1,R1,#-32

S.D F12,16(R1)

S.D F16,8(R1)

BNE R1,R2,Loop

dcm 63

Example

Copyright © 2012, Elsevier Inc. All rights reserved. 64

Dynamic Scheduling, Multiple Issue, and Speculation

Dynamic scheduling, multiple issue, speculation

Modern microarchitectures:

Dynamic scheduling + multiple issue + speculation

Two approaches:

Assign reservation stations and update pipeline control table in

half clock cycles

Only supports 2 instructions/clock

Design logic to handle any possible dependencies between the

instructions

Hybrid approaches.

Issue logic can become bottleneck

Copyright © 2012, Elsevier Inc. All rights reserved. 65

Multiple issue processor with speculation

The organization should allow simultaneous execution for all issues in

one clock cycle of one of the following operations:

FP multiplication

FP addition

Integer operations

Load/Store

Several datapaths must be widened to support multiple issues.

The instruction issue logic will be fairly complex.

Copyright © 2012, Elsevier Inc. All rights reserved. 66

Dynamic Scheduling, Multiple Issue, and Speculation

Multiple issue processor with speculation

Copyright © 2012, Elsevier Inc. All rights reserved. 67

Dynamic Scheduling, Multiple Issue, and Speculation

Basic strategy for updating the issue logic

Assign a reservation station and a reorder buffer for every instruction

that may be issued in the next bundle.

To pre-allocate reservation stations limit the number of instructions of a

given class that can be issued in a “bundle”

I.e. one FP, one integer, one load, one store

Examine all the dependencies among the instructions in the bundle

If dependencies exist in bundle, use the assigned ROB number to

update the reservation table for dependent instructions. Otherwise, use

the existing reservations table entries for the issuing instruction.

Also need multiple completion/commit

Copyright © 2012, Elsevier Inc. All rights reserved. 68

Dynamic Scheduling, Multiple Issue, and Speculation

Example

Loop: LD R2,0(R1) ;R2=array element

DADDIU R2,R2,#1 ;increment R2

SD R2,0(R1) ;store result

DADDIU R1,R1,#8 ;increment pointer

BNE R2,R3,LOOP ;branch if not last element

Copyright © 2012, Elsevier Inc. All rights reserved. 69

Dynamic Scheduling, Multiple Issue, and Speculation

Dual issue without speculation

Time of issue, execution, and writing the result for a dual-issue of the pipeline.

The LD following the BNE (cycles 3, 6) cannot start execution earlier, it must

wait until the branch outcome is determined as there is no speculation

Copyright © 2012, Elsevier Inc. All rights reserved. 70

Dynamic Scheduling, Multiple Issue, and Speculation

Dual issue with speculation

Time of issue, execution, writing the result, and commit.

The LD following the BNE can start execution early; speculation is supported.

Copyright © 2012, Elsevier Inc. All rights reserved. 71

Advanced techniques for instruction delivery

Increase instruction fetch bandwidth of a multi-issue processor to

match the average throughput.

If an instruction is a branch we will have a zero branch penalty if we

know the next PC.

Requires

Wide enough datapaths to IC (Instruction cache)

Effective branch handling

Branch target buffer cache that stores the predicted address for

the next instruction after branch.

If the PC of a fetched instruction matches the address in this cache

the corresponding predicted PC is used as the next PC.

Copyright © 2012, Elsevier Inc. All rights reserved. 72

Adv. Techniques for Instruction Delivery and Speculation

Branch-target buffer

Next PC prediction buffer, indexed by current PC

Copyright © 2012, Elsevier Inc. All rights reserved. 73

Adv. Techniques for Instruction Delivery and Speculation

Branch folding

Optimization:

Larger branch-target buffer

Add target instruction into buffer to deal with longer

decoding time required by larger buffer

“Branch folding”

Copyright © 2012, Elsevier Inc. All rights reserved. 74

Adv. Techniques for Instruction Delivery and Speculation

Return address predictor

Most unconditional branches come from function returns

The same procedure can be called from multiple sites

Causes the buffer to potentially forget about the return address

from previous calls

Create return address buffer organized as a stack

Copyright © 2012, Elsevier Inc. All rights reserved. 75

Adv. Techniques for Instruction Delivery and Speculation

Integrated instruction fetch unit

Design monolithic unit that performs:

Branch prediction

Instruction prefetch

Fetch ahead

Instruction memory access and buffering

Deal with crossing cache lines

Copyright © 2012, Elsevier Inc. All rights reserved. 76

Adv. Techniques for Instruction Delivery and Speculation

Register renaming vs. reorder buffers

Instead of virtual registers from reservation stations and reorder

buffer, create a single register pool

Contains visible registers and virtual registers

Use hardware-based map to rename registers during issue

WAW and WAR hazards are avoided

Speculation recovery occurs by copying during commit

Still need a ROB-like queue to update table in order

Simplifies commit:

Record that mapping between architectural register and physical

register is no longer speculative

Free up physical register used to hold older value

In other words: SWAP physical registers on commit

Physical register de-allocation is more difficult

Copyright © 2012, Elsevier Inc. All rights reserved. 77

Adv. Techniques for Instruction Delivery and Speculation

Integrated issue and renaming

Combining instruction issue with register renaming:

Issue logic pre-reserves enough physical registers for the

bundle (fixed number?)

Issue logic finds dependencies within bundle, maps registers

as necessary

Issue logic finds dependencies between current bundle and

already in-flight bundles, maps registers as necessary

Copyright © 2012, Elsevier Inc. All rights reserved. 78

Adv. Techniques for Instruction Delivery and Speculation

How much to speculate?

How much to speculate

Mis-speculation degrades performance and power

relative to no speculation

May cause additional misses (cache, TLB)

Prevent speculative code from causing higher

costing misses (e.g. L2)

Speculating through multiple branches

Complicates speculation recovery

No processor can resolve multiple branches per

cycle

Copyright © 2012, Elsevier Inc. All rights reserved. 79

Adv. Techniques for Instruction Delivery and Speculation

Energy efficiency

Speculation and energy efficiency

Note: speculation is only energy efficient when it significantly

improves performance

Value prediction

Uses:

Loads that load from a constant pool

Instruction that produces a value from a small set of values

Not been incorporated into modern processors

Similar idea--address aliasing prediction--is used on some

processors

Copyright © 2012, Elsevier Inc. All rights reserved. 80

Multithreading

Distinction between TLP (Thread-level parallelism) and multithreading:

A multiprocessor multiple independent threads run concurrently.

A uniprocessor system only one thread can be active at one time

Multithreading improve throughput in uniprocessor systems:

Shares most of the processor core among the set of threads

Duplicates only registers, program counter.

Separate page tables for each thread.

dcm 81

Hardware approaches to multithreading

1. Fine-grain multithreading interleaves execution of multiple threads,

switches between threads in each clock cycle, round-robin.

Increase in throughput, but slows down execution of each thread.

Examples Sun Niagra, Nvidia GPU

2. Coarse grain multithreading switches only on costly stalls e.g., level 2

or 3 cache misses

High pipeline start-up costs

No processors

3. Simultaneous multithreading (SMT) fine-grain multithreading on

multiple-issue, dynamically scheduled processor.

Most common

Copyright © 2012, Elsevier Inc. All rights reserved. 82

Copyright © 2012, Elsevier Inc. All rights reserved. 83

ARM Cortex A8

Dual-issue, statically scheduled superscalar; dynamic issue detection.

Two instructions per clock cycle, CPI= 0.5.

Used as basis Apple A9 used in iPad, Intel Core i7 and processors used

in smartphones.

Copyright © 2012, Elsevier Inc. All rights reserved. 84

Figure 3.37 The five-stage instruction decode of the A8. In the first stage, a PC produced by the fetch unit (either from the

branch target buffer or the PC incrementer) is used to retrieve an 8-byte block from the cache. Up to two instructions are

decoded and placed into the decode queue; if neither instruction is a branch, the PC is incremented for the next fetch. Once in

the decode queue, the scoreboard logic decides when the instructions can issue. In the issue, the register operands are read;

recall that in a simple scoreboard, the operands always come from the registers. The register operands and opcode are sent to

the instruction execution portion of the pipeline.

Copyright © 2011, Elsevier Inc. All rights Reserved. 85

Figure 3.38 The five-stage instruction decode of the A8. Multiply operations are always performed in ALU pipeline 0.

Copyright © 2011, Elsevier Inc. All rights Reserved. 86

Figure 3.39 The estimated composition of the CPI on the ARM A8 shows that pipeline stalls are the primary addition to the

base CPI. eon deserves some special mention, as it does integer-based graphics calculations (ray tracing) and has very few cache

misses. It is computationally intensive with heavy use of multiples, and the single multiply pipeline becomes a major bottleneck.

This estimate is obtained by using the L1 and L2 miss rates and penalties to compute the L1 and L2 generated stalls per instruction.

These are subtracted from the CPI measured by a detailed simulator to obtain the pipeline stalls. Pipeline stalls include all three

hazards plus minor effects such as way misprediction.

Copyright © 2011, Elsevier Inc. All rights Reserved. 87

Figure 3.40 The performance ratio for the A9 compared to the A8, both using a 1 GHz clock and the same size caches for L1

and L2, shows that the A9 is about 1.28 times faster. Both runs use a 32 KB primary cache and a 1 MB secondary cache, which is 8-

way set associative for the A8 and 16-way for the A9. The block sizes in the caches are 64 bytes for the A8 and 32 bytes for the A9. As

mentioned in the caption of Figure 3.39, eon makes intensive use of integer multiply, and the combination of dynamic scheduling and a

faster multiply pipeline significantly improves performance on the A9. twolf experiences a small slowdown, likely due to the fact that

its cache behavior is worse with the smaller L1 block size of the A9.

Copyright © 2011, Elsevier Inc. All rights Reserved. 88

Figure 3.36 The basic structure of the A8 pipeline is 13 stages. Three cycles are used for instruction fetch and four for

instruction decode, in addition to a five-cycle integer pipeline. This yields a 13-cycle branch misprediction penalty. The

instruction fetch unit tries to keep the 12-entry instruction queue filled.

Copyright © 2011, Elsevier Inc. All rights Reserved. 89

Intel i7 pipeline

Figure 3.41 The Intel Core

i7 pipeline structure shown

with the memory system

components.

The total pipeline depth is

14 stages, with branch

mispredictions costing 17

cycles.

There are 48 load and 32

store buffers. The six

independent functional units

can each begin execution of

a ready micro-op in the

same cycle.

Copyright © 2012, Elsevier Inc. All rights reserved. 90

SPECCPU2006 -benchmark

• CPU2006 is SPEC's next-generation, industry-standardized, CPU-

intensive benchmark suite, stressing a system's processor, memory

subsystem and compiler.

• Provide a comparative measure of compute-intensive performance

across the widest practical range of hardware using workloads

developed from real user applications.

• These benchmarks are provided as source code and require the

user to be comfortable using compiler commands as well as other

commands via a command interpreter using a console or command

prompt window in order to generate executable binaries.

Copyright © 2012, Elsevier Inc. All rights reserved. 91

SPECCPU2006 - benchmark

Peralbench Pearl; cut-down version of Perl v5.8.7

Mcf C; vehicle scheduling in public mass transportation. Integer arithmetic.

Gobmk C; executes a set of commands to analyze Go positions

Hmmr C; uses a Hidden Markov Model for searching gene databases

Sjeng C; plays chess and several chess variants

Libquantum C; library for the simulation of a quantum computer

Omnetpp C++; discrete event simulation of a large Ethernet network

Astar C++; derived from a portable 2D path-finding library for game AI

Xalancbmk C++ program; XSLT processor for transforming XML documents

into HTML, text, or other XML document types

Milc C program for QCD (Quantum Chromodynamics)

Namd C++; classical molecular dynamics simulation

Dealii C++; PDE solver using the adaptive finite element method

Soplex C++; simplex linear program (LP) Solver

Povray C++; ray tracing in visualization

Lbm C; computational fluid dynamics using the lattice Boltzmann method

Sphynx3 C; speech recognition.

dcm 92

Performance of i7

Figure 3.42 The amount

of “wasted work” is

plotted by taking the ratio

of dispatched micro-ops

that do not graduate to all

dispatched micro-ops.

For example, the ratio is

25% for sjeng, meaning

that 25% of the

dispatched and executed

micro-ops are thrown

away.

Copyright © 2012, Elsevier Inc. All rights reserved. 93

I7 CPI

Figure 3.43 The CPI for

the 19 SPECCPU2006

benchmarks shows an

average CPI for 0.83 for

both the FP and integer

benchmarks, although the

behaviour is quite

different.

A. In the integer case, the

CPI values range from

0.44 to 2.66 with a

standard deviation of 0.77.

B. The variation in the FP

case is from 0.62 to 1.38

with a standard deviation

of 0.25.

Copyright © 2012, Elsevier Inc. All rights reserved. 94

Fallacies

A. It is easy to predict the performance and the energy efficiency of

two different versions of the same instruction set architecture, if we

hold the technology constant. Case in point Intel i7 920, Intel Atom

230, and ARM A8

Figure 3.45 The relative performance and energy efficiency for a set of single-

threaded benchmarks shows the i7 920 is 4 to over 10 times faster than the Atom 230

but that it is about 2 times less power efficient on average! Performance is shown in

the columns as i7 relative to Atom, which is execution time (i7)/execution time (Atom).

Energy is shown with the line as Energy (Atom)/Energy (i7). The i7 never beats the

Atom in energy efficiency, although it is essentially as good on four benchmarks,

three of which are floating point. Only one core is active on the i7, and the rest are in

deep power saving mode. Turbo Boost is used on the i7, which increases its

performance advantage but slightly decreases its relative energy efficiency.

Copyright © 2012, Elsevier Inc. All rights reserved. 95

Copyright © 2012, Elsevier Inc. All rights reserved. 96

Copyright © 2012, Elsevier Inc. All rights reserved. 97

Fallacies

B. Processors with lower CPI will always be faster.

C. Processors with faster clock rate will always be faster.

The product of the CPI and the clock rate determine performance!!

Copyright © 2012, Elsevier Inc. All rights reserved. 98

Problem solving

Consider the following code; the latency of all the

instructions used by the code snippet are also shown in

Figure 3.48 below

Copyright © 2012, Elsevier Inc. All rights reserved. 99

Problem 1

When a branch instruction is involved, the location of the following

delay slot instruction in the pipeline may be called a branch delay

slot. Branch delay slots are found mainly in DSP architectures and

older RISC architectures. MIPS, PA-RISC, ETRAX CRIS, SuperH,

and SPARC are RISC architectures that each have a single branch

delay slot.

Copyright © 2012, Elsevier Inc. All rights reserved. 100

P1 - solution

Each instruction requires one clock cycle of execution (a clock cycle in

which that instruction, and only that instruction, is occupying the

execution units; since every instruction must execute, the loop will take

at least that many clock cycles). To that base number, we add the extra

latency cycles. Don’t forget the branch shadow cycle.

Copyright © 2012, Elsevier Inc. All rights reserved. 101

Problem 2

Copyright © 2012, Elsevier Inc. All rights reserved. 102

P2 - solution

Copyright © 2012, Elsevier Inc. All rights reserved. 103

Problem 3

Copyright © 2012, Elsevier Inc. All rights reserved. 104

P3 -solution

Copyright © 2012, Elsevier Inc. All rights reserved. 105

Problem 4

Copyright © 2012, Elsevier Inc. All rights reserved. 106

P4 - solution

Long-latency ops are at highest risk of being passed by a subsequent

op. The DIVD instr will complete long after the LD F4,0(Ry), for

example.

Copyright © 2012, Elsevier Inc. All rights reserved. 107

Problem 5

Copyright © 2012, Elsevier Inc. All rights reserved. 108

P5-solution

Copyright © 2012, Elsevier Inc. All rights reserved. 109

Problem 6

Copyright © 2012, Elsevier Inc. All rights reserved. 110

P6 a - solution

The fraction of all cycles (for both pipes) wasted in the

reordered code in Problem 5 is:

0.275 = 11 operation / 2 x 20 opportunities 27.5%

1- 0.275 = 0.725 72.5 % pipeline utilization

Copyright © 2012, Elsevier Inc. All rights reserved. 111

P6b -solution

Copyright © 2012, Elsevier Inc. All rights reserved. 112

P6c -solution

Speedup = Execution time without enhancement /

Execution time with enhancement

S = 20 / (22/2) = 20 /11 = 1.82

Copyright © 2012, Elsevier Inc. All rights reserved. 113

You might also like

- CSE 820 Graduate Computer Architecture Week 5 - Instruction Level ParallelismNo ratings yetCSE 820 Graduate Computer Architecture Week 5 - Instruction Level Parallelism38 pages

- Pipelining Become Universal Technique in 1985No ratings yetPipelining Become Universal Technique in 198516 pages

- Instruction-Level Parallelism (ILP), Since The100% (1)Instruction-Level Parallelism (ILP), Since The57 pages

- Instruction-Level Parallelism and Its Exploitation: Prof. Dr. Nizamettin AYDINNo ratings yetInstruction-Level Parallelism and Its Exploitation: Prof. Dr. Nizamettin AYDIN170 pages

- 4-Advanced Pipelining - 241114 - 060906No ratings yet4-Advanced Pipelining - 241114 - 06090680 pages

- Instruction Level Parallelism and Its Exploitation: Unit Ii by Raju K, Cse DeptNo ratings yetInstruction Level Parallelism and Its Exploitation: Unit Ii by Raju K, Cse Dept201 pages

- Instruction Level Parallelism: Soner OnderNo ratings yetInstruction Level Parallelism: Soner Onder25 pages

- Parallelism Via Instructions: Instruction-Level Parallelism (ILP)No ratings yetParallelism Via Instructions: Instruction-Level Parallelism (ILP)21 pages

- Instruction-Level Parallelism: Stalls Control Stalls WAW Stalls WAR Stalls RAW Stalls Structural CPI CPINo ratings yetInstruction-Level Parallelism: Stalls Control Stalls WAW Stalls WAR Stalls RAW Stalls Structural CPI CPI50 pages

- Reduced Instruction Set Computer (Risc) Complex Instruction Set Computer (Cisc)No ratings yetReduced Instruction Set Computer (Risc) Complex Instruction Set Computer (Cisc)7 pages

- Decode and Issue More and One Instruction at A Time Executing More Than One Instruction at A Time More Than One Execution UnitNo ratings yetDecode and Issue More and One Instruction at A Time Executing More Than One Instruction at A Time More Than One Execution Unit28 pages

- Module 5 Instruction Level Parallelism and PipeliningNo ratings yetModule 5 Instruction Level Parallelism and Pipelining54 pages

- Instruction-Level Parallel Processors: Asim MunirNo ratings yetInstruction-Level Parallel Processors: Asim Munir28 pages

- Cs2354 Advanced Computer Architecture 2 MarksNo ratings yetCs2354 Advanced Computer Architecture 2 Marks10 pages

- Star Lion College of Engineering & Technology: Cs2354 Aca-2 Marks & 16 MarksNo ratings yetStar Lion College of Engineering & Technology: Cs2354 Aca-2 Marks & 16 Marks14 pages

- 01 - Mod 2 - Livro AutorresponsabilidadeNo ratings yet01 - Mod 2 - Livro Autorresponsabilidade9 pages

- Computer Architecture ILP - Techniques For IncreasingNo ratings yetComputer Architecture ILP - Techniques For Increasing11 pages

- Instruction-Level Parallel Processors: ObjectiveNo ratings yetInstruction-Level Parallel Processors: Objective31 pages

- Advanced Computer Architecture: BY Dr. Radwa M. TawfeekNo ratings yetAdvanced Computer Architecture: BY Dr. Radwa M. Tawfeek36 pages

- ROG Overclocking Guide Core For 5960X, 5930K & 5280KNo ratings yetROG Overclocking Guide Core For 5960X, 5930K & 5280K7 pages

- Phu Luc - Cac Lenh Assembly Cua CPU NIOS IINo ratings yetPhu Luc - Cac Lenh Assembly Cua CPU NIOS II19 pages

- Chapter 4 - Cache Memory: 4.1 Computer Memory System Overview 4.2 Cache Memory Principles 4.3 Elements of Cache DesignNo ratings yetChapter 4 - Cache Memory: 4.1 Computer Memory System Overview 4.2 Cache Memory Principles 4.3 Elements of Cache Design13 pages

- SonicBOOM - The 3rd Generation Berkeley Out-of-Order MachineNo ratings yetSonicBOOM - The 3rd Generation Berkeley Out-of-Order Machine7 pages

- Direct Communication and SynchronizationNo ratings yetDirect Communication and Synchronization170 pages

- A Framework For Fault Tolerance in RISC-VNo ratings yetA Framework For Fault Tolerance in RISC-V8 pages

- Factors Causing Power Consumption in An Embedded Processor - A StudyNo ratings yetFactors Causing Power Consumption in An Embedded Processor - A Study7 pages

- The X86 Microprocessor & Alp: Microprocessors and MicrocontrollersNo ratings yetThe X86 Microprocessor & Alp: Microprocessors and Microcontrollers56 pages

- Direct-Mapped Cache: Write Allocate With Write-Through ProtocolNo ratings yetDirect-Mapped Cache: Write Allocate With Write-Through Protocol25 pages