Recent stories

Apache Beam: the Future of Data Processing?

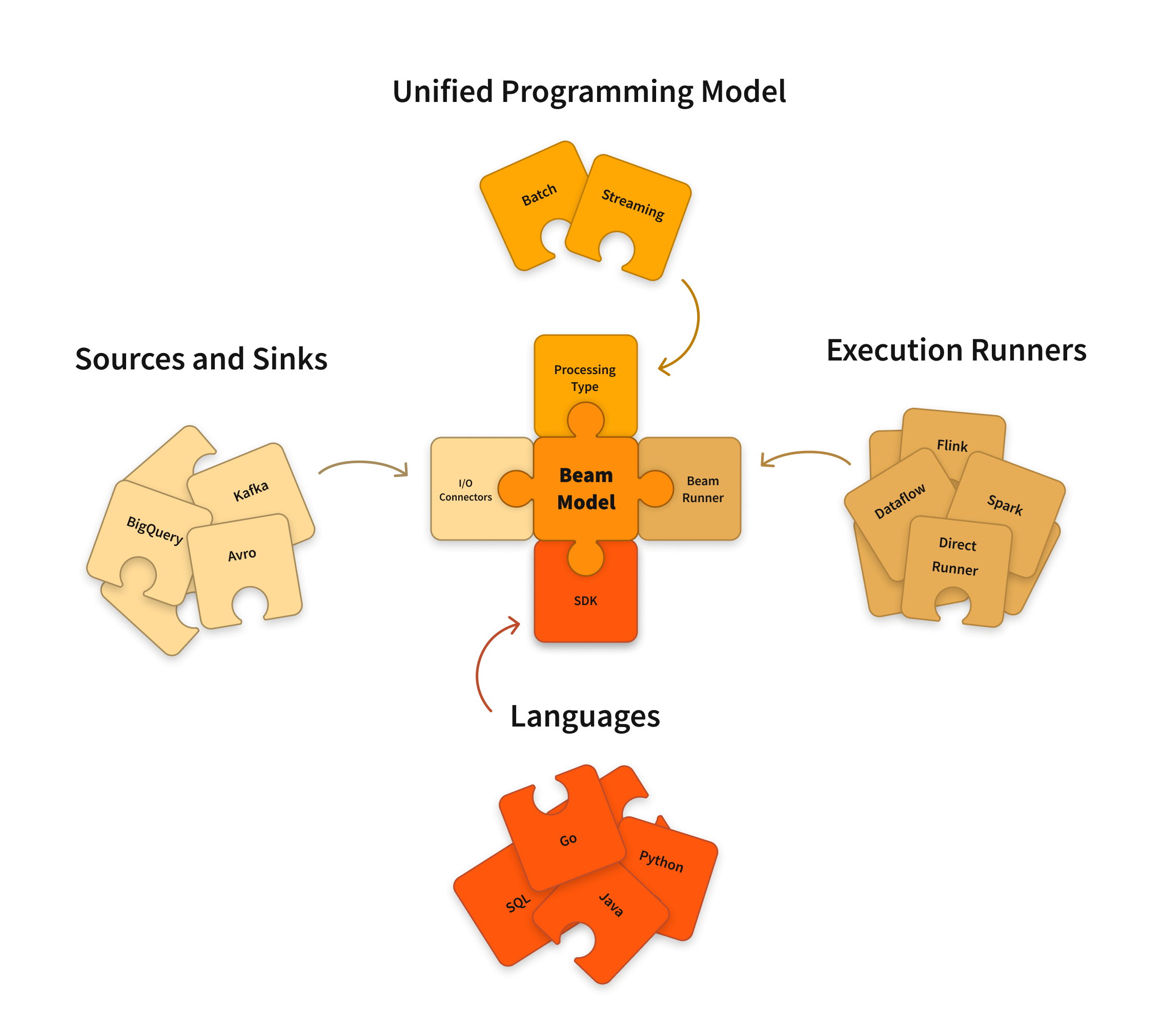

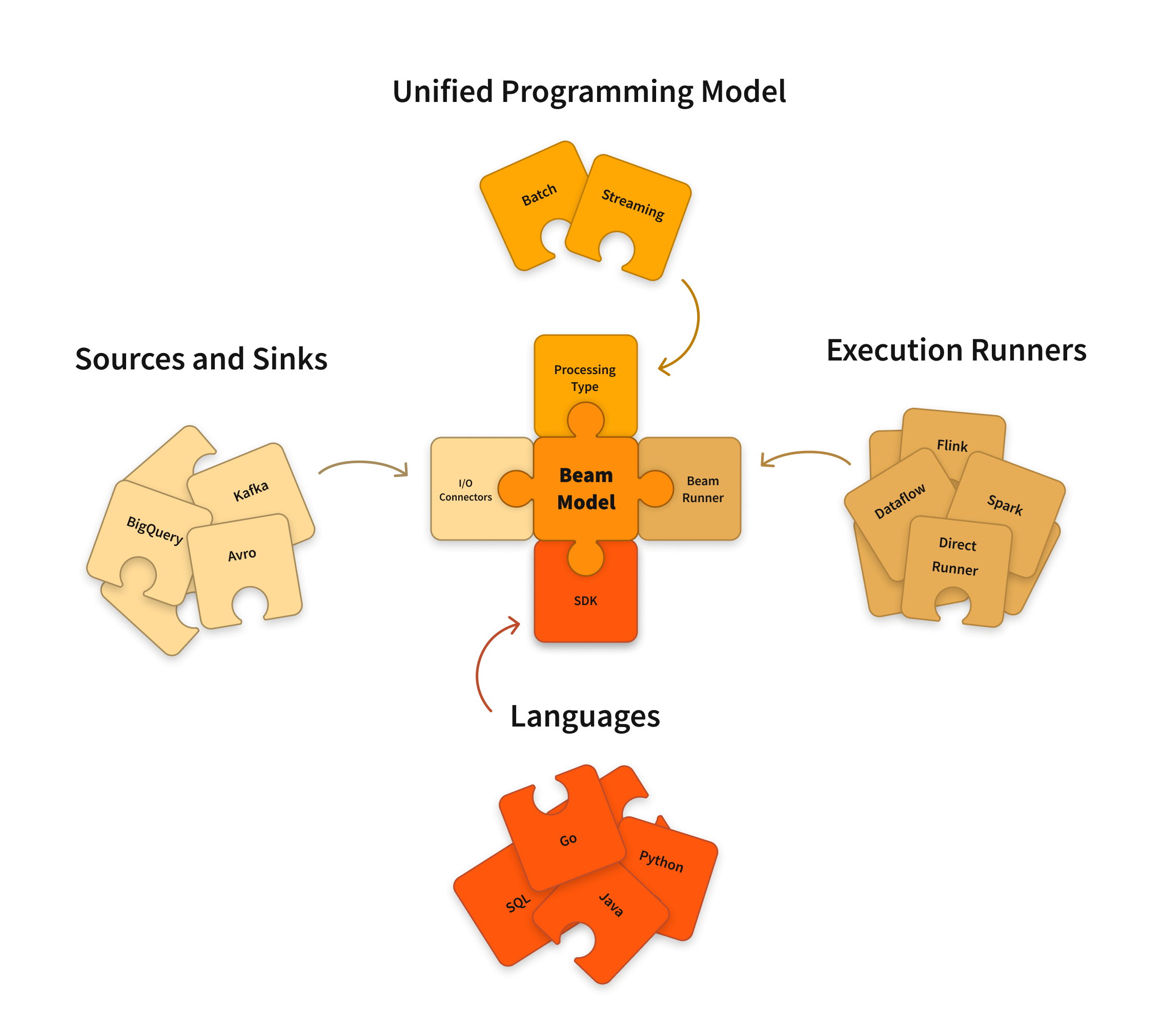

Apache Beam is a unified model for defining both batch and streaming data-parallel processing pipelines. It’s a ...

Caching Alternatives in Google Dataflow: Avoiding Quota Limits and Improving Performance

The problem: When building data pipelines, it’s very common to require an external API call to enrich, validate or ...

Putting ML Prototypes Into Production Using TensorFlow Extended (TFX)

Introduction: Machine learning projects start by building a proof-of-concept or a prototype. This entails choosing the ...