API Monitoring: Best Practices & Examples

Building an application is ultimately about serving its users, whether they engage with it through internal portals, external websites, or APIs. As business needs evolve, systems naturally change to adapt, and tracking their performance through the changes becomes critical.

API monitoring is the practice of using tools to continuously track and evaluate an API's performance, availability, and reliability by measuring key performance indicators (KPIs) such as response time, uptime, error rates, and throughput. It involves assessing an API’s success in terms of its availability and the accuracy of the data it provides.

To effectively implement API monitoring, it's important to understand the core components of the process and how to apply them in practice. This article outlines best practices for API monitoring, including what monitoring involves and the tools and techniques you can use to ensure seamless performance.

Summary of API monitoring best practices

The table below summarizes the best practices discussed in this article.

| Best Practices | Description |
|---|---|
| Implement proactive monitoring | Understand the API's intent clearly, design appropriate error-handling mechanisms for graceful failures, and log and track incoming requests to the API. |
| Define key performance metrics | Error rate and latency should be closely monitored because they directly reflect an API’s reliability and responsiveness. Monitoring throughput helps contextualize performance in relation to traffic trends. |
| Define SLIs and SLOs | Identify metrics that reflect user experience (SLIs), and set realistic, platform-appropriate performance targets (SLOs) to guide reliability and engineering priorities. |
| Establish performance baselines | Understand the capabilities of your API by conducting load and stress testing on your application. Once you have identified these baselines, set up alerts to detect anomalies or outliers that deviate from expected behavior. |
| Track outliers and anomalies | Use monitoring tools to track one-off spikes and incidents in real time. This will help you to stay ahead of growth spurts, security issues, and other irregular events. |
| Set up observability | Integrate tools like OpenTelemetry to collect standardized metrics, logs, and traces across your distributed systems. Use these tools to unify data collection, enhance visibility, and enable faster troubleshooting through correlated insights. |

Implement proactive monitoring

Proactive monitoring keeps your team in control rather than constantly in firefighting mode. There are two key aspects to this process: understanding the intent of the API and implementing logging.

Understand your API’s intent

The first step in implementing effective monitoring is developing a deep understanding of the API’s intent, backed by well-established, accessible documentation across the platform. This means collaborating closely with teams to define the right guardrails, including when the API should succeed, when it should fail, and what exactly constitutes a failure.

It’s important to be explicit and answer questions like:

- Which types of errors should be thrown?

- Which status codes should be returned?

- How should errors be messaged to the client?

Error messages should be clearly defined, consistent, and actionable. The data’s structure should also align with what the client actually needs for effective monitoring. Publish this information using OpenAPI, GraphQL schema, or gRPC proto.

Here’s an example OpenAPI specification for a getUser operation.

```yaml
openapi: 3.0.0
info:
  title: User API
  version: 1.0.0
paths:
  /user/{id}:
    get:
      summary: Retrieve a user by ID
      description: Returns a user object when a valid ID is provided. Returns appropriate error messages for invalid or missing IDs.
      parameters:
        - name: id
          in: path
          required: true
          schema:
            type: string
      responses:
        '200':
          description: A user object
          content:
            application/json:
              schema:
                $ref: '#/components/schemas/User'
        '400':
          description: Bad request (e.g., malformed ID)
          content:
            application/json:
              schema:
                $ref: '#/components/schemas/Error'
        '404':
          description: User not found
          content:
            application/json:
              schema:
                $ref: '#/components/schemas/Error'
        '500':
          description: Internal server error
          content:
            application/json:
              schema:
                $ref: '#/components/schemas/Error'
components:
  schemas:
    User:
      type: object
      properties:
        id:
          type: string
        name:
          type: string
    Error:
      type: object
      properties:
        code:
          type: integer
          example: 404
        message:
          type: string
          example: User not found
        details:
          type: string
          example: No user exists with the provided ID.
```

For each API in your service, align with the client up front on failure modes and clearly document expected HTTP error codes. This sets a strong foundation for effective and actionable error monitoring. A clear distinction between 4xx and 5xx errors, for example, helps quickly signal whether an issue is on the client or server side.

Add logging

Structured logging is important. Use a well-documented JSON schema or protobuf format to log API events in a consistent, machine-readable structure. Each log entry should be timestamped and include contextual metadata to support indexing, search, rule-based alerting, and statistical aggregation. While logs can provide deep insights and serve as a source of truth, the primary goal is to extract statistical metrics that enable proactive monitoring through threshold-based alerts.
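As a minimal sketch of how such entries might be produced, the snippet below uses only Python's standard `logging` and `json` modules. The `JsonFormatter` class, the `build_logger` helper, the `context` attribute, and the hard-coded service name are illustrative assumptions rather than part of any particular logging library.

```python
import json
import logging
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "timestamp": datetime.fromtimestamp(record.created, tz=timezone.utc).isoformat(),
            "level": record.levelname,
            "service": "user-service",  # hypothetical service name
            "message": record.getMessage(),
        }
        # Merge structured context passed via extra={"context": {...}}.
        entry.update(getattr(record, "context", {}))
        return json.dumps(entry)


def build_logger(name: str = "api") -> logging.Logger:
    """Return a logger that writes JSON lines to stderr."""
    logger = logging.getLogger(name)
    handler = logging.StreamHandler()
    handler.setFormatter(JsonFormatter())
    logger.handlers = [handler]
    logger.setLevel(logging.INFO)
    logger.propagate = False
    return logger


logger = build_logger()
logger.info(
    "Successfully retrieved user data",
    extra={"context": {
        "event": "api_request",
        "trace_id": "abc123xyz",
        "request": {"method": "GET", "endpoint": "/user/123",
                    "status_code": 200, "latency_ms": 150},
    }},
)
```

Keeping the request fields in a nested object makes it straightforward for a log pipeline to index `status_code` and `latency_ms` and derive metrics from them.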

For example, the log entry below records a successful API request to retrieve data from a user-service. It includes a timestamp, log level, and detailed context, such as the HTTP method, endpoint accessed, response status code, and request latency. It also captures user-specific metadata and a trace ID to facilitate correlation across distributed systems.

```json
{
  "timestamp": "2025-05-25T14:30:00Z",
  "level": "INFO",
  "service": "user-service",
  "event": "api_request",
  "request": {
    "method": "GET",
    "endpoint": "/user/123",
    "status_code": 200,
    "latency_ms": 150
  },
  "user": {
    "id": "123",
    "role": "admin"
  },
  "trace_id": "abc123xyz",
  "message": "Successfully retrieved user data"
}
```

Define key performance metrics

When monitoring APIs, several metrics should be tracked to paint a full picture of how your services are handling load and where bottlenecks might be. Monitoring the following metrics will help your team stay ahead of issues by understanding how often your API is called, its success vs. failure rate, how long it takes to respond, whether your infrastructure is getting maxed out, and the financial impact of your API usage.

Rate, errors, and duration (RED) metrics

RED metrics focus on API-level performance and reliability:

- Rate: Tracking call rate as requests per second per API allows businesses to use this data and trends for capacity planning and understanding traffic patterns.

- Errors: These are the most important indicator of user-facing failures. Monitoring the percentage of API calls that return 4xx and 5xx status codes helps teams identify the frequency and nature of errors affecting users.

- Duration (or latency): Track latency distributions using percentiles—such as p50, p95, and p99—to reveal slow dependencies and tail latency. For example, p99 latency reveals the slowest 1% of requests, often signaling issues like sluggish databases, congested queues, or problematic third-party services. Distributed tracing enhances this by measuring latency at each hop, which allows developers to pinpoint bottlenecks and correlate spans to resource contention.
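To make the three RED metrics concrete, here is a rough sketch that derives them from a window of request records. The `Request` dataclass and `red_metrics` function are hypothetical names, and the sketch assumes the records have already been parsed from logs or traces.

```python
from dataclasses import dataclass
from statistics import quantiles


@dataclass
class Request:
    status_code: int
    latency_ms: float


def red_metrics(requests: list[Request], window_seconds: float) -> dict[str, float]:
    """Compute rate, error percentage, and latency percentiles for one window.

    Requires at least two requests, because statistics.quantiles needs
    two or more data points.
    """
    latencies = [r.latency_ms for r in requests]
    errors = sum(1 for r in requests if r.status_code >= 400)
    # quantiles(n=100) returns 99 cut points; index k-1 is the k-th percentile.
    cuts = quantiles(latencies, n=100, method="inclusive")
    return {
        "rate_rps": len(requests) / window_seconds,
        "error_rate_pct": 100 * errors / len(requests),
        "p50_ms": cuts[49],
        "p95_ms": cuts[94],
        "p99_ms": cuts[98],
    }
```

In production these values usually come from a metrics backend's histogram aggregation rather than from raw records, but the arithmetic is the same.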

Utilization, saturation, and errors (USE) metrics

These metrics allow you to track the health of the infrastructure supporting APIs:

- Utilization: Using tools like Amazon CloudWatch or Node Exporter, measure the percentage of resource capacity consumed while serving a specific API. For example, measuring CPU utilization helps detect throttling risks: as a rule of thumb, once CPU utilization reaches around 80%, the infrastructure may struggle to sustain traffic and start throttling requests. Other important metrics include memory usage, which can predict out-of-memory (OOM) kills and pod or machine restarts, and disk I/O, since high volumes of read/write operations can increase API latency.

- Saturation: This is monitored through metrics like disk I/O wait times and connection pool saturation. For example, disk I/O wait times exceeding 10% indicate that a significant portion of writes are pending, which often correlates with elevated p99 latency.

- Errors: Track infrastructure failures such as out-of-memory (OOM) kills and disk I/O errors to quickly detect and respond to underlying hardware or platform issues.

Security KPIs

These metrics help detect and mitigate API vulnerabilities, enforce access controls, and ensure compliance with security best practices:

- Unauthorized attempts: Monitor the rate of `401 Unauthorized` and `403 Forbidden` responses by analyzing logs from your web application firewall (WAF) and API gateway.

- OWASP API top‑10 incident counts: Track incidents based on the OWASP API security top 10 risks. Tools like AWS WAF and Cloudflare API Shield use rule-based engines to tag requests against these risk categories.

- Incident trends: Counting incidents by rule label—rather than just raw 4xx/5xx counts—identifies which specific risk classes are active. Monitoring trends by API version can also help teams detect security regressions after deployments.
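The security KPIs above can be sketched as a simple aggregation over gateway or WAF log records. The record fields (`status_code`, `api_version`, `rule_label`) and the label values are assumptions for illustration and do not reflect the log format of any specific product.

```python
from collections import Counter


def security_kpis(records: list[dict]) -> dict:
    """Summarize unauthorized attempts and incidents per (API version, rule label)."""
    total = len(records)
    unauthorized = sum(1 for r in records if r["status_code"] in (401, 403))
    # Count only records the rule engine tagged with a risk label.
    by_label = Counter(
        (r["api_version"], r["rule_label"])
        for r in records
        if r.get("rule_label")
    )
    return {
        "unauthorized_rate_pct": 100 * unauthorized / total if total else 0.0,
        "incidents_by_version_and_label": by_label,
    }
```

Grouping by version alongside the label is what makes a regression visible: a label that is quiet on one version and active on the next points at the deployment in between.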

Data quality KPIs

Ensuring data integrity and contract fidelity helps maintain API reliability and prevents downstream processing errors.

- Schema validation: Implement middleware to enforce schema validation and instrument it to measure how well incoming and outgoing data adhere to the expected schema.

- Null or incorrect fields: Track the percentage of requests in which a required field is null, missing, or malformed. A rising null rate on a given API quickly shows when clients deviate from the required request payload.
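A null-rate metric of this kind might be computed as follows. The `null_rate_pct` function and the sample field names are hypothetical, and the sketch treats a missing key the same as an explicit null.

```python
def null_rate_pct(payloads: list[dict], required_fields: list[str]) -> dict[str, float]:
    """Percentage of payloads in which each required field is missing or None."""
    if not payloads:
        return {field: 0.0 for field in required_fields}
    return {
        field: 100 * sum(1 for p in payloads if p.get(field) is None) / len(payloads)
        for field in required_fields
    }


payloads = [
    {"id": "1", "name": "Ada"},
    {"id": "2", "name": None},
    {"id": "3"},
    {"id": None, "name": "Dee"},
]
print(null_rate_pct(payloads, ["id", "name"]))
```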

Cost

Finally, it’s important to evaluate an API’s success from a financial perspective. For example, measuring the cost per 1,000 calls enables teams to identify optimization opportunities, such as caching high-traffic endpoints and negotiating better rates with third-party providers.
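The arithmetic is simple enough to sketch directly. Both function names are illustrative, and the caching estimate assumes cost scales linearly with the number of paid upstream calls, which may not hold under tiered pricing.

```python
def cost_per_1000_calls(total_cost: float, total_calls: int) -> float:
    """Average spend per 1,000 API calls over a billing period."""
    return total_cost * 1000 / total_calls


def cost_with_cache(total_cost: float, cache_hit_ratio: float) -> float:
    """Estimated spend if every cache hit avoids a paid upstream call.

    Assumes cost is proportional to upstream call volume.
    """
    return total_cost * (1 - cache_hit_ratio)
```

For example, 250 currency units spent on 2,000,000 calls works out to 0.125 per 1,000 calls, and a 40% cache hit ratio would bring the estimated spend down to about 150 under the linear assumption.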

Define SLIs and SLOs

SLIs

A service-level indicator (SLI) is a quantitative measure of a service’s behavior that reflects what directly impacts users, such as availability, latency, or correctness. Defining clear SLIs is essential for aligning engineering efforts with user expectations and setting meaningful performance targets.

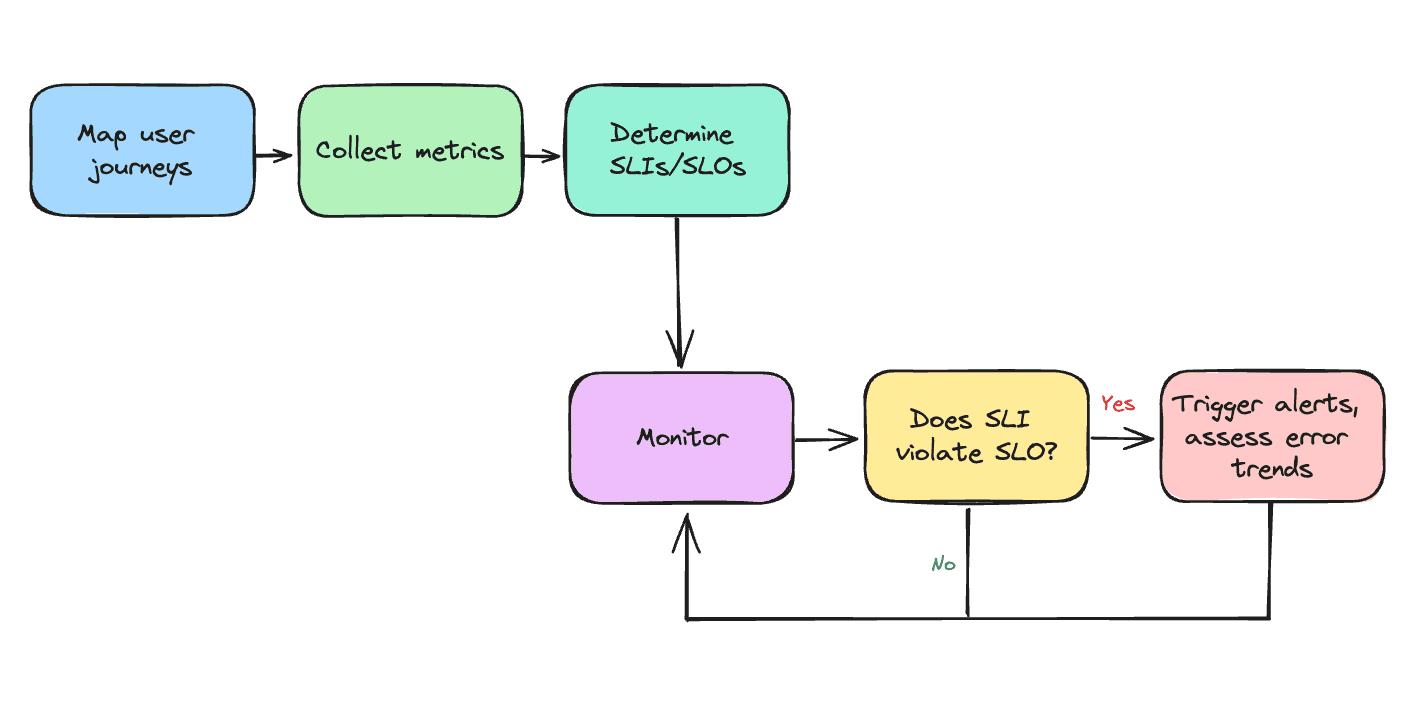

Start by mapping the critical user journeys and workflows your service supports. Identify the APIs responsible for each workflow and define what success looks like from the user's point of view. Good SLIs should capture the health of the system in ways that matter to end users.

Common SLIs include metrics like availability (e.g., percentage of successful responses), latency (e.g., p95 or p99 response times), correctness (e.g., percentage of accurate or complete data), and freshness (e.g., data update intervals). For example, if an e-commerce company wanted to define an SLI for its checkout experience, it might use the following:

“99% of requests to the checkout API must return an HTTP 200 status within 300 ms.”

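This is a good starting point because it combines both availability and latency, two key factors that directly impact the user’s ability to complete a purchase quickly and reliably. Strictly speaking, the SLI is the measured proportion of checkout requests that return HTTP 200 within 300 ms, and the 99% figure is the target set against it.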

SLOs

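A service-level objective (SLO) is a target value or range for a service-level indicator (SLI): it defines the threshold for acceptable performance. A service-level agreement (SLA), by contrast, is the external commitment to customers that typically builds on these objectives.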

A common formula for calculating SLO compliance is:

SLO compliance = (Good Events / Total Events) * 100%

Good events are requests that satisfy all of the API's defined SLIs, such as meeting both availability and latency thresholds.
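As a worked sketch of this calculation, the snippet below reuses the checkout example (HTTP 200 within 300 ms, 99% target) and also derives how much of the error budget remains. The function names and the `(status, latency_ms)` tuple layout are assumptions made for illustration.

```python
def slo_compliance_pct(
    requests: list[tuple[int, float]], latency_threshold_ms: float = 300.0
) -> float:
    """Share of requests that are 'good': HTTP 200 within the latency threshold."""
    good = sum(
        1 for status, latency_ms in requests
        if status == 200 and latency_ms <= latency_threshold_ms
    )
    return 100 * good / len(requests)


def error_budget_remaining_pct(compliance_pct: float, target_pct: float = 99.0) -> float:
    """Percentage of the error budget still unspent (negative means overspent)."""
    allowed_bad = 100 - target_pct
    actual_bad = 100 - compliance_pct
    return 100 * (1 - actual_bad / allowed_bad)


# 1,000 requests: 995 good, 3 too slow, 2 failed.
window = [(200, 120.0)] * 995 + [(200, 450.0)] * 3 + [(500, 80.0)] * 2
compliance = slo_compliance_pct(window)
print(compliance, error_budget_remaining_pct(compliance))
```

With 995 good events out of 1,000, compliance is 99.5%, which consumes half of the 1% error budget allowed by a 99% target.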

When defining SLO targets, consider different types of users, platforms, and the desired user experience. For example, mobile devices are generally more sensitive to latency than desktops with strong Wi-Fi connections, so it can be useful to define different latency SLIs—and corresponding SLOs—for each platform or user segment.

Before committing to SLO targets and establishing goals for them, observe system behavior using at least a two-week rolling average. This helps smooth out noise and provides a stable picture of system performance.

When defining SLOs, prioritize reliability and user impact over novelty or cutting-edge features. Identify which critical workflows require tighter SLOs with smaller error budgets. For example, a patient data write API may warrant stricter reliability guarantees than a patient signup API.

The goal should be to use these insights proactively, continuously identifying where you can improve performance before users are impacted. Measure the cost of downtime with respect to revenue, developer productivity, and support cost to help prioritize which areas require the most attention.

SLIs and SLOs play a key role in monitoring and alerting

Establish performance baselines

To effectively monitor an API, you must first define what “normal” looks like for each version. This includes acceptable levels of errors, latency, and request rates. Align early with your team and cross-functional stakeholders to agree on meaningful KPIs and what good performance means. Establishing baselines based on real usage data is critical—without them, monitoring is essentially guesswork.

Creating a reliable baseline requires rigor: observing normal behavior through synthetic testing, production traffic replay, statistical analysis, and transparent error-budget tracking. Once you have this foundation, deviations from normal patterns can trigger alerts and appropriate engineering responses. Here are three effective testing methods:

- Load testing measures system performance under expected normal and peak traffic conditions to ensure that it can handle anticipated user demand without degradation. This involves sending a steady, realistic volume of requests to simulate typical usage patterns and verify that the API meets performance targets such as response time and error rates.

- Stress testing involves pushing a system beyond normal operational capacity to find its breaking points or bottlenecks. One form of stress testing is burst testing, which sends a sudden high volume of traffic—such as 3,000 requests over 30 seconds—to observe how the API and underlying infrastructure handle traffic spikes.

- Chaos testing simulates worst-case scenarios by intentionally causing failures in critical dependencies while generating high traffic. This helps reveal how the API behaves under failure conditions and uncovers resilience weaknesses when dependent systems falter.
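A burst test of the kind described above can be sketched with a small harness. This is an assumption-laden illustration rather than a replacement for a dedicated load-testing tool: `run_burst` is a hypothetical helper, and `send` stands in for whatever function issues one request to your API and returns its HTTP status code.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def run_burst(send: Callable[[], int], total_requests: int, concurrency: int) -> dict[str, float]:
    """Fire total_requests calls as fast as `concurrency` workers allow."""

    def timed_call(_: int) -> tuple[int, float]:
        start = time.perf_counter()
        status = send()
        return status, (time.perf_counter() - start) * 1000

    started = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        results = list(pool.map(timed_call, range(total_requests)))
    elapsed = time.perf_counter() - started

    latencies = sorted(latency for _, latency in results)
    server_errors = sum(1 for status, _ in results if status >= 500)
    # Nearest-rank style index for the 99th percentile.
    p99_index = min(len(latencies) - 1, int(0.99 * len(latencies)))
    return {
        "achieved_rps": total_requests / elapsed,
        "error_rate_pct": 100 * server_errors / total_requests,
        "p99_ms": latencies[p99_index],
    }
```

Running the harness at increasing request counts and recording where p99 latency or error rate crosses the SLO gives a first estimate of the throughput ceiling for a given resource allocation.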

As a real-world example, consider an organization that introduced a foundation database for an attribution measurement use case expected to receive 10,000 requests per second. The development team ran stress and burst tests against the application that integrated with the database. Measuring requests per second (RPS), p99 latency, and error rate during each synthetic run allowed the team to determine the maximum throughput the API could sustain before breaching its SLOs, for a defined set of resources and a specific API version.

Ultimately, establishing baselines means taking time to understand the application layer and all of its dependencies to set realistic performance expectations. Run different types of performance tests to evaluate how each dependency behaves under pressure because your system’s baseline performance is essentially the sum total of how all dependencies perform together.

Track outliers and anomalies

Anomalies often first appear as subtle latency spikes on edge calls or unexpected drops in request volume for a specific shard. These issues are tricky to detect and often go unnoticed in complex microservice environments with intricate dependency graphs.

KPI-based monitoring typically captures 99% of traffic, but don’t overlook the importance of outliers. Check that your monitoring tools report on one-off incidents and anomalies, as these often reveal deeper insights into system bottlenecks, edge-case failures, or issues stemming from third-party integrations.

Aggregate error rates at each hop as a request moves from edge to origin, so you can trace the request and compare it against the service metrics for every hop. Use monitoring platforms that support multiple metric types, such as counters, gauges, and histograms, when measuring RED metrics.

While time series visualizations show how performance changes over time, distribution tracking helps reveal minimum performance levels and outliers that might otherwise get lost in the averages. This dual approach gives you a more complete view that helps identify unusual patterns, degraded performance, and potential reliability risks that may not show up in your top-line metrics. Use LLM-powered tools that are integrated with the logging/metrics platform to identify and analyze anomalies.
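The point about averages hiding outliers can be illustrated with a small sketch. The `latency_histogram` function and its bucket bounds are arbitrary choices for the example, not a recommended configuration.

```python
from statistics import mean


def latency_histogram(
    latencies_ms: list[float], bounds_ms: tuple[int, ...] = (50, 100, 250, 500, 1000)
) -> dict[str, int]:
    """Count latencies per bucket; values above the last bound go to an overflow bucket."""
    overflow = f"> {bounds_ms[-1]} ms"
    counts = {f"<= {bound} ms": 0 for bound in bounds_ms}
    counts[overflow] = 0
    for latency in latencies_ms:
        for bound in bounds_ms:
            if latency <= bound:
                counts[f"<= {bound} ms"] += 1
                break
        else:
            counts[overflow] += 1
    return counts


# 98 fast requests and 2 very slow ones.
latencies = [40.0] * 98 + [2000.0, 3000.0]
print(mean(latencies))
print(latency_histogram(latencies))
```

Here the mean is 89.2 ms, which looks healthy, while the histogram shows two requests in the overflow bucket that took multiple seconds.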

Set up observability

Traditional monitoring plays a crucial role in tracking KPIs and detecting anomalies, providing the foundation for understanding your system’s health. However, in modern distributed environments, monitoring alone may not provide the full picture.

There are two reasons for this:

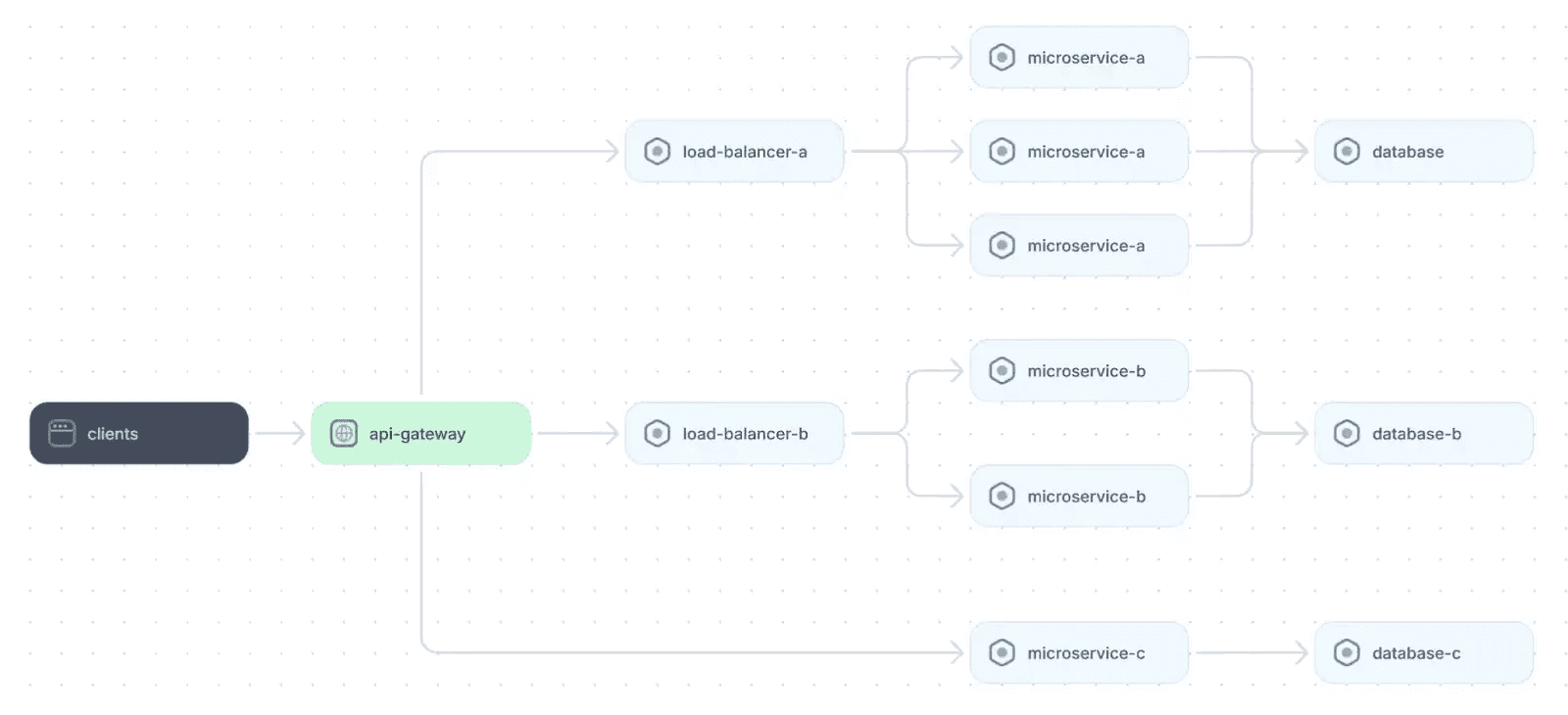

First, as services scale and become more decoupled, a single user request can span dozens of microservices, each deployed independently across regions, teams, and runtime environments. Without unified visibility across metrics, logs, and traces, it's nearly impossible to pinpoint where things are breaking, why they’re slow, or how one service’s degradation is affecting the rest of the system.

Example microservices architecture

Second, traditional monitoring tools often operate in silos, requiring engineers to manually correlate data from different sources. This not only slows down root cause analysis and increases mean time to resolution (MTTR), but also reduces confidence in deployments and recovery efforts.

These challenges have driven the recent shift toward observability, which empowers developers with a more integrated and correlated approach to understanding system behavior. Observability combines different types of telemetry data—such as metrics, logs, and distributed traces—to offer a unified, end-to-end perspective on system behavior. One of its primary goals is to empower developers and operations teams to diagnose issues early, before they impact end users or reach production. Let’s take a look at how observability might be implemented.

Collect telemetry data

Start by connecting your services to software that collects telemetry from the traffic flowing through the system. These metrics become actionable when combined with your earlier work of defining your KPIs and establishing baselines for them. Once you know what “normal” looks like, you can quickly detect and respond to deviations.

One of the most effective tools for collecting and standardizing metrics, logs, and traces is OpenTelemetry. It is vendor-neutral, which allows collected data to be integrated with multiple platforms for visualization and alerting. It supports a wide variety of programming languages (Go, Python, Java, etc.) and can be deployed as SDKs, sidecars, DaemonSets, or at the gateway level. It also allows developers to tag each data point with consistent metadata like service name, version, and deployment environment, making it easier to aggregate and analyze data at the service level.

For example, the code block below demonstrates how to set up OpenTelemetry in a Python application to collect both traces and metrics, tag them with meaningful metadata (such as service name, version, and environment), and export this telemetry data to a backend for further analysis and visualization.

```python
from opentelemetry import metrics, trace
from opentelemetry.exporter.otlp.proto.grpc.metric_exporter import OTLPMetricExporter
from opentelemetry.exporter.otlp.proto.grpc.trace_exporter import OTLPSpanExporter
from opentelemetry.sdk.metrics import MeterProvider
from opentelemetry.sdk.metrics.export import PeriodicExportingMetricReader
from opentelemetry.sdk.resources import Resource
from opentelemetry.sdk.trace import TracerProvider
from opentelemetry.sdk.trace.export import BatchSpanProcessor

# Define common resource metadata
resource = Resource.create({
    "service.name": "user-service",
    "service.version": "1.2.3",
    "deployment.environment": "production",
})

# Set up tracing: spans are batched and exported over OTLP/gRPC
trace.set_tracer_provider(TracerProvider(resource=resource))
trace.get_tracer_provider().add_span_processor(
    BatchSpanProcessor(OTLPSpanExporter())
)
tracer = trace.get_tracer(__name__)

# Set up metrics: the reader exports collected metrics every 10 seconds
metric_reader = PeriodicExportingMetricReader(
    OTLPMetricExporter(), export_interval_millis=10_000
)
metrics.set_meter_provider(
    MeterProvider(resource=resource, metric_readers=[metric_reader])
)
meter = metrics.get_meter(__name__)

# Define a counter metric
request_counter = meter.create_counter(
    name="api_requests_total",
    description="Total number of API requests",
    unit="1",
)

# Simulate a request and trace it
with tracer.start_as_current_span("handle_request"):
    print("Handling API request...")
    request_counter.add(1, {"endpoint": "/user", "method": "GET"})
```

Integrate with observability tools

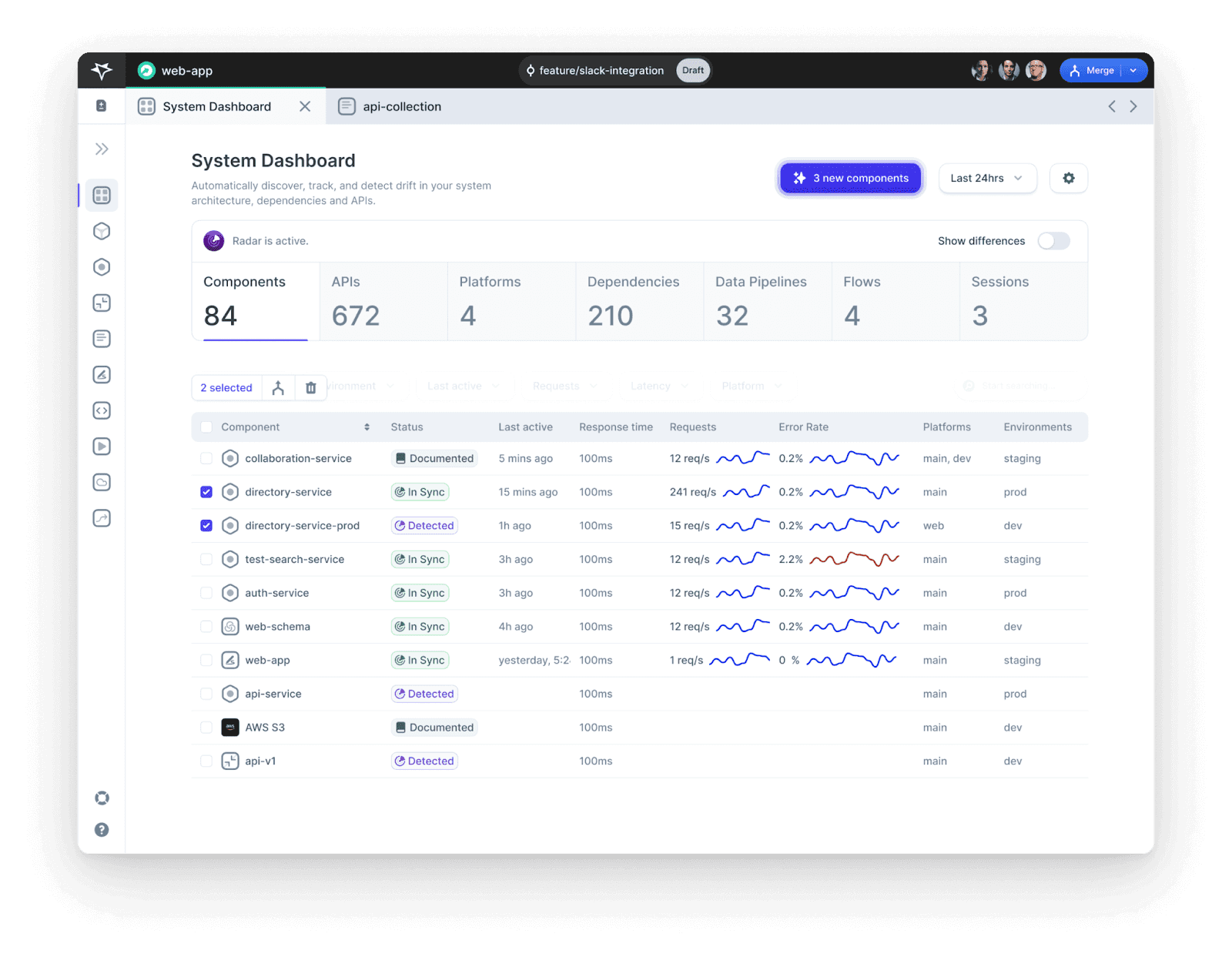

Once OpenTelemetry is integrated into your system, its data can be exported to a platform of your choice. For example, platforms like Multiplayer leverage this data to create a dynamic and real-time map of services, APIs, and dependencies. Multiplayer also consolidates metrics, logs, and traces into its System Dashboard, which summarizes telemetry data across different platforms, components, APIs, and dependencies.

Multiplayer’s System Dashboard

A core benefit of this type of platform is that it provides a centralized, up-to-date view of system architecture combined with telemetry data. When developers have easy access to this information, it allows them to diagnose and remediate issues more quickly and build new features with confidence that changes will not unintentionally impact other parts of the system.

Implement adaptive alerting

Another key aspect of observability is alerting, which complements dashboards by automatically notifying teams when key metrics deviate from normal patterns or cross critical thresholds.

Rather than relying on static thresholds, it is better to compute rolling baselines, such as a two-week sliding window, and trigger alerts when metrics exceed a set number of standard deviations (σ) above the baseline or when the error-budget burn rate surpasses twice the target.
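The two alert conditions above might be sketched as follows. The function names are hypothetical, `history` is assumed to hold the samples from the rolling window (for example, two weeks of per-minute values), and the default of three standard deviations is an illustrative choice to be tuned.

```python
from statistics import mean, stdev


def exceeds_baseline(history: list[float], current: float, max_sigmas: float = 3.0) -> bool:
    """True when `current` sits more than max_sigmas above the rolling baseline."""
    baseline = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # Flat history: any increase is a deviation.
        return current > baseline
    return (current - baseline) / sigma > max_sigmas


def burn_rate(observed_error_pct: float, slo_target_pct: float) -> float:
    """Error-budget consumption relative to the allowed pace (1.0 = exactly on budget)."""
    return observed_error_pct / (100 - slo_target_pct)


def should_alert(history: list[float], current: float,
                 observed_error_pct: float, slo_target_pct: float) -> bool:
    """Alert on a baseline deviation or a burn rate above twice the allowed pace."""
    return exceeds_baseline(history, current) or burn_rate(observed_error_pct, slo_target_pct) > 2.0
```

For a 99% SLO, an observed error rate of 3% corresponds to a burn rate of 3.0, so the error budget would be exhausted three times faster than planned.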

For high-cardinality metrics, applying the modified z-score method (which uses the median and the median absolute deviation instead of the mean and standard deviation) keeps anomaly detection robust to outliers. Seasonality patterns like hour-of-day or day-of-week can be modeled with techniques like Holt-Winters to reduce false positives. To minimize noise from transient spikes or one-off events, fine-tune these thresholds over time based on actual usage patterns.
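A minimal sketch of the modified z-score follows, using the conventional 0.6745 scaling constant and a commonly cited cutoff of 3.5. The cutoff is an assumption to tune for your data, and the function names are illustrative.

```python
from statistics import median


def modified_z_scores(values: list[float]) -> list[float]:
    """Score each value by its distance from the median, scaled by the MAD."""
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        # More than half the values equal the median; scores are undefined, so return zeros.
        return [0.0 for _ in values]
    return [0.6745 * (v - med) / mad for v in values]


def find_outliers(values: list[float], cutoff: float = 3.5) -> list[float]:
    """Return the values whose absolute modified z-score exceeds the cutoff."""
    return [v for v, z in zip(values, modified_z_scores(values)) if abs(z) > cutoff]


latencies_ms = [10.0, 11.0, 9.0, 10.0, 12.0, 10.0, 95.0]
print(find_outliers(latencies_ms))
```

Because the median and MAD barely move when a single extreme value appears, the spike at 95 ms is flagged without inflating the baseline the way a mean and standard deviation would.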

Once thresholds are defined, alerts should be integrated with tools like Slack or on-call management systems for proactive monitoring. The ultimate goal is to establish a continuous cycle of monitoring → alerting → resolving, which helps teams catch issues early and continuously improve system reliability.

Last thoughts

Effective APIs communicate their behavior through performance metrics. Tracking these metrics and gathering actionable insights through comprehensive observability practices adds enormous value by enabling developers to gain a deeper understanding of the API and identify issues.

Leveraging platforms like Multiplayer to unify and visualize telemetry data empowers teams to proactively monitor APIs, identify dependencies, and optimize performance. Well-defined APIs combined with robust monitoring and observability mechanisms foster a healthy team culture and development environment, ultimately boosting productivity and driving greater product value.