Data comes in all shapes and forms, and duplicate records turn up in almost every kind of dataset. Whether you're dealing with web-based data or working through a truckload of sales data, duplicate values will skew your analysis.

Do you use SQL to crunch your numbers and perform long queries on your data stacks? If yes, then this guide on managing SQL duplicates will be an absolute delight for you.

Here are a few ways to manage duplicates using SQL.

1. Counting Duplicates Using the Group by Clause

SQL is a versatile query language with many built-in functions that simplify calculations. If you have experience with SQL's aggregate functions, you're probably already familiar with the group by clause and what it can be used for.

The group by clause is one of the most basic SQL constructs and is ideal for dealing with multiple records. It collapses rows that share the same value in the grouped column(s) into a single row, and aggregate functions such as sum, count, avg, and many others then compute one value for each group.

Depending on the scenario, you can use group by to find duplicates within a single column or across multiple columns.
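To see group by and aggregate functions working together, here is a minimal sketch using Python's built-in sqlite3 module, so it runs without a database server. It assumes an in-memory SQLite table named product_dups as a stand-in for sahil.product_dups, loaded with the sample ProductID/Orders rows used in the next subsection; the aliases row_count, total_orders, and avg_orders are hypothetical names chosen for illustration.

```python
import sqlite3

# Sample (ProductID, Orders) rows from the article's product_dups table.
ROWS = [
    (2, 7), (2, 8), (2, 10), (9, 6), (10, 1), (10, 5), (12, 5),
    (12, 12), (12, 7), (14, 1), (14, 1), (47, 4), (47, 4),
]

# In-memory SQLite table standing in for sahil.product_dups (assumption).
conn = sqlite3.connect(":memory:")
conn.execute("create table product_dups (productid integer, orders integer)")
conn.executemany("insert into product_dups values (?, ?)", ROWS)

# group by collapses rows with the same productid into one group;
# each aggregate function then returns a single value per group.
summary = conn.execute(
    """
    select productid,
           count(*)    as row_count,
           sum(orders) as total_orders,
           avg(orders) as avg_orders
    from product_dups
    group by productid
    order by productid
    """
).fetchall()

for row in summary:
    print(row)
```

Each printed tuple describes one Product ID; for example, ID 2 has three rows whose Orders values sum to 25.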

a. Count Duplicates in a Single Column

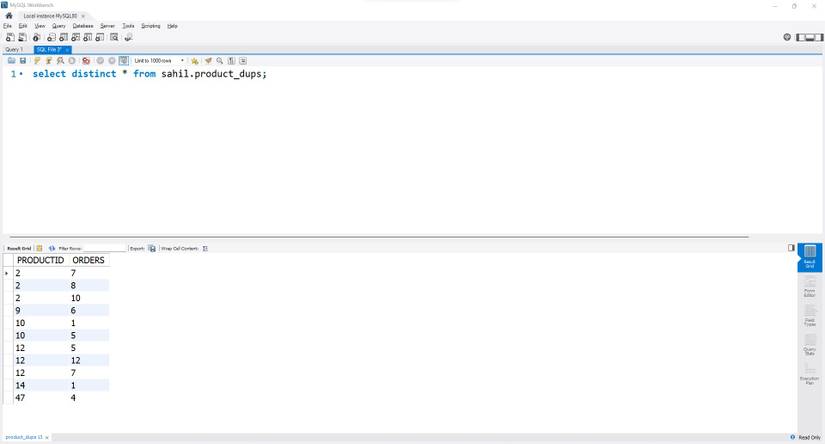

Suppose you have the following data table with two columns: ProductID and Orders.

| ProductID | Orders |
| --- | --- |
| 2 | 7 |
| 2 | 8 |
| 2 | 10 |
| 9 | 6 |
| 10 | 1 |
| 10 | 5 |
| 12 | 5 |
| 12 | 12 |
| 12 | 7 |
| 14 | 1 |
| 14 | 1 |
| 47 | 4 |
| 47 | 4 |

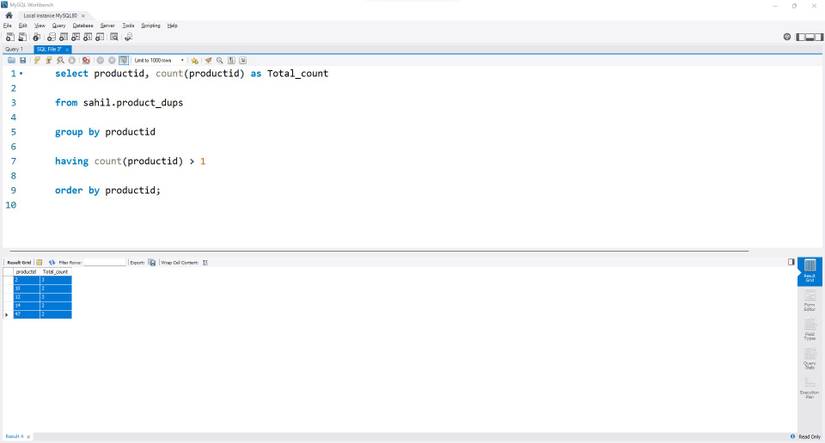

To find duplicate Product IDs, you can use the group by clause together with the having clause, which filters the aggregated values, as follows:

select productid, count(productid) as Total_count
from sahil.product_dups
group by productid
having count(productid) > 1
order by productid;

As with a typical SQL statement, you start by defining the columns you want to display in the final result. In this case, we want to display each duplicated ProductID along with the number of times it occurs.

In the first segment, list the ProductID column in the select statement, followed by count(productid), which counts the occurrences of each ID.

Next, define the source table using the from clause. Since count is an aggregate function, you need the group by clause to collapse rows with the same ProductID into one group, so that count returns one total per ID.

Remember, the idea is to list the duplicate values within the ProductID column, so you must keep only the IDs that occur more than once. The having clause filters the aggregated data, so the condition count(productid) > 1 displays the desired results. You can't use where for this, because where filters individual rows before they are grouped.

Finally, the order by clause sorts the results by ProductID in ascending order, which is the default.

The output is as follows:

| productid | Total_count |
| --- | --- |
| 2 | 3 |
| 10 | 2 |
| 12 | 3 |
| 14 | 2 |
| 47 | 2 |
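If you want to run the single-column query yourself, here is a minimal sketch using Python's built-in sqlite3 module. It assumes an in-memory table named product_dups as a stand-in for sahil.product_dups (SQLite has no sahil schema); apart from the table name, the query text matches the one above.

```python
import sqlite3

# Sample (ProductID, Orders) rows from the article's product_dups table.
ROWS = [
    (2, 7), (2, 8), (2, 10), (9, 6), (10, 1), (10, 5), (12, 5),
    (12, 12), (12, 7), (14, 1), (14, 1), (47, 4), (47, 4),
]

# In-memory SQLite table standing in for sahil.product_dups (assumption).
conn = sqlite3.connect(":memory:")
conn.execute("create table product_dups (productid integer, orders integer)")
conn.executemany("insert into product_dups values (?, ?)", ROWS)

# Count each ProductID and keep only the groups with more than one row.
dup_ids = conn.execute(
    """
    select productid, count(productid) as Total_count
    from product_dups
    group by productid
    having count(productid) > 1
    order by productid
    """
).fetchall()

print(dup_ids)  # [(2, 3), (10, 2), (12, 3), (14, 2), (47, 2)]
```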

b. Count Duplicates in Multiple Columns

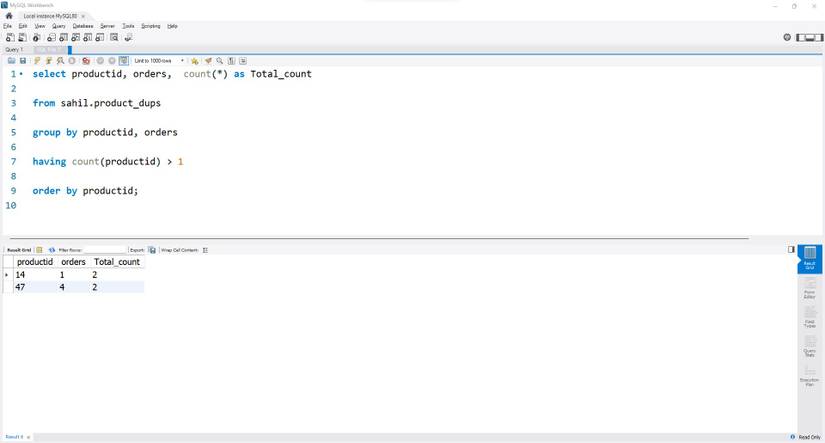

When you want to count duplicates across multiple columns without writing multiple SQL queries, you can extend the above code with a few tweaks. For example, to display duplicate rows (rows where both ProductID and Orders match), you can use the following code:

select productid, orders, count(*) as Total_count
from sahil.product_dups
group by productid, orders
having count(*) > 1
order by productid;

In the output, you will notice that only two rows are displayed. Because both columns appear in the select statement and in the group by clause, each group is a unique combination of ProductID and Orders, and the count shows how many rows share that combination.

Here, count(*) counts every row in each group. What turns the query into a duplicate-row check is grouping by both columns, not count(*) itself: count(productid) would return the same numbers for this data, but count(*) also counts rows containing NULLs, whereas count(column) skips rows where that column is NULL.

The output is shown below:

| productid | orders | Total_count |
| --- | --- | --- |
| 14 | 1 | 2 |
| 47 | 4 | 2 |

Only Product IDs 14 and 47 appear, because they are the only IDs whose Orders values are also repeated.
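The sketch below reproduces the multi-column check with Python's built-in sqlite3 module, using an in-memory product_dups table as an assumed stand-in for sahil.product_dups. It also adds two hypothetical rows with a NULL Orders value (not part of the article's data) purely to show how count(*) and count(orders) differ.

```python
import sqlite3

# Sample (ProductID, Orders) rows from the article's product_dups table.
ROWS = [
    (2, 7), (2, 8), (2, 10), (9, 6), (10, 1), (10, 5), (12, 5),
    (12, 12), (12, 7), (14, 1), (14, 1), (47, 4), (47, 4),
]

# In-memory SQLite table standing in for sahil.product_dups (assumption).
conn = sqlite3.connect(":memory:")
conn.execute("create table product_dups (productid integer, orders integer)")
conn.executemany("insert into product_dups values (?, ?)", ROWS)

# Group on both columns so each group is one (productid, orders) combination.
dup_rows = conn.execute(
    """
    select productid, orders, count(*) as Total_count
    from product_dups
    group by productid, orders
    having count(*) > 1
    order by productid
    """
).fetchall()
print(dup_rows)  # [(14, 1, 2), (47, 4, 2)]

# Hypothetical rows with a NULL Orders value, added only for this comparison:
# count(*) counts both rows, count(orders) skips the NULLs.
conn.executemany("insert into product_dups values (?, ?)", [(50, None), (50, None)])
null_check = conn.execute(
    """
    select productid, count(*) as all_rows, count(orders) as non_null_orders
    from product_dups
    where productid = 50
    group by productid, orders
    """
).fetchall()
print(null_check)  # [(50, 2, 0)]
```

Note that group by places the NULL values in a single group, which is why the two hypothetical rows come back as one row.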

2. Flagging Duplicates With the row_number() Function

While the group by and having combination is the simplest way to find duplicates within a table, you can also flag them with the row_number() function. row_number() is one of SQL's window functions, which compute a value for each row from a set (window) of related rows without collapsing those rows the way group by does.

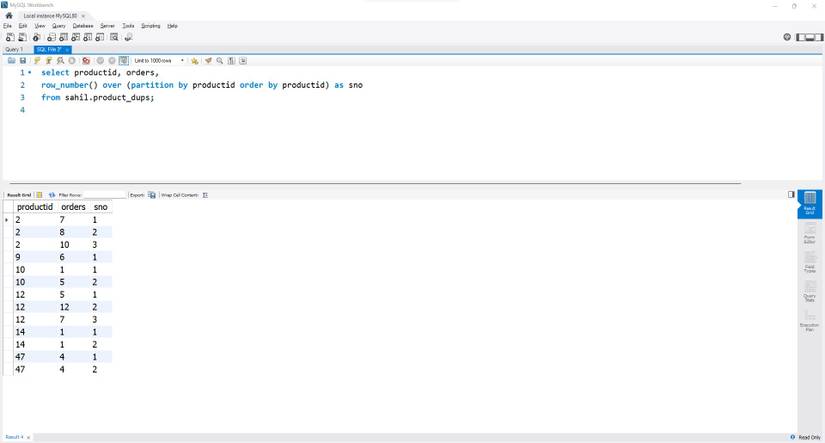

Here's how you can flag duplicates using the row_number() function:

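select productid, orders,
row_number() over (partition by productid order by orders) as sno
from sahil.product_dups;

The row_number() function assigns a sequential number to each row. The partition by productid clause restarts that numbering for every Product ID, so the first row for an ID is numbered 1, the next 2, then 3, and so forth. Any row with sno greater than 1 therefore carries a duplicate Product ID.

If you leave out partition by, row_number() treats the whole table as one sequence and gives every row a unique serial number, which won't suit your purpose.

The order by clause inside over() decides which row in each partition is numbered first; you can choose between ascending (default) and descending order. Ordering by productid itself would leave that choice undefined, since every row in a partition shares the same ProductID, which is why this query orders by the Orders column.

Finally, the as sno alias names the new column so you can filter on it later. Keep in mind that a window function can't be used in the where clause of the same query, so filtering on sno requires wrapping the query in a subquery or a CTE.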
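Here is a minimal sketch of flagging duplicates with row_number() in Python's built-in sqlite3 module. It assumes an in-memory product_dups table in place of sahil.product_dups and a bundled SQLite of version 3.25 or newer, which is when SQLite added window functions. Each partition is ordered by orders so the numbering is deterministic, and the duplicate rows are filtered in an outer query because sno can't be referenced in the inner query's where clause.

```python
import sqlite3

# Sample (ProductID, Orders) rows from the article's product_dups table.
ROWS = [
    (2, 7), (2, 8), (2, 10), (9, 6), (10, 1), (10, 5), (12, 5),
    (12, 12), (12, 7), (14, 1), (14, 1), (47, 4), (47, 4),
]

# In-memory SQLite table standing in for sahil.product_dups (assumption).
conn = sqlite3.connect(":memory:")
conn.execute("create table product_dups (productid integer, orders integer)")
conn.executemany("insert into product_dups values (?, ?)", ROWS)

# Number the rows within each ProductID partition.
flagged = conn.execute(
    """
    select productid, orders,
           row_number() over (partition by productid order by orders) as sno
    from product_dups
    order by productid, sno
    """
).fetchall()

# Keep only the rows numbered 2 or higher, i.e. repeated Product IDs.
duplicates = conn.execute(
    """
    select productid, orders
    from (
        select productid, orders,
               row_number() over (partition by productid order by orders) as sno
        from product_dups
    )
    where sno > 1
    order by productid, orders
    """
).fetchall()

# Without partition by, the whole table is one sequence numbered 1 through 13.
unpartitioned = conn.execute(
    "select row_number() over (order by productid, orders) from product_dups"
).fetchall()

print(duplicates)
# [(2, 8), (2, 10), (10, 5), (12, 7), (12, 12), (14, 1), (47, 4)]
```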

3. Deleting Duplicate Rows From a SQL Table

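Since duplicate values in a table can skew your analysis, eliminating them during the data-cleaning stage is often essential. SQL offers several ways to track down and remove duplicate values. Note that the two methods below return a deduplicated result set and leave the stored table unchanged; to clean the table itself, you would save that result to a new table or run a delete statement.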
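To physically remove the extra rows rather than just hide them from a query result, one option in SQLite is to keep the first-inserted row of each (productid, orders) combination and delete the rest. This is a minimal sketch using Python's built-in sqlite3 module with an in-memory product_dups table as an assumed stand-in for sahil.product_dups; it relies on SQLite's implicit rowid column, so other databases would need a different unique key.

```python
import sqlite3

# Sample (ProductID, Orders) rows from the article's product_dups table.
ROWS = [
    (2, 7), (2, 8), (2, 10), (9, 6), (10, 1), (10, 5), (12, 5),
    (12, 12), (12, 7), (14, 1), (14, 1), (47, 4), (47, 4),
]

# In-memory SQLite table standing in for sahil.product_dups (assumption).
conn = sqlite3.connect(":memory:")
conn.execute("create table product_dups (productid integer, orders integer)")
conn.executemany("insert into product_dups values (?, ?)", ROWS)

# Keep the lowest rowid of every (productid, orders) group; delete all others.
# rowid is SQLite's implicit row identifier.
conn.execute(
    """
    delete from product_dups
    where rowid not in (
        select min(rowid)
        from product_dups
        group by productid, orders
    )
    """
)
conn.commit()

remaining = conn.execute(
    "select productid, orders from product_dups order by productid, orders"
).fetchall()
print(len(remaining))  # 11
```

Only the second copies of (14, 1) and (47, 4) are deleted; rows that share a Product ID but have different Orders values are kept.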

a. Using the distinct Keyword

The distinct keyword is probably the most common and frequently used way to remove duplicates from a query's result. You can use it to remove duplicate values from a single column or duplicate rows in one go.

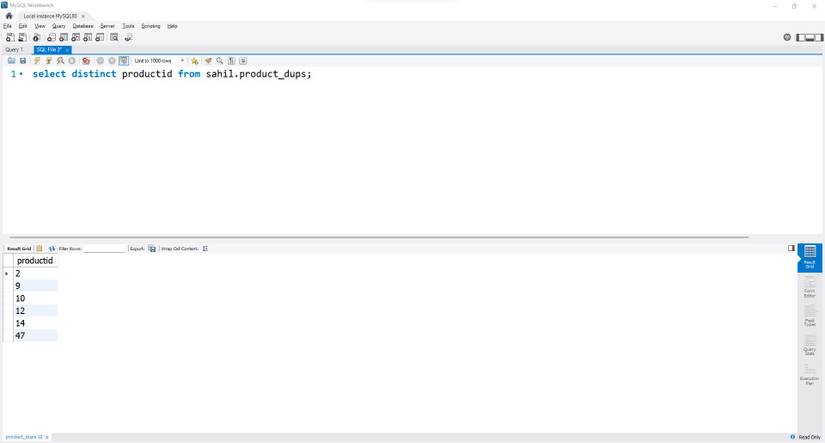

Here's how you can remove duplicates from a single column:

select distinct productid from sahil.product_dups;

The output returns a list of all unique Product IDs from the table.

To remove duplicate rows, you can tweak the above code as follows:

select distinct * from sahil.product_dups;

The output returns a list of all unique rows from the table. Looking at the output, you will notice that the rows for Product IDs 14 and 47 appear only once in the final result.
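Both distinct queries can be tried with Python's built-in sqlite3 module. This sketch assumes an in-memory product_dups table in place of sahil.product_dups and adds order by clauses so the results print in a predictable order. It also counts the stored rows afterwards to show that distinct only affects the query result, not the table.

```python
import sqlite3

# Sample (ProductID, Orders) rows from the article's product_dups table.
ROWS = [
    (2, 7), (2, 8), (2, 10), (9, 6), (10, 1), (10, 5), (12, 5),
    (12, 12), (12, 7), (14, 1), (14, 1), (47, 4), (47, 4),
]

# In-memory SQLite table standing in for sahil.product_dups (assumption).
conn = sqlite3.connect(":memory:")
conn.execute("create table product_dups (productid integer, orders integer)")
conn.executemany("insert into product_dups values (?, ?)", ROWS)

# Unique values of a single column.
unique_ids = [
    row[0]
    for row in conn.execute("select distinct productid from product_dups order by productid")
]

# Unique combinations of all columns, i.e. unique rows.
unique_rows = conn.execute(
    "select distinct * from product_dups order by productid, orders"
).fetchall()

# The stored table still holds all 13 original rows.
stored_count = conn.execute("select count(*) from product_dups").fetchone()[0]

print(unique_ids)        # [2, 9, 10, 12, 14, 47]
print(len(unique_rows))  # 11
print(stored_count)      # 13
```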

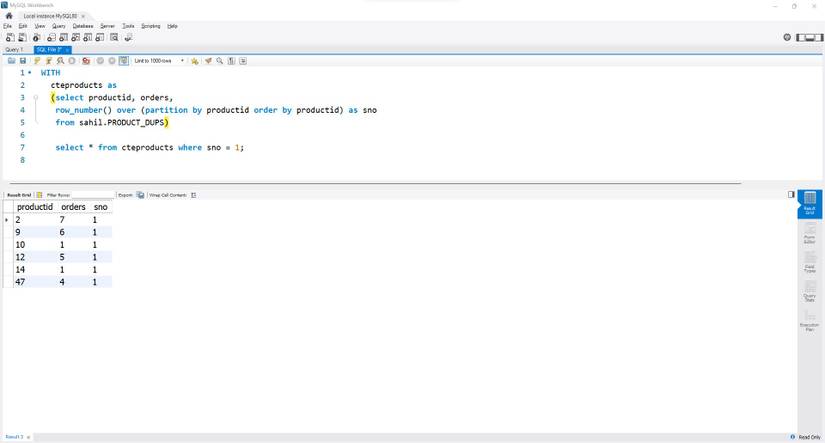

b. Using the Common Table Expression (CTE) Method

The Common Table Expression (CTE) method works a little differently from the queries above. A CTE is a named, temporary result set that behaves much like a temporary table, except that it is virtual: you can reference it only while the query that defines it is executing.

The biggest benefit is that you don't have to run a separate query to drop it later, because it ceases to exist as soon as the query finishes. Using the CTE method, you can use the code below to find duplicates and filter them out of the result.

with cteproducts as
(select productid, orders,
row_number() over (partition by productid order by orders) as sno
from sahil.product_dups)
select * from cteproducts
where sno = 1;

You define a CTE with the with keyword, followed by the name of the temporary virtual table (cteproducts here). You can then query that name like any other table, which is what makes it possible to filter on the new sno column.

In the next part, assign row numbers to your Product IDs using the row_number() function. Because the numbering restarts for each Product ID and each partition is sorted by Orders, the first row for every ID gets sno = 1 and each repeat gets 2, 3, and so on.

Finally, filter the newly created sno column with the outer select statement. Setting the filter to sno = 1 keeps exactly one row per Product ID. Note that this also drops rows for IDs 2, 10, and 12 whose Orders values differ; to remove only exact duplicate rows, partition by both columns instead (partition by productid, orders).
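The sketch below runs the CTE both ways with Python's built-in sqlite3 module: partitioned by productid alone (one row per Product ID) and partitioned by both columns (exact duplicate rows removed). It assumes an in-memory product_dups table in place of sahil.product_dups, SQLite 3.25 or newer for window function support, and orders each partition by orders so the kept row is predictable.

```python
import sqlite3

# Sample (ProductID, Orders) rows from the article's product_dups table.
ROWS = [
    (2, 7), (2, 8), (2, 10), (9, 6), (10, 1), (10, 5), (12, 5),
    (12, 12), (12, 7), (14, 1), (14, 1), (47, 4), (47, 4),
]

# In-memory SQLite table standing in for sahil.product_dups (assumption).
conn = sqlite3.connect(":memory:")
conn.execute("create table product_dups (productid integer, orders integer)")
conn.executemany("insert into product_dups values (?, ?)", ROWS)

# Partition by productid only: keeps the lowest-Orders row for each ID.
one_per_id = conn.execute(
    """
    with cteproducts as (
        select productid, orders,
               row_number() over (partition by productid order by orders) as sno
        from product_dups
    )
    select productid, orders from cteproducts
    where sno = 1
    order by productid
    """
).fetchall()

# Partition by both columns: only fully identical rows share a partition.
exact_dedup = conn.execute(
    """
    with cteproducts as (
        select productid, orders,
               row_number() over (partition by productid, orders order by orders) as sno
        from product_dups
    )
    select productid, orders from cteproducts
    where sno = 1
    order by productid, orders
    """
).fetchall()

print(one_per_id)        # [(2, 7), (9, 6), (10, 1), (12, 5), (14, 1), (47, 4)]
print(len(exact_dedup))  # 11
```

The second query returns the same rows as select distinct *; the CTE form becomes more useful when you need to choose which copy to keep, for example by ordering on another column.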

Learn to Use SQL the Easy Way

SQL and its variants have become the talk of the town, thanks to their ability to query and manage relational databases. From writing simple queries to performing elaborate analyses with subqueries, this language has a little of everything.

However, before writing serious queries, you need to hone your skills and practice regularly to become an adept coder. One fun way to learn SQL is to apply your knowledge in games, which lets you pick up coding nuances while having a little fun along the way.