Introduction

The Kubernetes metrics server is required to run the kubectl top command, which shows the resource utilization (CPU and memory) of pods and nodes. While setting up the metrics server, we ran into a few issues. They are documented here for future reference, and may help anyone who faces similar issues.

Until now I had been using an old version of the metrics server, so it was time to upgrade. This time I tried the latest version of the metrics-server deployment.

If you are looking to set up a Kubernetes cluster using Ansible, have a look at this guide.

Installing Latest Metrics

The latest Kubernetes metrics-server manifests can be downloaded from here. When I started the upgrade, the latest version was v0.4.1.

I thought the issues would have been fixed in the latest version, but they still exist: even with the latest release I ran into a few of them.

    $ kubectl apply -f https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.4.1/components.yaml

Output for reference:

    ansible@k8mas1:~$ kubectl apply -f https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.4.1/components.yaml
    serviceaccount/metrics-server created
    clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created
    clusterrole.rbac.authorization.k8s.io/system:metrics-server created
    rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created
    clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created
    clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created
    service/metrics-server created
    deployment.apps/metrics-server created
    apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created
    ansible@k8mas1:~$

Fixing Kubernetes Metrics for Pods

This guide walks through a small fix.
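Before editing anything, it can help to confirm which part is failing. A ServiceUnavailable error from kubectl top usually means the API server cannot reach the metrics API that the manifest registers as the APIService v1beta1.metrics.k8s.io. The sketch below wraps a few read-only checks in a hypothetical helper named check_metrics_api; it assumes kubectl already points at the affected cluster and that the v0.4.1 manifest was applied as shown (pods labelled k8s-app=metrics-server in kube-system).

```shell
# Hypothetical helper: read-only checks for the metrics-server installation.
# Assumes kubectl is configured for the affected cluster.
check_metrics_api() {
  # The AVAILABLE column should read True once the API server can reach metrics-server
  kubectl get apiservice v1beta1.metrics.k8s.io
  # The metrics-server pod should be Running with READY 1/1
  kubectl -n kube-system get pods -l k8s-app=metrics-server
  # Recent log lines may show why scraping the kubelets fails (e.g. x509 errors)
  kubectl -n kube-system logs deployment/metrics-server --tail=20
}

# Usage: check_metrics_api
```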

    ansible@k8mas1:~$ kubectl top pods
    Error from server (ServiceUnavailable): the server is currently unable to handle the request (get pods.metrics.k8s.io)
    ansible@k8mas1:~$

Even with the latest metrics server installed, kubectl top either fails with the error above or reports that metrics are not available:

    ansible@k8mas1:~$ kubectl top pods
    W1203 23:45:07.204217 20547 top_pod.go:265] Metrics not available for pod default/apacheweb-5c8fcdd556-hq8mz, age: 16m9.204210978s
    error: Metrics not available for pod default/apacheweb-5c8fcdd556-hq8mz, age: 16m9.204210978s
    ansible@k8mas1:~$

To fix this, edit the metrics-server deployment in the kube-system namespace.

    $ kubectl edit deployments.apps -n kube-system metrics-server

Append two options to the container args, changing them from

    spec:
      containers:
      - args:
        - --cert-dir=/tmp
        - --secure-port=4443

to this

    spec:
      containers:
      - args:
        - --cert-dir=/tmp
        - --secure-port=4443
        - --kubelet-insecure-tls=true
        - --kubelet-preferred-address-types=InternalIP

Save the changes and exit the editor (with :wq! in vi).
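If you prefer not to edit the deployment interactively, the same two flags can be appended with kubectl patch and a JSON patch. This is a sketch, not the method used in this guide: the variable METRICS_ARGS_PATCH and the helper patch_metrics_server_args are hypothetical names, and the patch assumes metrics-server is the first container (index 0) of the pod template, as in the v0.4.1 manifest.

```shell
# Hypothetical payload: a JSON patch that appends two entries to the args list
# of container 0 in the pod template. An "add" to ".../args/-" appends; it does
# not replace an existing flag of the same name, so check the current args first
# (the manifest may already set --kubelet-preferred-address-types).
METRICS_ARGS_PATCH='[
  {"op": "add", "path": "/spec/template/spec/containers/0/args/-", "value": "--kubelet-insecure-tls=true"},
  {"op": "add", "path": "/spec/template/spec/containers/0/args/-", "value": "--kubelet-preferred-address-types=InternalIP"}
]'

# Note: --kubelet-insecure-tls skips verification of the kubelets' serving
# certificates, which upstream describes as intended for testing.
patch_metrics_server_args() {
  kubectl -n kube-system patch deployment metrics-server \
    --type=json -p "$METRICS_ARGS_PATCH"
}

# Usage: patch_metrics_server_args
```

Unlike kubectl edit, a patch can be kept in a script or an Ansible task and replayed on every new cluster.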

    ansible@k8mas1:~$ kubectl edit deployments.apps -n kube-system metrics-server
    deployment.apps/metrics-server edited
    ansible@k8mas1:~$

Now describe the deployment; it should list the appended arguments.

    ansible@k8mas1:~$ kubectl describe deployments.apps -n kube-system metrics-server
    Name:                   metrics-server
    Namespace:              kube-system
    CreationTimestamp:      Thu, 03 Dec 2020 23:26:08 +0400
    Labels:                 k8s-app=metrics-server
    Annotations:            deployment.kubernetes.io/revision: 5
    Selector:               k8s-app=metrics-server
    Replicas:               1 desired | 1 updated | 1 total | 1 available | 0 unavailable
    StrategyType:           RollingUpdate
    MinReadySeconds:        0
    RollingUpdateStrategy:  0 max unavailable, 25% max surge
    Pod Template:
      Labels:           k8s-app=metrics-server
      Service Account:  metrics-server
      Containers:
       metrics-server:
        Image:      k8s.gcr.io/metrics-server/metrics-server:v0.4.1
        Port:       4443/TCP
        Host Port:  4443/TCP
        Args:
          --cert-dir=/tmp
          --secure-port=4443
          --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
          --kubelet-insecure-tls=true
          --kubelet-use-node-status-port
        Liveness:     http-get https://:https/livez delay=0s timeout=1s period=10s #success=1 #failure=3
        Readiness:    http-get https://:https/readyz delay=0s timeout=1s period=10s #success=1 #failure=3
        Environment:  <none>
        Mounts:
          /tmp from tmp-dir (rw)
      Volumes:
       tmp-dir:
        Type:       EmptyDir (a temporary directory that shares a pod's lifetime)
        Medium:
        SizeLimit:  <unset>
      Priority Class Name:  system-cluster-critical
    Conditions:
      Type           Status  Reason
      ----           ------  ------
      Available      True    MinimumReplicasAvailable
      Progressing    True    NewReplicaSetAvailable
    OldReplicaSets:  <none>
    NewReplicaSet:   metrics-server-798bd55c4d (1/1 replicas created)
    Events:          <none>
    ansible@k8mas1:~$

Metrics for pods should now work.

    ansible@k8mas1:~$ kubectl top pods
    NAME                         CPU(cores)   MEMORY(bytes)
    apacheweb-5c8fcdd556-hq8mz   1m           6Mi
    apacheweb-5c8fcdd556-mxmc2   1m           6Mi
    apacheweb-5c8fcdd556-rlvhn   1m           6Mi
    apacheweb-5c8fcdd556-x7vr7   1m           6Mi
    apacheweb-5c8fcdd556-zsvkp   1m           6Mi
    somepod                      0m           2Mi
    ansible@k8mas1:~$

Fixing Kubernetes Metrics for Worker Nodes

When running kubectl top nodes, some nodes (here the master k8mas1 and the worker k8nod2) report <unknown> instead of metrics.

    ansible@k8mas1:~$ kubectl top nodes
    NAME     CPU(cores)   CPU%        MEMORY(bytes)   MEMORY%
    k8nod1   163m         8%          742Mi           9%
    k8mas1   <unknown>    <unknown>   <unknown>       <unknown>
    k8nod2   <unknown>    <unknown>   <unknown>       <unknown>
    ansible@k8mas1:~$

Once again, edit the metrics-server deployment in the kube-system namespace.

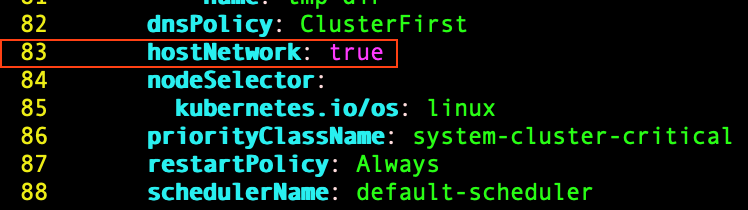

    $ kubectl edit deployments.apps -n kube-system metrics-server

Add the following line to the pod spec, either below dnsPolicy or at the end of the containers section just above restartPolicy, at the same indentation as those fields:

    hostNetwork: true
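The same change can also be made non-interactively. A minimal sketch with hypothetical names (HOSTNETWORK_PATCH, enable_metrics_server_hostnetwork): kubectl patch applies a strategic merge patch by default, so only the hostNetwork field is merged into the pod template, and the deployment then rolls out a new pod.

```shell
# Hypothetical payload: set hostNetwork on the pod template (spec.template.spec).
HOSTNETWORK_PATCH='{"spec": {"template": {"spec": {"hostNetwork": true}}}}'

enable_metrics_server_hostnetwork() {
  # Without --type, kubectl patch uses a strategic merge patch
  kubectl -n kube-system patch deployment metrics-server -p "$HOSTNETWORK_PATCH"
  # Wait for the new pod; with hostNetwork it binds --secure-port (4443 here)
  # directly on the node, so that port must be free on the node
  kubectl -n kube-system rollout status deployment/metrics-server
}

# Usage: enable_metrics_server_hostnetwork && kubectl top nodes
```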

Run the top command again; it should work now.

    ansible@k8mas1:~$ kubectl top nodes
    NAME     CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
    k8mas1   113m         5%     1270Mi          16%
    k8nod1   56m          2%     741Mi           9%
    k8nod2   55m          2%     698Mi           8%
    ansible@k8mas1:~$

That's it: we have fixed the metrics issues for both pods and nodes.
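If nodes are added later, a quick way to spot any that still report <unknown> is to filter the kubectl top nodes output. A small sketch with a hypothetical helper name (unknown_nodes); it relies only on the column layout of the kubectl top nodes output in this guide, where CPU(cores) is the second column.

```shell
# Hypothetical helper: print the names of nodes whose CPU(cores) column
# (field 2 of `kubectl top nodes` output) is <unknown>.
# The header line has CPU(cores) in field 2, so it never matches.
unknown_nodes() {
  awk '$2 == "<unknown>" { print $1 }'
}

# Usage: kubectl top nodes | unknown_nodes
```

An empty result means every node is reporting metrics.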

Conclusion

To conclude, the Kubernetes metrics issues in a newly deployed or an existing cluster can be fixed easily by editing the metrics-server deployment YAML. I will cover other fixes in future guides; subscribe to our newsletter and stay tuned for more how-to guides.

Hi, it does work fine thanks!

Could you explain why hostNetwork: true is needed and what it fixes?

Hi Kube-rage,

HostNetwork – Controls whether the pod may use the node network namespace. Doing so gives the pod access to the loopback device, services listening on localhost, and could be used to snoop on network activity of other pods on the same node.

For reference, have a look at the Pod Security Policy documentation.

Thanks & Regards

works like magic!

Hey! top works fine, but I don't see metrics on the dashboard. How do I fix that?