This code uses the Serverless Framework to deploy an AWS Lambda function that, when triggered by a file uploaded to an S3 bucket, runs the SAF CLI with the given input command (COMMAND_STRING_INPUT).

- Clone this repository:

      git clone https://github.com/mitre/saf-lambda-function.git

- Install the Serverless Framework:

      npm install -g serverless

- Install the latest dependencies:

      npm install

- Configure your AWS credentials. The recommended method is to add a profile in the ~/.aws/credentials file and then export that profile:

      export AWS_PROFILE=<your_creds_profile_name>

  To confirm that you have access to AWS, run:

      aws s3 ls

- Set the name of the S3 bucket that you would like to upload your HDF file to:

      export BUCKET=<bucket-name>

- Set the SAF CLI command environment variable. This Lambda function will run saf <command_string> -i input_file_from_bucket.json. Example:

      export COMMAND_STRING_INPUT="convert hdf2splunk -H 127.0.0.1 -u admin -p Valid_password! -I your_index_name"

- More examples can be found at SAF CLI Usage

- NOTE: Do not include the input flag in the command string; it is appended automatically from the S3 bucket trigger (for example, "-i hdf_file.json").

- NOTE: This action does not support view heimdall.

- Ensure that the environment variables are set properly:

      env

- Modify any config values you may want to change. These are found in config.json and have the following default values:

      {
        "service-name": "saf-lambda-function",
        "bucket-input-folder": "unprocessed/",
        "bucket-output-folder": "processed/",
        "output-enabled": true,
        "output-file-ext": ".json",
        "output-clarifier": "_output"
      }

  If "output-enabled" is set to true, the output file uploaded to the S3 bucket will be named <input_file_name><output-clarifier><output-file-ext>.

  EXAMPLE:

      input file: <bucket-name>/unprocessed/burpsuite_scan.xml
      output file: <bucket-name>/processed/burpsuite_scan_output.json
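The naming rule above can be sketched as a small shell function. This only illustrates the rule and is not the function's actual implementation: the name build_output_key and its positional parameters are hypothetical, and it assumes the input file's extension is dropped before the clarifier is appended, as the burpsuite_scan example suggests.

```shell
#!/bin/sh
# Hypothetical helper: derive the output object key from an input object key,
# following the <input_file_name><output-clarifier><output-file-ext> rule.
# Arguments (defaults mirror the config.json defaults shown above):
#   $1 input key, e.g. unprocessed/burpsuite_scan.xml
#   $2 output folder    (default: processed/)
#   $3 output clarifier (default: _output)
#   $4 output extension (default: .json)
build_output_key() {
  input_key=$1
  output_folder=${2:-processed/}
  clarifier=${3:-_output}
  extension=${4:-.json}

  file_name=${input_key##*/}   # strip the folder prefix
  base_name=${file_name%.*}    # strip the last extension, if any

  printf '%s%s%s%s\n' "$output_folder" "$base_name" "$clarifier" "$extension"
}
```

With the defaults, `build_output_key unprocessed/burpsuite_scan.xml` prints `processed/burpsuite_scan_output.json`, matching the example above.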

-

Test by invoking locally

-

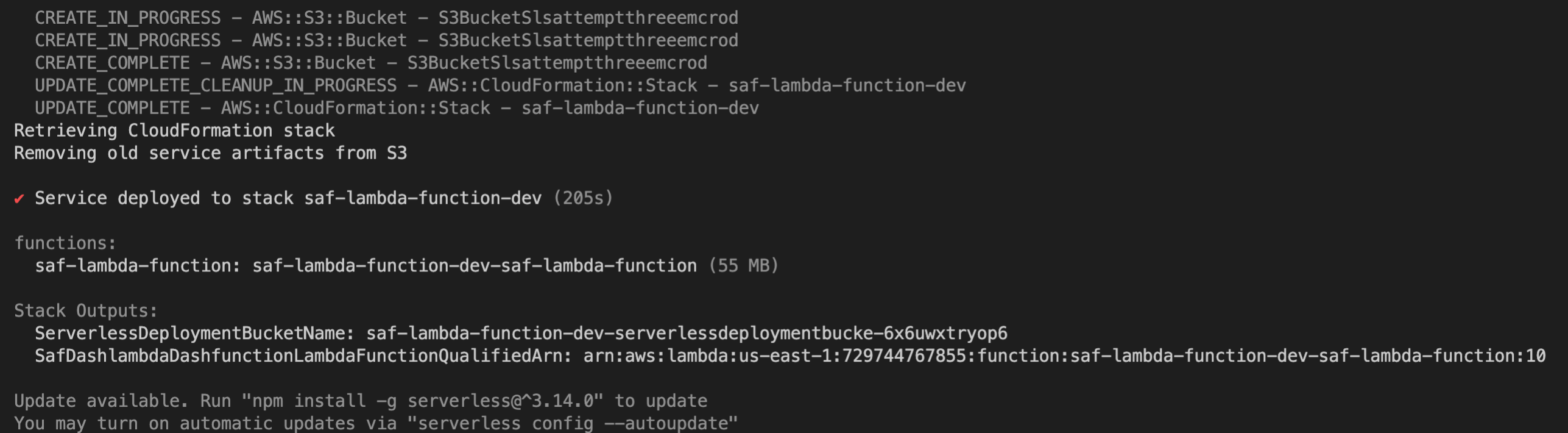

Deploy the service:

sls deploy --verbose. This may take several minutes and should look like the example below.

- (Optional) Test by invoking via AWS

- Create an S3 bucket with the bucket name that you previously specified as an environment variable.

- Load a file into the "bucket-input-folder" specified in config.json.

- If testing for the first time, run:

      npm run make-event

  This generates an S3 test event by running the command serverless generate-event -t aws:s3 > test/event.json.

- Edit the bucket name and key in test/event.json:

      "bucket": {
        "name": "your-bucket-name",
        ...
      },
      "object": {
        "key": "your-input-folder/your-file-name.json",

- Run:

      npm test

- You should see logging in the terminal and, if config.json specifies that the function should upload an output file, an uploaded output file in your S3 bucket.

Here, npm test runs the command serverless invoke local --function saf-lambda-function --path test/event.json. You can adjust the invocation further if needed; see the documentation for serverless invoke local.
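Because the local invocation relies on the environment variables exported during setup, a quick preflight check before npm test can save a failed run. The following is a minimal sketch: the function name check_required_env is hypothetical, and it only verifies that each variable is exported and non-empty, not that its value is valid.

```shell
#!/bin/sh
# Hypothetical preflight helper: report any of the named variables that are
# not exported, or are exported but empty. Returns 0 only if all are present.
check_required_env() {
  missing=0
  for name in "$@"; do
    # "env" lists exported variables only; the trailing "." requires
    # at least one character after the "=".
    if ! env | grep -q "^${name}=."; then
      echo "Missing environment variable: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

One possible use is `check_required_env AWS_PROFILE BUCKET COMMAND_STRING_INPUT && npm test`, which runs the test only when all three variables are present.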

- When the service is deployed successfully, log into the AWS console, go to the "Lambda" interface, and set the S3 bucket as the trigger if it is not already shown.

- You can test the service by uploading your input file into the bucket that you exported as BUCKET during setup.

The service will run saf <COMMAND_STRING_INPUT> -i <latest_file_from_bucket>, and the output is determined by that command. For example, for the convert hdf2splunk command, the service will convert the uploaded HDF file and send the data to your Splunk instance.
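How the final command line comes together can be illustrated with a short shell sketch. This is not the function's actual code: build_saf_command is a hypothetical name, and the check for a stray input flag is an assumption added here to reflect the earlier note that -i must not be part of COMMAND_STRING_INPUT.

```shell
#!/bin/sh
# Hypothetical illustration: compose the SAF CLI invocation from the
# command string and the file delivered by the S3 trigger.
#   $1 command string (value of COMMAND_STRING_INPUT)
#   $2 name of the input file taken from the bucket
build_saf_command() {
  command_string=$1
  input_file=$2

  # Reject command strings that already carry a lowercase "-i" flag,
  # since "-i <file>" is appended below. The match is case-sensitive,
  # so an uppercase "-I" (as in the hdf2splunk example) is allowed.
  case " $command_string " in
    *" -i "*)
      echo "COMMAND_STRING_INPUT must not contain the -i flag" >&2
      return 1
      ;;
  esac

  printf 'saf %s -i %s\n' "$command_string" "$input_file"
}
```

For instance, `build_saf_command "$COMMAND_STRING_INPUT" hdf_file.json` prints the full command line that would be run for an uploaded file named hdf_file.json.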

Please feel free to look through our issues, make a fork and submit PRs and improvements. We love hearing from our end-users and the community and will be happy to engage with you on suggestions, updates, fixes or new capabilities.

Please feel free to contact us by opening an issue on the issue board, or at saf@mitre.org, should you have any suggestions, questions, or issues.

© 2022 The MITRE Corporation.

Approved for Public Release; Distribution Unlimited. Case Number 18-3678.

MITRE hereby grants express written permission to use, reproduce, distribute, modify, and otherwise leverage this software to the extent permitted by the licensed terms provided in the LICENSE.md file included with this project.

This software was produced for the U. S. Government under Contract Number HHSM-500-2012-00008I, and is subject to Federal Acquisition Regulation Clause 52.227-14, Rights in Data-General.

No other use other than that granted to the U. S. Government, or to those acting on behalf of the U. S. Government under that Clause is authorized without the express written permission of The MITRE Corporation.

For further information, please contact The MITRE Corporation, Contracts Management Office, 7515 Colshire Drive, McLean, VA 22102-7539, (703) 983-6000.