Probability Data Distributions in Data Science

Last Updated : 24 May, 2025

Understanding how data behaves is one of the first steps in data science. Before we dive into building models or running analysis, we need to understand how the values in our dataset are spread out, and that’s where probability distributions come in.

Let us start with a simple example: if you roll a fair die, the chance of getting a 6 is 1 out of 6, or about 16.67%. This is a basic example of a probability distribution: a way to describe the likelihood of different outcomes.

When dealing with complex data like customer purchases, stock prices, or weather, probability distributions help answer:

- What is most likely to happen?

- What are rare or unusual outcomes?

- Are values close together or spread out?

This helps us make better predictions and understand uncertainty.

Why Are Probability Distributions Important?

- Explain how data behaves (clustered or spread)

- Form the basis of machine learning models

- Used in statistical tests (e.g., p-value)

- Help identify outliers and make predictions

Before this, we need to understand random variables, which assign numbers to outcomes of random events (e.g., rolling a die).

Random variables are:

- Discrete: Only specific values (e.g., number of people)

- Continuous: Any value in a range (e.g., height, temperature)

Key Components of Probability Distributions

Now that we understand random variables, let's explore how we describe their probabilities using three key concepts:

1. Probability Mass Function (PMF): Used for discrete variables (e.g., number of products bought). It gives the probability of each exact value. For example, 25% of customers buy exactly 3 products.

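2. Probability Density Function (PDF): Used for continuous variables (e.g., amount spent). It shows how probability is spread over a range of values. It does not give the chance of one exact value: a continuous variable has infinitely many possible values, so the probability of any single one is zero, and probabilities come from the area under the curve over an interval.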

3. Cumulative Distribution Function (CDF): Used for both types, it shows the probability that a value is less than or equal to a certain number. For example, CDF(3) = 0.75 means 75% buy 3 or fewer products; CDF($50) = 0.80 means 80% spend $50 or less. For a continuous variable, the CDF is the integral of the PDF up to x (for a discrete variable, it is the sum of the PMF over all values up to x):

\text{CDF: } F_X(x) = P(X \leq x) = \int_{-\infty}^x f(t) \, dt

Where F_X(x) is the CDF and f(t) is the PDF.
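For a discrete variable the same idea can be sketched with a sum instead of an integral. The snippet below is a minimal illustration for a fair six-sided die; the names `pmf` and `cdf` are chosen here for the example and are not from any library.

```python
from fractions import Fraction

# PMF of a fair six-sided die: each face has probability 1/6.
pmf = {face: Fraction(1, 6) for face in range(1, 7)}

def cdf(x):
    """P(X <= x): add up the PMF over all faces not exceeding x."""
    return sum(p for face, p in pmf.items() if face <= x)

print(pmf[6])   # probability of rolling exactly 6
print(cdf(3))   # probability of rolling 3 or less
print(cdf(6))   # the CDF reaches 1 at the largest value
```

Exact fractions are used so the probabilities add up to 1 without rounding error.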

Types of Probability Distributions

Probability distributions can be divided into two main types based on the nature of the random variables: discrete and continuous.

Discrete Data Distributions

A discrete distribution is used when the random variable can take on countable, specific values. For example, when predicting the number of products a customer buys in a single order, the possible outcomes are whole numbers like 0, 1, 2, 3, etc. You can't buy 2.5 products, so this is a discrete random variable. There are several discrete distributions; let's understand them one by one:

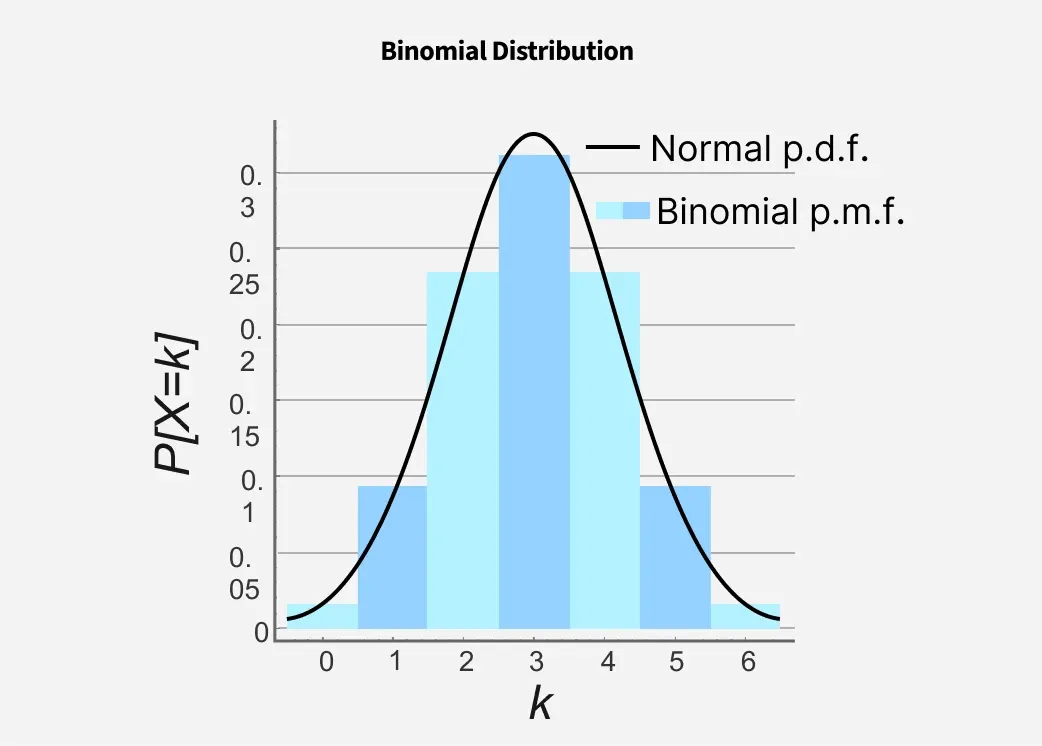

1. Binomial Distribution

The binomial distribution gives the probability of getting a certain number of successes in a fixed number of independent trials, each with the same chance of success. For example, flipping a coin 10 times and counting heads.

- Number of trials: 10

- Two outcomes per trial: heads (success) or tails (failure)

- Probability of success (heads): 0.5

- Shows likelihood of getting 0 to 10 heads
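The coin example above can be sketched with the standard binomial formula, P(X = k) = C(n, k) p^k (1 - p)^(n - k). The function name `binomial_pmf` is illustrative, not part of a library.

```python
from math import comb

def binomial_pmf(k, n, p):
    """Probability of exactly k successes in n independent trials."""
    return comb(n, k) * p**k * (1 - p)**(n - k)

n, p = 10, 0.5  # 10 coin flips, probability of heads 0.5

# Likelihood of each possible number of heads, from 0 to 10.
for k in range(n + 1):
    print(k, round(binomial_pmf(k, n, p), 4))
```

The probabilities peak at 5 heads and are symmetric around it because p = 0.5.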

2. Bernoulli Distribution

The Bernoulli distribution describes experiments with only one trial and two possible outcomes: success or failure. It’s the simplest probability distribution. For example, flipping a coin once and checking if it lands on heads.

- One trial only

- Two outcomes: heads (success) or tails (failure)

- Probability of success: 0.5

- Graph shows two bars representing success (1) and failure (0) with equal probabilities
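As a rough sketch, a single coin flip can be written as a two-value PMF and checked against a simulation. The helper `bernoulli_pmf`, the seed, and the number of simulated flips are assumptions made for this illustration.

```python
import random

def bernoulli_pmf(x, p):
    """P(X = x) for x in {0, 1}, where p is the probability of success (1)."""
    return p if x == 1 else 1 - p

p = 0.5
rng = random.Random(0)  # seeded so the run is repeatable

# Simulate many single flips: 1 = heads (success), 0 = tails (failure).
flips = [1 if rng.random() < p else 0 for _ in range(10_000)]

print(bernoulli_pmf(1, p), bernoulli_pmf(0, p))
print(sum(flips) / len(flips))  # observed share of heads, close to p
```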

3. Poisson Distribution

The Poisson distribution models the number of random events happening in a fixed time or area. For example, counting how many customers enter a coffee shop per hour. It helps predict the probability of seeing a specific number of events based on the average rate.

- Counts events in a fixed interval

- Average rate (e.g., 5 customers/hour) is known

- Calculates probability of exact counts (e.g., exactly 3 customers)

- Graph shows a curve centered around the average rate, tapering off for less likely counts
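The coffee shop example can be sketched with the standard Poisson formula, P(X = k) = λ^k e^(-λ) / k!. The function name `poisson_pmf` is illustrative.

```python
from math import exp, factorial

def poisson_pmf(k, lam):
    """Probability of exactly k events when the average rate is lam."""
    return lam**k * exp(-lam) / factorial(k)

lam = 5  # average of 5 customers per hour

print(round(poisson_pmf(3, lam), 4))  # chance of exactly 3 customers: 0.1404
print(round(poisson_pmf(5, lam), 4))  # chance of exactly 5 customers
```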

4. Geometric Distribution

The geometric distribution models the number of trials needed to get the first success in repeated independent attempts. For example, how many emails you must send before a customer makes a purchase. It helps predict the chance of success happening at each trial.

- Counts trials until first success

- Each trial is independent with fixed success probability

- Useful for questions like “How many emails until first purchase?”

- Graph shows a decreasing curve: a first success on an early trial is more likely than on a later one
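A minimal sketch of the email example, using the standard formula P(X = k) = (1 - p)^(k - 1) p for the first success on trial k. The 20% purchase probability per email is an assumed value for illustration.

```python
def geometric_pmf(k, p):
    """Probability that the first success happens on trial k (k = 1, 2, 3, ...)."""
    return (1 - p) ** (k - 1) * p

p = 0.2  # assumed chance that any single email leads to a purchase

# Probability that the first purchase follows email 1, 2, ..., 5.
for k in range(1, 6):
    print(k, round(geometric_pmf(k, p), 4))
```

Each value is (1 - p) times the previous one, which is why the plot decreases steadily.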

Continuous Data Distributions

A continuous distribution is used when the random variable can take any value within a specified range. For example, when we analyze how much money a customer spends in a store, the amount can be any real number, including decimals like $25.75 or $50.23.

In continuous distributions the Probability Density Function (PDF) shows how the probabilities are spread across the possible values. The area under the curve of this PDF represents the probability of the random variable falling within a certain range. Now let's look at some types of continuous probability distributions that are commonly used in data science:

1. Normal Distribution

The normal distribution, or bell curve, is one of the most common data distributions. Most values cluster around the mean, with fewer values farther away, forming a symmetrical shape. It is often a good model for measurements like people’s heights.

- Mean is the center of the curve

- Symmetrical distribution (left and right sides mirror each other)

- Standard deviation shows how spread out the data is

- Smaller standard deviation means data is closer to the mean
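The role of the mean and standard deviation can be sketched with Python's built-in `statistics.NormalDist`. The height parameters (mean 170 cm, standard deviation 10 cm) are assumed values for illustration, not measurements.

```python
from statistics import NormalDist

# Assumed height distribution: mean 170 cm, standard deviation 10 cm.
heights = NormalDist(mu=170, sigma=10)

# Probability of falling within 1 and 2 standard deviations of the mean.
within_1sd = heights.cdf(180) - heights.cdf(160)
within_2sd = heights.cdf(190) - heights.cdf(150)

print(round(within_1sd, 4))  # about 0.68
print(round(within_2sd, 4))  # about 0.95
```

With a smaller `sigma`, the same probabilities would be reached over a narrower range of heights.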

2. Exponential Distribution

The exponential distribution models the time between events happening independently and continuously. For example, the time between customer arrivals at a store. It helps predict how long you might wait for the next event.

- Models waiting time between events

- Average time between events (e.g., 10 minutes between customers) defines the rate: λ = 1 / average time (here, 0.1 customers per minute)

- Events occur independently and continuously

- Useful for predicting time until next event
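Waiting-time questions can be sketched with the exponential CDF, P(T ≤ t) = 1 - e^(-λt). The function name `exponential_cdf` is illustrative, and the 10-minute average comes from the example above.

```python
from math import exp

mean_wait = 10.0     # average minutes between customers
lam = 1 / mean_wait  # rate: 0.1 customers per minute

def exponential_cdf(t, lam):
    """P(wait <= t) for a waiting time t in minutes."""
    return 1 - exp(-lam * t) if t >= 0 else 0.0

print(round(exponential_cdf(5, lam), 4))       # next customer within 5 minutes: 0.3935
print(round(1 - exponential_cdf(20, lam), 4))  # waiting more than 20 minutes: 0.1353
```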

3. Uniform Distribution

While the exponential distribution focuses on waiting times, sometimes we just need to model situations where every outcome is equally likely. In that case we use the uniform distribution.

The uniform distribution means every outcome in a range is equally likely. For example, rolling a fair six-sided die or picking a random number between 0 and 1. It applies to both discrete and continuous cases.

- All outcomes have equal probability

- Discrete example: rolling a die (1 to 6)

- Continuous example: random number between 0 and 1
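Both cases can be sketched in a few lines. The helper names below are hypothetical; the discrete case divides probability equally among the outcomes, and the continuous case measures the length of an interval relative to the whole range.

```python
from fractions import Fraction

def discrete_uniform_pmf(low, high):
    """Probability of each integer outcome between low and high, inclusive."""
    return Fraction(1, high - low + 1)

def continuous_uniform_prob(a, b, low=0.0, high=1.0):
    """P(a <= X <= b) for X uniform on [low, high]."""
    a, b = max(a, low), min(b, high)  # clip the interval to the valid range
    return max(b - a, 0.0) / (high - low)

print(discrete_uniform_pmf(1, 6))         # each die face: 1/6
print(continuous_uniform_prob(0.2, 0.5))  # share of [0, 1] covered by [0.2, 0.5]
```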

Uniform Distribution

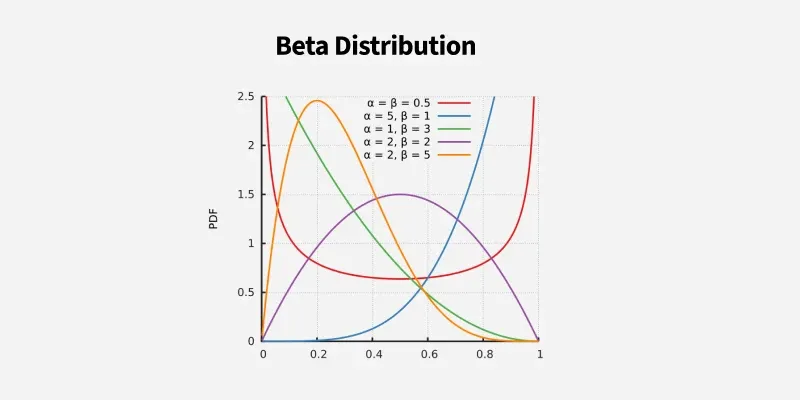

4. Beta Distribution

In real-world problems, our estimate of a probability often changes as we learn more. The Beta distribution models this uncertainty and lets us update beliefs with new data. For example, it can estimate the chance a customer clicks an ad.

- Models uncertainty about a probability, so its values lie between 0 and 1

- Parameters (α and β) control confidence and shape

- Commonly used in Bayesian stats and A/B testing
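One common way to do this update, sketched here under assumptions, is to start from a Beta(α, β) prior and add observed successes to α and failures to β. The flat Beta(1, 1) prior and the click counts below are made up for illustration.

```python
def update_beta(alpha, beta, successes, failures):
    """Return the Beta parameters after observing new successes and failures."""
    return alpha + successes, beta + failures

def beta_mean(alpha, beta):
    """Mean of a Beta(alpha, beta) distribution: the expected probability."""
    return alpha / (alpha + beta)

alpha, beta = 1, 1  # assumed flat prior: no opinion about the click rate yet
print(beta_mean(alpha, beta))

# Hypothetical data: the ad was shown 100 times and clicked 12 times.
alpha, beta = update_beta(alpha, beta, successes=12, failures=88)
print(alpha, beta, round(beta_mean(alpha, beta), 4))
```

Larger values of α + β correspond to more accumulated evidence and therefore a narrower, more confident distribution.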

5. Gamma Distribution

The Gamma distribution models the total time needed for multiple independent events to happen. It extends the exponential distribution to cover several tasks or events. For example, estimating the total time to finish three project tasks with varying durations.

- Models total time for multiple events

- Shape parameter (κ) controls event count

- Scale parameter (θ) controls event duration
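The link to the exponential distribution can be checked with a small simulation. This sketch assumes each of the three tasks has an exponentially distributed duration with the same average of 2 hours; the seed and sample size are arbitrary choices.

```python
import random
from statistics import mean

rng = random.Random(42)  # seeded so the run is repeatable

k = 3        # shape: number of events (three tasks)
theta = 2.0  # scale: assumed average duration of one task, in hours
n = 20_000   # number of simulated projects

# Total time as a sum of k independent exponential durations.
summed = [sum(rng.expovariate(1 / theta) for _ in range(k)) for _ in range(n)]

# The same quantity drawn directly from a Gamma with shape k and scale theta.
direct = [rng.gammavariate(k, theta) for _ in range(n)]

# Both averages should be close to k * theta = 6 hours.
print(round(mean(summed), 2), round(mean(direct), 2))
```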

6. Chi-Square Distribution

The Chi-Square distribution is used in hypothesis testing to check relationships between categorical variables. For example, testing if gender affects preference for coffee or tea. It helps determine if observed differences are due to chance.

- Used for testing independence between categories

- Works with contingency tables

- Degrees of freedom depend on number of categories
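A test of independence can be sketched by hand for a small contingency table. The survey counts below are hypothetical, no continuity correction is applied, and the closed-form p-value used here is valid only for 1 degree of freedom (a 2×2 table).

```python
from math import erfc, sqrt

# Hypothetical survey counts: rows are two groups, columns are coffee / tea.
observed = [[30, 20],
            [20, 30]]

row_totals = [sum(row) for row in observed]
col_totals = [sum(col) for col in zip(*observed)]
total = sum(row_totals)

# Chi-square statistic: sum of (observed - expected)^2 / expected over all cells.
chi2 = 0.0
for i, row in enumerate(observed):
    for j, obs in enumerate(row):
        expected = row_totals[i] * col_totals[j] / total
        chi2 += (obs - expected) ** 2 / expected

# Degrees of freedom: (rows - 1) * (columns - 1).
dof = (len(observed) - 1) * (len(observed[0]) - 1)

# Upper-tail probability of a chi-square variable with 1 degree of freedom.
p_value = erfc(sqrt(chi2 / 2))

print(chi2, dof, round(p_value, 4))
```

A small p-value suggests the observed difference between groups is unlikely to be due to chance alone.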

7. Log-Normal Distribution

The Log-Normal distribution models data that grows multiplicatively over time, like stock prices or income. If the logarithm of the data is normally distributed, the original data follows a log-normal distribution. It only models positive values.

- Models multiplicative growth processes

- Data can’t be negative

- Commonly used for stock prices and incomes
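The defining property can be checked with a quick simulation: draw log-normal samples and confirm that their logarithms behave like a normal distribution. The parameters `mu` and `sigma`, the seed, and the sample size are assumed values for illustration.

```python
import math
import random
from statistics import mean, stdev

rng = random.Random(7)  # seeded so the run is repeatable

mu, sigma = 0.0, 0.5  # assumed mean and standard deviation of the log values
samples = [rng.lognormvariate(mu, sigma) for _ in range(20_000)]

# Taking logs should recover a normal distribution with the same mu and sigma.
logs = [math.log(s) for s in samples]

print(min(samples) > 0)                             # every sample is positive
print(round(mean(logs), 2), round(stdev(logs), 2))  # close to mu and sigma
```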

Now it is time to summarize all the distributions that we have studied:

| Distributions | Key Features | Usage |
|---|---|---|
| Normal Distribution | It looks like a bell: most data is around the middle and few values are at the ends. | Used for feature scaling, model assumptions and anomaly detection, for example adjusting data to make it easier to analyze and finding unusual values like errors or outliers. |
| Exponential Distribution | It measures how long it takes for something to happen, like waiting for an event. | Helps to predict when a server might crash or how long it will take for customers to arrive at a store. |
| Uniform Distribution | Every possible outcome is equally likely; no outcome is more likely than another. | Used for picking random samples from a group. |
| Beta Distribution | Helps us update our guesses about a probability based on new information. | Useful for A/B testing (comparing two options) and estimating how often people click on links. |
| Gamma Distribution | It measures the total time it takes for several events to happen one after another. | Helps to predict when systems might fail and assess risks in various situations. |
| Chi-Square Distribution | It checks if there is a relationship between different categories of data. | Helps in analyzing customer survey results to see if different groups have different opinions or behaviors. |
| Log-Normal Distribution | It models positive values that grow multiplicatively over time, in steps rather than all at once. | Used for modeling stock prices and understanding how income levels are distributed among people. |
| Binomial Distribution | It models the number of successes in a fixed number of trials. | Useful for determining the probability of a certain number of successes in a fixed number of trials. |
| Bernoulli Distribution | It models a single trial with two outcomes (success/failure). | Mostly used in quality control to assess pass/fail situations. |
| Poisson Distribution | It models the number of events occurring in a fixed interval of time or space. | Helps to predict the number of customer arrivals at a store during an hour. |
| Geometric Distribution | It models the number of trials until the first success occurs. | Useful for understanding how many attempts it takes before achieving the first success, e.g., how many times you need to flip a coin before getting heads. |

In this article, we learned about important probability distributions used for making predictions and understanding data. Next we’ll look at Inferential Statistics, where we’ll learn how to draw conclusions from data.
