Where AI Developers Build

Achieve consistency and predictability in your AI applications and agentic systems at scale with Comet’s end-to-end model evaluation platform.

Trusted by the most innovative ML teams

Easy Integration

Add just a few lines of code to your notebook or script and automatically start tracking LLM traces, code, hyperparameters, metrics, model predictions, and more.

```python
from opik import track


@track
def llm_chain(user_question):
    context = get_context(user_question)
    response = call_llm(user_question, context)
    return response


@track
def get_context(user_question):
    # Logic that fetches the context, hard coded here
    return ["The dog chased the cat.", "The cat was called Luky."]


@track
def call_llm(user_question, context):
    # LLM call, can be combined with any Opik integration
    return "The dog chased the cat Luky."


response = llm_chain("What did the dog do?")
print(response)
```

```python
from llama_index.core import VectorStoreIndex, global_handler, set_global_handler
from llama_index.core.schema import TextNode

# Configure the Opik integration
set_global_handler("opik")
opik_callback_handler = global_handler

node1 = TextNode(text="The cat sat on the mat.", id_="1")
node2 = TextNode(text="The dog chased the cat.", id_="2")

index = VectorStoreIndex([node1, node2])

# Create a LlamaIndex query engine
query_engine = index.as_query_engine()

# Query the documents
response = query_engine.query("What did the dog do?")
print(response)
```

```python
from langchain_openai import ChatOpenAI
from opik.integrations.langchain import OpikTracer

# Initialize the tracer
opik_tracer = OpikTracer()

# Create the LLM Chain using LangChain
llm = ChatOpenAI(temperature=0)

# Configure the Opik integration
llm = llm.with_config({"callbacks": [opik_tracer]})

llm.invoke("Hello, how are you?")
```

```python
from openai import OpenAI
from opik.integrations.openai import track_openai

openai_client = OpenAI()
openai_client = track_openai(openai_client)

response = openai_client.chat.completions.create(
    model="gpt-3.5-turbo",
    messages=[
        {"role": "user", "content": "Hello, world!"}
    ]
)
```

```python
from comet_ml import Experiment
import torch.nn as nn

# 1. Define a new experiment
experiment = Experiment(project_name="YOUR PROJECT")

# 2. Create your model class
class RNN(nn.Module):
    # ...Define your class
    ...

# 3. Train and test your model while logging everything to Comet
with experiment.train():
    # ...Train your model and log metrics
    experiment.log_metric("accuracy", correct / total, step=step)

# 4. View real-time metrics in Comet
```

```python
import pytorch_lightning as pl
from pytorch_lightning.loggers import CometLogger

# 1. Create your model

# 2. Initialize CometLogger
comet_logger = CometLogger()

# 3. Train your model
trainer = pl.Trainer(
    logger=[comet_logger],
    # ...configs
)
trainer.fit(model)

# 4. View real-time metrics in Comet
```

```python
from comet_ml import Experiment
from transformers import Trainer

# 1. Define a new experiment
experiment = Experiment(project_name="YOUR PROJECT")

# 2. Train your model
trainer = Trainer(
    model=model,
    # ...configs
)
trainer.train()

# 3. View real-time metrics in Comet
```

```python
from comet_ml import Experiment
from tensorflow import keras

# 1. Define a new experiment
experiment = Experiment(project_name="YOUR PROJECT")

# 2. Define your model
model = keras.Model(
    # ...configs
)

# 3. Train your model
model.fit(
    x_train,
    y_train,
    validation_data=(x_test, y_test),
)

# 4. Track real-time metrics in Comet
```

```python
from comet_ml import Experiment
import tensorflow as tf

# 1. Define a new experiment
experiment = Experiment(project_name="YOUR PROJECT")

# 2. Define and train your model
model.fit(...)

# 3. Log additional model metrics and params
experiment.log_parameters({'custom_params': True})
experiment.log_metric('custom_metric', 0.95)

# 4. Track real-time metrics in Comet
```

```python
from comet_ml import Experiment
from sklearn import tree

# 1. Define a new experiment
experiment = Experiment(project_name="YOUR PROJECT")

# 2. Build your model and fit
clf = tree.DecisionTreeClassifier(
    # ...configs
)
clf.fit(X_train_scaled, y_train)

params = {...}
metrics = {...}

# 3. Log additional metrics and params
experiment.log_parameters(params)
experiment.log_metrics(metrics)

# 4. Track model performance in Comet
```

```python
from comet_ml import Experiment
import xgboost as xgb

# 1. Define a new experiment
experiment = Experiment(project_name="YOUR PROJECT")

# 2. Define your model and fit
xg_reg = xgb.XGBRegressor(
    eval_metric="rmse",  # set on the estimator; XGBoost 2.0+ no longer accepts it in fit()
    # ...configs
)
xg_reg.fit(
    X_train,
    y_train,
    eval_set=[(X_train, y_train), (X_test, y_test)],
)

# 3. Track model performance in Comet
```

```python
# Utilize Comet in any environment
from comet_ml import Experiment

# 1. Define a new experiment
experiment = Experiment(project_name="YOUR PROJECT")

# 2. Model training here

# 3. Log metrics or params over time
experiment.log_metrics(metrics)

# 4. Track real-time metrics in Comet
```

```python
# Utilize Comet in any environment
from comet_mpm import CometMPM

# 1. Create the MPM logger
MPM = CometMPM()

# 2. Add your inference logic here

# 3. Log metrics or params over time
MPM.log_event(
    prediction_id="...",
    input_features=input_features,
    output_value=prediction,
    output_probability=probability,
)
```

An End-to-End Model Evaluation Platform

Comet’s end-to-end model evaluation platform is built for developers focused on shipping AI features. It includes open source LLM tracing, ML unit testing, evaluations, experiment tracking, and production monitoring.

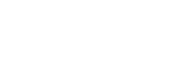

Opik: Log & Evaluate Your Application’s LLM Calls

Opik provides comprehensive LLM observability so you can confidently test, debug, and monitor your GenAI apps and agents, from application-level unit testing down to individual system prompts and user inputs.

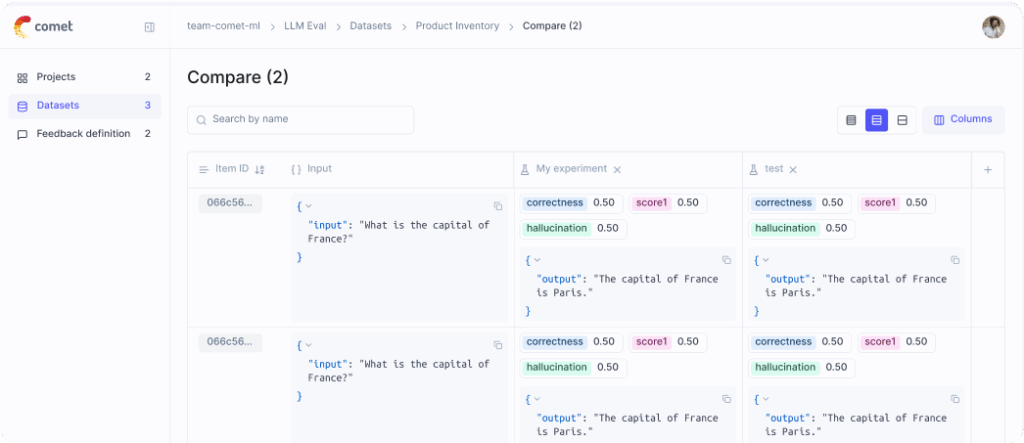

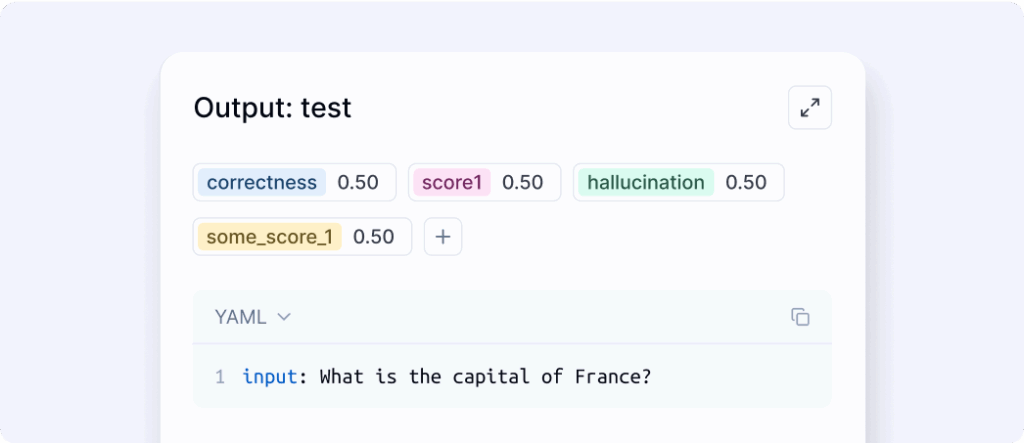

Opik: Optimize Prompts & Agentic Systems

With your application’s LLM calls and responses logged, you can bring in expert reviewers for annotation, score using built-in eval metrics, and even automate prompt engineering for complex multi-step agents.
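To make the idea of scoring logged calls concrete, here is a minimal, library-free sketch. It is not Opik's API: `LoggedCall`, `exact_match`, `contains_reference`, and `evaluate` are hypothetical names chosen for illustration, and the two heuristics stand in for whatever built-in metrics you would actually use.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class LoggedCall:
    """One logged LLM call (hypothetical record, not an Opik type)."""
    prompt: str
    output: str
    reference: str  # expected answer supplied by a reviewer or a dataset


def exact_match(call: LoggedCall) -> float:
    """1.0 if the output equals the reference, ignoring case and outer whitespace."""
    return 1.0 if call.output.strip().lower() == call.reference.strip().lower() else 0.0


def contains_reference(call: LoggedCall) -> float:
    """1.0 if the reference appears anywhere in the output, ignoring case."""
    return 1.0 if call.reference.strip().lower() in call.output.lower() else 0.0


def evaluate(
    calls: List[LoggedCall],
    metrics: Dict[str, Callable[[LoggedCall], float]],
) -> Dict[str, float]:
    """Average each metric over all logged calls (assumes a non-empty list)."""
    return {name: sum(metric(c) for c in calls) / len(calls) for name, metric in metrics.items()}


calls = [
    LoggedCall("What did the dog do?", "The dog chased the cat Luky.", "chased the cat"),
    LoggedCall("What was the cat called?", "Luky", "Luky"),
]
scores = evaluate(calls, {"exact_match": exact_match, "contains": contains_reference})
print(scores)
```

Keeping each metric a plain function from a logged call to a number makes it easy to mix cheap heuristics like these with slower LLM-judged or human-annotated scores in the same report.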

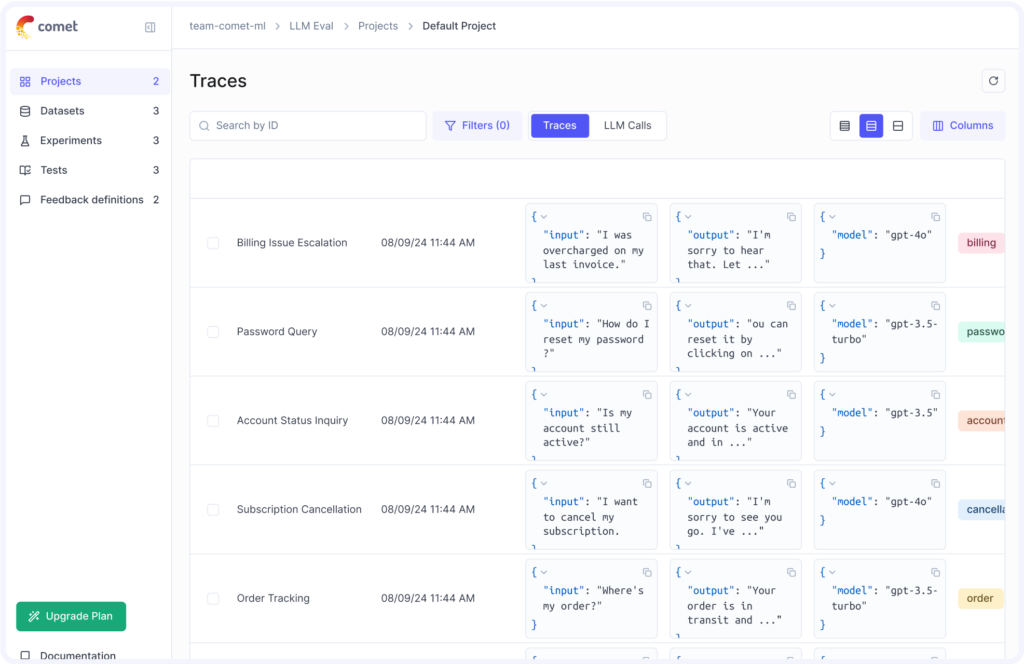

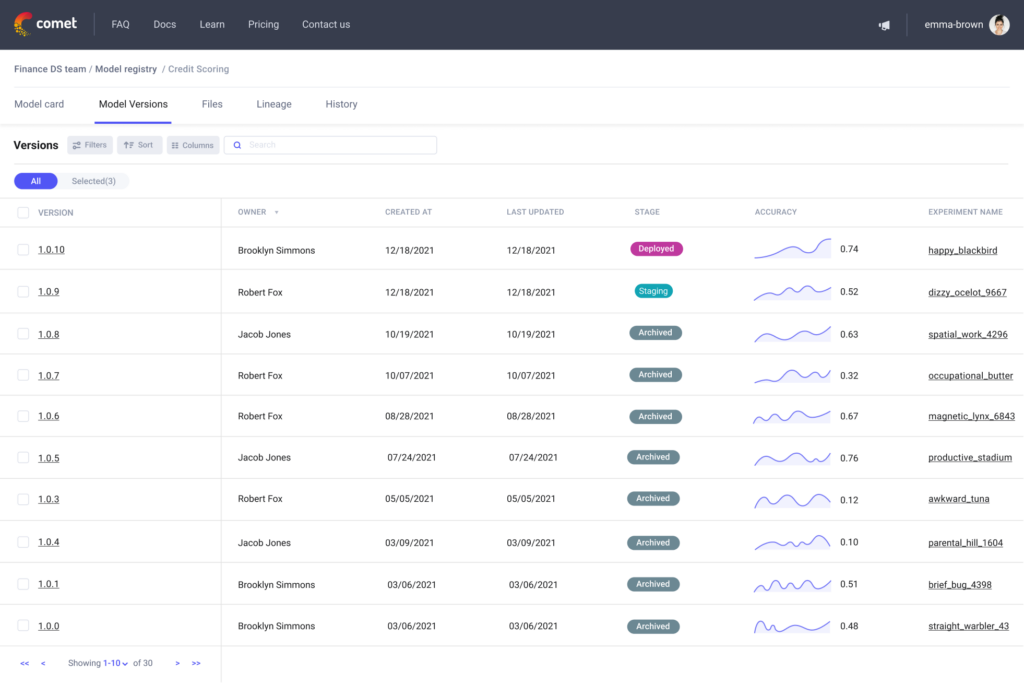

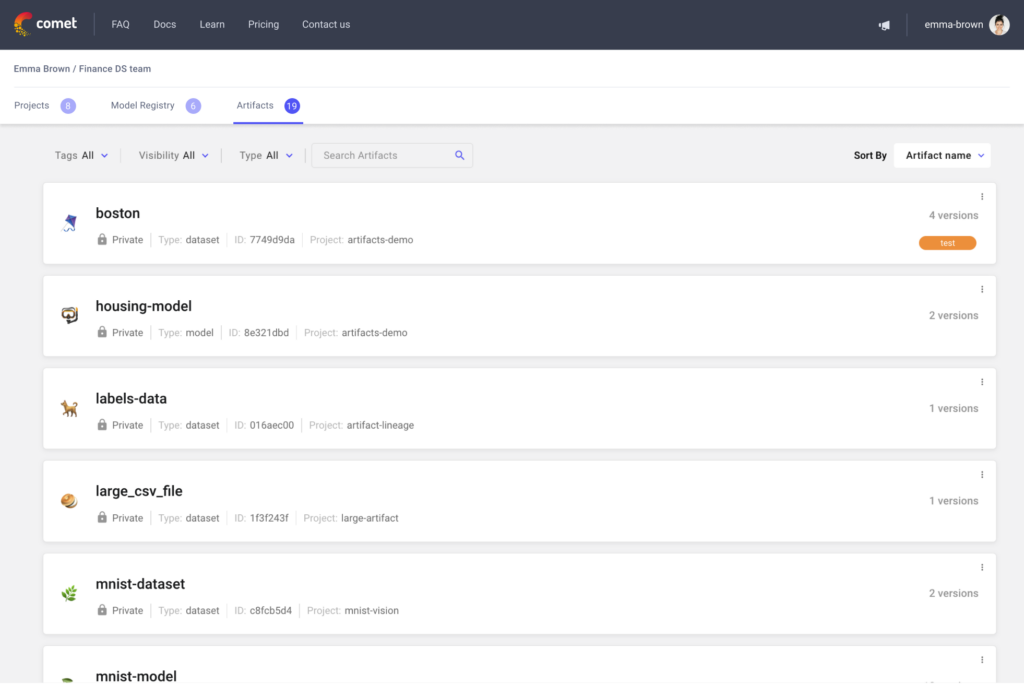

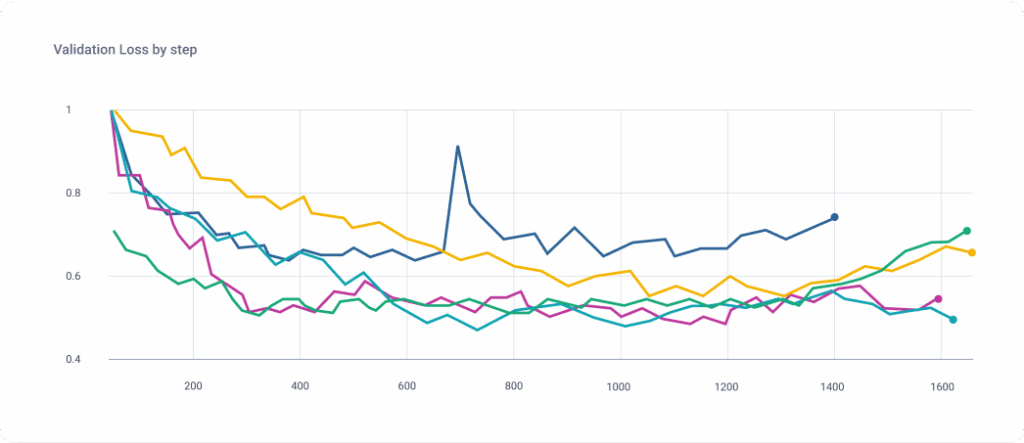

MLOps: Track & Compare Model Training Runs

Comet Experiment Management gives you the tools to ensure your models are explainable and reproducible, with custom visualizations, model versioning, dataset management, production monitoring, and more.

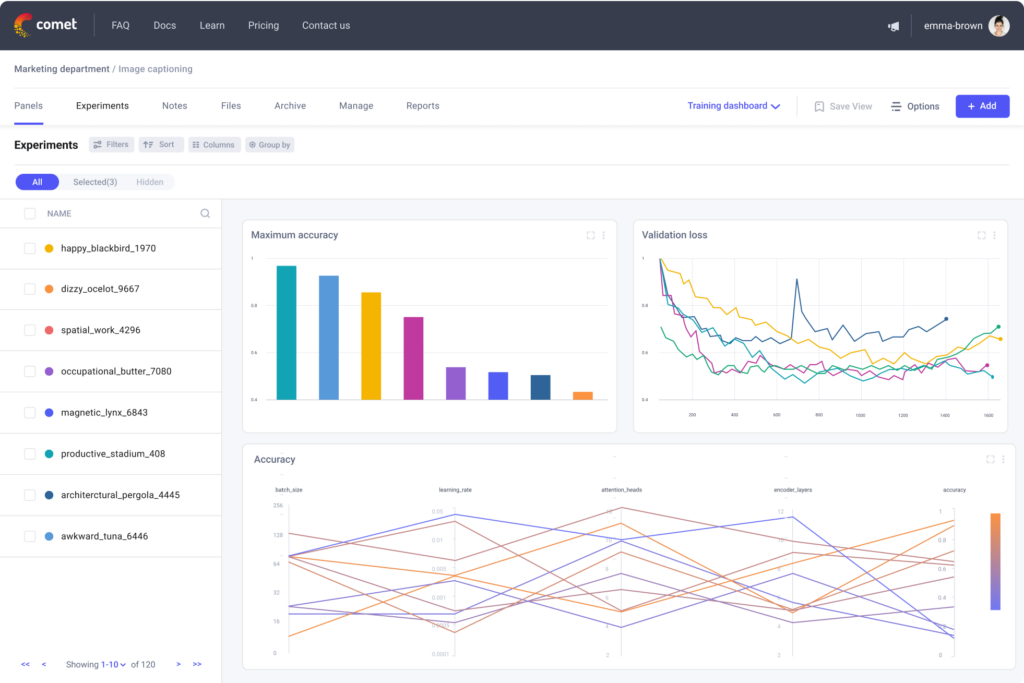

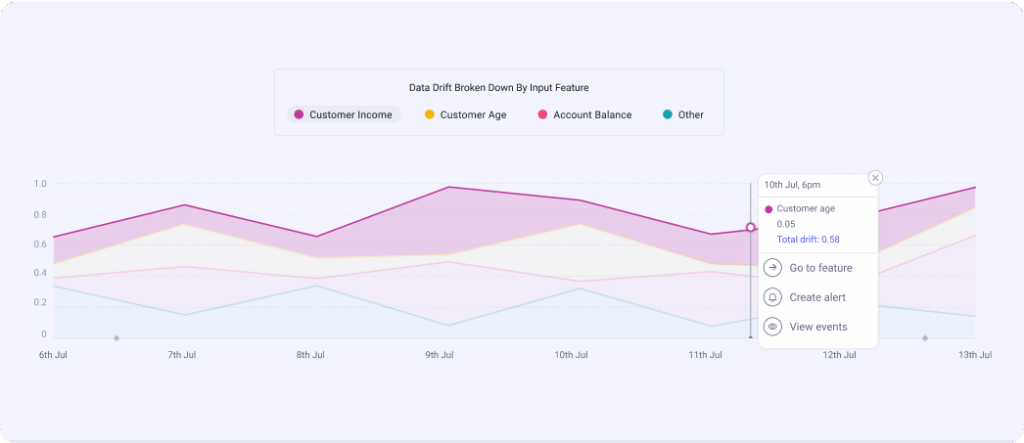

ML Model Production Monitoring

Deploy your optimized models with confidence, ensure regulatory compliance, and catch and fix issues like data drift before they start to affect your end-user experience.
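As one illustration of what catching data drift can mean in practice, the sketch below computes a Population Stability Index (PSI) for a single numeric feature using only the standard library. This is a generic technique, not a description of how Comet's monitoring computes drift; the function name, the bin count, and the 0.2 alert threshold (a commonly quoted rule of thumb) are assumptions.

```python
import math
from typing import List, Sequence


def population_stability_index(
    baseline: Sequence[float],
    current: Sequence[float],
    bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """PSI between a baseline sample and a current sample (both assumed non-empty)."""
    lo, hi = min(baseline), max(baseline)
    # Equal-width bins over the baseline range; fall back to 1.0 if the baseline is constant.
    width = (hi - lo) / bins or 1.0

    def bin_fractions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Clamp out-of-range values into the first or last bin.
            counts[min(max(idx, 0), bins - 1)] += 1
        # Floor each fraction at eps so the logarithm below is always defined.
        return [max(c / len(values), eps) for c in counts]

    expected = bin_fractions(baseline)
    actual = bin_fractions(current)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


# Training-time feature values versus the same feature shifted in production.
baseline = [i / 100 for i in range(1000)]
shifted = [x + 3 for x in baseline]

psi_same = population_stability_index(baseline, baseline)
psi_shifted = population_stability_index(baseline, shifted)
print(psi_same, psi_shifted)

DRIFT_THRESHOLD = 0.2  # assumed alert level, tune per feature
print("drift detected" if psi_shifted > DRIFT_THRESHOLD else "stable")
```

A check like this would typically run on a schedule against recent production inputs, so a shift is flagged before it shows up in user-facing metrics.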

Where AI Developers Build

Run Comet’s end-to-end evaluation platform on any infrastructure to see firsthand how Comet reshapes your workflow. Bring your existing software and data stack. Use code panels to create visualizations in your preferred user interfaces.

An AI Platform Built for Enterprise, Driven by Community

Comet’s end-to-end evaluation platform is trusted by innovative data scientists, ML practitioners, and engineers in the most demanding enterprise environments.

Get started today, free.

You don’t need a credit card to sign up, and your Comet account comes with a generous free tier you can actually use—for as long as you like.