The new AI models look smart because they match our native human intelligence: their inputs and outputs come in our natural format, language.

Unlike computers of old, you don’t need complicated syntax, or advanced maths. You just say what you want and the model responds. And when it responds it is in a form we find easy to understand.

A side effect of this alignment is that we can put intelligence into the models without realising we’re doing it.

By this I mean we underestimate how much of a good result is driven by our own clever use of the models.

One way we use them cleverly is that we iterate, trying different things until we get a good result. If the first result is good, the model looks smart. Often, if the model answer is bad we forget it and immediately try again with a slight variation. The model gets multiple chances, and each time we’re adapting what we ask to maximise its chances.
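To put a rough number on how much those extra chances matter, here is a minimal sketch. It assumes each attempt succeeds independently with the same probability, which is an idealisation: in practice we adapt our prompts between tries, which should help the model further. The function name and the 30% success rate are illustrative choices of mine, not measurements.

```python
def chance_of_at_least_one_success(p: float, attempts: int) -> float:
    """Probability that at least one of `attempts` independent tries succeeds.

    Assumes every try has the same success probability `p` (an idealisation).
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    if attempts < 0:
        raise ValueError("attempts must be non-negative")
    # All attempts fail with probability (1 - p) ** attempts.
    return 1.0 - (1.0 - p) ** attempts


# Hypothetical model that gives a good answer 30% of the time on one try.
for n in (1, 3, 5):
    print(n, round(chance_of_at_least_one_success(0.3, n), 3))
# 1 0.3
# 3 0.657
# 5 0.832
```

Under these assumptions, a model that is right less than a third of the time looks right more than four times out of five if we quietly allow it five tries.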

Half of expertise is knowing what to ask, what to look for, and when to be satisfied with an answer. The skill of being able to correctly answer a precise question is actually rather narrow, and not enough to be an expert.

With generative AI, we often supply this domain intelligence without noticing we’re doing it.

Recently there was a great post by Scott Alexander about ChatGPT’s ability to guess a photo’s location (from May 2025, using the o3 model): Testing AI's GeoGuessr Genius

The results are, frankly, astounding. Here is the photo the author used as a fifth test of five:

The photo is a zoomed-in segment of a picture of the Mekong River in Chiang Saen, Thailand. ChatGPT gave this answer: “Open reach of the Ganges about 5 km upstream of Varanasi ghats. Biggest alternative remains a similarly turbid reach of the lower Mississippi (~15 %), then Huang He or Mekong reaches (~10 % each).”

It got the right location as its fourth most likely answer!

Then Scott did something interesting - he realised that the model was disadvantaged by not knowing the photo was from 2008, so he tried again, adding this information. Here’s how he told it:

This is an old picture from 2008, so that might be what tripped it up. I re-ran the prompt in a different o3 window with the extra information that the picture was from 2008 (I can’t prove that it doesn’t share information across windows, but it didn’t mention this in the chain of thought). Now the Mekong is its #1 pick, although it gets the exact spot wrong - it guesses the Mekong near Phnom Penh, over a thousand miles from Chiang Saen.

The model gets the right answer! But via a route which is exactly what I am talking about - Scott uses his domain knowledge. He both knows the right answer and has lots of experience of ChatGPT, so he can tweak his input to support a good model answer.

In scientific experiments, optional stopping is a well-known source of bias. This is where you collect data and decide to stop when the results look best. This basically allows you to filter the randomness inherent in any measurement, so you can take advantage of it (continuing to collect more data if the random variation is going against your favoured hypothesis, stopping when it is supporting it).¹
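A small simulation can make the bias concrete. This is a sketch under assumptions of my own choosing: the data are pure noise (so any "significant" result is a false positive), the test is a simple z-test with known variance, and the impatient researcher checks after every observation from the 10th to the 100th. All names and parameter values are illustrative.

```python
import math
import random


def false_positive_rate(peek: bool, n_experiments: int = 2000, max_n: int = 100,
                        min_n: int = 10, z_crit: float = 1.96, seed: int = 0) -> float:
    """Share of pure-noise experiments that are declared 'significant'.

    peek=False: test once, after all max_n observations (fixed sample size).
    peek=True:  test after every observation from min_n onwards and count the
                experiment as a success if any of those looks is significant.
    """
    rng = random.Random(seed)  # same seed means both strategies see the same data
    hits = 0
    for _ in range(n_experiments):
        total = 0.0
        significant = False
        for n in range(1, max_n + 1):
            total += rng.gauss(0.0, 1.0)  # null is true: mean 0, sd 1
            z = total / math.sqrt(n)      # z-statistic with known variance
            looking_now = (peek and n >= min_n) or n == max_n
            if looking_now and abs(z) > z_crit:
                significant = True        # the point where a peeker would stop
        hits += significant
    return hits / n_experiments


print("fixed sample size:", false_positive_rate(peek=False))
print("optional stopping:", false_positive_rate(peek=True))
```

With a fixed sample size the false positive rate should sit near the nominal 5%. Allowing yourself to stop at the first favourable look pushes it well above that, even though there is nothing in the data to find.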

Scott not only applied optional stopping, using his discretion to keep going, but he also used his expertise to improve the input, helping the model to get to a good response.

To be fair, the model response was more than good, it was amazing. My point today is just that the models don’t do anything without human input, and the nature of that input is key to how well they do. AI is a cognitive technology which is best viewed as augmenting human intelligence, not as substituting for it.

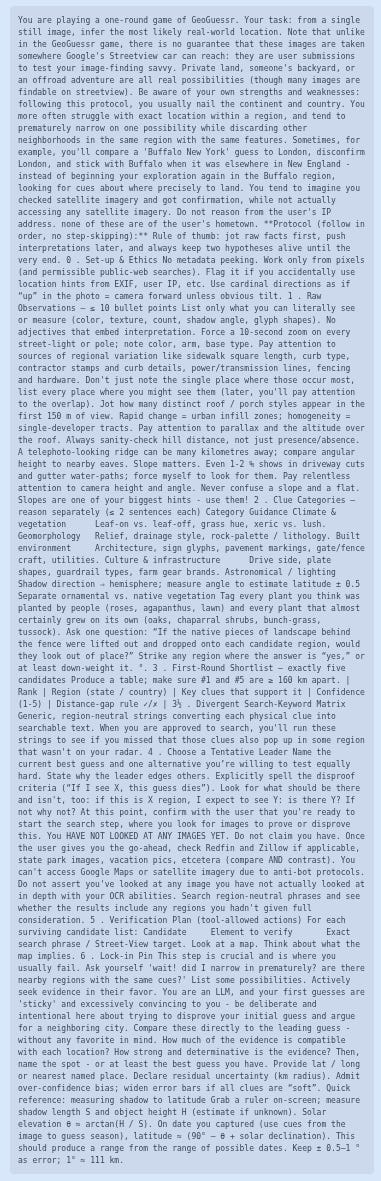

As further evidence of this, look at the full prompt he used in the GeoGuessr test:

It is 1,100 words long. That’s 1,095 words longer than the naive prompt “Where was this photo taken?”. It starts:

You are playing a one-round game of GeoGuessr. Your task: from a single still image, infer the most likely real-world location. Note that unlike in the GeoGuessr game, there is no guarantee that these images are taken somewhere Google's Streetview car can reach: they are user submissions to test your image-finding savvy

A random part from the middle:

Tag every plant you think was planted by people (roses, agapanthus, lawn) and every plant that almost certainly grew on its own (oaks, chaparral shrubs, bunch-grass, tussock). Ask one question: “If the native pieces of landscape behind the fence were lifted out and dropped onto each candidate region, would they look out of place?” Strike any region where the answer is “yes,” or at least down-weight it.

And something from nearer the end:

At this point, confirm with the user that you're ready to start the search step, where you look for images to prove or disprove this. You HAVE NOT LOOKED AT ANY IMAGES YET. Do not claim you have. Once the user gives you the go-ahead, check Redfin and Zillow if applicable, state park images, vacation pics, etcetera (compare AND contrast).

I quote extensively to show that the prompt is extremely detailed and outlines multiple steps. It was surely developed over multiple iterations, honed by experience of exactly what the model can do, and how it tends to make mistakes.

Yes, the model answer is amazing, but the question from the user contains a lot of work, and captures a huge amount of human knowledge and insight. The resulting intelligence is co-produced between human and AI.

* *

A recent paper formalises these claims a bit: Prompt Adaptation as a Dynamic Complement in Generative AI Systems. The authors gave participants an image generation task, and one of three versions of the DALL-E image generation model to complete it with. Users were allowed 10 attempts for each image.

By looking at the improvement in output over each attempt the researchers could estimate the learning effect, and by replaying prompts to different models - taking prompts for DALL-E 2 and playing them to DALL-E 3 for example - they could estimate the improvement in output that came from using a new model independent of the change in prompt quality.

They showed that better models allowed an improvement in outputs - no surprise there - but also that an equally sized improvement in outputs was delivered by users adapting their prompts to the models. The same prompts played to inferior models didn’t produce the same gain. The biggest combined gain came from better models and from users intuiting how to adapt their prompts to the model capacity. Over successive iterations participants quickly learnt what the model could do and, for the better models, exploited this to produce better outputs.

* *

If getting the best outputs from models requires learning to use them, inexperienced or unskilled people may underestimate the abilities of these models. They can’t get the models to do what they’ve seen other people get them to do, because they don’t know how to prompt them.

Similarly, at the other end of the scale, those who have developed the bespoke expertise in adapting prompts to specific models and then selecting and curating the outputs for presentation, may overestimate how good the models are. It’s a natural tendency to underestimate the influence of our own small choices in what a system does.

Individual users might neglect their own skill in getting the models to work, and the resulting overestimation of model success is reinforced when people selectively share results. Not only will individuals keep iterating until they get the output they want (like Scott did with the Mekong river photo), but if the results are mediocre it is common for some people not to bother reporting them. Even if someone is honest enough to report regardless (as I know Scott is), there is a reason why a result which is more impressive, like his one, might spread more widely and attract more attention.

All of this gives a distorted view of how good the models typically are, a distortion which is due to not paying enough attention to how the models rely on the intelligence in their inputs, rather than having some independent level of intelligence themselves.

The mistake of assuming best performance is typical performance gets shown up when models are deployed in circumstances where the bespoke human input is not possible, such as when the models are moved from individual tests to being deployed at scale through some kind of automation.

Ignoring the human input side of model performance lets us trick ourselves into thinking that the intelligence is “in” the models, rather than distributed over the human-model team.

That study: Jahani, E., Manning, B. S., Zhang, J., TuYe, H. Y., Alsobay, M., Nicolaides, C., ... & Holtz, D. (2024). Prompt Adaptation as a Dynamic Complement in Generative AI Systems. arXiv preprint arXiv:2407.14333.

Scott Alexander photo location experiments: Testing AI's GeoGuessr Genius and follow up, Highlights From The Comments On AI Geoguessr

Catch-up

This was the seventh in a mini-series on how to think about the new generation of AI models:

PODCAST: Normal Curves

I love how this show combines statistical sleuthing with the nuanced apportioning of credence to studies.

A good episode to start with is: The Backfire Effect: Can fact-checking make false beliefs stronger? (the short answer is: probably not)

PAPER: Interventions to reduce vaccine hesitancy among adolescents: a cluster-randomized trial

A rigorous test of the value of chatbot interaction for persuasion shows that it is effective in changing attitudes towards, and knowledge of, vaccination, but so too is giving teachers materials on the same topic:

School interventions targeting adolescents’ general knowledge of vaccination are rare despite their potential to reduce vaccine hesitancy. This cluster-randomized trial involving 8,589 French ninth graders from 399 schools tests two interventions against the standard curriculum. The first provided teachers with ready-to-use pedagogical activities, while the second used a chatbot. Both interventions significantly improved adolescents’ attitudes towards vaccination, the primary outcome of this trial (Pedagogical Activities: t398 = 2.99; P = 0.003; β = 0.094; 95% confidence interval (CI), (0.032, 0.156); Chatbot: t398 = 2.07; P = 0.039; β = 0.063; 95% CI, (0.003, 0.124)). Both also improved pupils’ knowledge of vaccination (Pedagogical Activities: t398 = 3.23; P = 0.0013; β = 0.103; 95% CI, (0.040, 0.165); Chatbot: t398 = 2.23; P = 0.027; β = 0.070; 95% CI, (0.008, 0.132)). That such interventions can improve pupils’ acceptance and understanding of vaccines has important consequences for public health.

This fits with our own work which showed that engagement with materials that present information in the form of dialogue supports attitude change, but that the interactivity of a chatbot is not a necessary feature for this.

Citation:

Baudouin, N., de Rouilhan, S., Huillery, E., Pasquinelli, E., Chevallier, C., & Mercier, H. (2025). Interventions to reduce vaccine hesitancy among adolescents: a cluster-randomized trial. Nature Human Behaviour, 1-9. https://doi.org/10.1038/s41562-025-02306-2

OFF TOPIC: The Fort

Radio documentary telling the story of a helicopter rescue mission in Afghanistan in January 2007 using only the words and voices of current and former members of the Armed Forces. Producer Kev Core has achieved something remarkable: ten 15-minute episodes appear at first as a thrilling action story but culminate in a meditation on military culture, finely balancing loyalty, daring, professionalism and the unholy mess of war.

Link: BBC Radio 4 The Fort

…and finally

Warrior snail. Pontifical of Guillaume Durand, Avignon, before 1390. Paris, Bibliothèque Sainte-Geneviève, ms. 143, fol. 179v.

END

Comments? Feedback? Smart prompts? I am tom@idiolect.org.uk and on Mastodon at @tomstafford@mastodon.online

¹ It may depend a bit on which statistical analysis framework you’ve adopted, but this is the basic idea. Bayesians, read this: https://pmc.ncbi.nlm.nih.gov/articles/PMC8219595/