Database performance issues, such as slow queries and high CPU usage, can severely impact your applications. Appropriate indexing is a critical optimization strategy: identifying the columns used most often in search conditions and indexing them improves query efficiency. Regular database backups are also vital for resilience and operational integrity, because they make recovery possible after hardware failures, software corruption, and other catastrophic events. This article identifies common problems and provides practical solutions to improve database performance.

Introduction to Database Performance

Database performance refers to the speed and efficiency with which a database system operates. Maintaining optimal database performance is crucial for ensuring smooth application performance and preventing performance issues that can disrupt user experience. Key database performance metrics, such as response time and throughput, are essential for monitoring and optimizing database performance.

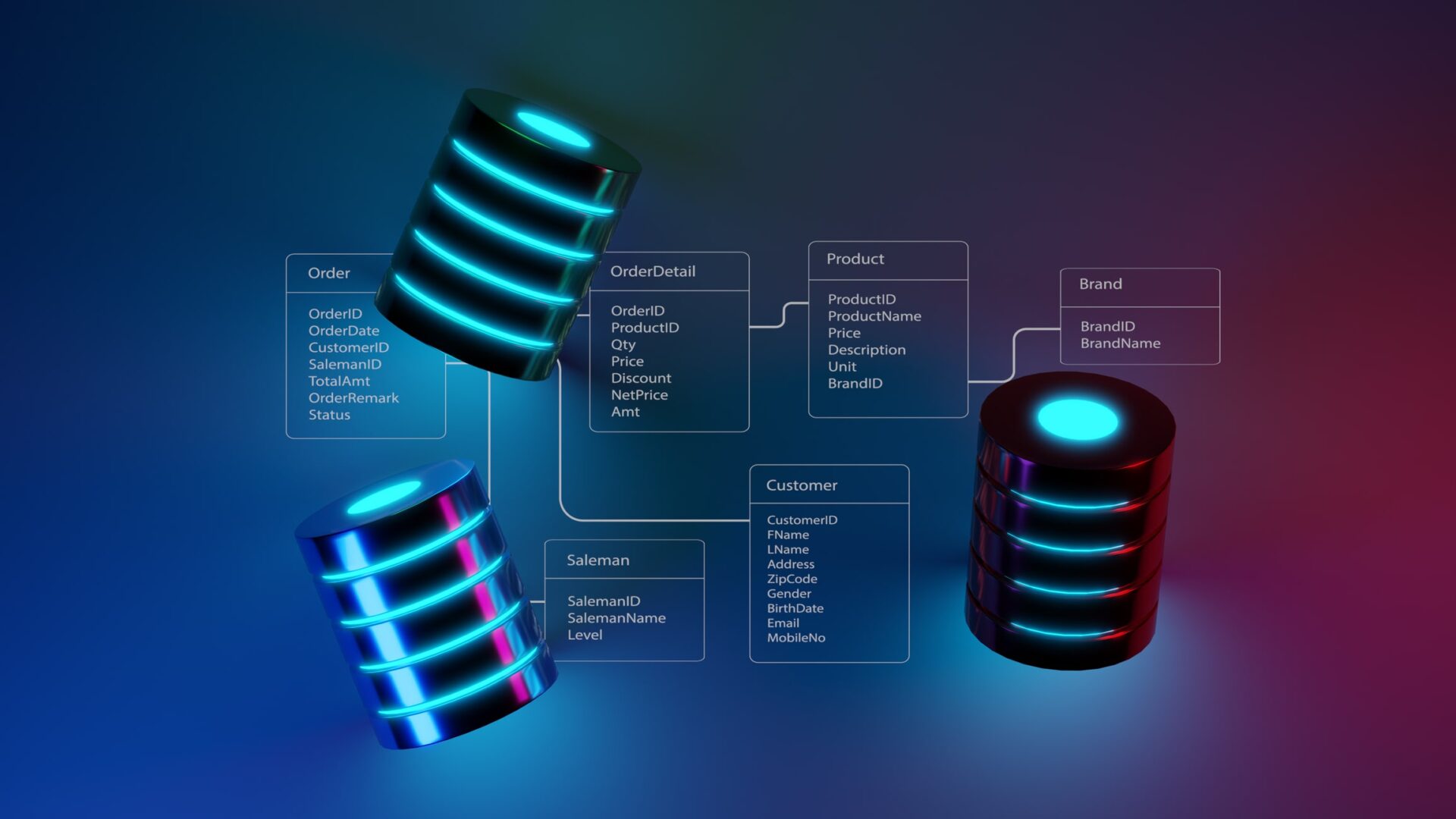

Common database performance issues, including slow query execution and inefficient queries, can significantly impact database efficiency. Identifying and addressing these performance issues is critical for maintaining optimal database performance and preventing performance degradation. Database performance problems can be caused by a variety of factors, including inefficient querying, inadequate indexing, and poor database design.

Monitoring tools, such as New Relic, can help identify performance issues and provide insights into database performance metrics. A holistic approach to database performance optimization involves considering multiple factors, including query performance, indexing, and hardware resources. By taking a comprehensive view of database performance, organizations can ensure their systems run efficiently and effectively.

Key Takeaways

- Common database performance issues such as slow query execution, high CPU utilization, and insufficient indexing significantly impact response times and user experience, particularly in high-traffic environments.

- Monitoring key performance metrics, including response time, throughput, and resource utilization, is essential to identify bottlenecks and optimize database performance effectively.

- Implementing best practices such as optimizing queries, tuning indexes, and utilizing connection pooling can enhance database efficiency and address performance challenges proactively.

Identifying Common Database Performance Issues

Identifying and addressing common issues in database performance is crucial for sustaining the efficiency and dependability of applications. These prevalent problems have a direct impact on the response times of databases, as well as user experience, particularly in environments with substantial traffic. To reach optimal database performance levels, one must first comprehend and tackle these issues.

The following are common causes for concern when it comes to database performance:

- Slow query execution

- High CPU utilization

- Disk I/O bottlenecks

- Insufficient indexing

- Locking and concurrency problems

Each of these factors can significantly degrade your system’s performance, leading to longer wait times and frustrated users. Addressing the root causes is imperative.

Monitoring performance metrics is key to pinpointing where bottlenecks occur so that you can allocate database resources more effectively. Vigilant monitoring also enables early detection of problems affecting the application, so they can be fixed before they compromise productivity or speed.

Slow query execution

Inefficient queries, stale statistics, and poorly structured SQL can drastically reduce database performance by causing slow query execution, which in turn diminishes the efficiency of the application as a whole. Common culprits include fetching extraneous data, overusing wildcard characters in searches, and badly composed SQL. Analyzing execution plans helps identify these inefficiencies, enabling database administrators to make data-driven decisions to enhance performance.

Speeding up slow queries is crucial for both application responsiveness and overall database efficiency. By making your queries more precise and better organized, you can cut execution times significantly.
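As a minimal sketch of how an execution plan exposes an inefficient query, the Python snippet below uses the standard-library sqlite3 module with an in-memory database. The customers table, its columns, and the index name are hypothetical, and other database engines expose plans through their own EXPLAIN variants with different output.

```python
import sqlite3


def query_plan(conn, sql, params=()):
    """Return the plan steps SQLite reports for a statement, without running it."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    # Each row is (id, parent, notused, detail); detail is the readable step.
    return [row[3] for row in rows]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT, name TEXT)")
conn.execute("CREATE INDEX idx_customers_email ON customers (email)")

# A leading wildcard prevents an index seek, so every entry is examined.
wildcard_plan = query_plan(
    conn, "SELECT id FROM customers WHERE email LIKE '%@example.com'"
)

# An exact match on the indexed column lets the engine seek directly.
exact_plan = query_plan(
    conn, "SELECT id FROM customers WHERE email = ?", ("ana@example.com",)
)

print(wildcard_plan)
print(exact_plan)
```

In SQLite the first plan is reported as a SCAN and the second as a SEARCH, although the exact wording varies between SQLite versions.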

High CPU utilization

Elevated CPU usage can slow query execution and degrade overall database performance. When response times exceed what would typically be expected, checking CPU utilization helps diagnose and resolve the underlying issue.

Several primary factors may be responsible for high CPU utilization, namely:

- Poorly optimized queries

- Issues with multiple operations accessing the database simultaneously (concurrency issues)

- Hardware that is either outdated or lacking in capacity

- The presence of malware

For query processing and various database tasks, the CPU plays an indispensable role. Its efficiency has a profound influence on how well the entire system operates.

Hardware constraints such as inadequate RAM, limited disk space available on hard drives, and insufficient CPU capabilities can have severe repercussions on how well a database performs. To ensure peak performance continues uninterrupted, it’s critical to conduct ongoing hardware evaluations and updates.

Disk I/O bottlenecks

Disk I/O bottlenecks emerge when the disk subsystem cannot keep up with data requests. High latency for disk reads and writes slows read-write activity, and an undersized buffer cache can force frequent flushing and re-reading of data from disk, which intensifies the bottleneck.

These disk I/O bottlenecks lead to slower response times and a worse user experience. Allocating more RAM allows commonly accessed data and query plans to be held in memory for faster retrieval, while RAID configurations can improve both storage utilization and disk I/O throughput.

Insufficient indexing

A frequent issue leading to delayed data retrieval and reduced responsiveness is the lack of sufficient indexing. If indexes are poorly set up or insufficient, it can force queries to scan through the entire dataset, which considerably hampers database performance. In environments where traffic levels are high, this problem is even more noticeable as it leads to sluggish queries and overall deteriorated performance.

Correct indexing can significantly improve the efficiency of data retrieval and boost database performance. An effective indexing strategy combines selecting appropriate index types, following established best practices, and maintaining indexes regularly. It is crucial to identify and address indexing issues promptly, before they impact production users.
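To illustrate the difference an index makes, here is a small sketch using Python’s built-in sqlite3 module. The orders table and the index name are assumptions made for the example, and the plan text is specific to SQLite.

```python
import sqlite3


def plan_for_customer_lookup(conn):
    """Return SQLite's plan for a lookup of one customer's orders."""
    rows = conn.execute(
        "EXPLAIN QUERY PLAN SELECT * FROM orders WHERE customer_id = ?", (42,)
    ).fetchall()
    return rows[0][3]  # the human-readable plan step


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)"
)

# Without an index on customer_id the engine must read the whole table.
plan_without_index = plan_for_customer_lookup(conn)

# With the index in place the same query becomes an index search.
conn.execute("CREATE INDEX idx_orders_customer_id ON orders (customer_id)")
plan_with_index = plan_for_customer_lookup(conn)

print(plan_without_index)
print(plan_with_index)
```

Checking the plan before and after creating an index is a cheap way to confirm that the index is actually used by the query it was meant to help.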

Locking and concurrency problems

Contention and delays in database operations can arise from locking and concurrency issues, which become particularly problematic in environments with heavy traffic. These complications occur when conflicting access by multiple users causes rows or tables to be locked, forcing other operations to wait and leading to considerable slowdowns.

When many users access a database concurrently, contention can also drive up CPU usage and, in the worst case, produce deadlocks that bring operations to a standstill. To mitigate these concerns, tune the database configuration and monitor for conflicts.
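The sketch below reproduces lock contention on a small scale with Python’s sqlite3 module and a temporary database file. The events table and the 0.1 second wait are hypothetical. SQLite locks the whole database for writes, whereas server databases usually lock at a finer granularity and name their lock wait settings differently, so treat this as an illustration of the pattern only.

```python
import os
import sqlite3
import tempfile


def try_insert(path, wait_seconds):
    """Attempt one write, waiting at most wait_seconds for a competing lock."""
    conn = sqlite3.connect(path, timeout=wait_seconds)
    try:
        conn.execute("INSERT INTO events (note) VALUES ('second writer')")
        conn.commit()
        return True
    except sqlite3.OperationalError:
        # Raised with "database is locked" when the wait expires.
        return False
    finally:
        conn.close()


path = os.path.join(tempfile.mkdtemp(), "demo.db")

# isolation_level=None leaves transaction control to the explicit SQL below.
writer = sqlite3.connect(path, isolation_level=None)
writer.execute("CREATE TABLE events (note TEXT)")

writer.execute("BEGIN IMMEDIATE")  # take the write lock and hold it
writer.execute("INSERT INTO events (note) VALUES ('first writer')")
blocked = try_insert(path, wait_seconds=0.1)  # gives up: lock still held

writer.execute("COMMIT")  # a short transaction releases the lock quickly
succeeded = try_insert(path, wait_seconds=0.1)
writer.close()

print(blocked, succeeded)
```

The second writer fails while the first transaction is open and succeeds once it commits, which is why keeping transactions short tends to reduce contention.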

Key Database Performance Metrics to Monitor

Ensuring the efficient operation of a database is crucial, and this relies heavily on monitoring essential performance metrics. Utilizing tools such as New Relic and SQL profilers aids in pinpointing areas where performance lags occur, providing clear insights into how to enhance database productivity. By setting appropriate alert thresholds, one can keep nuisance notifications at bay while staying informed about pressing performance problems that require immediate action.

Several key indicators measure database efficiency: response time, throughput, scalability, and the utilization of system resources such as CPU, memory, and disk I/O. Each metric offers a different view of the overall health of your database.

Response time captures the duration between a user issuing a query and receiving a result, making it an integral measure of responsiveness. Throughput quantifies the volume of transactions completed within a given time span. High-traffic scenarios demand robust scalability to avoid a decline in service quality as demand grows. Examining CPU, memory, and disk usage reveals constraints that slow operations, which is essential to maintain optimal database performance.

Real-time monitoring tools let you observe database behavior as it happens and track ongoing health benchmarks, so that problems are identified before they escalate into significant hindrances.

Response time

Response time measures the period from when a query starts to when the system replies, which makes it crucial for understanding the experience users have while interacting with applications. Keeping response times short, even under heavy traffic, prevents latency problems and helps the application feel immediate and responsive.
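One way to put a number on response time is to time a query repeatedly and report percentiles rather than a single average. The following is a rough sketch using Python’s standard library; the helper names, the trivial query, and the number of runs are all assumptions for illustration.

```python
import math
import sqlite3
import time


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of samples."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def time_query(conn, sql, runs=50):
    """Run a query repeatedly and return each response time in milliseconds."""
    timings = []
    for _ in range(runs):
        started = time.perf_counter()
        conn.execute(sql).fetchall()
        timings.append((time.perf_counter() - started) * 1000)
    return timings


conn = sqlite3.connect(":memory:")
timings_ms = time_query(conn, "SELECT 1")
print(
    f"median={percentile(timings_ms, 50):.3f} ms, "
    f"p95={percentile(timings_ms, 95):.3f} ms"
)
```

High percentiles such as p95 show the slow responses that an average can hide, which is often what users notice under heavy traffic.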

Throughput

Throughput measures the number of transactions executed within a set period. It is vital for judging whether the database can handle a high number of simultaneous transactions and process data efficiently.
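As a simple worked example, throughput can be computed by counting the transactions that complete inside a time window and dividing by the window length. The timestamps below are invented for the sketch, and the function name is hypothetical.

```python
def throughput_per_second(completed_at, window_start, window_end):
    """Transactions completed in [window_start, window_end) per second."""
    if window_end <= window_start:
        raise ValueError("window_end must be after window_start")
    completed = sum(1 for t in completed_at if window_start <= t < window_end)
    return completed / (window_end - window_start)


# Hypothetical completion timestamps, in seconds since the start of a test run.
completed_at = [0.2, 0.9, 1.1, 1.5, 1.7, 2.4, 2.6, 3.3, 3.8, 4.0]

print(throughput_per_second(completed_at, 0.0, 4.0))  # 9 transactions / 4 s = 2.25
```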

Scalability

In cloud environments, as the number of users surges in high-traffic scenarios, scalability is crucial to avert performance degradation that could significantly affect system operations. When vertical scaling cannot keep pace with demand, performance suffers.

Addressing the expansion needs of relational databases can be difficult. It often involves intricate hardware configurations and can encounter problems associated with vertical scaling processes.

Resource utilization (CPU, memory, disk I/O)

Tracking CPU, memory, and disk I/O usage makes it possible to pinpoint resource constraints and performance bottlenecks, and shows how well a database handles its resources. Network infrastructure metrics matter too: the storage area network implementation and real-time performance measurements can significantly affect database performance and user experience.

Ensuring that a database utilizes its resources effectively is critical for maintaining smooth operations and adapting to different levels of demand.

Monitoring tools

Sophisticated monitoring tools facilitate the immediate evaluation of database performance and health indicators. They allow for continuous observation and comprehensive examination of performance metrics.

As an example, ScaleGrid’s built-in performance monitoring offers instantaneous notifications and tracking of metrics to detect problems before they worsen.

Proven Solutions to Improve Database Performance

To ensure that a database operates at peak efficiency, it is critical to apply established best practices and effective solutions. When choosing database performance tools, it is important to differentiate between on-premises, cloud, and SaaS solutions, as the choice depends on the enterprise’s specific needs for performance monitoring and management. Enhancing query performance through optimization, refining indexes for better data retrieval speeds, and adopting connection pooling are among the key strategies that contribute significantly to improving overall database efficiency. These measures not only bolster the execution of queries, but also mitigate frequent issues causing degradation in database performance.

Optimizing queries means structuring them to make good use of indexes while avoiding unnecessary computation. Tuning indexes appropriately accelerates access to the data that queries need, and connection pooling reduces the overhead of setting up a new connection each time one is needed. It is also crucial to review the schema, for example in a MySQL-based system, and to implement robust scaling methods that handle growing workloads efficiently.

By proactively building these practices into how you maintain your system, you can tackle potential performance problems before they surface, securing consistent responsiveness and dependability across your database operations.

Optimize queries

Enhancing the performance of a database is crucial, particularly in settings with substantial traffic. This can be achieved by writing queries that make efficient use of indexes and by cutting down on needless calculations. Rewriting inefficient queries can markedly boost database efficiency.

To optimize query execution, design queries carefully: avoid retrieving superfluous data, use suitable indexes, and avoid indiscriminate wildcard characters in SQL expressions. Adhering to these best practices reduces execution times and improves overall query handling. Proactive testing and monitoring are also vital to identify and resolve other performance issues, such as database bottlenecks and broader system performance challenges, thereby improving overall application performance.

Index tuning

Improving the performance of a database is largely contingent upon efficient data retrieval, which can be achieved through effective indexing. The lack of adequate indexing may result in sluggish data access and a decline in overall performance, particularly when the system experiences heavy traffic. Significant enhancements to indexing strategies can be realized by thoroughly reviewing and refining the structure of the database schema.

To sustain peak performance levels, it’s essential to conduct periodic assessments and establish suitable indexes. It’s also important to steer clear of excessive indexing while keeping index statistics up-to-date and verifying that indexes are configured correctly so as to bolster query efficiency.
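Keeping index statistics current is engine-specific. As one hedged illustration, SQLite refreshes its planner statistics with the ANALYZE command and stores them in the sqlite_stat1 table. The sketch below uses Python’s sqlite3 module; the orders table, the index name, and the helper function are hypothetical.

```python
import sqlite3


def index_statistics(conn, table):
    """Return {index_name: stat} from sqlite_stat1, or {} if ANALYZE never ran."""
    has_stats = conn.execute(
        "SELECT 1 FROM sqlite_master WHERE name = 'sqlite_stat1'"
    ).fetchone()
    if not has_stats:
        return {}
    rows = conn.execute(
        "SELECT idx, stat FROM sqlite_stat1 WHERE tbl = ? AND idx IS NOT NULL",
        (table,),
    ).fetchall()
    return dict(rows)


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT)")
conn.execute("CREATE INDEX idx_orders_status ON orders (status)")
conn.executemany(
    "INSERT INTO orders (status) VALUES (?)",
    [("open",)] * 10 + [("closed",)] * 990,
)

before = index_statistics(conn, "orders")  # no statistics gathered yet
conn.execute("ANALYZE")  # refresh the planner's statistics
after = index_statistics(conn, "orders")

print(before)
print(after)
```

Other engines offer comparable maintenance commands, so the equivalent step should be taken from the documentation of the database in use.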

Connection pooling

By utilizing connection pooling, the burden of setting up new connections is diminished as it recycles already established connections. An active pool of connections is kept on hand so that applications can borrow a connection from this pool instead of generating a fresh one with every use.

Managing numerous user connections this way improves database efficiency by reducing connection latency and increasing throughput.
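The borrowing pattern described above can be sketched in a few lines of Python. This is a deliberately minimal pool built on the standard library, assuming SQLite connections and a hypothetical ConnectionPool class; production drivers and frameworks typically ship their own pools with health checks and connection recycling.

```python
import queue
import sqlite3
from contextlib import contextmanager


class ConnectionPool:
    """Keep a fixed set of open connections and lend them out on demand."""

    def __init__(self, database, size):
        self._idle = queue.Queue(maxsize=size)
        for _ in range(size):
            # check_same_thread=False lets a pooled connection move between threads.
            self._idle.put(sqlite3.connect(database, check_same_thread=False))

    @contextmanager
    def connection(self, timeout=5.0):
        """Borrow a connection, waiting up to timeout seconds if none is idle."""
        conn = self._idle.get(timeout=timeout)  # raises queue.Empty on timeout
        try:
            yield conn
        finally:
            self._idle.put(conn)  # return it for reuse instead of closing it

    def close(self):
        """Close every idle connection."""
        while not self._idle.empty():
            self._idle.get_nowait().close()


pool = ConnectionPool(":memory:", size=2)
with pool.connection() as conn:
    print(conn.execute("SELECT 1").fetchone())
pool.close()
```

Bounding the pool size also caps how many connections the application can open, which protects the database during traffic spikes.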

Schema review

A well-crafted database schema, including well-chosen primary keys, is crucial for optimizing performance and ensuring efficient data management. Properly designed fields and tables, with correct data types and clear documentation, improve performance and provide flexibility for future needs.

Regular schema reviews and optimizations prevent inefficiencies and improve overall database performance. Factors like high CPU utilization and improper data types can lead to poor performance, underlining the importance of optimizing these elements.

Scaling strategies

Scalability is essential for handling growing workloads and requirements in database systems. It can be achieved through vertical scaling, which increases the capacity of an individual server, or through horizontal scaling, which spreads the workload among several servers. Sharding partitions data across those servers, and a well-chosen shard key helps keep the distribution even.
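A common way to route data to shards is to hash a key and take the result modulo the number of shards. The sketch below shows that idea in Python; the shard_for function, the customer IDs, and the shard count of four are assumptions for illustration, not a description of any particular product.

```python
import hashlib
from collections import Counter


def shard_for(key, shard_count):
    """Map a key to a shard index using a stable hash, so every process agrees."""
    digest = hashlib.sha256(str(key).encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count


# Hypothetical customer IDs spread across four shards.
distribution = Counter(shard_for(f"customer-{n}", 4) for n in range(10_000))
print(sorted(distribution.items()))
```

Modulo hashing spreads keys fairly evenly, but changing the shard count remaps most keys, which is why consistent hashing is often considered when shards are added or removed frequently.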

Data Types and Storage

Choosing the correct data type is essential for maintaining optimal database performance and preventing performance issues. Selecting the appropriate data type can help reduce storage space requirements and improve query performance. Understanding the different data types available, including integer, varchar, and datetime, is critical for effective database design.

Using the smallest data type that can safely hold a column’s full range of values improves database performance and reduces storage requirements, because narrower rows let more data fit in each page and in memory. Data types also affect query performance, so the choice matters for both speed and storage.

Data compression can further reduce storage requirements, and indexing can improve query performance, although indexes themselves consume additional storage. Regular monitoring of database storage space and data types can help identify potential performance issues. By carefully selecting and managing data types, organizations can ensure their databases operate efficiently and effectively.
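The arithmetic behind this advice is straightforward. The sketch below picks the narrowest signed integer type for a value range, using the sizes that MySQL, PostgreSQL, and SQL Server share for SMALLINT, INT, and BIGINT, and estimates the raw bytes saved. The function names and the row count are hypothetical, and real engines add per-row and per-page overhead that this ignores.

```python
# (name, bytes, minimum, maximum) for common signed integer column types.
INTEGER_TYPES = [
    ("SMALLINT", 2, -2**15, 2**15 - 1),
    ("INT", 4, -2**31, 2**31 - 1),
    ("BIGINT", 8, -2**63, 2**63 - 1),
]


def smallest_integer_type(min_value, max_value):
    """Return (name, bytes) of the narrowest type that holds the whole range."""
    for name, size, low, high in INTEGER_TYPES:
        if low <= min_value and max_value <= high:
            return name, size
    raise ValueError("range does not fit in a 64-bit integer")


def column_bytes(size, row_count):
    """Raw bytes one fixed-width column occupies, ignoring engine overhead."""
    return size * row_count


name, size = smallest_integer_type(0, 40_000)
saved = column_bytes(8, 100_000_000) - column_bytes(size, 100_000_000)
print(name, saved)  # INT 400000000
```

In this example, storing values up to 40,000 as INT instead of BIGINT saves about 400 MB of raw column data across 100 million rows.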

Disk Space and Management

Disk space management is critical for maintaining optimal database performance and preventing performance issues. Insufficient disk space can lead to performance degradation and slow query execution. Regular monitoring of disk space usage can help identify potential performance issues and optimize database performance.
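A basic disk space check needs nothing beyond Python’s standard library. In the sketch below the temporary directory stands in for the database’s data directory, and the 80 percent threshold is an arbitrary example value rather than a recommendation.

```python
import shutil
import tempfile


def disk_used_percent(path):
    """Percentage of the volume containing path that is currently in use."""
    usage = shutil.disk_usage(path)
    return usage.used / usage.total * 100


def needs_attention(path, threshold_percent=80.0):
    """True when usage has reached the (hypothetical) alert threshold."""
    return disk_used_percent(path) >= threshold_percent


data_dir = tempfile.gettempdir()  # stand-in for the database's data directory
print(f"{disk_used_percent(data_dir):.1f}% used, alert={needs_attention(data_dir)}")
```

Running a check like this on a schedule gives early warning before a full disk starts to slow or block writes.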

Disk space can be optimized by removing unnecessary files, compressing data, and using efficient storage techniques. Database log files can also impact disk space usage, and regular log file management is essential for maintaining optimal database performance. Primary keys and indexes improve query performance, but indexes consume disk space of their own, so unused indexes are worth removing.

Database objects, such as tables and indexes, can impact disk space usage, and regular monitoring is essential for maintaining optimal database performance. A comprehensive disk space management strategy involves considering multiple factors, including data types, storage space, and query performance, to optimize database performance and prevent performance issues. By implementing effective disk space management practices, organizations can ensure their databases run smoothly and efficiently.

How ScaleGrid Addresses Database Performance Challenges

ScaleGrid provides an all-encompassing solution for database performance management, utilizing automation and specialized support to adeptly manage multiple workloads. The service offered by ScaleGrid proactively addresses issues related to database performance, guaranteeing efficient functioning across a variety of database environments. Employing the techniques provided by ScaleGrid enables organizations to enhance both their database’s effectiveness and overall operational efficiency.

Through its case studies, ScaleGrid demonstrates how various businesses have improved their management capabilities and boosted their databases’ performance using these services. An example includes an e-commerce company that realized substantial savings in costs along with enhanced performance after adopting solutions from ScaleGrid. Financial entities that incorporated the use of ScaleGrid experienced better system uptime as well as more robust monitoring mechanisms.

ScaleGrid stands out due to key offerings such as integrated performance monitoring, expert optimization support, a high-availability architecture designed for continuous operation, scalable infrastructure that adapts to changing demands, and caching support that speeds up data retrieval.

Integrated performance monitoring

ScaleGrid employs monitoring tools that operate in real-time to proactively detect any issues with database performance, enabling prompt corrective actions. By continuously tracking the response times, these tools assist in pinpointing bottlenecks within applications, thus ensuring the maintenance of optimal database performance and averting any decline. Hardware limitations can have a significant impact on database performance, emphasizing the crucial role of resources like RAM, hard disk space, and CPU.

Expert support for optimization

ScaleGrid offers professional support centered on enhancing performance optimization to help clients boost the efficiency of their databases. They provide targeted advice on optimizing tactics that are specifically suited for the distinct requirements of each client’s database environment.

By offering personalized assistance, businesses are equipped to tackle not only inefficient queries but also various other issues related to database performance effectively.

High availability architecture

The architecture of ScaleGrid is designed to sustain high availability, making certain that databases stay functional despite system failures. Through automated failover mechanisms, the service continues without interruption even during outages, thus reducing downtime and preserving smooth functioning, a vital aspect for web applications and essential systems.

Scalable solutions

ScaleGrid’s offerings provide a smooth scaling experience to meet the increasing demands of databases while maintaining optimal performance. Users can dynamically modify database resources as needed to manage fluctuating workloads, ensuring that expanding data requirements are managed effectively.

Caching support

By employing sophisticated caching techniques, ScaleGrid substantially boosts the speed of data retrieval, leading to a considerable enhancement in database performance. The adoption of these mechanisms leads to decreased access times and amplified throughput by ensuring that commonly accessed data is easily accessible.

The outcome is a database setting that operates with greater efficiency and responsiveness.
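To show the general idea of keeping commonly accessed data close at hand, here is a generic read-through cache with a time-to-live, written in Python. It is an illustrative sketch only and does not describe how ScaleGrid implements caching; the ReadThroughCache class, the load_product function, and the 30 second expiry are all hypothetical.

```python
import time


class ReadThroughCache:
    """Serve repeated reads from memory and fall back to a loader on a miss."""

    def __init__(self, loader, ttl_seconds, clock=time.monotonic):
        self._loader = loader
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries = {}  # key -> (expires_at, value)

    def get(self, key):
        entry = self._entries.get(key)
        now = self._clock()
        if entry is not None and entry[0] > now:
            return entry[1]  # fresh hit: no database round trip
        value = self._loader(key)  # miss or expired: query the source
        self._entries[key] = (now + self._ttl, value)
        return value


database_reads = []


def load_product(product_id):
    """Hypothetical stand-in for a database query."""
    database_reads.append(product_id)
    return {"id": product_id, "name": f"product-{product_id}"}


cache = ReadThroughCache(load_product, ttl_seconds=30)
cache.get(7)
cache.get(7)
print(len(database_reads))  # 1: the second read was served from the cache
```

The time-to-live bounds how stale a cached value can become, so it is usually chosen according to how quickly the underlying data changes.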

Summary

It is crucial to actively manage database performance in order to guarantee the efficiency and dependability of applications. Recognizing and tackling prevalent performance issues, keeping an eye on essential metrics, and employing established solutions are all necessary steps businesses must take to preserve optimal database performance. Utilizing services such as those offered by ScaleGrid for management can also boost the reliability and efficiency of databases. It’s important to note that a finely tuned database serves as the pivotal support for any responsive and prosperous application.