OpenRouter Integration

In this guide, we’ll show you how to integrate Langfuse with OpenRouter.

OpenRouter provides an OpenAI-compatible completion API for more than 280 language models and providers, which you can call directly or through the OpenAI SDK. This gives developers access to a variety of LLMs through a single, unified interface.

Since OpenRouter uses the OpenAI API schema, we can utilize Langfuse’s native integration with the OpenAI SDK, available in both Python and TypeScript.
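
To make "OpenAI-compatible" concrete, here is a minimal sketch of a direct call, using only the Python standard library. It builds the request but does not send it. The helper name build_chat_request is hypothetical, and the endpoint path and Bearer-token header are assumed to follow the OpenAI layout under OpenRouter's base URL; check OpenRouter's documentation before relying on them.

```python
import json
import urllib.request

# Assumption: the chat completions path mirrors OpenAI's layout under OpenRouter's base URL.
OPENROUTER_CHAT_URL = "https://openrouter.ai/api/v1/chat/completions"


def build_chat_request(api_key: str, model: str, user_message: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request.

    Hypothetical helper for illustration; it is not part of any SDK.
    """
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        OPENROUTER_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_chat_request(
    "<YOUR_OPENROUTER_API_KEY>",
    "qwen/qwen-plus",
    "Tell me a fun fact about space.",
)
# Inspect the request instead of sending it
print(request.get_method(), request.full_url)
print(json.loads(request.data)["messages"][0]["role"])
```

With a real API key, passing the request to urllib.request.urlopen would send it. The OpenAI SDK assembles the same kind of payload for you, which is why pointing it at OpenRouter only requires a different base URL.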

Get started

Install the required packages:

    pip install langfuse openai

Then set your API keys as environment variables so that the SDKs can read them:

    import os

    # Set your Langfuse API keys
    os.environ["LANGFUSE_SECRET_KEY"] = "sk-lf-..."
    os.environ["LANGFUSE_PUBLIC_KEY"] = "pk-lf-..."
    # 🇪🇺 EU region
    os.environ["LANGFUSE_HOST"] = "https://cloud.langfuse.com"
    # 🇺🇸 US region
    # os.environ["LANGFUSE_HOST"] = "https://us.cloud.langfuse.com"

    # Set your OpenRouter API key (the OpenAI SDK reads it from the 'OPENAI_API_KEY' environment variable)
    os.environ["OPENAI_API_KEY"] = "<YOUR_OPENROUTER_API_KEY>"

Example 1: Simple LLM Call

Since OpenRouter provides an OpenAI-compatible API, we can use the Langfuse OpenAI SDK wrapper to automatically log OpenRouter calls as generations in Langfuse.

- The base_url is set to OpenRouter’s API endpoint.
- You can replace "qwen/qwen-plus" with any model available on OpenRouter.
- The default_headers can include optional headers as per OpenRouter’s documentation.

    # Import the Langfuse OpenAI SDK wrapper
    from langfuse.openai import openai

    # Create an OpenAI client with OpenRouter's base URL
    client = openai.OpenAI(
        base_url="https://openrouter.ai/api/v1",
        default_headers={
            "HTTP-Referer": "<YOUR_SITE_URL>",  # Optional: Your site URL
            "X-Title": "<YOUR_SITE_NAME>",  # Optional: Your site name
        }
    )

    # Make a chat completion request
    response = client.chat.completions.create(
        model="qwen/qwen-plus",
        messages=[
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": "Tell me a fun fact about space."}
        ],
        name="fun-fact-request"  # Optional: Name of the generation in Langfuse
    )

    # Print the assistant's reply
    print(response.choices[0].message.content)

Example 2: Nested LLM Calls

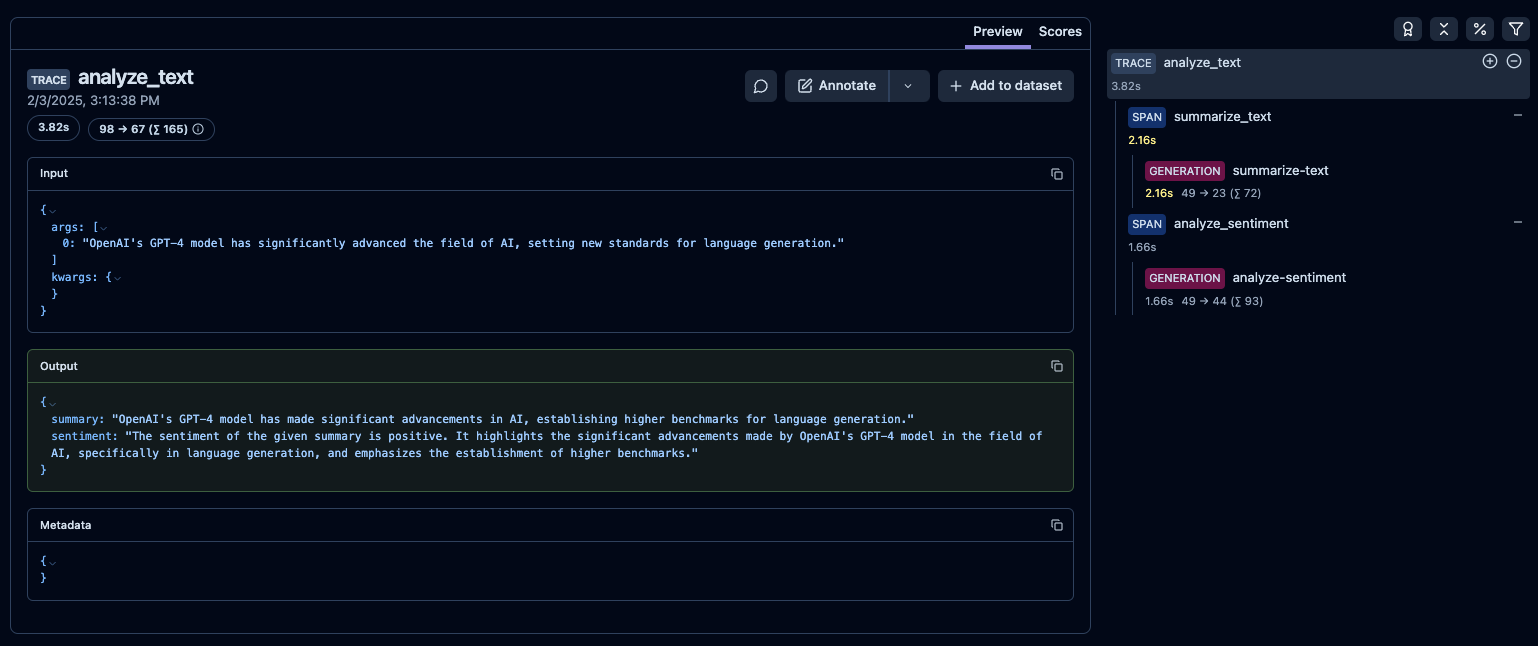

By using the @observe() decorator, we can capture execution details of any Python function, including nested LLM calls, inputs, outputs, and execution times. This provides in-depth observability with minimal code changes.

- The @observe() decorator captures inputs, outputs, and execution details of the functions.
- Nested functions summarize_text and analyze_sentiment are also decorated, creating a hierarchy of traces.
- Each LLM call within the functions is logged, providing a detailed trace of the execution flow.

    from langfuse import observe
    from langfuse.openai import openai

    # Create an OpenAI client with OpenRouter's base URL
    client = openai.OpenAI(
        base_url="https://openrouter.ai/api/v1",
    )

    @observe()  # This decorator enables tracing of the function
    def analyze_text(text: str):
        # First LLM call: Summarize the text
        summary_response = summarize_text(text)
        summary = summary_response.choices[0].message.content

        # Second LLM call: Analyze the sentiment of the summary
        sentiment_response = analyze_sentiment(summary)
        sentiment = sentiment_response.choices[0].message.content

        return {
            "summary": summary,
            "sentiment": sentiment
        }

    @observe()  # Nested function to be traced
    def summarize_text(text: str):
        return client.chat.completions.create(
            model="openai/gpt-3.5-turbo",
            messages=[
                {"role": "system", "content": "You summarize texts in a concise manner."},
                {"role": "user", "content": f"Summarize the following text:\n{text}"}
            ],
            name="summarize-text"
        )

    @observe()  # Nested function to be traced
    def analyze_sentiment(summary: str):
        return client.chat.completions.create(
            model="openai/gpt-3.5-turbo",
            messages=[
                {"role": "system", "content": "You analyze the sentiment of texts."},
                {"role": "user", "content": f"Analyze the sentiment of the following summary:\n{summary}"}
            ],
            name="analyze-sentiment"
        )

    # Example usage
    text_to_analyze = "OpenAI's GPT-4 model has significantly advanced the field of AI, setting new standards for language generation."
    analyze_text(text_to_analyze)

Public link to example trace in Langfuse

Learn More

- OpenRouter Quick Start Guide: https://openrouter.ai/docs/quick-start

- Langfuse OpenAI Integration: https://langfuse.com/integrations/model-providers/openai-py

- Langfuse @observe() Decorator: https://langfuse.com/docs/sdk/python/decorators