One of my friends is really into using LLMs to automate office processes. He has a few different accounts, including a business ChatGPT account which he’s making heavy use of. Unlike most of the tech bros who constantly talk rubbish about AI, he’s really enthusiastic about it because he has real world uses that are making a big difference for his company. I can’t go into specifics, as it’s confidential, but I do want to speak in general terms about it.

Data Cleansing

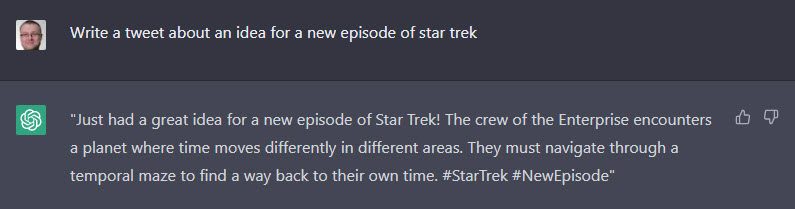

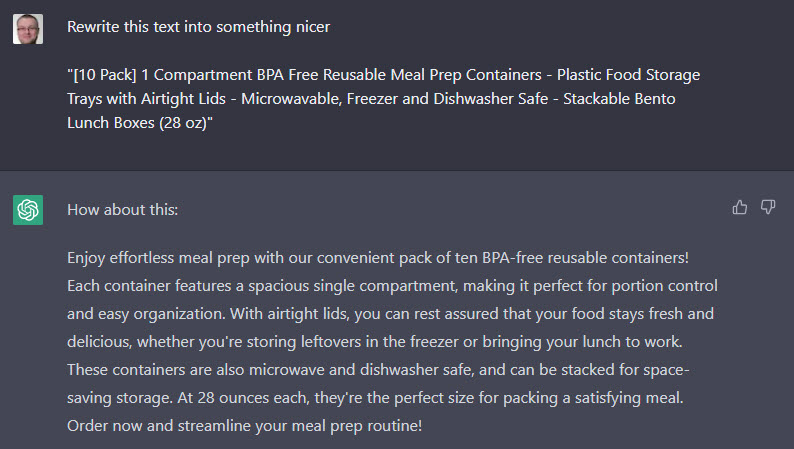

One of the processes he’s excited about is essentially data cleansing. His company has a lot of publicly available data about products, but the quality and quantity of that data varies considerably. What’s more, the data needs additional categorization.

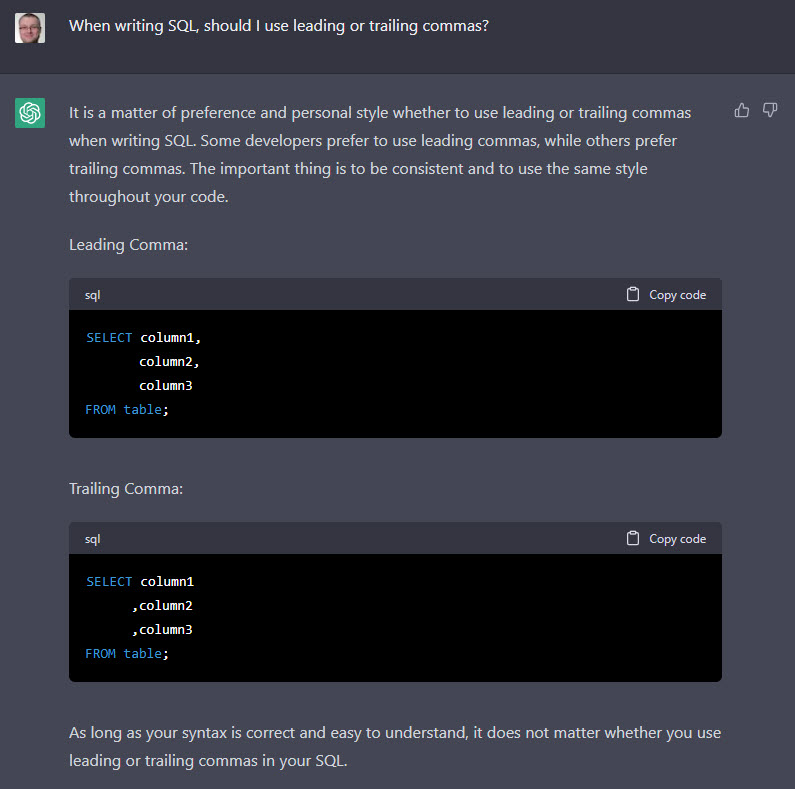

He’s never considered himself a “developer”. He’s a management type, but he’s actually done quite a bit of development over the years. With the assistance of ChatGPT he’s written some Python to submit source data to ChatGPT, get it to clean up the data, fill in some of the missing bits and categorize it. The cleansed data is dumped out to files where it can be checked and loaded back into the system.
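To give a rough idea of the shape of a process like this, here’s a minimal sketch. To be clear, this is not his code. The function names, the prompt and the JSON Lines output format are all made up for illustration, and `ask_llm` is a placeholder for whatever call you would make to your LLM of choice. The `fake_llm` function just stands in for it so the example runs without an account.

```python
import json
from pathlib import Path

# Hypothetical prompt. A real one would describe the fields and categories in detail.
PROMPT_TEMPLATE = (
    "Clean up this product record, fill in any missing fields you can infer, "
    "and add a 'category' field. Reply with a single JSON object only.\n\n{record}"
)


def cleanse_records(records, ask_llm, out_path):
    """Send each record to the LLM and dump the results to a JSON Lines file.

    ask_llm is any callable that takes a prompt string and returns the reply text.
    Returns the number of replies that could not be used, so they can be checked.
    """
    failures = 0
    with Path(out_path).open("w", encoding="utf-8") as out:
        for record in records:
            prompt = PROMPT_TEMPLATE.format(record=json.dumps(record))
            reply = ask_llm(prompt)
            try:
                cleaned = json.loads(reply)
                # Anything that isn't a JSON object is treated as a failure.
                status = "ok" if isinstance(cleaned, dict) else "unexpected"
            except json.JSONDecodeError:
                cleaned, status = None, "unparseable"
            if status != "ok":
                failures += 1
            # Keep the source next to the result so a person can review the change.
            row = {"source": record, "cleaned": cleaned, "status": status}
            out.write(json.dumps(row) + "\n")
    return failures


def fake_llm(prompt):
    """Stand-in for a real LLM call (assumption: the reply is JSON text)."""
    record = json.loads(prompt.split("\n\n", 1)[1])
    record["name"] = record["name"].strip().title()
    record.setdefault("category", "uncategorised")
    return json.dumps(record)
```

Calling `cleanse_records(my_records, fake_llm, "cleansed.jsonl")` writes one line per record. Keeping the source record, the cleaned record and a status together in the output is what makes the “checked and loaded back” step practical, because anything the model mangled is easy to spot before it goes near the system.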

He’s had this data cleansing process running overnight for a few weeks and it’s chugging through his data and doing a great job so far. Because of the nature of the task, he doesn’t have to deal with hallucinations or security issues, which is nice. His estimate is it would have taken about 10 years for his current folks to do this manually.

There are other tasks he’s already automated, or is in the process of automating, but I want to focus on this one.

But would you have done it manually?

We often hear about AI taking people’s jobs, but I’m not always convinced that’s true, and here’s why.

When we were talking I asked if he would have done this data cleansing manually if he didn’t have access to ChatGPT (or some other LLM) to help him. His answer was probably not. They couldn’t really commit to a 10 year project, or hire 10 times the people to make it a 1 year project, so they probably would have just made do with crappy data.

This reminds me of another automation story

I was chatting to someone who was waxing lyrical about some automation, and how it had saved so much time for one of his teams. What he didn’t know is I had been part of that automation process, and I explained to him that the team in question never used to bother to perform this task in the past. So technically it wasn’t saving them any time. The automation had allowed them to do something that they should have always been doing, but never bothered to before. 🙂

But what about the current job cuts?

I’m not saying no jobs will be lost because of AI, the same way I’m not saying no jobs will be lost because of automation. What I am convinced of is some work will end up being done by AI and automation that would never have got done without it.

You can argue this stopped new people being employed to do the work, but I suspect those jobs would never have happened in the first place.

I rarely see people saying their company needs to do less work. What they want to do is to get more work done without hiring more people. I think that is a very different scenario.

But there are massive job cuts going on in the tech industry because of AI, right? Wrong. The big players invariably say they are making these cuts because of AI, but that’s a pile of crap. In many cases they are getting rid of the people they over-hired during the pandemic or thinning out roles they “think” are no longer needed.

So why say it’s because of AI? If you shed 10,000 staff that sounds like either your business is screwed, or you were hiring stupid numbers of people for no reason in the past. Neither of these options sounds good to investors. If you lay off 10,000 people and say you were able to streamline because of AI you sound super efficient and cool. Investors love that crap.

Conclusion

I follow the AI space, and a big chunk of what is said is total rubbish. The press loves exaggerated claims and drama because it gets attention. As a result you can easily start to think the whole thing is rubbish, but let’s not throw the baby out with the bath water.

I can see uses for it, and coming back to the title of the post, I think some of them will be for automating tasks you wouldn’t otherwise have the time or money to do.

Cheers

Tim…

PS. I understand I’m tossing around the term AI, when I’m not really talking about AI. Chill! 🙂

PPS. I was chatting to my friend again last night and asked how the release of ChatGPT 5 had affected his workflows. The response was, “a lot”, and not in a good way. Not having control over when these things drop is a problem.

Update: A friend messaged me, and I thought it was worth sharing as they are very important questions. See his questions, and my responses below. 🙂

Q: Has he breached Data Protection law by sharing the data with the LLM?

A: No. All the data is publicly available. Nothing private is being shared with the LLM. When I mentioned, “I can’t go into specifics, as it’s confidential”, I was referring to my conversation, not the data itself.

Q: Has he breached IP laws by sharing outputs or work generated by others both internal and external to the business?

A: No.

Q: Does his company know he is doing this, and has he gotten approval to share this data etc. externally?

A: He’s the boss, so yes he has approval to do this. 🙂