I developed, together with others, the new CPU-time profiler for Java, which is now included in JDK 25. A few weeks ago, I covered the profiler’s user-facing aspects, including the event types, configuration, and rationale, alongside the foundations of safepoint-based stack walking in JFR (see Taming the Bias: Unbiased Safepoint-Based Stack Walking). If you haven’t read those yet, I recommend starting there. In this week’s blog post, I’ll dive into the implementation of the new CPU-time profiler.

It was a remarkable coincidence that safepoint-based stack walking made it into JDK 25. Thanks to that, I could build on top of it without needing to re-implement:

- The actual stack walking given a sampling request

- Integration with the safepoint handler

Of course, I worked on this before, as described in Taming the Bias: Unbiased Safepoint-Based Stack Walking. But Erik’s solution for JDK 25 was much more complete and profited from his decades of experience with JFR. In March 2025, whether the new stack walker would get into JDK 25 was still unclear. So I came up with other ideas (which I’m glad I didn’t need). You can find that early brain-dump in Profiling idea (unsorted from March 2025).

In this post, I’ll focus on the core components of the new profiler, excluding the stack walking and safepoint handler. Hopefully, this won’t be the last article in the series; I’m already researching the next one.

Main Components

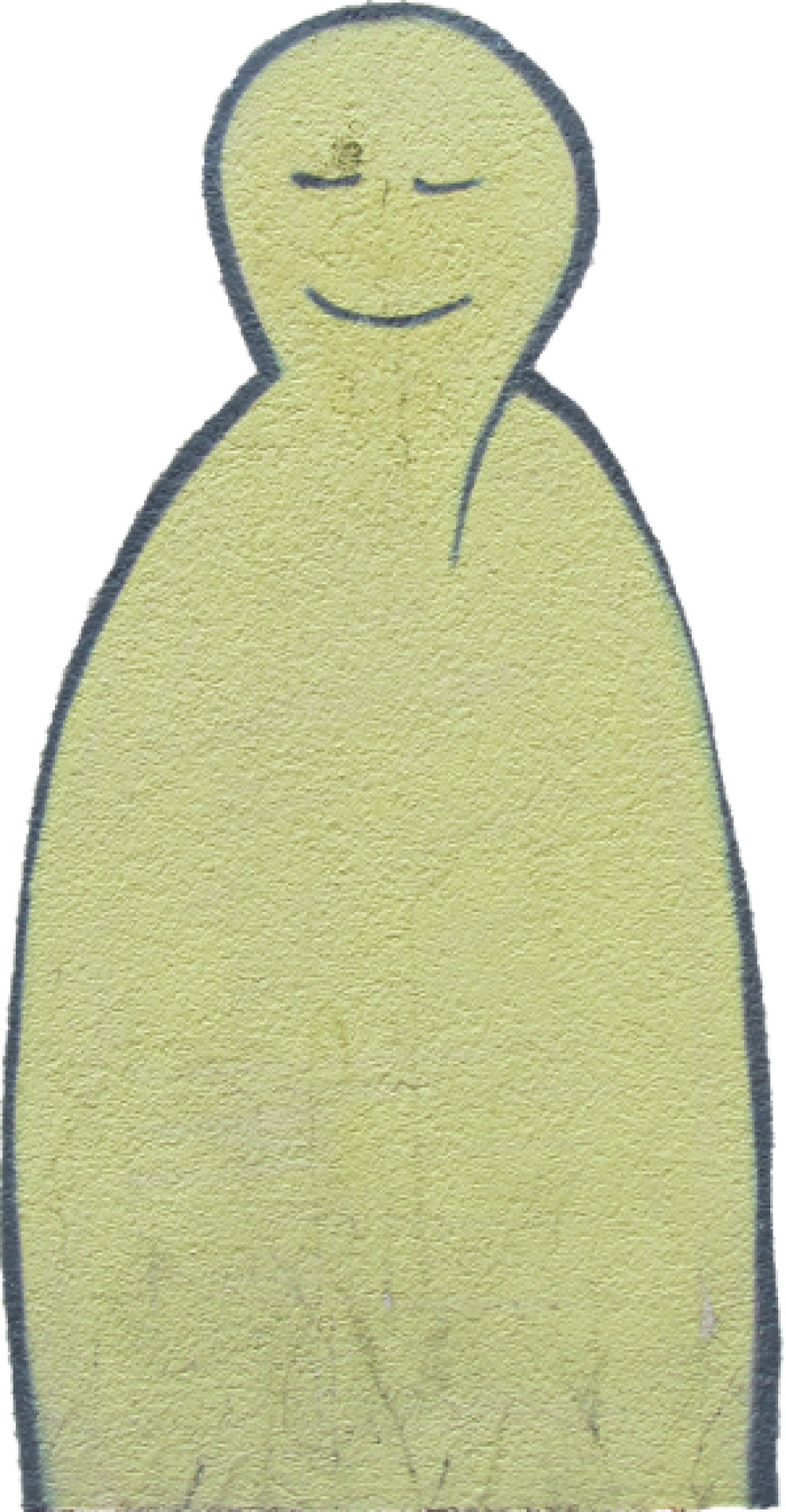

A few main components of the implementation come together to form the profiler.

The Java front-end sets the sampling period via the JfrCPUTimeThreadSampling class, the entry point for all functionality. This class instantiates the JfrCPUSamplerThread, which implements the out-of-thread sampling as a thread. It also deals with registering, unregistering, and updating the requested signals and the signal handler. The signal handler creates new sampling requests and pushes them into the thread-local JfrCPUTimeTraceQueue. This queue is a property of JfrThreadLocal and guarded by a tri-state lock to prevent concurrent access. We implemented the actual draining of the queue via the pre-existing code (see Taming the Bias: Unbiased* Safepoint-Based Stack Walking in JFR) in JfrThreadSampling. The draining is triggered either by a safepoint handler or by the out-of-thread walking whenever a thread is in native state.

It doesn’t look that complicated at first glance, but reaching this point took quite a while. Please don’t ask me how much fun debugging all this and the ensuing segmentation faults have been.

Now I’ll be covering all components in more detail.

Java Front-End

The Java front-end allows the user to specify the throttle and enable the events, especially the CPUTimeSample event. It parses a throttle period value like “1ms” into nanoseconds and a throttle rate like “1/ms” into events per second. When the event is enabled, the front-end only passes these values to the sampler implementation. It uses the two methods exposed via JNI in jdk.jfr.internal.JVM to pass the value:

```java
public final class JVM {
    // ...

    /**
     * Set the maximum event emission rate for the CPU time sampler
     *
     * Use {@link #setCPUPeriod(long)} if you want a fixed sampling period instead.
     *
     * Setting rate to 0 turns off the CPU time sampler.
     *
     * @param rate the new rate in events per second
     */
    public static native void setCPURate(double rate);

    /**
     * Set the fixed CPU time sampler period.
     *
     * Use {@link #setCPURate(double)} if you want a fixed rate with an auto-adjusted period instead.
     *
     * Setting period to 0 turns off the CPU time sampler.
     *
     * @param periodNanos the new fixed period in nanoseconds
     */
    public static native void setCPUPeriod(long periodNanos);

    // ...
}
```

The front-end also deals with the case when multiple simultaneous recordings have different throttle values (the smallest computed period wins, source). It then passes the setting values into the main entry point of the sampler implementation:

JfrCPUTimeThreadSampling

This class handles the sampler’s lifecycle: It instantiates, enrolls, and disenrolls the actual JfrCPUSamplerThread and forwards most calls to it. For example, setting the sampling period to 0 automatically disenrolls the sampler. The class also has methods to create the actual event objects JfrThreadSampling uses to hide the implementation-specific parts.

```cpp
// Stores the sampling period or rate
// as both can be passed in by the user
class JfrCPUSamplerThrottle {
  union {
    double _rate;
    u8 _period_nanos;
  };
  bool _is_rate;

 public:
  // ...

  bool enabled() const { return _is_rate ? _rate > 0 : _period_nanos > 0; }

  // computes the sampling period by either returning the period
  // or using the number of active processors to compute the smallest
  // period that can at most produce the stated number of events
  // per second
  int64_t compute_sampling_period() const { ... }
};

class JfrCPUTimeThreadSampling : public JfrCHeapObj {
  friend class JfrRecorder;

 private:
  JfrCPUSamplerThread* _sampler;

  // start the sampler thread and enroll
  void create_sampler(JfrCPUSamplerThrottle& throttle);

  // set the throttle in the sampler instance if present
  void set_throttle_value(JfrCPUSamplerThrottle& throttle);

  // disenrolls the sampler, when the JVM exits or the
  // recording stops
  ~JfrCPUTimeThreadSampling();

  // ...

 public:
  // use set_throttle_value to set the rate,
  // so we don't expose JfrCPUSamplerThrottle
  static void set_rate(double rate);
  static void set_period(u8 nanos);

  // triggered whenever a thread is created
  // and forwarded to the sampler instance
  static void on_javathread_create(JavaThread* thread);
  static void on_javathread_terminate(JavaThread* thread);

  // ...
};
```

JfrCPUSamplerThread

This NonJavaThread implements out-of-thread sampling as a thread and deals with signals and the signal handler. It also ensures that there are no active signal handlers during the sampler’s disenrollment.

Upon enrollment, the sampler initializes the SIGPROF signal handler (source) to point to the CPU-time sampler’s handler.

When the sampler is enrolled, it sets up the signals and the size of the thread-local request queue for every existing Java thread, and it does the same for every Java thread created afterwards. The creation of the per-thread CPU-timer (source) is really important, as otherwise we won’t be getting any profiling signals. This is also the only reason the new CPU-time sampler only supports Linux; there is simply no (easy-to-use) equivalent of the per-thread CPU-timers on other platforms like Windows or macOS.
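To make the per-thread CPU-timer idea concrete, here is a minimal Linux-only sketch, not the HotSpot code. It arms a timer on the calling thread's CPU-time clock and asks the kernel to deliver SIGPROF to exactly that thread. `timer_create`, `SIGEV_THREAD_ID`, and `CLOCK_THREAD_CPUTIME_ID` are real Linux APIs; the function names and the tick counter are made up for illustration. On older glibc versions, linking may additionally need `-lrt`.

```cpp
#include <atomic>
#include <signal.h>
#include <sys/syscall.h>
#include <time.h>
#include <unistd.h>

#ifndef sigev_notify_thread_id
#define sigev_notify_thread_id _sigev_un._tid  // fallback for older glibc headers
#endif

// Counts delivered signals; a lock-free atomic is safe to touch in a handler.
static std::atomic<long> g_cpu_ticks{0};

static void count_tick(int, siginfo_t*, void*) {
  g_cpu_ticks.fetch_add(1, std::memory_order_relaxed);
}

// Install the SIGPROF handler before arming any timer: the default action
// for SIGPROF terminates the process.
bool install_tick_handler() {
  struct sigaction sa{};
  sa.sa_sigaction = count_tick;
  sa.sa_flags = SA_SIGINFO | SA_RESTART;
  sigemptyset(&sa.sa_mask);
  return sigaction(SIGPROF, &sa, nullptr) == 0;
}

// Arm a timer that fires after every `period_nanos` of CPU time consumed by
// the calling thread and delivers SIGPROF to exactly that thread.
// For another thread, pthread_getcpuclockid() would provide the clock id.
bool start_cpu_timer_for_current_thread(long period_nanos, timer_t* timer) {
  struct sigevent sev{};
  sev.sigev_notify = SIGEV_THREAD_ID;
  sev.sigev_signo = SIGPROF;
  sev.sigev_notify_thread_id = static_cast<pid_t>(syscall(SYS_gettid));
  if (timer_create(CLOCK_THREAD_CPUTIME_ID, &sev, timer) != 0) {
    return false;
  }
  struct itimerspec spec{};
  spec.it_interval.tv_sec = period_nanos / 1000000000L;
  spec.it_interval.tv_nsec = period_nanos % 1000000000L;
  spec.it_value = spec.it_interval;  // first expiration after one period
  if (timer_settime(*timer, 0, &spec, nullptr) != 0) {
    timer_delete(*timer);
    return false;
  }
  return true;
}
```

Because the clock only advances while the thread is on the CPU, a sleeping or blocked thread receives no signals. That is the property that makes this a CPU-time profiler rather than a wall-clock one.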

Whenever the effective sampling period changes, the sampler modifies the timers of all Java threads. It uses its main thread loop to check the current throttle every 100ms.

But there is another function of this loop: The signal handler might find a Java thread that runs in native, which triggers the out-of-thread stack walking. When at least one thread has triggered it, the sampler thread walks over the thread list to initiate the queue processing of every Java thread that wants to be sampled and is currently in native state. For every such Java thread, the sampler thread tries to acquire the dequeue lock (but waits if the queue is currently locked for enqueuing, as enqueuing is usually fast). Upon success, it uses the JfrThreadSampling methods to drain the queue and create events.

Without this asynchronous draining, the request queue might overflow when the time between two safepoints is too long (usually when the thread is in native). The major downside is that the stack is then walked out-of-thread instead of by the thread itself at a safepoint (see Taming the Bias: Unbiased* Safepoint-Based Stack Walking in JFR). But it doesn’t happen that often in reality (see follow-up blog post).

The main loop of this sampler thread uses a semaphore to synchronize enrollment and disenrollment. Concurrency is one of the major hurdles in this sampler, so locking is essential to prevent race conditions.

Now, before I explain what the signal handler does, I want to focus briefly on the tri-state locking of the request queue:

Tri-State Locking

A tri-state lock guards the queue: it is either unlocked, locked for enqueuing, or locked for dequeuing.

This locking essentially prevents the two different drainage operations from happening simultaneously. It solves a major problem: We only want to walk a Java thread asynchronously out-of-thread if the Java thread is in native because then the Java stack doesn’t change. We get a safepoint on exit from the native state, which then tries to acquire the lock. But when the out-of-thread sampler is already draining the queue, the safepoint handler waits, and the Java thread can’t proceed and change the Java stack. It might not be the best solution, because it essentially creates a synchronization point after every native state exit, but it’s the only solution I came up with. As I stated before, this rarely happens, so it is not a problem in practice.
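A minimal sketch of such a lock, assuming the three states are unlocked, locked for enqueuing, and locked for dequeuing. The names (`TriStateLock`, `try_lock_enqueue`, and so on) are hypothetical and not the identifiers used in HotSpot; the acquire/release ordering simply mirrors the cautious approach described in this post.

```cpp
#include <atomic>
#include <thread>

// Hypothetical tri-state lock; names are illustrative, not HotSpot's.
enum class QueueLock : int { Unlocked, Enqueue, Dequeue };

class TriStateLock {
  std::atomic<QueueLock> _state{QueueLock::Unlocked};

 public:
  // Signal handler: a single attempt that never blocks. On failure the
  // caller records the sample as lost.
  bool try_lock_enqueue() {
    QueueLock expected = QueueLock::Unlocked;
    return _state.compare_exchange_strong(expected, QueueLock::Enqueue,
                                          std::memory_order_acquire,
                                          std::memory_order_relaxed);
  }

  // Sampler thread: waits out a (short) enqueue, but gives up if another
  // drain is already in progress.
  bool try_lock_dequeue() {
    for (;;) {
      QueueLock expected = QueueLock::Unlocked;
      if (_state.compare_exchange_weak(expected, QueueLock::Dequeue,
                                       std::memory_order_acquire,
                                       std::memory_order_relaxed)) {
        return true;
      }
      if (expected == QueueLock::Dequeue) {
        return false;
      }
      std::this_thread::yield();  // enqueue in progress or spurious failure
    }
  }

  // Safepoint handler: blocks until it owns the queue, which keeps the
  // Java thread from continuing while an out-of-thread drain is running.
  void lock_dequeue() {
    while (!try_lock_dequeue()) {
      std::this_thread::yield();
    }
  }

  void unlock() { _state.store(QueueLock::Unlocked, std::memory_order_release); }

  QueueLock state() const { return _state.load(std::memory_order_acquire); }
};
```

The asymmetry is the point of the design: the enqueuing side runs in a signal handler and must never wait, while the draining sides are allowed to.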

Now on to the signal handler:

Signal Handler

The signal handler is the cornerstone of the CPU-time profiler: It is triggered every n milliseconds of CPU-time for every Java thread.

It first checks that the thread is a proper Java thread, not shutting down, and in either native or Java state (so no transitioning states and not in safepoint handlers).

Afterwards, it tries to acquire the queue lock for enqueuing, but records a lost event if the lock is already acquired by another component or the queue is full. The former makes the inner part of the signal handler non-reentrant, reducing the queue access concurrency.

The number of lost samples is emitted per-thread every time the queue is drained via the CPUTimeSampleLost event. When the thread is neither in native nor in Java state, then this also increments the lost counter.

Now that the sampler has acquired the lock, it creates a sample request via the JfrSampleRequestBuilder, which is also used by the default JFR sampler. This request is then enqueued into the thread-local request queue, and upon success, the safepoint is triggered (see The Inner Workings of Safepoints for more information on this topic).

As explained before, if the sampled thread is in its native state, the asynchronous out-of-thread stack walking/queue draining by the JfrCPUSamplerThread is triggered by setting the thread-local and global flags.
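The handler's decision flow can be condensed into a single-threaded sketch. All types and names here (`FakeThread`, `ThreadState`, `on_cpu_time_signal`) are hypothetical stand-ins: the real handler works on JavaThread and JfrThreadLocal, uses the atomic queue lock instead of a plain flag, and builds a real sample request. Treating non-Java or exiting threads as "ignored" is an assumption of this sketch.

```cpp
#include <cstddef>
#include <cstdint>

enum class ThreadState { Java, Native, Transitioning };
enum class Outcome { Ignored, Lost, Enqueued };

// Hypothetical stand-in for the per-thread data the handler touches.
struct FakeThread {
  bool is_java_thread = true;
  bool shutting_down = false;
  ThreadState state = ThreadState::Java;
  bool queue_locked = false;  // stand-in for the tri-state lock
  std::size_t queued = 0;
  std::size_t capacity = 2;
  uint32_t lost = 0;
  bool safepoint_requested = false;
  bool wants_out_of_thread_walk = false;
};

Outcome on_cpu_time_signal(FakeThread& t) {
  // 1. Only proper, live Java threads are sampled.
  if (!t.is_java_thread || t.shutting_down) {
    return Outcome::Ignored;
  }
  // 2. Neither in Java nor in native state: count the sample as lost.
  if (t.state != ThreadState::Java && t.state != ThreadState::Native) {
    ++t.lost;
    return Outcome::Lost;
  }
  // 3. Lock held elsewhere or queue full: count the sample as lost.
  if (t.queue_locked || t.queued == t.capacity) {
    ++t.lost;
    return Outcome::Lost;
  }
  // 4. Enqueue the request while holding the lock.
  t.queue_locked = true;
  ++t.queued;
  t.queue_locked = false;
  // 5. Ask for the queue to be drained.
  t.safepoint_requested = true;
  if (t.state == ThreadState::Native) {
    t.wants_out_of_thread_walk = true;  // picked up by the sampler thread
  }
  return Outcome::Enqueued;
}
```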

You might have wondered why the CPUTimeSample event includes the current sampling period. One reason is that the sampling period can change between two obtained samples even without the user explicitly changing the throttle setting. This is because with a rate setting, the effective period changes whenever the number of CPUs changes. This might not happen that often in practice, but it can, and messes up the semantics of the individual samples.
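To see why the CPU count matters, consider a rough sketch of how a rate could be turned into a period. This is an assumption based on the description of `compute_sampling_period` earlier in this post, not a copy of the actual implementation: with N active processors, one wall-clock second contains at most N seconds of CPU time, so the period has to grow with N to stay below the requested rate. The helper name is made up for illustration.

```cpp
#include <cstdint>

// Hypothetical helper: derive a per-thread CPU-time period from a global
// rate, assuming at most `active_processors` threads are on CPU at once.
int64_t period_nanos_for_rate(double events_per_second, int active_processors) {
  if (events_per_second <= 0.0 || active_processors <= 0) {
    return 0;  // 0 means "sampler off" in this sketch
  }
  return static_cast<int64_t>(active_processors * 1e9 / events_per_second);
}
```

With a rate of 500 events per second, this yields a period of 16ms on 8 processors but only 8ms on 4 processors, so a change in the number of available CPUs silently changes what a single sample stands for.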

There is another reason, which is explained in the code that computes the current sampling period in the signal handler:

```cpp
// the sampling period might be too low for the current Linux configuration
// so samples might be skipped and we have to compute the actual period
int64_t period = get_sampling_period() * (info->si_overrun + 1);
request._cpu_time_period = Ticks(period / 1000000000.0 * JfrTime::frequency()) - Ticks(0);
```

More information on timer overruns at timer_getoverrun(2).
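As a small worked example of this arithmetic (the helper names are hypothetical): every overrun is a timer expiration that did not produce its own signal, so a signal with an overrun count of 2 stands for three periods of CPU time.

```cpp
#include <cstdint>
#include <vector>

// Period that a single delivered signal actually represents.
int64_t effective_period_nanos(int64_t configured_period_nanos, int overrun_count) {
  return configured_period_nanos * (static_cast<int64_t>(overrun_count) + 1);
}

// CPU time covered by a series of signals, given each signal's overrun count.
int64_t covered_cpu_nanos(int64_t configured_period_nanos,
                          const std::vector<int>& overrun_counts) {
  int64_t total = 0;
  for (int overruns : overrun_counts) {
    total += effective_period_nanos(configured_period_nanos, overruns);
  }
  return total;
}
```

For a configured period of 10ms and three signals with overrun counts 0, 2, and 0, the signals cover 10ms + 30ms + 10ms = 50ms of CPU time, not the 30ms that the configured period alone would suggest.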

Overall, the new CPU-time sampler gives you much more information than the default sampler, which doesn’t record failed or lost samples. With the new CPU-time sampler events, you can reconstruct every Java thread’s CPU usage.

Now on to the JfrThreadSampling class.

JfrThreadSampling

This class essentially implements the stack walking (see blog post) for the default JFR sampler. We reuse most of the code, create new methods that drain the CPU-time queue, and create the appropriate CPUTimeSample and CPUTimeSampleLost events using the JfrCPUTimeThreadSampling class. There are two differences from the default sampler:

- We also create a CPUTimeSample event when the stack walking fails; the created event has the failed property set to true and a null stack trace. The default JFR sampler ignores such cases and doesn’t emit anything.

- We also record whether or not the sample is probably affected by safepoint bias. We track whenever the JfrThreadSampling fails to obtain the top Java frame for a given sample request, using the top Java frame at safepoint as a fallback. This allows users to decide for themselves how to deal with such stack traces.

The final component is the CPU-time queue:

JfrCPUTimeTraceQueue

Please don’t ask me why it is named this way. I don’t know, probably “historical” reasons.

This queue stores the sample requests per Java thread. Its instances are located in the JfrThreadLocal objects of every Java thread.

The JfrCPUTimeSampleRequest combines both the JfrSampleRequest (used by JfrThreadSampling) and the sampling period, as explained before.

This queue is used, as also explained before, in three different places and guarded by a tri-state lock. Its implementation is the least finalized part of the whole CPU-time sampler implementation, as it was first developed as a

// Fixed size async-signal-safe SPSC linear queue backed by an array.

Now, with all the locking, it probably doesn’t really need to be reentrant or safe on its own anymore. Still, the memory ordering and the proper usage of atomics are areas where we could optimize the implementation to gain performance, as I’ve been cautious in the implementation. This is also the focus of the only currently open issue for the CPU-time profiler:

Clarify the requirements and exact the memory ordering in CPU Time Profiler: I used acquire-release semantics for most atomic variables, which is not wrong, just not necessarily optimal from a performance perspective.


It is important to note that this queue still needs to be bounded and can’t allocate memory on demand, because the enqueuing happens in a signal handler.
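A bounded queue of this kind can be sketched as follows. This is an illustration under simplifying assumptions, not the JfrCPUTimeTraceQueue: the capacity is a compile-time constant here, whereas the real queue is sized at initialization, and `SampleRequest` is a made-up placeholder. The storage exists before the first signal arrives, so enqueuing never allocates; callers are expected to hold the queue lock.

```cpp
#include <array>
#include <cstddef>
#include <cstdint>

// Hypothetical placeholder for a sample request.
struct SampleRequest {
  int64_t period_nanos;
};

template <std::size_t Capacity>
class BoundedRequestQueue {
  std::array<SampleRequest, Capacity> _slots{};
  std::size_t _size = 0;
  uint32_t _lost = 0;

 public:
  // Never allocates: a full queue drops the request and counts it as lost.
  bool enqueue(const SampleRequest& request) {
    if (_size == Capacity) {
      ++_lost;
      return false;
    }
    _slots[_size++] = request;
    return true;
  }

  // Hands every queued request to `consume`, empties the queue, and
  // returns (and resets) the number of requests lost since the last drain.
  template <typename Consumer>
  uint32_t drain(Consumer consume) {
    for (std::size_t i = 0; i < _size; ++i) {
      consume(_slots[i]);
    }
    _size = 0;
    uint32_t lost = _lost;
    _lost = 0;
    return lost;
  }

  std::size_t size() const { return _size; }
};
```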

Another point of debate, and likely focus of a follow-up blog post, is the sizing of the queue: The queue size is computed upon queue initialization before the timer for a thread is set (source):

```cpp
#define CPU_TIME_QUEUE_CAPACITY 500

void JfrCPUTimeTraceQueue::resize_for_period(u4 period_millis) {
  u4 capacity = CPU_TIME_QUEUE_CAPACITY;
  if (period_millis > 0 && period_millis < 10) {
    capacity = (u4) ((double) capacity * 10 / period_millis);
  }
  resize(capacity);
}
```

This results in a queue size of 500 for the default profiling throttle setting and 5000 when setting the throttle to 1ms. But:

- It doesn’t change when the throttle changes, so maybe we should make the queue size period independent anyway? Or size it dynamically, which would necessitate rather complex synchronisation.

- Where does the number 500 come from? Intuition and some rudimentary benchmarking. It allows every thread to run for at least 5 seconds on the CPU in Java without a safepoint, without losing any requests, or for 5 seconds between two asynchronous out-of-thread stack walks when the thread is native. But in practice, this number might be too high.
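The sizing arithmetic can be checked with a small sketch that repeats the capacity computation from `resize_for_period` without the actual resize. The helper names are hypothetical, and `covered_millis` is only the simple product of capacity and period.

```cpp
#include <cstdint>

constexpr uint32_t kBaseCapacity = 500;  // CPU_TIME_QUEUE_CAPACITY in the post

// Same arithmetic as resize_for_period, returning the capacity.
uint32_t capacity_for_period(uint32_t period_millis) {
  uint32_t capacity = kBaseCapacity;
  if (period_millis > 0 && period_millis < 10) {
    capacity = static_cast<uint32_t>(static_cast<double>(capacity) * 10 / period_millis);
  }
  return capacity;
}

// CPU time in milliseconds a thread can accumulate between two drains
// before a full queue starts dropping requests.
uint64_t covered_millis(uint32_t period_millis) {
  return static_cast<uint64_t>(capacity_for_period(period_millis)) * period_millis;
}
```

A 10ms period gives 500 slots and a 1ms period gives 5000 slots, both covering 5 seconds of CPU time. A 20ms period keeps the 500 slots and therefore covers 10 seconds.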

Conclusion

The CPU-time sampler is composed of several tightly integrated components, carefully synchronized and refined through many iterations before becoming part of JDK 25. It’s by far the most significant change I’ve contributed to OpenJDK in my three and a half years as a contributor. But I couldn’t have done it alone—special thanks to Andrei Pangin, Jaroslav Bachorik, Markus Grönlund, Erik Österlund, and everyone else who supported and contributed along the way.

This post took far longer to finish than I originally planned—partly because other responsibilities took priority, and partly because writing it felt daunting. I procrastinated more than I’d like to admit; the scope just seemed overwhelming at times.

So, thank you for your patience. I hope it gave you a deeper understanding of how the new profiler works under the hood. I’m already preparing the next one, so stay tuned.

This blog post is part of my work in the SapMachine team at SAP, making profiling easier for everyone.