There are three important areas of Artificial Intelligence:

- Natural Language Processing

- Speech

- Computer Vision

This post falls in the first category.

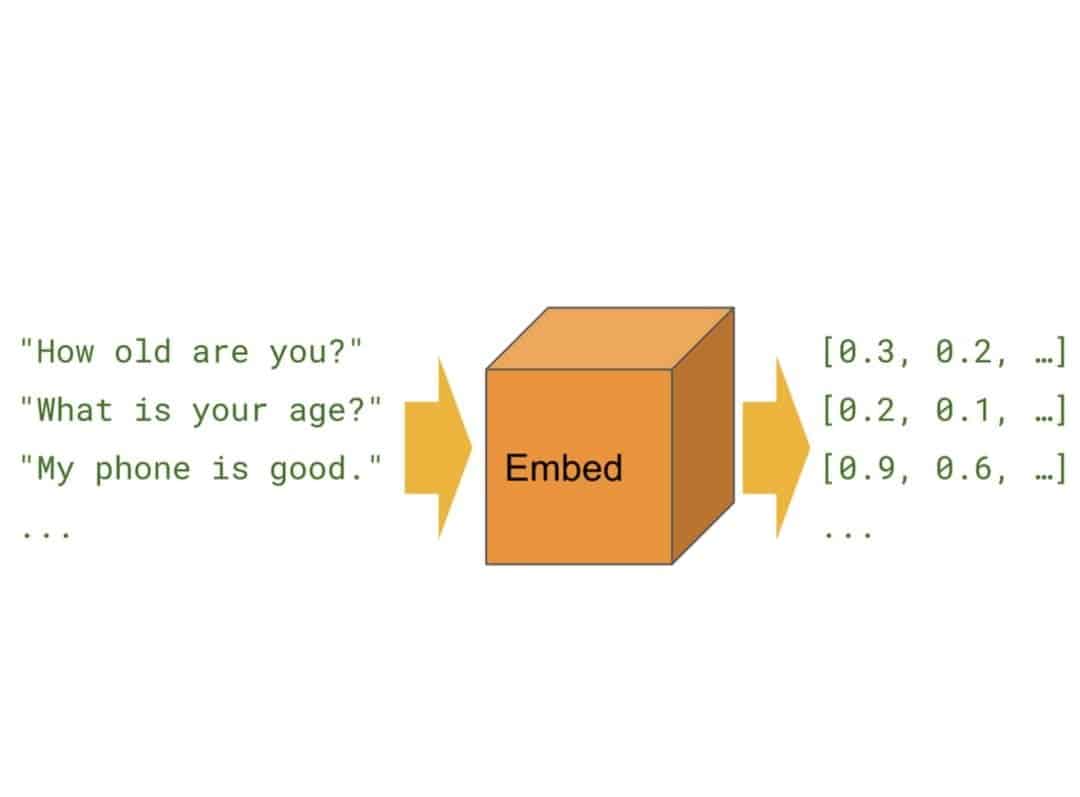

In this post, we will learn a tool called Universal Sentence Encoder by Google that allows you to convert any sentence into a vector.

Why convert words or sentences into a vector?

First, let us examine what a vector is. A vector is simply an array of numbers of a particular dimension. A vector of size 3×1 contains 3 numbers, and we can think of it as a point in 3D space. If you have two vectors in this 3D space, you can calculate the distance between the points. In other words, you can say which vectors are close together and which are not.
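
As a minimal sketch of this idea, the snippet below measures the straight-line (Euclidean) distance between a few 3D points. The point values are made up for illustration, and Euclidean distance is only one possible choice of distance.

```python
import math

# Three made-up points in 3D space (illustrative values only).
a = [1.0, 2.0, 3.0]
b = [1.0, 2.0, 4.0]
c = [7.0, 8.0, 9.0]

# math.dist returns the Euclidean distance between two points (Python 3.8+).
dist_ab = math.dist(a, b)
dist_ac = math.dist(a, c)

print("distance a-b:", dist_ab)
print("distance a-c:", dist_ac)
print("a is closer to b than to c:", dist_ab < dist_ac)
```

Here a and b differ in a single coordinate, so they end up much closer to each other than a and c.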

A lot of effort in machine learning research is devoted to converting data into a vector because once you have converted data into a vector, you can use the notion of distances to figure out if two data points are similar to each other or not.

For example, if you can build a system which takes as input an image of a person’s face and returns a high dimensional vector such that all the images of the same person’s face cluster together in this high dimensional space, you can easily build a Face Recognition engine.

Similarly, if you build a system for converting an image into a vector where images of pedestrians cluster together and anything that is not a pedestrian is far away from this cluster, you can build a pedestrian detector. Histogram of Oriented Gradients (HOG) is used in this way: it converts an image patch to a vector.

Now you can probably see how it would be really cool to represent words and sentences using a vector. You can use a tool like word2vec or GloVe to convert a word into a vector. The amazing thing about it is that if you convert the words “cat”, “animal”, “table” to their corresponding vectors, the words “cat” and “animal” will be located closer than the words “cat” and “table”.

Amazing, isn’t it?

Now, going from words to sentences is not immediately obvious. You cannot simply use word2vec naively on each word of the sentence and expect to get meaningful results.
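
One way to see the problem is to try a common naive approach: averaging the word vectors of a sentence. The sketch below assumes that this is the kind of naive use meant here, and it uses tiny invented 3-dimensional vectors rather than real word2vec output. Averaging ignores word order, so two sentences with opposite meanings get the same vector.

```python
# Hypothetical 3-dimensional word vectors, invented for illustration;
# real word2vec or GloVe vectors have hundreds of dimensions.
toy_vectors = {
    "dog": [0.9, 0.1, 0.0],
    "bites": [0.1, 0.8, 0.3],
    "man": [0.2, 0.1, 0.9],
}

def average_word_vectors(sentence, vectors):
    """Naive sentence vector: the element-wise mean of its word vectors."""
    words = sentence.lower().split()
    dims = len(next(iter(vectors.values())))
    totals = [0.0] * dims
    for word in words:
        for i, value in enumerate(vectors[word]):
            totals[i] += value
    return [total / len(words) for total in totals]

v1 = average_word_vectors("dog bites man", toy_vectors)
v2 = average_word_vectors("man bites dog", toy_vectors)

# Compare with a small tolerance because floating-point sums depend on order.
same = all(abs(x - y) < 1e-9 for x, y in zip(v1, v2))
print(v1)
print(v2)
print("Same vector for both sentences:", same)
```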

So, we need a tool that can convert an entire sentence into a vector. What can we expect from a good sentence encoder? Let’s see by way of an example. Consider the three sentences below.

1. How old are you?

2. What is your age?

3. How are you?

Sentences 1 and 2 mean the same thing, even though sentences 1 and 3 have more words in common. A good sentence encoder will encode the three sentences in such a way that the vectors for 1 and 2 are closer to each other than the vectors for 1 and 3.
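
To check the claim about shared words, here is a small sketch that measures word overlap between the three sentences with the Jaccard index (shared words divided by all distinct words). The tokenizer is a simplifying assumption: it lowercases the text and keeps only alphabetic words.

```python
import re

sentences = ["How old are you?", "What is your age?", "How are you?"]

def tokenize(sentence):
    """Lowercase the sentence and return its set of alphabetic words."""
    return set(re.findall(r"[a-z]+", sentence.lower()))

def jaccard(a, b):
    """Shared words divided by total distinct words across both sets."""
    return len(a & b) / len(a | b)

tokens = [tokenize(s) for s in sentences]
overlap_1_2 = jaccard(tokens[0], tokens[1])
overlap_1_3 = jaccard(tokens[0], tokens[2])

print("Overlap between sentences 1 and 2:", overlap_1_2)
print("Overlap between sentences 1 and 3:", overlap_1_3)
```

Sentences 1 and 2 share no words at all, while sentences 1 and 3 share three of four distinct words, so a method based purely on word overlap would get this example backwards.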

Google’s Universal Sentence Encoders

Among the best sentence encoders available at the time of writing are the two Universal Sentence Encoder models by Google. One is based on the Transformer architecture and the other on a Deep Averaging Network (DAN). They are pre-trained on a large corpus and can be used in a variety of tasks (sentiment analysis, classification and so on). Both models take a word, sentence or paragraph as input and output a 512-dimensional vector.

The transformer model is designed for higher accuracy, but the encoding requires more memory and computational time. The DAN model on the other hand is designed for speed and efficiency, and some accuracy is sacrificed. The table below summarizes the two models.

| | Transformer model | Deep Averaging Network (DAN) model |
|---|---|---|
| Vector Length | 512 | 512 |
| Encoding time with sentence length | Non-Linear | Linear |
| Memory usage | High | Medium |
| Accuracy | Very High | High |
| Download Link | Transformer Model Link | DAN Model Link |
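
To make the "Linear" entry for the DAN model more concrete, here is a rough sketch of the averaging idea: the word embeddings of a sentence are averaged in a single pass and the result is fed through a small feedforward network. The vocabulary, layer sizes and random weights are all hypothetical, and the real model is trained and more elaborate, so treat this as an illustration of the idea and not as Google's implementation.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical vocabulary, sizes and random weights, purely for illustration.
vocab = {"how": 0, "old": 1, "are": 2, "you": 3}
embedding_dim, hidden_dim, output_dim = 8, 16, 512
word_embeddings = rng.normal(size=(len(vocab), embedding_dim))
w1 = rng.normal(size=(embedding_dim, hidden_dim))
w2 = rng.normal(size=(hidden_dim, output_dim))

def dan_encode(sentence):
    """Average the word embeddings, then apply two feedforward layers."""
    ids = [vocab[word] for word in sentence.lower().split()]
    # One pass over the words: the cost grows linearly with sentence length.
    averaged = word_embeddings[ids].mean(axis=0)
    hidden = np.tanh(averaged @ w1)
    output = np.tanh(hidden @ w2)
    # Scale to unit length so dot products behave like cosine similarities.
    return output / np.linalg.norm(output)

vector = dan_encode("how old are you")
print(vector.shape)
```

Whatever the sentence length, the output has the same fixed size, which is what allows sentences of different lengths to be compared directly.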

Embedding Text using Universal Sentence Encoder

Now, let’s run quickly through the cells and see what’s going on.

Install Dependencies

First, we have to make sure that all dependencies are met. Note that the code in this post uses the TensorFlow 1.x API (hub.Module and tf.Session).

```
# Install the latest Tensorflow version.
!pip3 install --quiet "tensorflow>=1.7"
# Install TF-Hub.
!pip3 install --quiet tensorflow-hub
!pip3 install --quiet seaborn
```

Import Modules

Next, we import the required modules. If you just want to perform the encoding, you only need tensorflow and tensorflow_hub.

```python
import tensorflow as tf
import tensorflow_hub as hub
import matplotlib.pyplot as plt
import numpy as np
import os
import pandas as pd
import re
import seaborn as sns
```

Load Universal Sentence Encoder

In the next cell, we specify the URL to the model. Here, we have two choices. We can either go for

- Transformer Model : https://tfhub.dev/google/universal-sentence-encoder-large/3

- DAN Model : https://tfhub.dev/google/universal-sentence-encoder/2

We will proceed with the DAN model here, but I would suggest you try out the Transformer model as well.

```python
module_url = "https://tfhub.dev/google/universal-sentence-encoder/2"
```

Now that we have the URL, we first have to load the model.

```python
embed = hub.Module(module_url)
```

Embed Messages using Encoder

Next let’s try to embed some words, sentences, and paragraphs using the Encoder. We will take the examples directly from Google’s Colab Notebook.

```python
word = "Elephant"
sentence = "I am a sentence for which I would like to get its embedding."
paragraph = (
    "Universal Sentence Encoder embeddings also support short paragraphs. "
    "There is no hard limit on how long the paragraph is. Roughly, the longer "
    "the more 'diluted' the embedding will be.")
messages = [word, sentence, paragraph]
```

Now comes the Tensorflow part. We will create a new session and embed the messages using the encoder. As we discussed earlier, the output for each message will be a 512-dimensional vector, but to keep the output readable we are going to print only the first 3 elements of each vector.

```python
with tf.Session() as session:
    session.run([tf.global_variables_initializer(), tf.tables_initializer()])
    message_embeddings = session.run(embed(messages))

    for i, message_embedding in enumerate(np.array(message_embeddings).tolist()):
        print("Message: {}".format(messages[i]))
        print("Embedding size: {}".format(len(message_embedding)))
        message_embedding_snippet = ", ".join(
            (str(x) for x in message_embedding[:3]))
        print("Embedding: [{}, ...]\n".format(message_embedding_snippet))
```

The result will look something like this.

```
INFO:tensorflow:Using /tmp/tfhub_modules to cache modules.
INFO:tensorflow:Downloading TF-Hub Module 'https://tfhub.dev/google/universal-sentence-encoder/2'.
INFO:tensorflow:Downloaded TF-Hub Module 'https://tfhub.dev/google/universal-sentence-encoder/2'.
Message: Elephant
Embedding size: 512
Embedding: [-0.016987258568406105, -0.00894984696060419, -0.007062744814902544, ...]

Message: I am a sentence for which I would like to get its embedding.
Embedding size: 512
Embedding: [0.035313334316015244, -0.025384260341525078, -0.007880013436079025, ...]

Message: Universal Sentence Encoder embeddings also support short paragraphs. There is no hard limit on how long the paragraph is. Roughly, the longer the more 'diluted' the embedding will be.
Embedding size: 512
Embedding: [0.01879097707569599, 0.045365117490291595, -0.020010903477668762, ...]
```

Semantic Textual Similarity using Universal Sentence Encoder

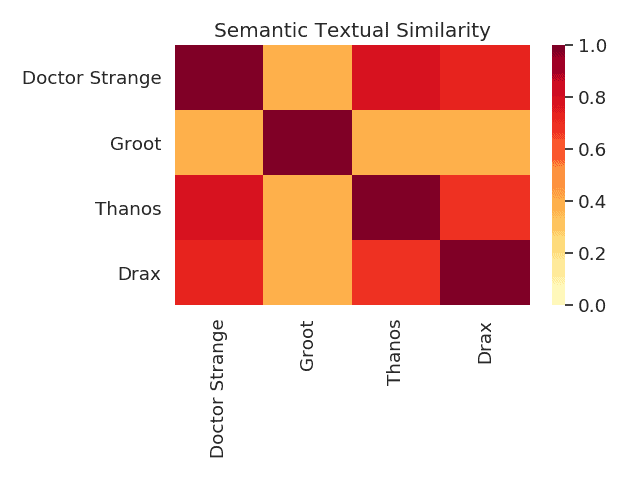

This is all well and good, but what can we do with these numbers? As an example, let’s carry out a Semantic Textual Similarity task. Since the embeddings produced by the models are approximately normalized to unit length, the dot product of two embeddings is approximately their cosine similarity, so we can use it to measure the semantic similarity of two sentences.
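
The relationship between the dot product and cosine similarity can be checked with a short sketch. The two vectors below are made-up 4-dimensional stand-ins for real 512-dimensional embeddings.

```python
import numpy as np

# Made-up 4-dimensional vectors standing in for 512-dimensional embeddings.
u = np.array([1.0, 2.0, 2.0, 0.0])
v = np.array([2.0, 1.0, 2.0, 1.0])

# Cosine similarity of the raw vectors.
cosine = np.dot(u, v) / (np.linalg.norm(u) * np.linalg.norm(v))

# Scale each vector to unit length, which is what "normalized" means here.
u_unit = u / np.linalg.norm(u)
v_unit = v / np.linalg.norm(v)

# For unit-length vectors, the plain dot product gives the same number.
dot_of_units = np.dot(u_unit, v_unit)

print("cosine similarity:", cosine)
print("dot product of unit vectors:", dot_of_units)
```

This is why a single dot product is enough once the vectors are normalized: there is no need to divide by the vector lengths.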

So here’s what we’re going to do. We are going to write the complete code to process the script of Avengers: Infinity War in memory of Stan Lee (RIP 🙁 ). We will extract all the dialogues of the characters in the movie and then carry out a semantic similarity analysis on the dialogues to get a similarity matrix.

Process the script

First, we will copy the raw script from Transcripts Wiki and put it in a file – script-raw. Next, we need to remove the content enclosed in brackets as that’s not the dialogue. We will write the rest of the script to a file – script-processed.
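
To get a feel for the bracket-stripping step before applying it to the whole script, here is a small sketch that runs on a few invented lines (they are not taken from the real transcript). It assumes that stage directions appear inside round or square brackets.

```python
import re

# Invented lines in the style of a transcript; not from the real script.
sample_lines = [
    "[A door creaks open]",
    "Alice: (whispering) Is anyone there?",
    "Bob: No one [looks around] at all.",
]

# Non-greedy match from an opening ( or [ to the nearest closing ) or ].
bracketed = re.compile(r"[\(\[].*?[\)\]]")

cleaned = [bracketed.sub("", line).strip() for line in sample_lines]
for line in cleaned:
    print(repr(line))
```

A line that consists only of a stage direction becomes an empty string, and removing a direction from the middle of a line leaves a double space behind, which is harmless for the later steps.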

```python
import re

# Matches anything enclosed in () or [], such as stage directions.
bracketed = re.compile(r"[\(\[].*?[\)\]]")

with open("script-raw", "r") as script_raw, \
        open("script-processed", "w") as script_processed:
    for line in script_raw:
        x = bracketed.sub("", line)
        x = x.strip()
        script_processed.write("{}\n".format(x))
```

Get dialogues from script

Once we have the processed script, we have to extract the characters and all of their dialogues in the entire movie and put them in files. To get each character’s name, we rely on the fact that the script follows the format Character: Dialogue throughout. We will also skip blank lines. Finally, we will save the dialogues of each character in a file named after the character, for example Collector.txt.
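
The parsing idea can be sketched on a few invented lines first. This is a variation for illustration only: it collects the dialogue in an in-memory dictionary instead of writing files, and it uses str.partition so that only the first colon separates the speaker from the dialogue.

```python
from collections import defaultdict

# Invented lines in "Character: Dialogue" form; not from the real script.
sample_lines = [
    "Alice: Is anyone there?",
    "",
    "Bob: Remember the rule: never split up.",
    "A line without a speaker",
    "Alice: Fine.",
]

dialogues = defaultdict(list)
for line in sample_lines:
    line = line.strip()
    if ":" not in line:
        continue  # blank lines and narration have no speaker
    # partition splits on the first colon only, so colons inside the
    # dialogue itself are preserved.
    character, _, dialogue = line.partition(":")
    character, dialogue = character.strip(), dialogue.strip()
    if character and dialogue:
        dialogues[character].append(dialogue)

for character, lines in dialogues.items():
    print(character, lines)
```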

```python
chars = []
file_handles = {}

with open("script-processed", "r") as script:
    for line in script:
        # strip() returns a new string, so assign it back.
        line = line.strip()
        # Skip blank lines and lines that are not "Character: Dialogue".
        if line == "" or ":" not in line:
            continue
        character = line.split(":")[0]
        newline = " ".join(line.split(":")[1:])
        # Pause on unusually long speaker names so they can be checked by hand.
        if len(character.split()) > 2:
            print(line, character)
            input()
        if character == "":
            continue
        try:
            if character not in file_handles:
                file_handles[character] = open(character + ".txt", "w")
                chars.append(character)
            file_handles[character].write("{}\n".format(newline.strip()))
        except OSError:
            print("Could not write dialogue. Skipping line...")

# Close every per-character file so all dialogue is flushed to disk.
for handle in file_handles.values():
    handle.close()
```

Here is the content of Collector.txt:

I don't have it. I told you. I sold it. Why would I lie? Like suicide. I didn't know what it was. Magnificent! Magnificent! Magnificent!

Functions for Plotting Similarity Matrix

Let’s write the functions for computing and plotting the similarity matrix.

```python
def plot_similarity(labels, features, rotation):
    corr = np.inner(features, features)
    sns.set(font_scale=1.2)
    g = sns.heatmap(
        corr,
        xticklabels=labels,
        yticklabels=labels,
        vmin=0,
        vmax=1,
        cmap="YlOrRd")
    g.set_xticklabels(labels, rotation=rotation)
    g.set_title("Semantic Textual Similarity")
    plt.tight_layout()
    plt.savefig("Avenger-semantic-similarity.png")
    plt.show()


def run_and_plot(session_, input_tensor_, messages_, labels_, encoding_tensor):
    message_embeddings_ = session_.run(
        encoding_tensor, feed_dict={input_tensor_: messages_})
    plot_similarity(labels_, message_embeddings_, 90)
```

Perform Semantic Similarity Analysis

First, let’s load some modules and the Encoder.

```python
import tensorflow as tf
import tensorflow_hub as hub
import matplotlib.pyplot as plt
import numpy as np
import os
import pandas as pd
import re
import seaborn as sns

module_url = "https://tfhub.dev/google/universal-sentence-encoder/2"
print("Loading model from {}".format(module_url))
embed = hub.Module(module_url)
```

Next, we need to get a list of all text files present in the current directory. This will be the list of dialogues of characters.

```python
text_files = [f for f in os.listdir() if f.endswith(".txt")]
```

Now, we will read these files and load each character’s dialogue into a dictionary of the form character: dialogue.

```python
character_lines = {}
for fname in text_files:
    character = fname[:-4]  # drop the ".txt" extension
    print("Reading file for {}".format(character))
    with open(fname, "r") as g:
        # Join the lines with spaces so adjacent sentences do not run together.
        character_line = " ".join(line.strip() for line in g if line.strip())
    # Skip characters whose file contains no dialogue.
    if character_line == "":
        continue
    character_lines[character] = character_line
```

To make things more interactive and easy to use, we will give the user an option to choose the characters to be used for the semantic similarity analysis.

```python
# Select characters
characters = list(character_lines.keys())
print("================================")
print("Characters found:")
for i, name in enumerate(characters):
    print("{}: {}".format(i, name))
print("================================")
print("Enter character index to be used:")
print("Enter q or Q to stop.")

final_character_lines = {}
while True:
    char_index = input()
    if char_index.upper() == 'Q':
        break
    try:
        name = characters[int(char_index)]
    except (ValueError, IndexError):
        # Ignore input that is not a valid index and ask again.
        print("Invalid index, try again.")
        continue
    final_character_lines[name] = character_lines[name]

character_lines = final_character_lines
print("================================")
print("Characters selected:")
for i, name in enumerate(character_lines):
    print("{}: {}".format(i, name))
print("================================")
```

Now that we have the messages with us, we go back to tensorflow to run a session and get the similarity matrix. We will display the similarity as a heat map. With 9 characters selected, the final graph is a 9×9 matrix in which each element (i, j) is colored based on the similarity between the dialogue of character i and character j. The darker the color, the higher the similarity.
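
Before running the encoder, it may help to see what such a matrix looks like on toy data. The sketch below uses three made-up unit-length vectors in place of real character embeddings and builds the matrix with np.inner, the same call used in plot_similarity.

```python
import numpy as np

# Three made-up unit-length vectors standing in for character embeddings.
features = np.array([
    [1.0, 0.0, 0.0],
    [0.6, 0.8, 0.0],
    [0.0, 0.0, 1.0],
])

# Entry (i, j) is the inner product of row i and row j.
corr = np.inner(features, features)
print(corr)
```

The matrix is symmetric and has ones on the diagonal, because every unit-length vector has similarity 1 with itself. This is why the diagonal of the heat map is always the darkest.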

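```python
# The heat map needs two parallel lists: the character names as labels and
# their combined dialogue as the messages to embed.
labels = list(character_lines.keys())
messages = list(character_lines.values())

similarity_input_placeholder = tf.placeholder(tf.string, shape=(None))
similarity_message_encodings = embed(similarity_input_placeholder)
with tf.Session() as session:
    session.run(tf.global_variables_initializer())
    session.run(tf.tables_initializer())
    run_and_plot(session, similarity_input_placeholder, messages, labels,
                 similarity_message_encodings)
```

And if you are interested in seeing the matrix for all the characters in the movie (without labels, of course):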

Hope you enjoyed the post. Make sure that you try it out with some interesting messages and share the results with us in the comment section 🙂 .

References

- Feature image and code reference from Tensorflow Hub

- Transcripts wiki for Avengers: Infinity War script