Conversational, Local, AI Companion

Late nights get lonely, so I built Operation Pinocchio—turning my AI skeleton cohost Little Timmy into a real, local, talking robot.


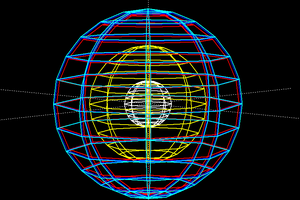

Screenshot 2025-11-05 115833.png: Little Timmy early testing (PNG, 356.99 kB, uploaded 11/05/2025 at 18:40)

Screenshot 2025-01-26 223355.png: Voice fine-tuning training loss function (PNG, 161.57 kB, uploaded 11/05/2025 at 18:40)

Screenshot 2025-10-28 014821.png: Me and Timmy (PNG, 4.33 MB, uploaded 11/05/2025 at 18:40)

Screenshot 2025-11-05 115923.png: 3.3V to high voltage ignition with battery (PNG, 350.60 kB, uploaded 11/05/2025 at 18:39)

Screenshot 2025-11-05 024639.png: Diagram of the entire flow (PNG, 1.72 MB, uploaded 11/05/2025 at 18:39)

I found these metal skulls. I think they are cast-iron, but they are easily weldable. I bought two of them because I was worried I would mess up one of them in my original project.

Luckily, I didn’t mess up the original, so I could cut the jaw off of the original while preserving the rest of the face, and cut the jaw off the second skull while preserving the skull. Adding a hinge involved some welding, but this could easily be done with JB Weld or a similar metal adhesive. I drilled a big hole in the skull, jammed in some bearings that allowed an 8 mm bar to go through them, then drilled some 8 mm holes through the jaw, and bada bing bada boom, I had an articulating jaw. I tapped some holes, screwed in some 3 mm bolts, and used some springs I had on hand to make sure the jaw would naturally stay closed.

I welded a little fastener for a lightweight servo (9 g) onto the back, and I used some fishing line to connect the mandibular arch (yeah, I’m getting anatomical) to the servo arm. Now I needed a way to control the servo arm so the jaw looked like it was opening naturally during speech.

I wanted the jaw to open the way human jaws open when they talk. This involved learning about something called “phonemes,” which are basically an agreed-upon nomenclature for different sounds. I focused on vowel sounds, because I think for most consonant sounds the human mouth is usually closed. Linguistics PhDs can make fun of me now in the comments. Given my presupposition about the human mouth being closed for consonant sounds, and given that closed is the jaw’s default position, I needed to devise a way to figure out how much to open the mouth for different vowel sounds.

I had a hunch that a consistent voice with predictable frequencies would let me identify the dominant phonemes. Based on that hunch, I needed Little Timmy to have a voice so that I could identify the frequency of his phonemes. I used Piper TTS for speech generation, specifically https://github.com/rhasspy/piper, which conveniently contains a Python package that spins up a web UI and lets you record a bunch of quotes into a microphone to create a training data set.

I recorded 142 clips of me reading statements while doing my best impersonation of Skeletor from the 80s cartoon He-Man (I wanted to do Cobra Commander/Starscream, but I can’t do that impression well and couldn’t find enough clean audio). After recording the audio, I ran a Python script that fine-tuned the model I was using (lessac_medium.onnx), and when the loss curves started to flatten, I considered it done.

Now that I had a stable voice that was essentially mimicking my own impression of Skeletor, I wrote a little web interface so I could type in different words featuring the different phonemes.

How did I measure the phonemes, one might ask? At this point in the project, the fine-tuned text-to-speech (TTS) model was running quite adequately on a Raspberry Pi 5, with audio output via a USB to 3.5 mm headphone jack adapter. I took a 3.5 mm female headphone jack and soldered the ground to an ESP32 dev board, and the left or right signal (I forget, doesn’t matter) to a resistor divider that biased the incoming AC signal to 1.65V. This made sure no negative voltage reached the ESP32 analog read pin. From there I could sample the signal on the analog pin, use the Arduino FFT library to convert the signal to the frequency domain, then monitor for frequency peaks.
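The bias arithmetic is easy to sanity-check. Here is a minimal sketch in Python (not the code on the ESP32), assuming a 3.3V rail, two equal divider resistors, an idealized linear 12-bit ADC, and a line-level signal of roughly ±1V. The resistor values and function names are made up for illustration.

```python
VCC = 3.3        # ESP32 supply rail in volts
ADC_MAX = 4095   # full-scale count of a 12-bit ADC (assumed resolution)


def divider_bias(r_top_ohms, r_bottom_ohms, vcc=VCC):
    """Voltage at the midpoint of a resistor divider across vcc."""
    return vcc * r_bottom_ohms / (r_top_ohms + r_bottom_ohms)


def pin_voltage(audio_volts, bias_volts):
    """Voltage at the ADC pin: the AC audio signal riding on the DC bias."""
    return audio_volts + bias_volts


def adc_count(pin_volts, vcc=VCC, adc_max=ADC_MAX):
    """Ideal (perfectly linear) ADC reading, clamped to the valid input range."""
    clamped = min(max(pin_volts, 0.0), vcc)
    return round(clamped / vcc * adc_max)


# Two equal resistors put the midpoint at half the rail (values are assumed).
bias = divider_bias(10_000, 10_000)
print(f"bias = {bias:.2f} V")

# A signal swinging about +/-1 V now stays inside 0..3.3 V at the pin.
for audio in (-1.0, 0.0, 1.0):
    print(f"{audio:+.1f} V audio -> ADC count {adc_count(pin_voltage(audio, bias))}")
```

With the signal centered at mid-scale, subtracting the average reading before the FFT removes the bias again.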

It just so happens that phonemes have different frequency peaks, and the “F1 formant” (lowest frequency peak) associated with most vowel phonemes coming out of Little Timmy’s mouth just so happens to occur between 200 Hz and 1,000 Hz. I had Little Timmy read lots of vowel sounds, I plotted them using Arduino IDE’s plot function, and I measured the typical peaks for common English phonemes.

I really can’t believe this worked, but it just so happened to work quite well. It was huge. Now I could identify the phoneme and map that phoneme to a typical “jaw openness” for different vowel sounds. For example, the human jaw opens the most for the “ahhhh” sound, which is why doctors tell you to make that sound when they want you to open your jaw. The jaw opens much less for “oh,” “ew,” “eh,” “uh,” and so forth. Luckily, linguistics nerds made a lot of that information available, so I now had my workflow of frequency peak => phoneme => jaw openness => servo instruction.
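To make the peak hunt concrete, here is a rough Python sketch of the idea rather than the Arduino code from the build. It runs a plain DFT over a block of samples and reports the strongest bin between 200 Hz and 1,000 Hz. The sample rate, block size, and function name are assumptions, and a real implementation would use an FFT with a window function.

```python
import cmath
import math


def dominant_frequency(samples, sample_rate_hz, f_lo=200.0, f_hi=1000.0):
    """Return the frequency (Hz) of the strongest DFT bin in [f_lo, f_hi], or None."""
    n = len(samples)
    mean = sum(samples) / n                  # remove the DC bias from the divider
    centered = [s - mean for s in samples]
    bin_hz = sample_rate_hz / n              # frequency spacing between DFT bins
    k_lo = max(1, math.ceil(f_lo / bin_hz))
    k_hi = min(n // 2, math.floor(f_hi / bin_hz))
    best_k, best_mag = None, 0.0
    for k in range(k_lo, k_hi + 1):
        # Direct DFT for bin k; slow, but fine for a few hundred samples.
        acc = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                  for i, x in enumerate(centered))
        if abs(acc) > best_mag:
            best_k, best_mag = k, abs(acc)
    return None if best_k is None else best_k * bin_hz


# Synthetic check: a 500 Hz tone plus a louder 1,500 Hz tone outside the band,
# riding on a mid-scale offset like the biased ADC input.
RATE, N = 8000, 256
block = [2048
         + 300 * math.sin(2 * math.pi * 500 * i / RATE)
         + 600 * math.sin(2 * math.pi * 1500 * i / RATE)
         for i in range(N)]
print(dominant_frequency(block, RATE))
```

Restricting the search to the 200 to 1,000 Hz band is what keeps louder, higher-frequency content from being mistaken for the F1 peak.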
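The frequency peak => jaw openness => servo instruction chain can be sketched as a lookup table. This Python version is illustrative only: the band edges, openness values, and servo angles are placeholders, not the measurements from Little Timmy. They simply follow the general rule that more open vowels have a higher F1.

```python
# (label, F1 band low Hz, F1 band high Hz, jaw openness from 0.0 to 1.0)
# All numbers are placeholder assumptions, not measured values.
VOWEL_TABLE = [
    ("close vowels, e.g. 'ew'", 200, 400, 0.25),
    ("mid vowels, e.g. 'eh'", 400, 600, 0.50),
    ("open vowels, e.g. 'ah'", 600, 1000, 1.00),
]

SERVO_CLOSED_DEG = 0   # assumed servo angle with the jaw shut
SERVO_OPEN_DEG = 60    # assumed servo angle with the jaw fully open


def jaw_openness(peak_hz):
    """Map an F1 peak to jaw openness; no peak or out-of-band means closed."""
    if peak_hz is None:
        return 0.0
    for _label, lo, hi, openness in VOWEL_TABLE:
        if lo <= peak_hz < hi:
            return openness
    return 0.0


def servo_angle(openness):
    """Linearly scale openness (0..1) onto the servo's travel in degrees."""
    span = SERVO_OPEN_DEG - SERVO_CLOSED_DEG
    return round(SERVO_CLOSED_DEG + openness * span)


for peak in (None, 300, 500, 750, 1500):
    print(peak, "->", servo_angle(jaw_openness(peak)), "deg")
```

Defaulting to closed matches the spring-loaded jaw: anything that is not a recognized vowel peak (silence, consonants) lets the jaw rest shut.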

I used a very basic 9 g servo to pull on the jaw so it opened appropriately based on the different phonemes.

OpenAI has a service and downloadable Python package called Whisper. People smarter than me figured out how to turn this into something called faster-whisper, which apparently uses something called CTranslate2, and this is now basically outside of the scope of this tutorial. Anyway, I initially had the faster-whisper tiny.en (tiny English) model running on a Raspberry Pi 5, connected to an input from my microphone. It did a pretty good job of converting my speech to text (STT).

The problem I had to overcome was having the STT model send the text payload as quickly as possible at the appropriate time, as I needed Little Timmy to respond rapidly, like a human. The Python package contains something called Silero Voice Activity Detection (VAD), and I leveraged this to detect any time there was 0.5 seconds of silence and treated that as the cue to send a text payload to my AI preprocessor. A tricky part was that when I went on long diatribes, which are frequent, I needed it to transcribe in chunks without overlapping text in the output. It was a whole thing. This will be on GitHub at some point, but I figured out a way to transcribe speech of variable lengths, without repeating text, and send the final payload after a 0.5 second silence.

It worked pretty well on a raspi 5, but it still added about 0.5 to 0.75 seconds of latency before Little Timmy responded, which kind of ruined the illusion of me talking to a Real Boy. At this point in the project, since my Jetson Orin Nano had not yet arrived, I had purchased a functioning PC for a very fair price on Facebook Marketplace that just so happened to have an RTX 3060 graphics card in it with 12 GB of VRAM (these cards tend to sell for around $300 on the secondary market, 12 GB VRAM is huge at that price, and I got a whole functioning computer for $600, so it was a win).

I moved the model from the raspi 5 to the PC, got faster-whisper to run straight up in Windows terminal quite easily, then got a little greedy. I upgraded from tiny.en to base.en (the next model size up). Since I was really greedy, and since it was later in 2025, I asked Claude in Cursor to familiarize itself with the faster-whisper GitHub repo and write a Python script that recorded whenever it heard me talking, saving sound clips of appropriate length, for approximately 1.5 hours. I cleaned my shop with my microphone on and basically talked for 90 minutes, using words I typically use, my local accent, and referencing tech objects (ESP32, I2C, etc.) that I would want it to transcribe appropriately. Then I downloaded the largest Whisper model I could find to perform STT on the recordings. I corrected the very rare transcription errors, and bada bing bada boom, I had a training data set on which the Whisper base model could be fine-tuned to my own voice. Since I was feeling cocky at this point, I also ensured it was fully using CUDA, which further decreased latency. The results were incredibly accurate, on par with Dragon Professional (which I use routinely) and quite plausibly superior.
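For anyone who has not used faster-whisper, a minimal file-based sketch looks roughly like this. It is not the streaming microphone pipeline from this project: the helper names and the audio path are hypothetical, and the CPU/int8 settings are an assumption for a Raspberry Pi class machine. It needs `pip install faster-whisper`.

```python
def build_vad_options(min_silence_s=0.5):
    """VAD settings for faster-whisper's built-in Silero filter (silence in ms)."""
    return {"min_silence_duration_ms": int(min_silence_s * 1000)}


def transcribe_file(path, model_name="tiny.en", device="cpu", compute_type="int8"):
    """Transcribe one audio file and return the text as a single string."""
    # Imported here so the module loads even where faster-whisper is not installed.
    from faster_whisper import WhisperModel

    model = WhisperModel(model_name, device=device, compute_type=compute_type)
    segments, _info = model.transcribe(
        path,
        vad_filter=True,                      # skip non-speech using Silero VAD
        vad_parameters=build_vad_options(),   # 0.5 s of silence splits speech
    )
    # `segments` is a generator; transcription actually runs as it is consumed.
    return " ".join(segment.text.strip() for segment in segments)


if __name__ == "__main__":
    # "clip.wav" is a placeholder path.
    print(transcribe_file("clip.wav"))
```

On a machine with an NVIDIA GPU, passing `device="cuda"` and `compute_type="float16"` is the usual way to move the same model onto CUDA.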
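The 0.5 second silence cue can be sketched independently of any particular VAD. The Python below assumes something upstream (Silero VAD, for instance) has already labeled each audio frame as speech or not. The 32 ms frame length and the function name are assumptions, and the chunked, non-overlapping transcription is not shown.

```python
def find_endpoint(speech_flags, frame_ms=32, silence_ms=500):
    """Return the index of the frame where trailing silence first reaches
    silence_ms after speech has started, or None if that never happens."""
    needed = -(-silence_ms // frame_ms)   # ceiling division: frames of silence required
    heard_speech = False
    quiet_run = 0
    for i, is_speech in enumerate(speech_flags):
        if is_speech:
            heard_speech = True
            quiet_run = 0                 # any speech resets the silence counter
        elif heard_speech:
            quiet_run += 1
            if quiet_run >= needed:
                return i                  # cue: send the text payload now
    return None


# Leading silence, speech, a short pause, more speech, then a long silence.
flags = [False] * 10 + [True] * 20 + [False] * 5 + [True] * 10 + [False] * 20
print(find_endpoint(flags))
```

Ignoring silence before the first speech frame keeps the detector from firing on an empty room, and resetting the counter on every speech frame means short mid-sentence pauses do not end the utterance early.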
