We did an informal poll around the Hackaday bunker and decided that, for most of us, our favorite programming language is solder. However, [Stephen Cass] over at IEEE Spectrum released their annual post on The Top Programming Languages. We thought it would be interesting to ask you what you think is the “top” language these days and why.

The IEEE has done this since 2013, but even they admit there are some issues with how you measure such an abstract idea. For one thing, what does “top” mean anyway? They provide three rankings. The first is the “Spectrum” ranking, which draws data from various public sources, including Google search, Stack Exchange, and GitHub.

The post argues that as AI coding “help” becomes more ubiquitous, you will care less and less about what language you use. This is analogous to how most programmers today don’t really care about the machine language instruction set. They write high-level language code, and the rest is a detail beneath their notice. They also argue that this will make it harder to get new languages in the pipeline. In the old days, a single book on a language could set it on fire. Now, there will need to be a substantial amount of training data for the AI to ingest. Even now, there have been observations that AI writes worse code for lesser-used languages.

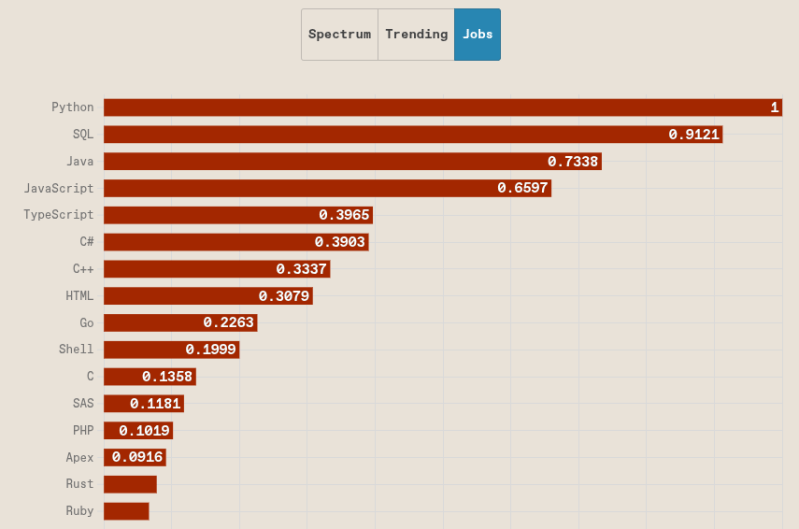

The other two views are by their trend and by the number of jobs. No matter how you slice it, if you want to learn something, it looks like it should be Python. Of course, some of this depends on how you define programmer, too. Embedded programmers don’t use PHP or Perl, as a rule. Business programmers are unlikely to know Verilog.

A few surprises: Visual Basic is still holding its own in the job market. Verilog outweighs VHDL, but VHDL still has more jobs than LabVIEW. Who would guess? There are still pockets of Ada. Meanwhile, Fortran and Arduino are about equally ranked, as far as jobs go (though we would argue that Arduino is really C++).

So you tell us. Do you agree with the rankings? Do you think hackers would rank languages differently? Will AI reduce us to describing algorithms instead of writing them? We aren’t holding our breath, but who knows what tomorrow brings? Discuss in the comments.

There is no “best language”; there are languages better suited to some use cases (and there are bad languages too).

My take is simple: learn a powerful language (C, C++, or one of the modern contenders), and a scripting language (Python, Lua, etc.).

This way, you can both do heavy lifting with the powerful language, and build mock-ups and handy tools with the scripting language.

Personally, it’s C+Python. As an example, I code a game for the Game Boy in C, but all my tools to convert sprites or pack the data are in Python.

imho.

Can you tell more about how you made that game? What tools did you use? Do you run it in an emulator or also on a real Game Boy?

Agree 100%, but it might also be wise to learn a language where your competition is retiring/dying off:

What percent of computer code still in use is written in COBOL and where is it most used?

Grok 3 AI answer excerpts:

Estimates for the percentage of actively used computer code (measured primarily by lines of code, or LOC) that is written in COBOL vary across sources, but the most commonly cited figure is around 80%. This is based on reports of approximately 220 billion lines of COBOL code still in production worldwide, representing the majority of legacy business and transaction-processing systems. However, this statistic originates from older analyses (e.g., Gartner in 1997 and repeated in sources up to 2023), and it likely refers to business-oriented code rather than all code globally (e.g., excluding web, mobile, or scientific applications). More recent or sector-specific estimates are lower:

IJARSCT/PCMag (2023) – 43% – Specifically of banking systems; handles $3 trillion in daily transactions.

CB Insights (2023) – 95% of ATM swipes – 80% of in-person credit card transactions – transaction-level usage in finance, not overall code.

COBOL Cowboys (2023) – 65% of active code – 70% of critical business logic – Emphasizes business transactions (85% worldwide).

These figures highlight COBOL’s outsized role in high-stakes, high-volume systems where reliability trumps modernity. New COBOL code is still written annually (estimated 5 billion lines), but the total codebase grows slowly due to maintenance rather than greenfield development.

COBOL is predominantly used in legacy mainframe systems for transaction-heavy, data-intensive applications, especially where rewriting code would be prohibitively expensive or risky (e.g., in regulated environments). It powers about 90% of Fortune 500 companies’ core operations. The top industries and sectors include:

Finance and Banking (43% of systems): Core transaction processing, including 95% of U.S. ATM activity and 80% of in-person credit card swipes. Examples: Bank of New York Mellon (343 million LOC in 112,500 programs).

Insurance: Policy management, claims processing, and actuarial systems for risk analysis and premium calculation.

Government and Public Sector: Administrative and financial systems, including unemployment benefits processing and federal agencies (e.g., U.S. Department of Defense’s MOCAS system, ~2 million LOC since 1958).

Retail and Airlines/Travel: Point-of-sale systems, inventory management, and reservation processing (e.g., major hotel chains and airlines).

Healthcare and Automotive: Administrative billing, patient records, and supply chain systems.

Geographically, usage is highest in the United States (56% of known COBOL-using companies), followed by the UK and Brazil. It’s rare in startups or consumer apps but essential for mission-critical infrastructure. Efforts to modernize (e.g., via IBM’s watsonx AI for refactoring to Java) are underway, but full replacement remains challenging.

Ah, yes, the famous Ousterhout’s False Dichotomy. Or you could pick a language that is both powerful and convenient. But you will still have to learn C, I’m afraid, not because it is “powerful” (it isn’t), but because it’s everywhere.

It’s not a false dichotomy.

I write mostly embedded firmware. Mostly C and some C++.

For desktop applications and scripts I use mostly Python, batch/bash, C++, C# and C.

Python cannot run on most true embedded systems (I don’t count a Raspberry Pi as embedded).

I have played with MicroPython. So some embedded systems do support this flavor of Python. But even then the low-level drivers are written in C/C++. So Python cannot replace C/C++ for embedded. Period.

C++ is now much better for embedded than a decade ago. By using a subset of C++ and useful modern features you can use C++ as a better C with objects, templates and generic programming without performance penalty. I often combine C and C++ in projects.

Using Python to write GUI desktop applications is not always easy. C# is easier for that in my experience. Though I have played with wxPython.

For scripts, Python is much faster to code. Especially due to the ability to interact with code live and use Jupyter notebooks for experimentation.

Are the types of applications and supported targets of languages expanding? Yes. Are lines between embedded and non-embedded being blurred? Somewhat. But different languages have completely different philosophies. Languages can also be described/categorized by various metrics, such as compiled vs interpreted. There are ways to compile Python and ways to interpret C, but it’s a hassle. Will that always be the case? I don’t know and won’t make a prediction for it. But I firmly believe different languages have different applications. It’s a true dichotomy.

I never said Python can replace C. In fact, I explicitly said that you will have to learn C — we will be stuck programming very fast PDP11 emulators for as long as the “industry” insists on it. And I don’t think Python is the answer to the “powerful and convenient” language question. It surely is closer than TCL was, but I’m afraid we still have a long road ahead.

disappointed to see you use “powerful” so thoughtlessly without defining it. i understand hating C, and i understand wanting different powers than everyone else does…i just don’t get anything out of your comment except your disdain. at any rate, it’s cowardly to say something like that without suggesting your own favorite language that is both “powerful” and “convenient” – however you’d define those words.

fwiw i’m a big fan of ML and i wish it wasn’t just a niche

I don’t enjoy the language (especially a lot of its tooling), but saying C isn’t powerful is the hottest of takes.

It’s about as powerful as a crippled macro-assembler, with half the instructions for the given architecture removed because they didn’t exist on PDP11.

Linux.

Agreed. C without the pre-processor and standard libs is nearly a 1 to 1 mapping of assembly. And separate header files? Ouch!

Agree 100%. However, another strategy might be to decrease your competition by learning a language like COBOL that is still in very wide use in some sectors. The competition is probably much lower due to coder retirements. This could also put you in a position to convert the COBOL code to a more modern language which you would also learn.

The question is why are you learning in that case – as the old largely dead but with a few hold outs languages are only of great value if you wish to work maintaining/transitioning from the legacy services that keep on trucking with them – which might interest you, is likely to get you a reasonably secure job, but it won’t appeal to everyone that wants to code.

Still a very valid option, but if you are going to choose to focus on the old largely dead language it better be either your hobby or because that tiny handful of jobs maintaining it are really what you want to do.

I’m not really seeing COBOL jobs pop up anymore. I know COBOL and it seems to be waning even more. My last job had us remove COBOL from the system. Maybe it’s the job boards I used to frequent?

I used to do COBOL, EDI, and other such programming for a while. Nowadays it’s almost all Python/SQL/JavaScript or, I guess, just modern stacks.

Want a language where recruiters fall over backwards to talk to you? Learn VHDL/Verilog. I put those 4 magic letters on my resume years ago and the emails have never stopped.

Thanks for the tip about not to contact recruiters because they’ll never stop sending you emails.

VHDL/Verilog, or SystemVerilog nowadays, are HDLs. So not computer languages at all. They are out of place in this discussion.

A language that instructs hardware to do something. How is it not a computer language?

Another one is COBOL. If you can read and update highly optimized COBOL code that has been modified by many people over the last half century, there will be no shortage of employers for you.

The ranking is invalid because it considers HTML a programming language.

And also SQL. If anything this should be called “Top Development Skills”.

I’ve worked with ERP systems where the API is comprised entirely of SQL stored procedures. That qualifies as a programming language in my books.

But isn’t that called ps-sql or some such?

What about Delphi Pascal?

Over a 40-year run, I’ve programmed for money in 14 different languages, the top ones in order of lines written being: Perl, PHP, C++, Java, JavaScript, C, C# and a couple of Lisp variants. I’ve also hand-coded most of the typical markup languages: HTML, XML, JSON, CSS (arguably a language these days), a couple of proprietary SGML schemas and some in-house weirdo forms. On top of that, most of the applications were database-forward, so there are five or six SQL variants mixed in for good measure and one truly bizarre object-DB. Add to that “play” languages that I’ve explored for fun, education or future-proofing, e.g.: Forth, Rust, Ada, and a handful of others. Except for three years around the Internet bubble, all of this has been at the same EDA company.

What are my “top” programming languages? Whatever ones my employers ask of me. Just gimme a manual, I’ll figure it out. Syntax is almost never the problem. The problem nearly every day is thinking correctly about the day’s programming puzzle.

Z80 ASM. Same as always.

I heard there were 3 openings for that last year.

Top is application dependent.

I had to use Visual Basic at work because that’s all I could use on a restricted system.

For non-time constrained stuff I go for python. For embedded/time constrained I go for C, C++, Go/tinygo.

TBH I am liking Go more and more.

Well, if someone needs a VB6 app maintained and forks over the dough i wouldn’t complain.

It is always a surprise (to me) to see SQL listed as a language. I always considered it a parallel discipline (part of database design), more of an API. There is no database system that can’t be crushed by a poorly designed query; believe me, I have written a few bad ones :). To optimize SQL, you must understand the database structure and relationships. When we really needed our SQL to perform, the DBAs played a part in the SQL design. Lastly, outside of ‘triggers’ and ‘stored procedures’, all my SQL was called from some other language in the list. I might say ‘should I do this in C or Python’ but never thought ‘should I do this in C or SQL’.

I suspect folks include SQL because they are thinking of things like PL/SQL, Transact-SQL and the various other procedural-language extensions that have been applied to SQL by various suppliers.

this survey stuff is all kind of irrelevant to me, i guess. people are getting paid big bucks to write bloated javascript…they don’t even know HTML / CSS / javascript, they just know JQuery. they’ll pull in a thousand or million-line library just so they don’t have to learn basic HTML features like text-align. i imagine they switched to chatgpt these days, but a decade ago their main tool was stackoverflow. there could be a thousand of those people or a million of them, i don’t really care what they’re doing. for better or worse, my interest in their output is limited to how well stock Chrome handles it (pretty well, these days).

but i just wanted to add a bit of snark: hackaday editor’s favorite language isn’t solder, it’s youtube :P

100%. i actually find myself using perl relatively more often for SQL projects because the perl sql interface (dbd/dbi or whatever) is more familiar to me than the C equivalent.

I’ve used some languages at work and as a hobby (C, C++, Python, Java, Ruby, Lua, R, Go, JavaScript), but only Elixir always makes me excited to code.

Today it is Python and C/C++ in my current job. In my career past, it was C (mostly) and Pascal. Of course there was assembly mixed in. For hobby work current, Python is top of the list. C/C++ second. And of course there were times I had to work with SQL, Fortran, C#, VB (yuck) over the years.

Programming has a lot to do with the type of ‘job’ you are doing. Mine was in the field of real-time automation (substations/power plants/etc.) and energy management systems today.

When I see the user interfaces in manufacturing environments (PLC/HMI, ERPs, MES, basically everything), it looks like the programming language is not as important as TEACHING THE STUPID PROGRAMMERS ABOUT SIMPLE AND INTUITIVE USER INTERFACES. Yes, best to teach them with wooden sticks.

Disappointing to see the IEEE onboard the AI hype train. It’s not inevitable, it’s not the future, it’s a scam and a fad. The bubble’s popping next year. No one will be able to afford those services after the borrowed money’s all burned up and they have to charge customers what it actually costs.

LLM-powered anything and the concept of vibe coding are going to be radioactive for the duration of the long AI winter to follow.

Yep. Someone has to pay for those massive data centers with massive power consumption… And it shouldn’t be the government (my tax dollars) funding the boondoggle.

100%. I dislike AI. If I write code myself, I know the roadmap and can easily debug bugs. If AI writes the code, you first have to figure out how it is going about trying to do the thing, if it will work, what stuff is straight BS and so on.

Might as well skip the bug hunting, fixing and optimization of the crap code, which are the worst parts, and enjoy the problem solving of writing it yourself.

Really expensive and terrible too. Can’t wait for it to die

Agreed. I thought I’d give one a shot at a piece of code I’d written which I was having a bit of a hard time working out some kinks in. I asked it to review the code and see if it could suggest a fix for a particular function. It chugged away for a bit, spat the entire file back out (couple of hundred lines of Python) with a single change… A comment in that function to indicate it didn’t work. Had the AI had a corporeal body, it would have received a swift smack upside the head for its troubles.

Sorry but this is pure cope considering people can already run useful models on consumer-grade commodity hardware.

Well, if they manage to train a model that actually programs halfway decently, you’ll probably be able to run it locally on relatively modest hardware, so there is a good chance ‘vibe coding’ will last a long time. I agree it’s rather overhyped, but 99% of users won’t need something novel, or even remotely unusual, so the LLM should be able to pull a suitable response from the collective data of all the collaborative git-like platforms and forum posts. (I might be exaggerating the percentage but probably not by that much).

The thing vibe coding will never do well is efficient, secure code that only does what it needs to, as it’s going to be pulling in lots of peripheral garbage and suboptimal logic for your specific needs, since the structures it has plundered weren’t written for exactly the same situation. But given how insanely overkill modern compute platforms generally are, it largely won’t matter.

As a follow-up thought, I wonder if the vibe coder will be on the hook for the security vulnerabilities at all, and how much analysis of vibe coding output would be needed to find common faults to probe for. It’s going to be the next SQL injection attack style most likely: just toss in the billy drop tables equivalents for vibe coding and watch the lazy/incompetent vibers’ empire crumble.

One of the first actually thoughtful and pretty balanced comments I’ve seen on Hackaday in a while related to vibe coding. Well done.

definitely Ruby

I always have trouble with pip and Python libraries, but never with Perl 5 and Ruby.

C is the speed of light. 7 is the least used digit in computing. C’mon JavaScript!

Surprising to see that, for the Apple ecosystem, Objective-C is still almost equal to Swift.

Meh… programming is just ones and zeros at the end of the day.

is existence of so many different languages causing questioning for necessity and cost reasons outside the computing industry?

AI Overview

Yes, the existence of many different human languages raises questions about their necessity and cost outside of the computing industry. This questioning is often balanced against the immense cultural and cognitive benefits of linguistic diversity.

Here is an overview of the challenges and benefits associated with the multiplicity of human languages.

Challenges and costs of language diversity

Economic costs:

…

Social and relational costs:

…

Arguments for the necessity and value of language diversity

…

In conclusion, the questioning of linguistic diversity often stems from the clear and measurable economic and practical costs, particularly in a globalized world. However, this perspective often overlooks the profound, long-term cultural, social, and cognitive benefits.

Arguments about the necessity of so many languages are part of an ongoing debate between the desire for global efficiency through standardization and the desire to preserve the richness and diversity of human culture.

Are computer languages’ legal and maintenance liability costs an issue too?

SQL has wonderful and quite powerful pre-processing – WITH command; not sure why it is called “clause”, it is really cached-in-RAM-tables created on the fly for further processing. If you are good with Cubes, it gets even better, automagically generated tallies (if needed) and other niceties.

SQL is not a programming language per se, since it is missing things needed for structured programming (no loops … no forking … etc). As the name implies, it is a QUERYING language, but it is not only for querying the data; it is also for updating, adding, editing, massaging, formatting the data, etc.

ANSI SQL doesn’t have other niceties, like lambda functions, BUT you can fake them with the same WITH command. That’s what it is for. Inline pre-processing and things.

Done a fair amount of PL/SQL (Oracle, obviously), and T-SQL (Microsoft’s itch to overtake Oracle’s PL/SQL), they are okay for most purposes, but sooner or later you run into its other limitations of not really being a substitute for proper programming language. Scripting, yes, amazing interwoven records processing and SQLs, but that’s about all. You will hit that limitation rather quickly, no way to call anything outside the narrow scope, etc. Oh, if you have a full-blast dedicated Oracle server (machine) you sure can do a built-in (though, scaled down) Java in addition to PL/SQL, so there, Oracle has added Java for good measure : ]

Used about as many SQL variants as there exist, and the differences seem to be rather trifling: almost every dialect will have some kind of lookup functions, obviously, casting and formatting, conditional this and that (IF – THEN – ELSE), and a mandatory bunch of legacy tricks with all kinds of unexpected easter eggs inherited from the largest-paid projects of the past. Things that also appear in almost all SQLs are exporting to CSV (or binary, or ASCII string), fetching/reading or storing files on the server itself (machine), massaging XML or JSON (or its own invented format), remote calls on other linked servers (obviously, the same software), and some other esoteric doodas.
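To make the WITH idea from this comment concrete, here is a minimal sketch using Python's built-in sqlite3 module. The `orders` table, its columns, and the function names are hypothetical and exist only for illustration. Whether a given engine actually caches the WITH result in RAM depends on the database and its query planner, so treat that part as an assumption rather than a guarantee.

```python
import sqlite3


def make_demo_db():
    """Build a small in-memory database (hypothetical schema, illustration only)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (customer TEXT, amount INTEGER)")
    conn.executemany(
        "INSERT INTO orders VALUES (?, ?)",
        [("ann", 30), ("bob", 5), ("ann", 20), ("cy", 40), ("bob", 10)],
    )
    return conn


def top_customers(conn, minimum):
    """Return (customer, total) rows whose summed orders reach `minimum`."""
    query = """
        WITH totals AS (              -- named intermediate result, defined inline
            SELECT customer, SUM(amount) AS total
            FROM orders
            GROUP BY customer
        )
        SELECT customer, total
        FROM totals                   -- queried like an ordinary table
        WHERE total >= ?
        ORDER BY total DESC, customer
    """
    return conn.execute(query, (minimum,)).fetchall()


if __name__ == "__main__":
    print(top_customers(make_demo_db(), 40))
```

With the demo data above, `top_customers(make_demo_db(), 40)` returns `[('ann', 50), ('cy', 40)]`. The `totals` name plays the role of the on-the-fly table the comment describes: it is defined once and then filtered in the main query.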

Once you get comfortable with SQL and start using advanced capabilities like recursive CTEs and window functions you can simulate looping and other procedural capabilities. Platforms like Snowflake will allow you to do machine learning and interact with LLMs through simple native SQL functions.
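A minimal sketch of the looping trick mentioned here, again using Python's built-in sqlite3 module. The function name and the `counter` CTE are made up for the example, and it assumes the bundled SQLite is version 3.25 or newer (the first release with window functions). The recursive CTE stands in for a `for` loop, and the window function keeps a running total without any procedural code.

```python
import sqlite3


def running_totals(limit):
    """Return (n, running_total) rows for n = 1..limit, computed entirely in SQL."""
    conn = sqlite3.connect(":memory:")
    query = """
        WITH RECURSIVE counter(n) AS (
            SELECT 1                               -- loop start
            UNION ALL
            SELECT n + 1 FROM counter WHERE n < ?  -- loop step and stop condition
        )
        SELECT n,
               SUM(n) OVER (ORDER BY n) AS running_total  -- window function
        FROM counter
        ORDER BY n
    """
    rows = conn.execute(query, (limit,)).fetchall()
    conn.close()
    return rows


if __name__ == "__main__":
    for n, total in running_totals(5):
        print(n, total)
```

`running_totals(5)` returns `[(1, 1), (2, 3), (3, 6), (4, 10), (5, 15)]`. Other engines spell some details differently, so this is one dialect's take rather than portable ANSI SQL.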