A Tiered Approach to AI: The New Playbook for Agents and Workflows

A Small Language Model (SLM) is a neural model defined by its low parameter count, typically in the single-digit to low-tens of billions. These models trade broad, general-purpose capability for significant gains in efficiency, cost, and privacy, making them ideal for specialized tasks.

I’ve been cautiously testing SLMs, and their practical value is becoming clearer. For example, smaller fine-tuned models are already highly effective at generating embeddings in RAG workflows. The rise of agentic systems makes an even stronger case: a recent NVIDIA paper argues that most agent tasks, which are repetitive and narrowly scoped, don't need the power of a large model.

This suggests a more efficient future: using specialized SLMs for routine workflows and reserving heavyweight models for genuinely complex reasoning. With this in mind, here are the strongest reasons to consider SLMs — and where the trade-offs bite.

AI Everywhere: From Cloud to Pocket

SLMs unlock deployment scenarios that are simply impossible for their larger cousins, particularly in edge computing and offline environments. Models with fewer than 3 billion parameters can run effectively on smartphones, industrial sensors, and laptops in the field. This capability is critical for applications that require real-time processing without relying on a cloud connection. Think of a manufacturing firm embedding a tiny model in AR goggles to provide assembly instructions with less than 50ms of latency, or an agricultural drone analyzing crop health in a remote area with no cellular service.
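
To see why sub-3B models fit on phones, a back-of-the-envelope estimate helps: weight memory is roughly the parameter count times the bytes per parameter. This is a sketch under stated assumptions. It counts weights only and ignores the KV cache, activations, and runtime overhead, so real footprints will be somewhat larger. The 3 GB device budget is an illustrative assumption, not a hardware spec.

```python
def weight_memory_gb(params_billions: float, bits_per_param: int) -> float:
    """Approximate memory for model weights alone, in GB (1 GB = 1e9 bytes)."""
    total_bytes = params_billions * 1e9 * bits_per_param / 8
    return total_bytes / 1e9

# Illustrative assumption: ~3 GB of a phone's RAM can be spared for model weights.
# KV cache, activations, and runtime overhead are ignored here.
DEVICE_BUDGET_GB = 3.0

for params in (1.5, 3.0, 7.0, 70.0):
    for bits in (16, 4):  # 16-bit (fp16/bf16) vs 4-bit quantized weights
        gb = weight_memory_gb(params, bits)
        verdict = "fits" if gb <= DEVICE_BUDGET_GB else "too large"
        print(f"{params:>5.1f}B @ {bits:>2}-bit: {gb:6.2f} GB -> {verdict}")
```

Under these assumptions, a 3B model quantized to 4 bits needs about 1.5 GB for its weights. A 7B model at the same precision needs 3.5 GB, which already exceeds the assumed budget.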

To me, this is the most compelling and durable reason to be excited about SLMs. As AI becomes more deeply integrated into every facet of our work and lives, we will increasingly demand access to models that run on all our devices, regardless of internet connectivity. The ability to function offline or with minimal resources is a fundamental advantage that massive, cloud-dependent models cannot easily replicate, positioning SLMs as essential components of a truly ubiquitous AI future.

The Specialist's Edge

It is a common assumption that more parameters equal better performance, but for domain-specific tasks, carefully fine-tuned SLMs often outperform their larger, general-purpose counterparts. By training a smaller model on a narrow dataset, you can create an expert that is more accurate and reliable for a specific function than a jack-of-all-trades LLM. Benchmarks bear this out: the 3.8B-parameter Phi-3 model nearly matched the 12B-parameter Codex on a bug-fixing test, and a math-specific 1.5B model performed on par with 7B generalist models on key benchmarks. That amounts to roughly a three- to five-fold advantage in performance per parameter.
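
The performance-per-parameter comparison is simple arithmetic. If a smaller model roughly matches a larger one, the advantage is the ratio of their parameter counts. The sketch below uses only the figures quoted above and treats "nearly matched" and "on par" as equal performance, which is a simplification.

```python
# Parameter counts (in billions) taken from the comparisons quoted above.
comparisons = {
    "Phi-3 vs Codex (bug fixing)": (3.8, 12.0),
    "Math-specific 1.5B vs 7B generalists": (1.5, 7.0),
}

def param_ratio(small_b: float, large_b: float) -> float:
    """How many times more parameters the larger model uses for similar results."""
    return large_b / small_b

for name, (small, large) in comparisons.items():
    print(f"{name}: {param_ratio(small, large):.1f}x fewer parameters")
```

The two comparisons work out to about 3.2x and 4.7x respectively.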

Counterpoint: the price of this performance is brittleness. A model hyper-specialized for one task will excel within its training distribution but can fail catastrophically on inputs outside it. Fine-tuning for one capability, such as conversation, can also degrade another, such as coding. In practice, a specialized-model strategy often means building, maintaining, and serving a portfolio of different models, which brings its own operational complexity that teams must be prepared to manage.

The Need for Speed

By their nature, SLMs deliver substantially lower latency, which makes them suitable for real-time interactive applications. First-token latency under 100 milliseconds becomes achievable. That is a critical threshold for voice assistants, gaming AI, and other systems where a half-second delay would render the application unusable. The speed comes from having far fewer weights to move through memory, and far less computation, for each generated token. It translates directly into a more natural and responsive user experience.
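
One way to see why smaller models respond faster: when generating token by token at batch size 1, each new token requires reading roughly all of the model's weights from memory. Memory bandwidth therefore sets an upper bound on decode speed. The sketch below applies that rule of thumb. Note that it covers per-token decode speed only. First-token latency also includes prompt processing, which is compute-heavy and likewise grows with model size. The 1,000 GB/s bandwidth figure is an illustrative assumption. Real systems land below this ceiling because of KV-cache reads, kernel overhead, and other costs.

```python
def max_tokens_per_second(params_billions: float, bytes_per_param: float,
                          bandwidth_gb_s: float) -> float:
    """Upper bound on single-stream decode speed for a memory-bound model.

    Assumes every generated token reads all weights once and ignores
    KV-cache traffic, compute limits, and batching.
    """
    weight_gb = params_billions * bytes_per_param
    return bandwidth_gb_s / weight_gb

BANDWIDTH_GB_S = 1000.0  # illustrative memory bandwidth (assumption)

# A 70B fp16 model would be sharded across devices in practice, which raises
# aggregate bandwidth. It is included here only for scale.
for params in (3.0, 7.0, 70.0):
    tps = max_tokens_per_second(params, 2.0, BANDWIDTH_GB_S)  # 2 bytes = fp16/bf16
    print(f"{params:>4.0f}B fp16: <= {tps:6.1f} tokens/s, >= {1000 / tps:5.1f} ms/token")
```

At the same bandwidth, the 3B model's ceiling is about 167 tokens per second, roughly 6 ms per token. A 70B model would need about 140 ms per token unless its weights are spread across more memory bandwidth.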

Counterpoint: while SLMs hold the theoretical advantage here, I wouldn't underestimate the engineering investments foundation model providers are making to accelerate their flagship models. Users already unknowingly interact with "flash versions" of capable models — larger than typical SLMs but optimized enough to deliver acceptable responsiveness for most use cases. The latency gap continues narrowing as both camps optimize aggressively.

Lowering Your AI Bill

Deploying even a moderately sized LLM might require a cluster of more than 20 GPUs, while an SLM can often run effectively on a single high-end workstation built from consumer-grade GPUs. The cost difference is stark: studies show 10 to 30 times lower compute and energy costs for a 7B model than for a 70B alternative. For instance, a logistics company that replaced GPT-4o-mini with Mistral-7B for a specific task saw its per-query cost drop from $0.008 to $0.0006, saving around $70,000 per month. This efficiency lets teams operate with more predictable budgets and deploy several specialized models for the price of a single large one.
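
The logistics example can be sanity-checked with simple arithmetic. The sketch below backs out the query volume implied by the quoted per-query costs and monthly savings. It assumes a 30-day month and that the savings come entirely from the per-query price difference.

```python
OLD_COST_PER_QUERY = 0.008    # USD, GPT-4o-mini (figure quoted above)
NEW_COST_PER_QUERY = 0.0006   # USD, Mistral-7B (figure quoted above)
MONTHLY_SAVINGS = 70_000      # USD, "around" $70,000 (figure quoted above)
DAYS_PER_MONTH = 30           # assumption

def implied_monthly_queries(old: float, new: float, savings: float) -> float:
    """Query volume at which a per-query saving of (old - new) adds up to `savings`."""
    return savings / (old - new)

queries = implied_monthly_queries(OLD_COST_PER_QUERY, NEW_COST_PER_QUERY, MONTHLY_SAVINGS)
print(f"Per-query cost reduction: {OLD_COST_PER_QUERY / NEW_COST_PER_QUERY:.1f}x")
print(f"Implied volume: ~{queries / 1e6:.1f}M queries/month, ~{queries / DAYS_PER_MONTH:,.0f}/day")
```

The implied volume is roughly 9.5 million queries a month, about 315,000 a day. The per-query reduction of about 13x falls within the 10x to 30x range cited above.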

Counterpoint: cost advantages shrink when tasks demand broad world knowledge or multi-step reasoning. Cloud providers also keep trimming API prices, and some large-model vendors benefit from economies of scale. My experience echoes what many founders say: pricing is rarely the deal-breaker. That is especially true with competitively priced open-weights models available through OpenRouter and Azure’s enterprise-friendly OpenAI pricing. The main exception seems to be the Claude family of models, which are excellent but expensive enough that they must be used judiciously. For most teams I talk with, the focus is less on base cost and more on diligently monitoring and optimizing their existing LLM usage.

Keeping Your Data Yours

The ability to run models entirely within organizational boundaries fundamentally changes the security equation for regulated industries. Hospitals deploying Meerkat-8B for patient symptom analysis ensure that protected health information never traverses external networks. European banks running Gemma-2B within their OpenShift clusters satisfy stringent ECB audit requirements without compromising transaction data sovereignty. Defense contractors maintain completely air-gapped deployments for mission-critical systems, which insulates them from supply chain disruptions that could cripple cloud-dependent alternatives. This local control extends beyond compliance checkboxes: it protects intellectual property and preserves competitive advantages that would evaporate if sensitive data flowed through external APIs.

Counterpoint: the trade-off involves assuming the full burden of infrastructure management, security patching, and GPU orchestration that cloud providers typically handle. Proprietary LLM providers are also starting to close this gap. Google, for instance, has announced that its Gemini models will be available for local deployment through its Google Distributed Cloud platform. This solution offers a fully managed on-premise cloud that can even be run in a completely air-gapped configuration.

From Idea to Production, Faster

The smaller size of SLMs allows iteration cycles that are orders of magnitude faster than with LLMs. Fine-tuning a model to adapt to new data, enforce strict JSON output, or learn domain-specific terminology can be done in GPU-hours instead of weeks. Parameter-efficient methods (e.g., LoRA) and fine-tuning services put this within reach of small teams. This agility is crucial in production environments where system requirements are constantly evolving.
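
Part of why parameter-efficient methods are so cheap shows up in the parameter count. LoRA freezes a pretrained weight matrix W and learns a low-rank update. The effective weight becomes W + (alpha / r) · B·A, where B is d_out × r and A is r × d_in. The sketch below counts trainable parameters for one square projection matrix. The 4096 dimension and rank 8 are illustrative choices, not settings from any particular model. It also applies a toy update in plain Python to show the mechanics. This is not the API of any fine-tuning library.

```python
def full_finetune_params(d_out: int, d_in: int) -> int:
    """Trainable parameters when updating the whole d_out x d_in matrix."""
    return d_out * d_in

def lora_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters for LoRA factors B (d_out x r) and A (r x d_in)."""
    return rank * (d_out + d_in)

def matmul(x: list[list[float]], y: list[list[float]]) -> list[list[float]]:
    """Plain-Python matrix product, sufficient for a toy example."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*y)] for row in x]

def lora_effective_weight(w: list[list[float]], b: list[list[float]],
                          a: list[list[float]], alpha: float, rank: int) -> list[list[float]]:
    """Return W + (alpha / rank) * (B @ A); W itself stays frozen."""
    delta = matmul(b, a)
    scale = alpha / rank
    return [[w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
            for w_row, d_row in zip(w, delta)]

d, r = 4096, 8  # illustrative hidden size and LoRA rank
full, lora = full_finetune_params(d, d), lora_params(d, d, r)
print(f"full: {full:,}  LoRA r={r}: {lora:,}  ({100 * lora / full:.2f}% of full)")

# Toy 2x2 example with rank 1: B is 2x1, A is 1x2.
W = [[1.0, 0.0], [0.0, 1.0]]
B = [[1.0], [2.0]]
A = [[0.5, 0.5]]
print(lora_effective_weight(W, B, A, alpha=2.0, rank=1))
```

For a 4096 × 4096 projection at rank 8, LoRA trains 65,536 parameters instead of about 16.8 million, under 0.4% of the full matrix. That gap is a large part of why adaptation runs fit in GPU-hours.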

Counterpoint: the catch is that fine-tuning and post-training often aren't optional—many small models fail completely on structured tasks without customization, adding engineering overhead and requiring high-quality fine-tuning datasets that may be scarce or expensive to create. What appears as flexibility often becomes mandatory complexity.
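
Before committing to fine-tuning, it helps to measure how often a candidate small model actually produces usable structured output. The following is a minimal harness. It assumes the model's raw text responses have already been collected, and it treats "valid" as parseable JSON containing a set of required keys. The key names and sample responses are hypothetical.

```python
import json

REQUIRED_KEYS = {"entity", "type", "confidence"}  # hypothetical output schema

def is_valid_output(raw: str, required: set[str] = REQUIRED_KEYS) -> bool:
    """True if `raw` parses as a JSON object containing every required key."""
    try:
        parsed = json.loads(raw)
    except json.JSONDecodeError:
        return False
    return isinstance(parsed, dict) and required.issubset(parsed)

def validity_rate(outputs: list[str]) -> float:
    """Fraction of responses that meet the structured-output contract."""
    if not outputs:
        return 0.0
    return sum(is_valid_output(o) for o in outputs) / len(outputs)

# Hypothetical raw responses from a small model.
samples = [
    '{"entity": "ACME Corp", "type": "ORG", "confidence": 0.93}',
    'Sure! Here is the JSON: {"entity": "ACME Corp"}',   # prose wrapper: fails
    '{"entity": "Berlin", "type": "LOC"}',                # missing key: fails
    '{"entity": "Q3", "type": "DATE", "confidence": 0.71}',
]
print(f"valid: {validity_rate(samples):.0%}")
```

A low rate on a representative sample is a signal that customization will be needed. Rerunning the same check after fine-tuning shows whether the extra engineering paid off.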

Building with AI Legos

SLMs fit neatly into service-oriented designs. Instead of a monolith, you compose a system from simple, reliable pieces, such as an entity extractor, a sentiment rater, and a compliance checker, each fine-tuned for its niche and scaled independently. A financial services firm, for example, could chain these three components into a single processing pipeline in which each one does one thing exceptionally well.
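
The composition pattern can be sketched as a chain of small, independently replaceable stages. In this sketch, each stage is a plain function standing in for a call to a separately served, fine-tuned SLM. The stage names, keyword rules, and record fields are hypothetical placeholders for illustration and do not represent a real model API.

```python
from dataclasses import dataclass, field
from typing import Callable

@dataclass
class Document:
    text: str
    entities: list[str] = field(default_factory=list)
    sentiment: str = "unknown"
    compliance_flags: list[str] = field(default_factory=list)

# Each stage stands in for one specialized model behind its own endpoint.
Stage = Callable[[Document], Document]

def extract_entities(doc: Document) -> Document:
    # Hypothetical placeholder for an entity-extraction SLM: capitalized words.
    doc.entities = [w.strip(".,") for w in doc.text.split() if w[:1].isupper()]
    return doc

def rate_sentiment(doc: Document) -> Document:
    # Hypothetical placeholder for a sentiment SLM: simple keyword match.
    negative = {"loss", "default", "decline"}
    doc.sentiment = "negative" if negative & set(doc.text.lower().split()) else "neutral"
    return doc

def check_compliance(doc: Document) -> Document:
    # Hypothetical placeholder for a compliance-checking SLM.
    if "guaranteed" in doc.text.lower():
        doc.compliance_flags.append("promissory language")
    return doc

def run_pipeline(doc: Document, stages: list[Stage]) -> Document:
    """Apply stages in order; any stage can be swapped or scaled on its own."""
    for stage in stages:
        doc = stage(doc)
    return doc

result = run_pipeline(
    Document("Acme reported a loss but promised guaranteed returns to Berlin investors."),
    [extract_entities, rate_sentiment, check_compliance],
)
print(result)
```

Because every stage shares the same signature, one specialist can be retrained or replaced without touching the others.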

Counterpoint: while elegant in theory, this approach introduces significant orchestration complexity in practice. Managing the routing logic, inter-model communication, and version dependencies across a fleet of specialized models is a non-trivial engineering challenge. For smaller teams, the overhead required to build and maintain such a distributed system can sometimes outweigh the efficiency gains it promises.

Beyond the Binary: A Tiered Approach to AI

The debate between large and small models is evolving beyond simple capability trade-offs. Jakub Zavrel of Zeta Alpha recently noted that we've reached an inflection point where frontier models are "good enough" for multi-agent systems. The new bottleneck is not raw model capability, but architecture and specialization — the ability to break down complex problems into modular, specialized components.

This shift makes a powerful case for an "SLM-first" architecture. Instead of relying on a single monolithic model, systems can be composed of a fleet of efficient SLMs, each an expert in its narrow domain. A more powerful and expensive LLM is reserved only for tasks requiring complex, open-domain reasoning.
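
The SLM-first pattern can be sketched minimally as follows. A small specialist handles the task when one exists for it, and the system escalates to the large model when no specialist matches or the specialist reports low confidence. The specialist registry, the confidence scores, and the 0.8 threshold are all hypothetical. In practice, the confidence signal might come from a classifier, token log-probabilities, or a validation check.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Result:
    answer: str
    confidence: float  # 0.0-1.0, as reported by the model or a validator
    model: str

# Hypothetical specialists keyed by task type; each returns a Result.
Specialist = Callable[[str], Result]

def classify_invoice(text: str) -> Result:
    # Stand-in for a fine-tuned classification SLM.
    return Result("invoice", 0.95 if "invoice" in text.lower() else 0.40, "slm-invoice")

def call_large_model(text: str) -> Result:
    # Stand-in for an expensive general-purpose LLM call.
    return Result(f"LLM answer for: {text[:30]}", 0.90, "llm-general")

SPECIALISTS: dict[str, Specialist] = {"doc_classification": classify_invoice}
CONFIDENCE_THRESHOLD = 0.8  # assumption; tune per task

def route(task_type: str, text: str) -> Result:
    """Prefer a specialist SLM; escalate to the LLM when none fits or confidence is low."""
    specialist = SPECIALISTS.get(task_type)
    if specialist is not None:
        result = specialist(text)
        if result.confidence >= CONFIDENCE_THRESHOLD:
            return result
    return call_large_model(text)

print(route("doc_classification", "Invoice #123 from Acme").model)   # slm-invoice
print(route("doc_classification", "Meeting notes, Tuesday").model)   # llm-general
print(route("open_ended_analysis", "Compare three vendor strategies").model)  # llm-general
```

Keeping escalation as an explicit policy, rather than burying it in each specialist, makes it easy to audit how often the expensive path is taken.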

For teams that are LLM-first today, the migration path is pragmatic: log your workflows, cluster recurring tasks, and fine-tune small specialists to handle them. Route tasks intelligently by policy and measure three key metrics — cost per action, latency to decision, and task reliability. Done right, your systems will become cheaper, faster, and more robust without sacrificing the option to escalate when the job truly demands it.
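
The three metrics can be computed from the same workflow logs. The following sketch assumes each log entry records the handling model, the cost in USD, the wall-clock latency in milliseconds, and whether the task ultimately succeeded. The field names, model labels, and numbers are hypothetical. Grouping by model makes it straightforward to compare specialists against the large-model baseline before and after a migration.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import median

@dataclass
class ActionLog:
    model: str
    cost_usd: float
    latency_ms: float
    succeeded: bool

def summarize(logs: list[ActionLog]) -> dict[str, dict[str, float]]:
    """Cost per action, median latency to decision, and task reliability per model."""
    groups: dict[str, list[ActionLog]] = defaultdict(list)
    for entry in logs:
        groups[entry.model].append(entry)
    summary = {}
    for model, entries in groups.items():
        n = len(entries)
        summary[model] = {
            "cost_per_action": sum(e.cost_usd for e in entries) / n,
            "median_latency_ms": median(e.latency_ms for e in entries),
            "reliability": sum(e.succeeded for e in entries) / n,
        }
    return summary

# Hypothetical log entries.
logs = [
    ActionLog("llm-general", 0.0080, 900.0, True),
    ActionLog("llm-general", 0.0080, 1100.0, True),
    ActionLog("slm-invoice", 0.0006, 120.0, True),
    ActionLog("slm-invoice", 0.0006, 80.0, False),
    ActionLog("slm-invoice", 0.0006, 100.0, True),
    ActionLog("slm-invoice", 0.0006, 140.0, True),
]
for model, metrics in summarize(logs).items():
    print(model, metrics)
```

In this toy data, the specialist is far cheaper and faster but less reliable (75% vs 100%). That is exactly the kind of gap that should inform the routing policy and the escalation threshold.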

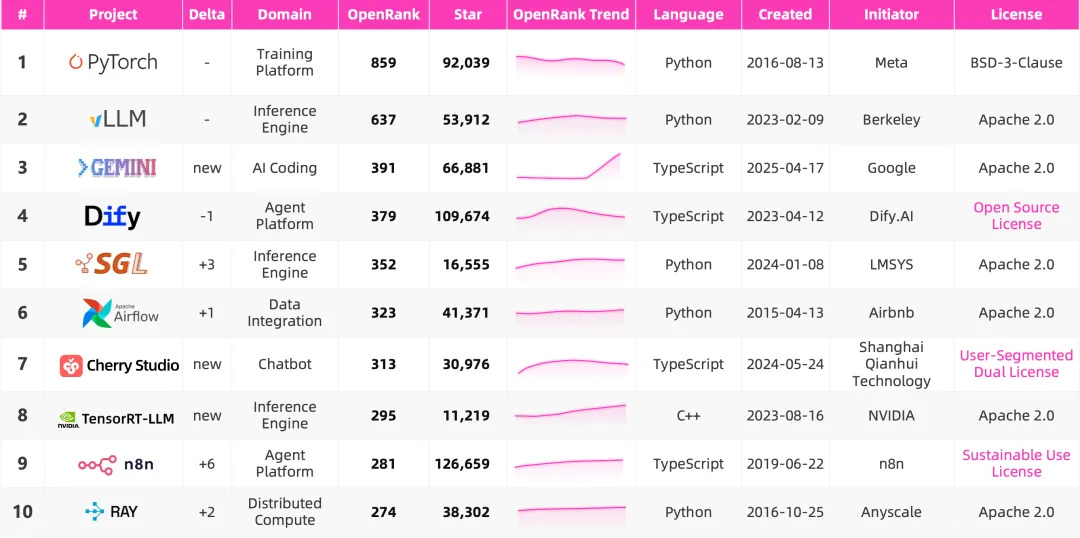

Top 10 Open-Source Projects in the Large Model Ecosystem

This leaderboard ranks the ten most influential open-source projects in the AI development ecosystem using OpenRank, a metric that measures community collaboration rather than simple popularity indicators like stars. The list spans the entire technology stack. At the foundation are infrastructure projects such as PyTorch for training and Ray for distributed compute, followed by high-performance inference engines like vLLM, SGLang, and TensorRT-LLM. At the application level, it features agent platforms and development tools, including Dify, n8n, and Gemini CLI, which are predominantly built with TypeScript, in contrast to the Python-based infrastructure projects. The influence of academic research is evident: three key projects, vLLM, Ray, and SGLang (SGL), originated in UC Berkeley’s Sky Computing Lab and RISELab, showing a direct path from academic innovation to production-ready tools.

Ben Lorica edits the Gradient Flow newsletter and hosts the Data Exchange podcast. He helps organize the AI Conference, the AI Agent Conference, and the Applied AI Summit, and he serves as the Strategic Content Chair for AI at the Linux Foundation. You can follow him on LinkedIn, X, Mastodon, Reddit, Bluesky, YouTube, or TikTok. This newsletter is produced by Gradient Flow.