Kubernetes - Basics

Overview

Spring Boot development with Docker and Kubernetes.

Github: https://github.com/gitorko/project61

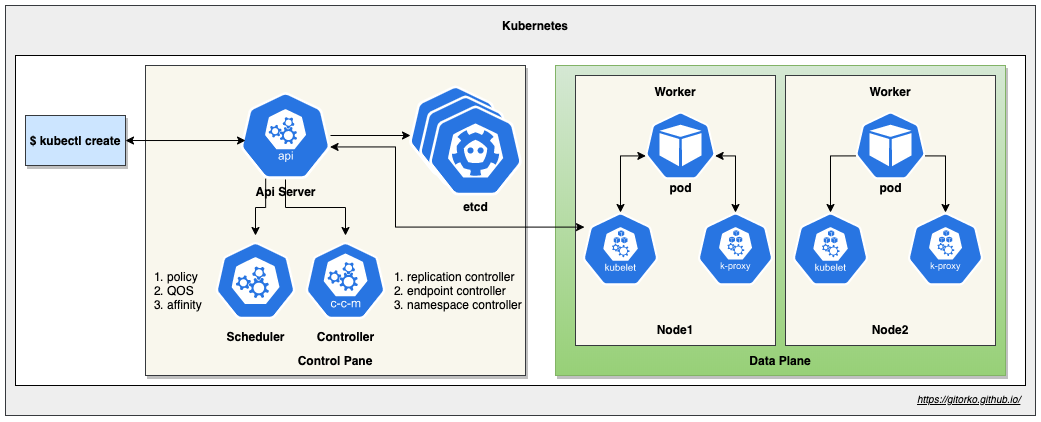

Kubernetes

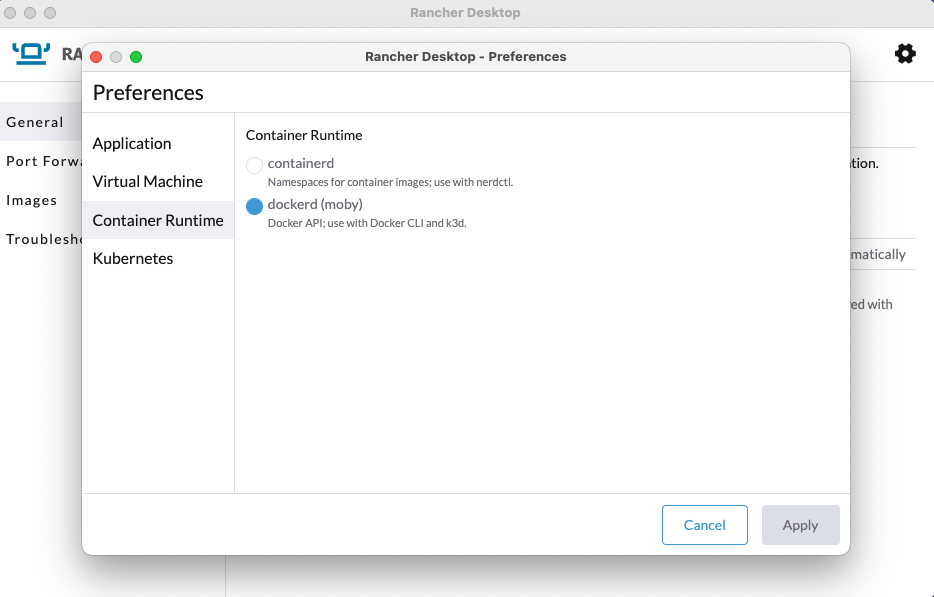

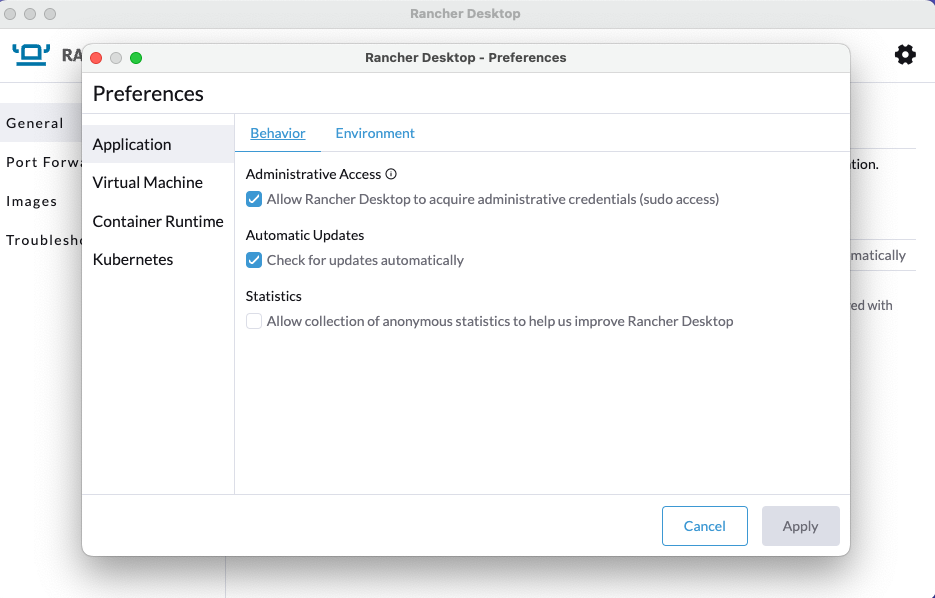

Rancher Desktop

Rancher Desktop allows you to run Kubernetes on your local machine. It's free and open source.

In the settings, disable Traefik and select dockerd as the container runtime.

If you get the error below when you run kubectl, it is most likely caused by a kubeconfig file left behind by an earlier Docker Desktop installation.

    I0804 20:09:34.857149 37711 versioner.go:58] Get "https://kubernetes.docker.internal:6443/version?timeout=5s": x509: certificate signed by unknown authority
    Unable to connect to the server: x509: certificate signed by unknown authority

Delete the .kube folder and restart Rancher Desktop.

    rm -rf ~/.kube

Docker Desktop

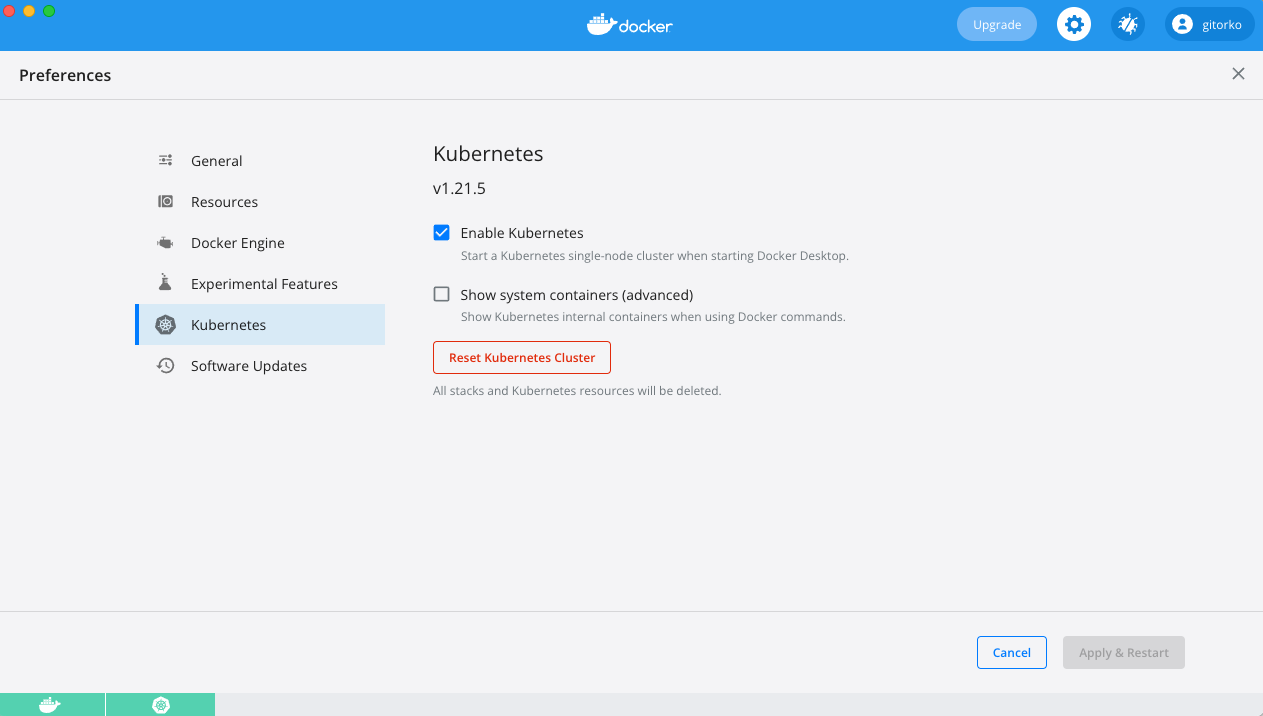

Docker Desktop allows you to run Kubernetes on your local machine. Check the latest licensing terms, as they have changed.

Once Kubernetes is running, check kubectl.

    export KUBECONFIG=~/.kube/config
    kubectl version

Kubernetes Dashboard

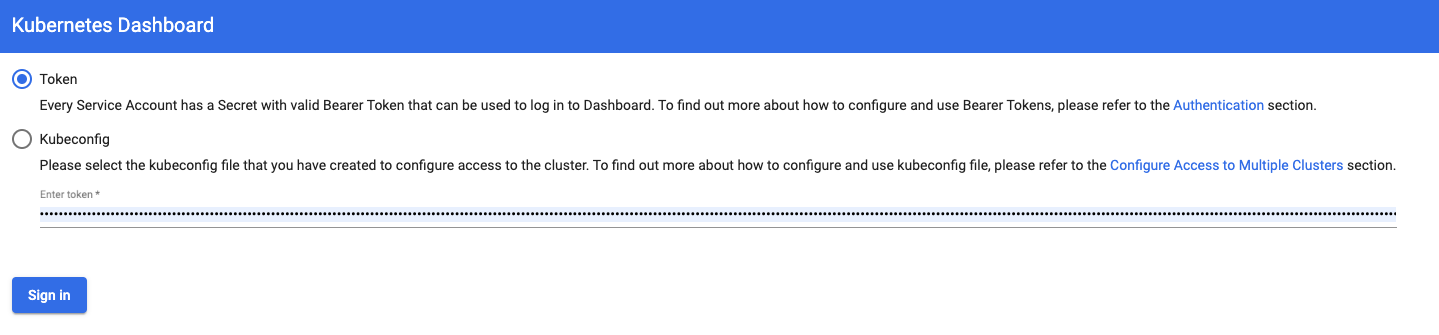

If you want to visualize the Kubernetes infrastructure, you can install the dashboard UI.

https://kubernetes.io/docs/tasks/access-application-cluster/web-ui-dashboard/

    kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/master/aio/deploy/recommended.yaml
    kubectl proxy

Open the dashboard URL in a browser.

To get the token to log in, run the commands below.

    TOKEN=$(kubectl -n kube-system describe secret default | grep -i 'token:' | awk '{print $2}')
    echo "${TOKEN}"
    kubectl config set-credentials docker-for-desktop --token="${TOKEN}"

Now provide the token and log in.

Clean up

    kubectl --namespace kube-system get all
    kubectl delete -f https://raw.githubusercontent.com/kubernetes/dashboard/master/aio/deploy/recommended.yaml

Build & Deployment

Build the project

    git clone https://github.com/gitorko/project61.git
    cd project61
    ./gradlew clean build

Docker

There are two ways to build the Docker image: run the docker build command or use the Google Jib plugin.

To build via the docker build command:

    docker build -f k8s/Dockerfile --force-rm -t project61:1.0.0 .
    docker images | grep project61

To build via the Jib plugin, run the command below. This way, building the Docker image becomes part of the build process.

    ./gradlew jibDockerBuild

Test if the Docker image is working.

    docker rm project61
    docker run -p 9090:9090 --name project61 project61:1.0.0

http://localhost:9090/api/time
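The application may need a few seconds to start, so the first request to the URL above can fail. A minimal sketch of a retry helper follows; the function name `retry` and its argument order are assumptions made for illustration and are not part of the project.

```shell
# retry MAX_ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
# Runs COMMAND until it succeeds or MAX_ATTEMPTS attempts have been made.
# Hypothetical helper, not part of project61.
retry() {
  max_attempts=$1
  delay=$2
  shift 2
  attempt=1
  while [ "$attempt" -le "$max_attempts" ]; do
    if "$@"; then
      return 0
    fi
    attempt=$((attempt + 1))
    # Pause between attempts, but not after the final one.
    if [ "$attempt" -le "$max_attempts" ]; then
      sleep "$delay"
    fi
  done
  return 1
}

# Example (needs the container to be running, so it is left commented out):
# retry 30 2 curl -fsS http://localhost:9090/api/time
```

The command to run is passed as arguments, so the same helper can wrap curl, kubectl or any other check.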

Daemon mode

    docker run -d -p 9090:9090 --name project61 project61:1.0.0
    docker image prune

Kubernetes Basics

Now let's deploy the project on a Kubernetes cluster. First check that the kubectl commands work.

    kubectl version
    kubectl config get-contexts
    kubectl config use-context docker-desktop
    kubectl config set-context --current --namespace=default
    kubectl get nodes
    kubectl get ns
    kubectl get all
    kubectl cluster-info

We will now deploy just the Docker image to Kubernetes without any YAML files and access the API using port forwarding. You will rarely need to do this, as most Kubernetes deployments are done via YAML.

    kubectl run project61-k8s --image project61:1.0.0 --image-pull-policy=Never --port=9090
    kubectl port-forward project61-k8s 9090:9090

http://localhost:9090/api/time

You can also create a service to access the pod. Get the port from the NodePort. Again, this is only to understand the fundamentals; a YAML file will be used later.

    kubectl expose pod project61-k8s --type=NodePort
    kubectl get -o jsonpath="{.spec.ports[0].nodePort}" services project61-k8s

Substitute the port returned by the last command and test this API: http://localhost:

Check the pods, services and deployments.

    kubectl get all

You can access the bash terminal of the pod.

    kubectl get pods
    kubectl exec -it project61-k8s -- /bin/bash
    ls

Clean up.

    kubectl delete pod project61-k8s
    kubectl delete service project61-k8s
    kubectl get all

Kubernetes Yaml

Now we will deploy via the Kubernetes YAML file.

    kubectl apply -f k8s/Deployment.yaml --dry-run=client --validate=true
    kubectl apply -f k8s/Deployment.yaml

http://localhost:9090/api/time

Scale the deployment

    kubectl scale deployment project61-k8s --replicas=3

Look at the logs

    kubectl logs -f deployment/project61-k8s --all-containers=true --since=10m

Clean up

    kubectl delete -f k8s/Deployment.yaml

Helm

Now let's deploy the same project via Helm charts.

    brew install helm

    helm version
    helm install project61 mychart
    helm list
    kubectl get pod,svc,deployment

Get the URL and invoke the API.

    curl http://$(kubectl get svc/project61-k8s -o jsonpath='{.status.loadBalancer.ingress[0].hostname}'):9090/api/time

http://localhost:9090/api/time

Clean up

    helm uninstall project61

Debugging

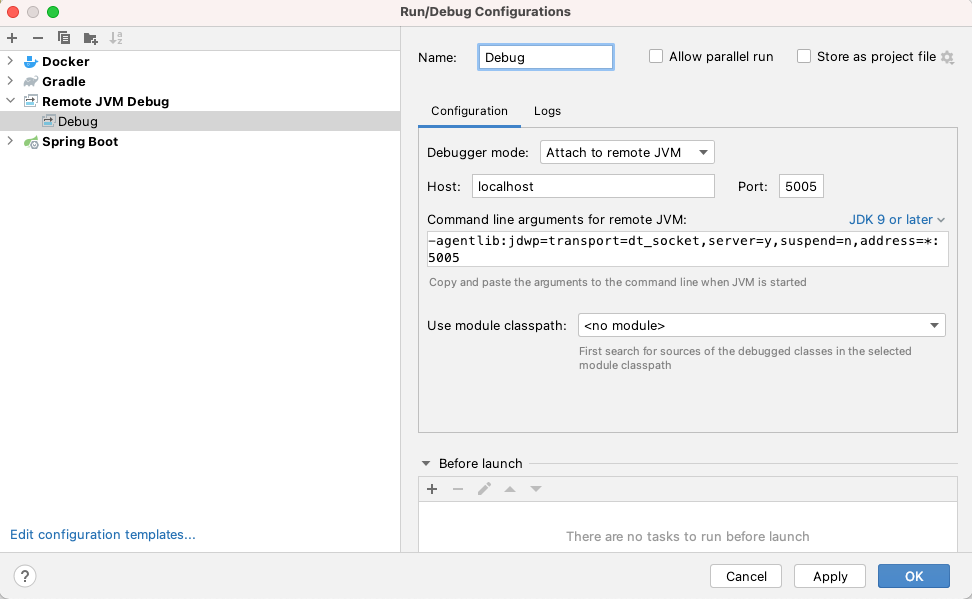

To attach a debugger to the application, follow the steps below.

Docker Debug

To debug the Docker image, start the container with the debug port 5005 published.

    docker stop project61
    docker rm project61
    docker run -p 9090:9090 -p 5005:5005 --name project61 project61:1.0.0

Enable remote JVM debug in IntelliJ.

    -agentlib:jdwp=transport=dt_socket,server=y,suspend=n,address=*:5005

http://localhost:9090/api/time

Now when you request the api, the debug breakpoint in intellij is hit.
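The docker run command above only publishes port 5005; it relies on the JVM inside the image already starting with the JDWP agent. If it does not, one possible way to switch it on without rebuilding is the `JAVA_TOOL_OPTIONS` environment variable, which the JVM reads at startup. The sketch below builds the agent string for a given port; the helper name `jdwp_option` is an assumption made for illustration.

```shell
# Print the JDWP agent option for the given port.
# suspend=n lets the application start without waiting for a debugger.
# Hypothetical helper, not part of project61.
jdwp_option() {
  printf '%s%s\n' '-agentlib:jdwp=transport=dt_socket,server=y,suspend=n,address=*:' "$1"
}

# Example (needs Docker and the project61 image, so it is left commented out):
# docker run -e JAVA_TOOL_OPTIONS="$(jdwp_option 5005)" \
#   -p 9090:9090 -p 5005:5005 --name project61 project61:1.0.0
```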

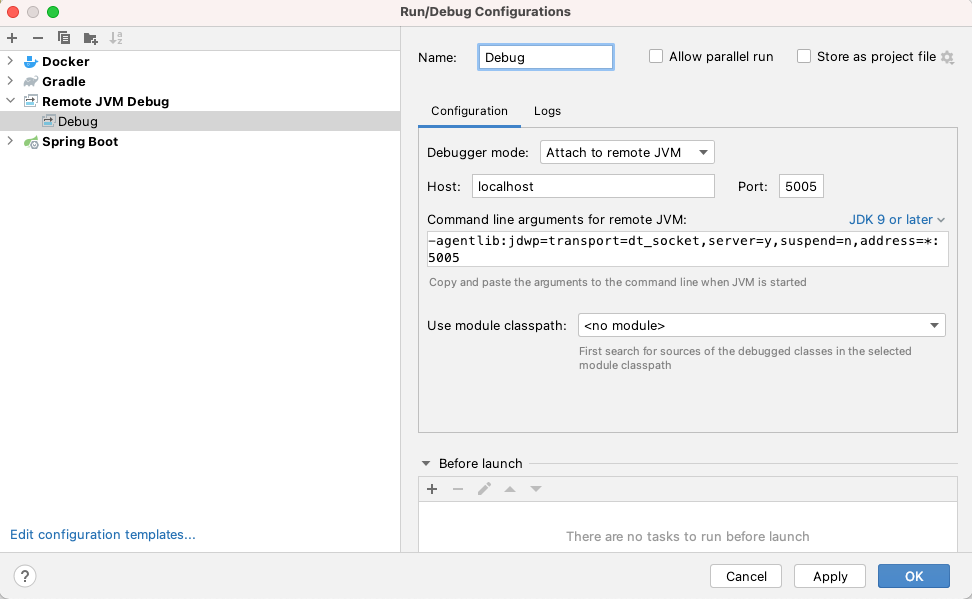

Kubernetes Debug

To debug the Kubernetes pod, start port forwarding to port 5005.

    kubectl get pod
    kubectl port-forward pod/<POD_NAME> 5005:5005

Now when you request the API, the debug breakpoint in IntelliJ is hit.
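Pods created by a deployment get a generated suffix, so `<POD_NAME>` has to be looked up each time. A small sketch that picks the first pod whose name starts with a given prefix from `kubectl get pods --no-headers` output; the helper name `first_pod` is an assumption made for illustration.

```shell
# Read `kubectl get pods --no-headers` style output from stdin and print
# the name of the first pod whose name starts with PREFIX.
# Hypothetical helper, not part of project61.
first_pod() {
  awk -v prefix="$1" 'index($1, prefix) == 1 { print $1; exit }'
}

# Example (needs a running cluster, so it is left commented out):
# POD_NAME=$(kubectl get pods --no-headers | first_pod project61-k8s)
# kubectl port-forward "pod/${POD_NAME}" 5005:5005
```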

Telepresence

To debug the Kubernetes pod you can also use Telepresence. It swaps the pod running on Kubernetes with a proxy pod that redirects traffic to your local setup.

Install telepresence

    sudo curl -fL https://app.getambassador.io/download/tel2/darwin/amd64/latest/telepresence -o /usr/local/bin/telepresence
    sudo chmod a+x /usr/local/bin/telepresence

Start the project61 application in debug mode in IntelliJ and change the port to 9095 in the application YAML, since the debugging happens against the local instance.

Run the telepresence command below. It swaps the Kubernetes pod with a proxy pod and redirects all requests on 9090 to 9095.

    telepresence --namespace=default --swap-deployment project61-k8s --expose 9095:9090 --run-shell
    kubectl get pods

Note that the port here is 9090, the Kubernetes port for incoming requests. Telepresence redirects these to port 9095, where your local instance is running.

http://localhost:9090/api/time

Now when you request the api, the debug breakpoint in intellij is hit.

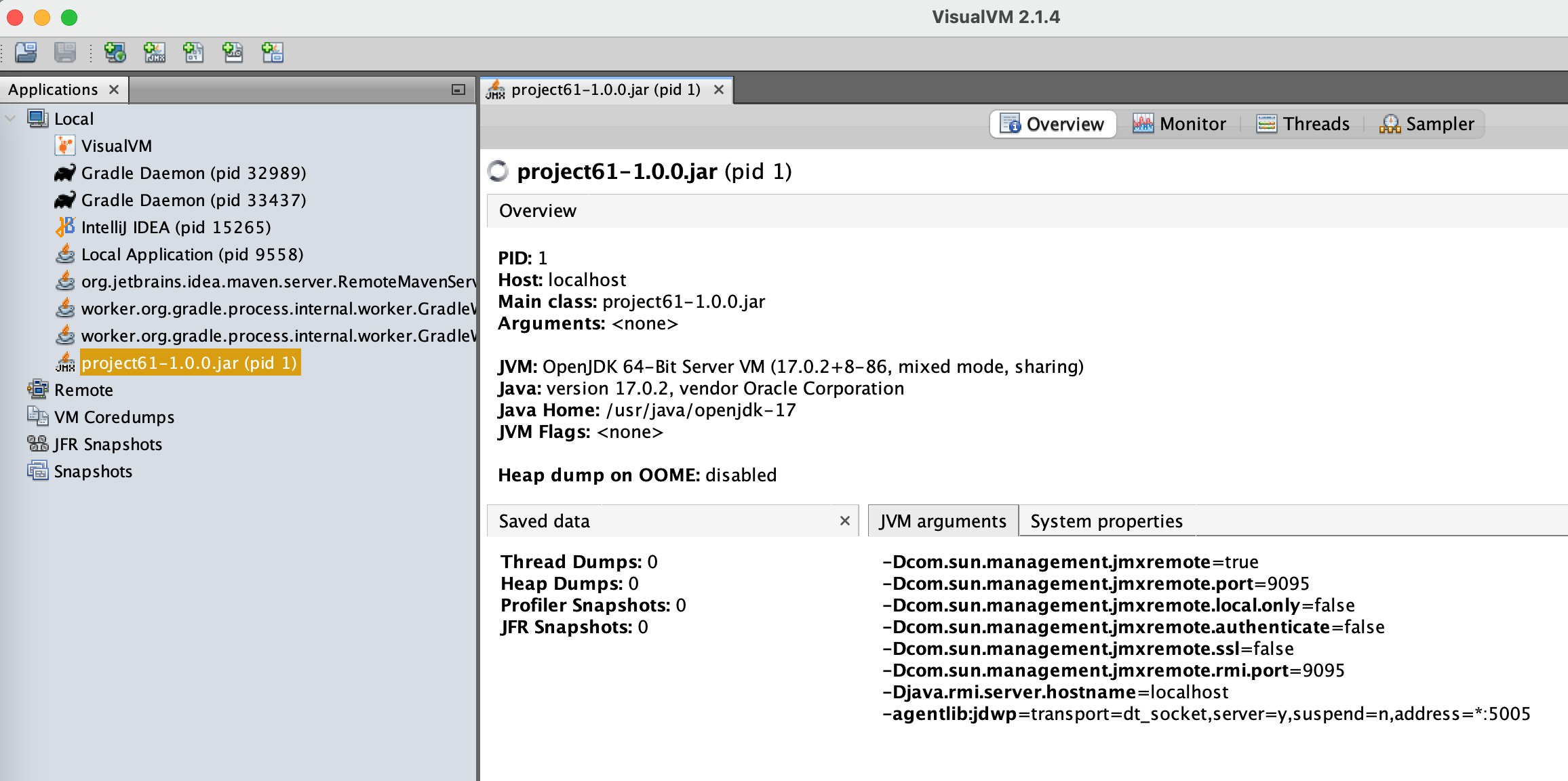

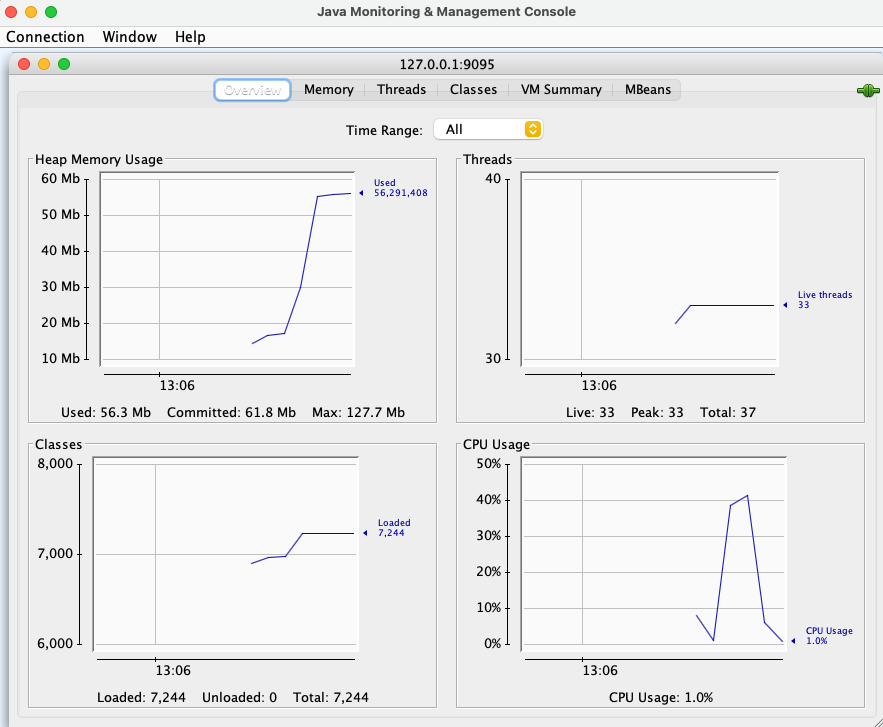

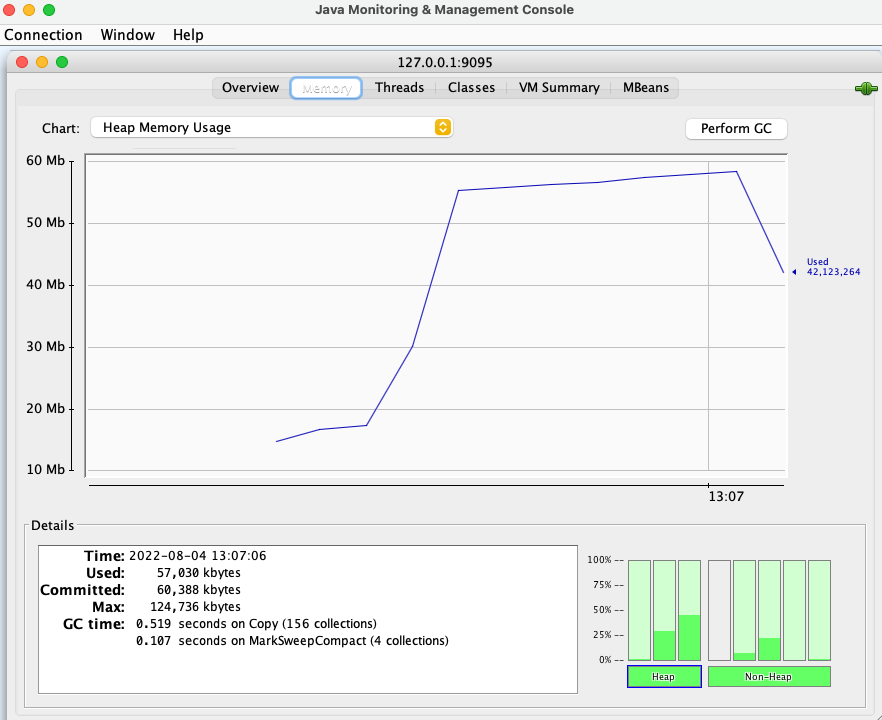

JVM Monitoring

To hook up JConsole or VisualVM:

Docker

To connect to the JMX port, start the Docker image with port 9095 published. The Docker image already has the settings to enable JMX.

    docker stop project61
    docker rm project61
    docker run -p 9090:9090 -p 9095:9095 --name project61 project61:1.0.0

Kubernetes

To connect to the JMX port, start port forwarding. The Docker image already has the settings to enable JMX.

    kubectl get pod
    kubectl port-forward pod/<POD_NAME> 9095:9095

VisualVM

Connect to the port

    http://localhost:9095

JConsole

    jconsole 127.0.0.1:9095
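The JMX settings that the image is said to contain are not shown in this post. As a rough sketch, these are the standard JVM system properties typically used to expose JMX on a single port for local debugging; whether the project uses exactly these values is an assumption, and the helper name `jmx_flags` is hypothetical.

```shell
# Print JVM flags that expose JMX on PORT without authentication or TLS.
# Intended for local debugging only. Hypothetical helper, not part of project61.
jmx_flags() {
  port=$1
  printf '%s\n' \
    "-Dcom.sun.management.jmxremote" \
    "-Dcom.sun.management.jmxremote.port=${port}" \
    "-Dcom.sun.management.jmxremote.rmi.port=${port}" \
    "-Dcom.sun.management.jmxremote.authenticate=false" \
    "-Dcom.sun.management.jmxremote.ssl=false" \
    "-Djava.rmi.server.hostname=127.0.0.1"
}

# Example: join the flags into one line for JAVA_TOOL_OPTIONS.
# JAVA_TOOL_OPTIONS="$(jmx_flags 9095 | tr '\n' ' ')"
```

Setting the RMI port to the same value as the JMX port means only one port has to be published or forwarded, and pointing the RMI hostname at 127.0.0.1 makes the client connect back through the forwarded local port.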

Jenkins CI/CD

Fork the GitHub project61 repo so you have your own GitHub project to push code to, then clone it.

https://github.com/gitorko/project61

Download the Jenkins war file and run the command below.

    java -jar jenkins.war --httpPort='8088'

Follow the default steps to install plugins and configure Jenkins. The default password is printed in the console log.

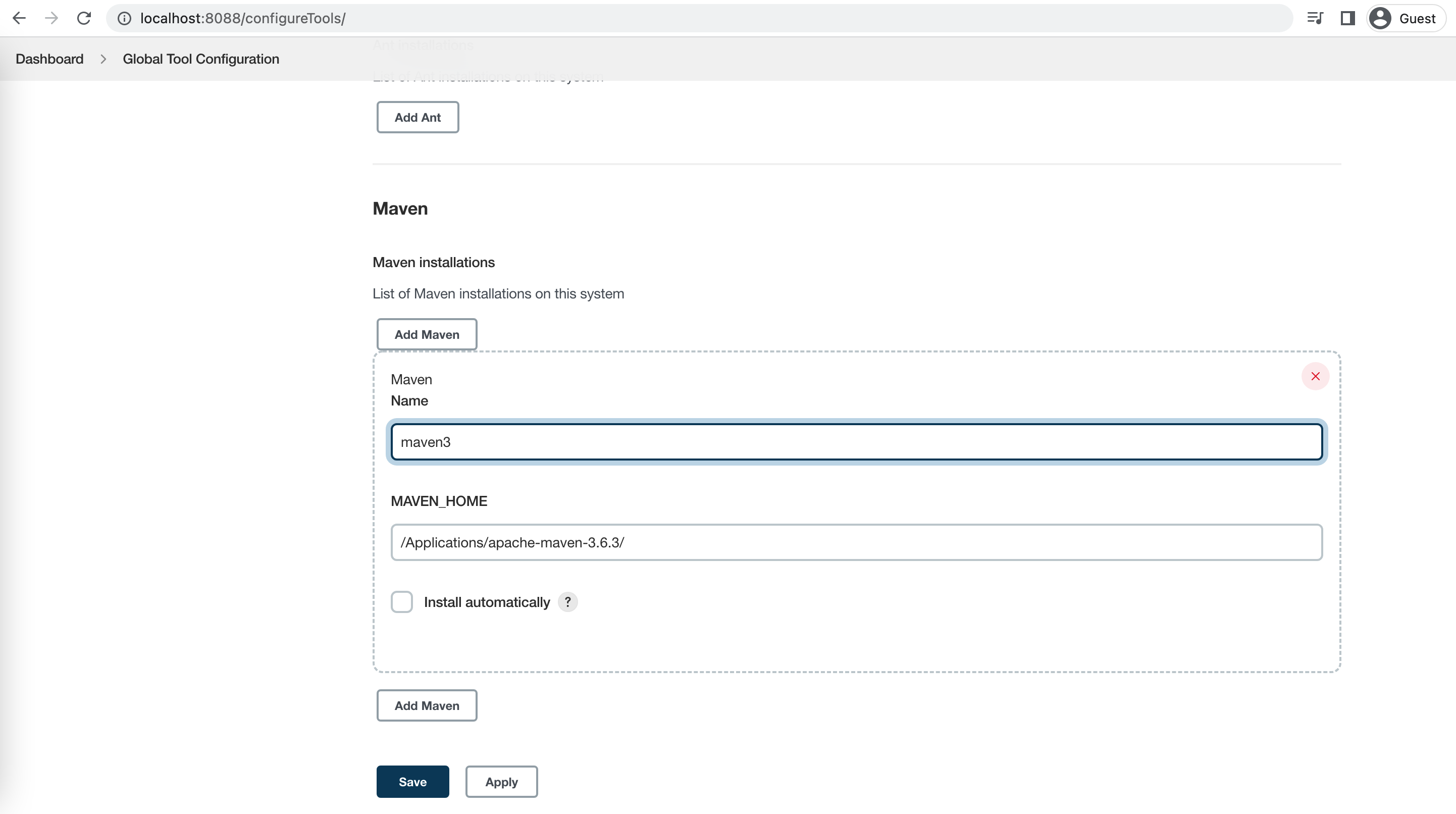

Go to Global Tool Configuration and add Java 17 and Maven.

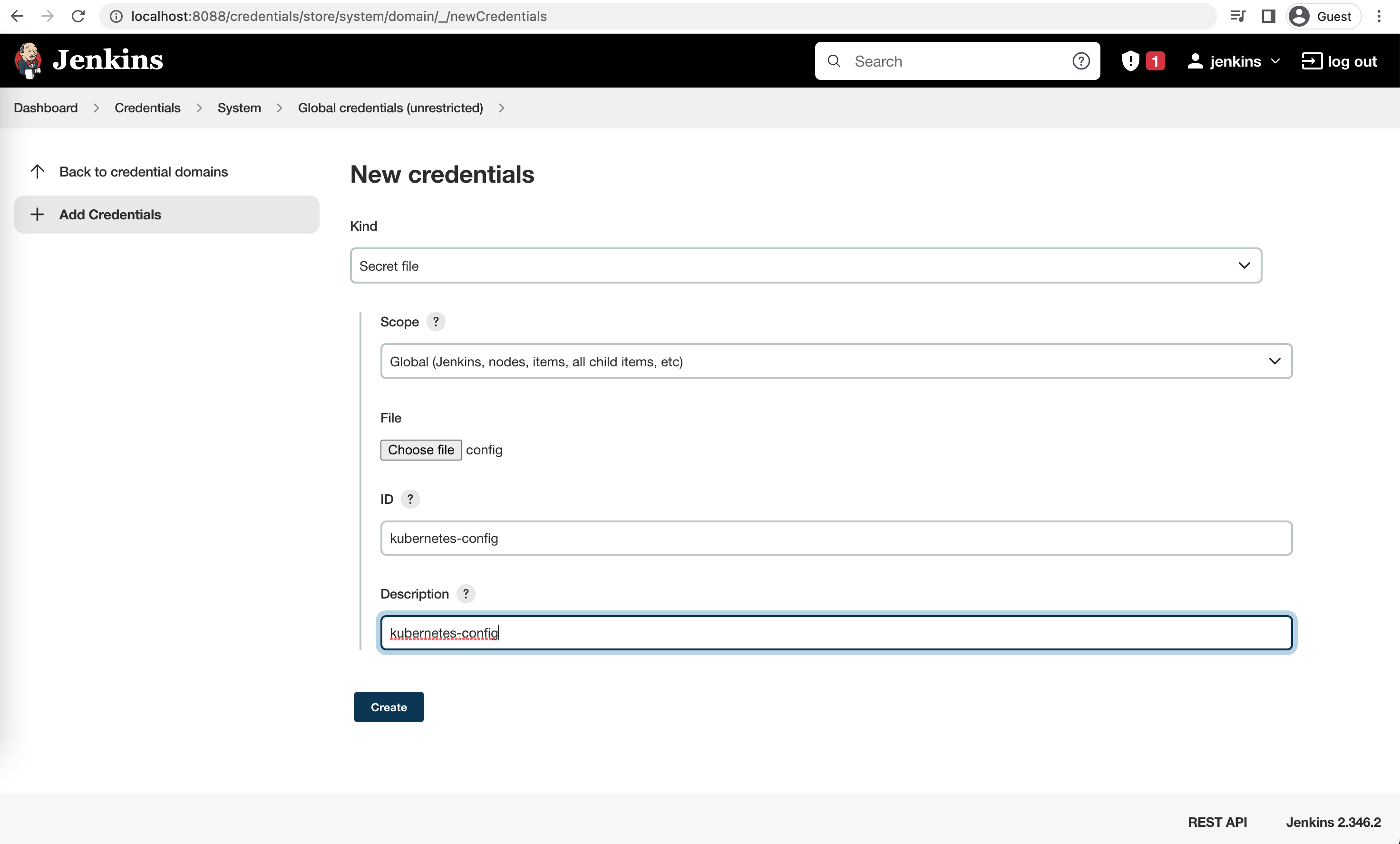

Add the Kubernetes config as a credential.

    /Users/$USER/.kube/config

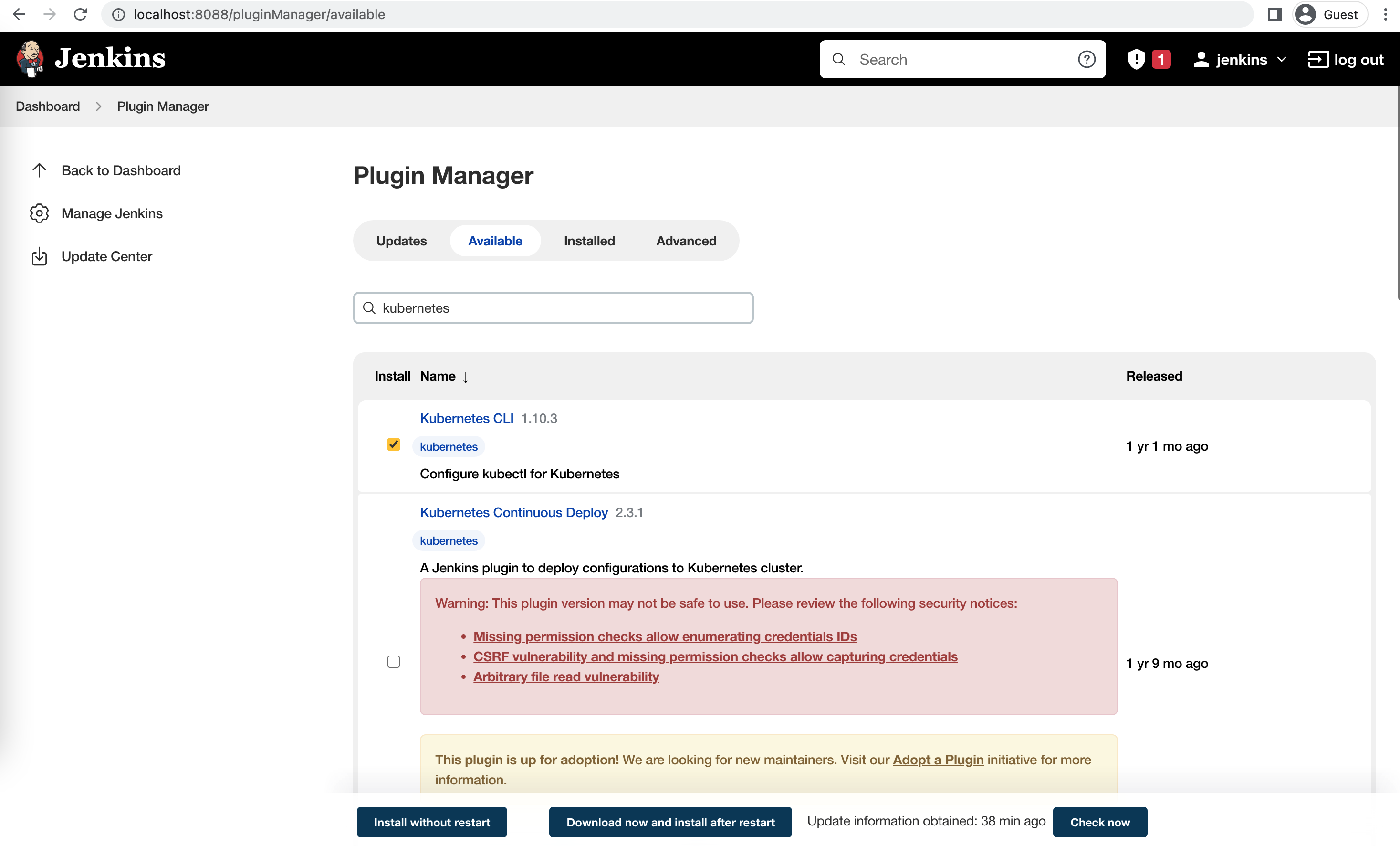

Install the kubernetes CLI plugin

https://plugins.jenkins.io/kubernetes-cli/

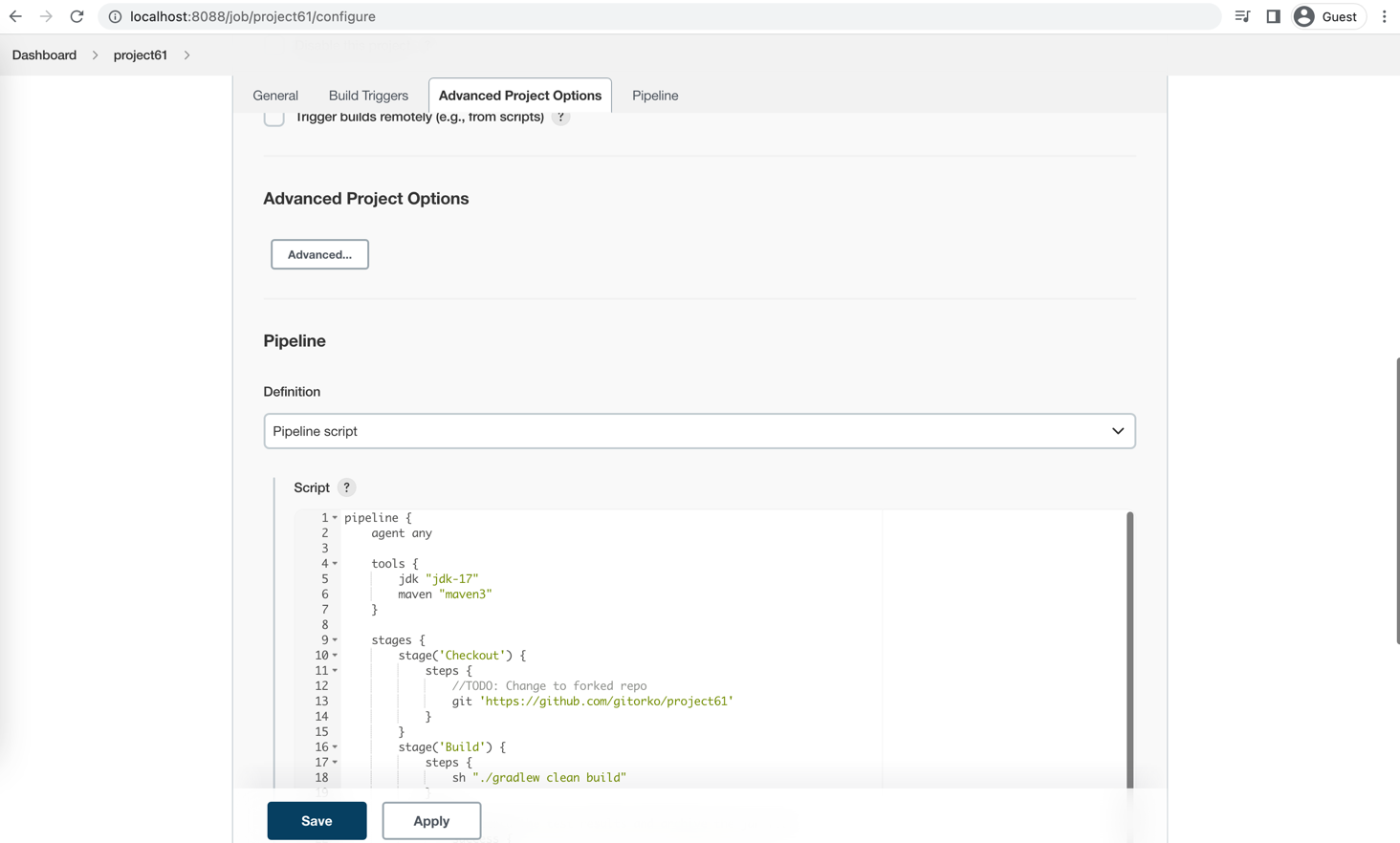

Then create a pipeline item and copy in the content of the Jenkinsfile. Enter the GitHub URL of your forked project. Save and run the job.

    pipeline {
        agent any

        tools {
            jdk "jdk-17"
            maven "maven3"
        }

        stages {
            stage('Checkout') {
                steps {
                    //TODO: Change to forked repo
                    git url: 'https://github.com/gitorko/project61', branch: 'master'
                }
            }
            stage('Build') {
                steps {
                    sh "./gradlew clean build"
                }
                post {
                    // record the test results and archive the jar file.
                    success {
                        junit 'build/test-results/test/TEST-*.xml'
                        archiveArtifacts 'build/libs/*.jar'
                    }
                }
            }
            stage('Build Docker Image') {
                steps {
                    sh "./gradlew jibDockerBuild -Djib.to.tags=$BUILD_NUMBER"
                }
                post {
                    // record the test results and archive the jar file.
                    success {
                        junit 'build/test-results/test/TEST-*.xml'
                        archiveArtifacts 'build/libs/*.jar'
                    }
                }
            }
            stage ('Push Docker Image') {
                steps {
                    //TODO: docker hub push
                    echo "Pushing docker image"
                }
            }
            stage('Deploy') {
                steps {
                    withKubeConfig([credentialsId: 'kubernetes-config']) {
                        sh '''
    cat <<EOF | kubectl apply -f -
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: project61-k8s
    spec:
      selector:
        matchLabels:
          app: project61-k8s
      strategy:
        rollingUpdate:
          maxSurge: 1
          maxUnavailable: 1
        type: RollingUpdate
      replicas: 1
      template:
        metadata:
          labels:
            app: project61-k8s
        spec:
          containers:
            - name: project61
              image: project61:$BUILD_NUMBER
              imagePullPolicy: IfNotPresent
              ports:
                - containerPort: 9090
              resources:
                limits:
                  cpu: "1"
                  memory: "500Mi"
    ---
    kind: Service
    apiVersion: v1
    metadata:
      name: project61-k8s
    spec:
      ports:
        - port: 9090
          targetPort: 9090
          name: http
      selector:
        app: project61-k8s
      type: LoadBalancer
    EOF
                        '''
                    }
                }
            }
        }
    }

Each Jenkins job run creates a Docker image tagged with the build number; kubectl terminates the old pod and starts a new one.

    docker images | grep project61
    kubectl get pods -w

Clean up the Docker images, as they consume space.

    docker rmi project61:1
    kubectl delete -f k8s/Deployment.yaml

You can configure a 'GitHub hook trigger for GITScm polling' to deploy when a commit is pushed to GitHub.
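Because every Jenkins run adds an image tagged with its build number, removing images one tag at a time gets tedious. A sketch of a filter that lists every numeric tag except the newest few; the helper name `old_tags` and the idea of keeping the last N builds are assumptions made for illustration.

```shell
# Read numeric image tags from stdin (one per line) and print all of them
# except the KEEP highest, i.e. the candidates for removal.
# Hypothetical helper, not part of project61.
old_tags() {
  keep=$1
  sort -n | awk -v keep="$keep" '
    { tags[NR] = $0 }
    END { for (i = 1; i <= NR - keep; i++) print tags[i] }'
}

# Example (needs Docker, so it is left commented out):
# docker images project61 --format '{{.Tag}}' | grep -E '^[0-9]+$' \
#   | old_tags 3 | xargs -I{} docker rmi project61:{}
```

The grep in the example filters out non-numeric tags such as 1.0.0 so that only build-number tags are considered.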

Resources

Kubernetes Samples

Build a custom nginx image

    docker build -f k8s-manifest/Dockerfile1 --force-rm -t my-nginx:1 .
    docker build -f k8s-manifest/Dockerfile2 --force-rm -t my-nginx:2 .

Create an alias for kubectl as k

    alias k="kubectl"

https://kubernetes.io/docs/reference/kubectl/cheatsheet/

01. Create a simple pod

    k apply -f k8s-manifest/01-create-pod.yaml
    k get all
    k logs pod/counter
    k describe pod/counter
    k delete -f k8s-manifest/01-create-pod.yaml

02. Create nginx pod, use port forward to access

Create an nginx pod and open a shell prompt inside it.

    k apply -f k8s-manifest/02-nginx-pod.yaml
    k get all
    k port-forward pod/nginx 8080:80
    k exec -it pod/nginx -- /bin/sh
    k delete -f k8s-manifest/02-nginx-pod.yaml

03. Create nginx pod with HTML updated by another container in the same pod

    k apply -f k8s-manifest/03-nginx-pod-volume.yaml
    k get pods -w
    kubectl get -o jsonpath="{.spec.ports[0].nodePort}" service/nginx-service
    k delete -f k8s-manifest/03-nginx-pod-volume.yaml

04. Create job

Runs once and stops. The output is kept until you delete the job.

    k apply -f k8s-manifest/04-job.yaml
    k get all
    k delete -f k8s-manifest/04-job.yaml

05. Liveness probe

A liveness probe determines whether a pod is healthy. Here a file is deleted after 30 seconds, which makes the probe fail and the container restart.
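The repository manifest is not reproduced in this post. A manifest with the behaviour described might look like the sketch below, which writes it to a temporary file; the pod name, image and probe timings are assumptions and may differ from `05-liveness-probe.yaml`.

```shell
# Write a sketch of a liveness-probe pod manifest to a temporary file.
# Names, image and timings are assumptions, not copied from the repo.
manifest=$(mktemp)
cat > "$manifest" <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: liveness-demo
spec:
  containers:
    - name: liveness
      image: busybox
      # Create the marker file, then remove it after 30 seconds.
      args: ["/bin/sh", "-c", "touch /tmp/healthy; sleep 30; rm -f /tmp/healthy; sleep 600"]
      livenessProbe:
        exec:
          # Succeeds while the file exists, fails once it is gone.
          command: ["cat", "/tmp/healthy"]
        initialDelaySeconds: 5
        periodSeconds: 5
EOF
echo "$manifest"

# Applying it needs a running cluster, so it is left commented out:
# kubectl apply -f "$manifest"
```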

    k apply -f k8s-manifest/05-liveness-probe.yaml
    k get pods -w
    k delete -f k8s-manifest/05-liveness-probe.yaml

06. Readiness probe

A readiness probe determines when the pod is ready to receive traffic.

    k apply -f k8s-manifest/06-readiness-probe.yaml
    k port-forward pod/nginx 8080:80
    k delete -f k8s-manifest/06-readiness-probe.yaml

07. Cron Job

The cron job runs every minute.

    k apply -f k8s-manifest/07-cron-job.yaml
    k get job.batch -w
    k delete -f k8s-manifest/07-cron-job.yaml

08. Config

Configure a ConfigMap and Secrets.

    k apply -f k8s-manifest/08-config.yaml
    k logs pod/busybox
    k delete -f k8s-manifest/08-config.yaml

ConfigMap as volume

    k apply -f k8s-manifest/08-config-volume.yaml
    k logs -f pod/busybox
    k edit configmap app-setting
    k get configmap app-setting -o yaml
    k exec -it pod/busybox -- /bin/sh

    k delete -f k8s-manifest/08-config-volume.yaml

09. Deployment with Load Balancer

    k apply -f k8s-manifest/09-deployment.yaml
    k get all
    k port-forward service/nginx-service 8080:8080

    k scale deployment.apps/nginx --replicas=0
    k scale deployment.apps/nginx --replicas=3

    k delete -f k8s-manifest/09-deployment.yaml

10. External service

Proxies to an external name.

    k apply -f k8s-manifest/10-external-service.yaml
    k get services
    k delete -f k8s-manifest/10-external-service.yaml

11. Host Path Volume

    k apply -f k8s-manifest/11-volume-host-path.yaml
    k get all
    k delete -f k8s-manifest/11-volume-host-path.yaml

12. Persistent Volume & Persistent Volume Claim

    k apply -f k8s-manifest/12-pesistent-volume.yaml
    k get pv
    k get pvc
    k get all
    k delete -f k8s-manifest/12-pesistent-volume.yaml

14. Blue Green Deployment

    k apply -f k8s-manifest/14-deployment-blue-green.yaml
    k apply -f k8s-manifest/14-deployment-blue-green-flip.yaml

    k delete service/nginx-blue
    k delete deployment/nginx-v1

    k delete -f k8s-manifest/14-deployment-blue-green.yaml

http://localhost:31000/
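The contents of the flip manifest are not shown in this post. A common way to flip blue-green traffic is to change the label selector of the Service so it matches the pods of the other version. The sketch below builds such a patch; the label key `version`, the value `v2` and the helper name `selector_patch` are assumptions and may not match the repository manifests.

```shell
# Print a patch that points a Service selector at the given version label.
# The label key "version" is an assumption, not taken from the repo.
# Hypothetical helper, not part of project61.
selector_patch() {
  printf '{"spec":{"selector":{"version":"%s"}}}\n' "$1"
}

# Example (needs a running cluster, so it is left commented out):
# kubectl patch service <SERVICE_NAME> -p "$(selector_patch v2)"
```

Because the Service keeps its name and port, clients keep using the same URL while the pods behind it change.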

15. Canary Deployment

    k apply -f k8s-manifest/15-deployment-canary.yaml

    k delete deployment/nginx-v2

    k delete -f k8s-manifest/15-deployment-canary.yaml

    while true; do curl http://localhost:31000/; sleep 2; done

References

https://github.com/GoogleContainerTools/jib

https://www.docker.com/products/docker-desktop/

https://birthday.play-with-docker.com/kubernetes-docker-desktop/

https://www.getambassador.io/docs/telepresence/latest/quick-start/qs-java/