A more secure way to handle secrets in OpenShift

Learn how to handle secrets in OpenShift. Discover why volume mounts are preferred over environment variables for enhanced security and operational flexibility.
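The sketch below illustrates the volume-mount approach. It is a minimal Python application that reads a secret from a mounted file instead of an environment variable. It assumes the Secret is mounted at a hypothetical path, `/etc/secrets`, where each Secret key appears as a file named after that key. `read_secret` and the key `db-password` are illustrative names and are not part of any OpenShift API.

```python
from pathlib import Path

# Hypothetical mount path (an assumption): the real path is whatever the
# pod spec's volumeMounts entry chooses for the Secret volume.
SECRET_DIR = Path("/etc/secrets")


def read_secret(name: str, secret_dir: Path = SECRET_DIR) -> str:
    """Read one key of a mounted Secret and strip a trailing newline.

    Each key in a Secret volume appears as a file named after the key.
    Reading the file on every call, rather than caching the value at
    startup, lets the app pick up a refreshed value after the volume
    contents are updated.
    """
    return (secret_dir / name).read_text(encoding="utf-8").rstrip("\n")
```

Reading the file on each use is one way to benefit from how Kubernetes handles mounted Secret volumes. When the Secret changes, Kubernetes eventually refreshes the files in the volume (this does not apply to `subPath` mounts). An environment variable, by contrast, keeps the value it had when the container started. Note that stripping the trailing newline is a convenience for values created from files and may not suit every secret format.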



Learn how to deploy Model Context Protocol (MCP) servers on OpenShift using ToolHive, a Kubernetes-native utility that simplifies MCP server management.

Welcome back to Red Hat Dan on Tech, where Senior Distinguished Engineer Dan Walsh dives deep on all things technical, from his expertise in container technologies with tools like Podman and Buildah, to runtimes, Kubernetes, AI, and SELinux! In this episode, Eric Curtin joins to discuss Sorcery AI, a new AI code review tool that has been helping to find bugs, review PRs, and much more!

An in-depth analysis of the OpenShift node-system-admin-client certificate, covering its lifecycle, validity, rotation, and manual renewal as part of PKI management.



When operating within restricted environments, mirroring OpenShift operators can

Integrate the Kubernetes MCP server with OpenShift and VS Code to give AI assistants a safe, intelligent way to interact with your clusters.

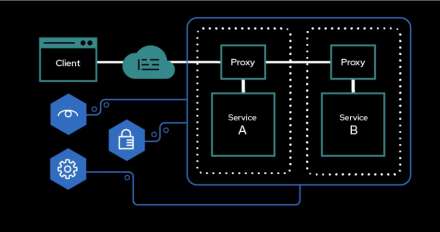

Discover how OpenShift Service Mesh 3 streamlines microservices architecture, secure communication, traffic management, and observability.


Explore on-cluster image mode on OpenShift, a cloud-native approach to operating system management that treats your OS exactly like a container image.

Walk through how to set up KServe autoscaling using vLLM, KEDA, and the custom metrics autoscaler operator in Open Data Hub.


Learn how to declaratively assign DNS records to OpenShift Virtualization VMs using policy controllers and annotations, streamlining the VM lifecycle.

Learn how to integrate s390x workers into your OpenShift cluster in order to

Building AI apps is one thing, but making them chat with your documents is next-level. In Part 3 of the Llama Stack Tutorial, we dive into Retrieval Augmented Generation (RAG), a pattern that lets your LLM reference external knowledge it wasn't trained on. Using the open-source Llama Stack project from Meta, you'll learn how to:

- Spin up a local Llama Stack server with Podman
- Create and ingest documents into a vector database
- Build a RAG agent that selectively retrieves context from your data
- Chat with real docs like PDFs, invoices, or project files, using agentic RAG

By the end, you'll see how RAG brings your unique data into AI workflows and how Llama Stack makes it easy to scale from local development to production on Kubernetes.
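The retrieval step at the heart of RAG can be sketched in a few lines. This is a dependency-free Python illustration and does not use the Llama Stack API. As a stand-in for the embedding model and vector database the tutorial uses, it substitutes bag-of-words cosine similarity. It scores each document against the question, keeps only sufficiently relevant matches ("selectively retrieves"), and places them in the prompt. All function names and the `min_score` threshold are hypothetical.

```python
import math
import re
from collections import Counter


def _vectorize(text: str) -> Counter:
    """Bag-of-words term counts over lowercased alphanumeric tokens.

    A stand-in for a real embedding model (an assumption of this sketch).
    """
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2, min_score: float = 0.1) -> list[str]:
    """Return up to k docs most similar to the query, dropping weak matches."""
    q = _vectorize(query)
    scored = sorted(
        ((_cosine(q, _vectorize(d)), d) for d in docs),
        key=lambda pair: pair[0],
        reverse=True,
    )
    return [d for score, d in scored[:k] if score >= min_score]


def build_prompt(query: str, docs: list[str]) -> str:
    """Augment the question with retrieved context before sending it to an LLM."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"
```

For example, `retrieve("What is the invoice total?", docs, k=1)` selects the invoice line from a small list of documents. A query that matches nothing returns an empty list, so no irrelevant context reaches the model. A real deployment would swap `_vectorize` for embeddings and the linear scan for a vector database query.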

Learn how to integrate Ansible Automation Platform with Red Hat Advanced Cluster Management for centralized authentication across multiple clusters.

Learn how to perform signature verification on images signed with Cosign's bring-your-own PKI (BYO-PKI) feature in OpenShift using the ClusterImagePolicy.

Explore the latest features in network observability 1.9, an operator for OpenShift and Kubernetes that provides insights into your network traffic flows.


Welcome back to Red Hat Dan on Tech, where Senior Distinguished Engineer Dan Walsh dives deep on all things technical, from his expertise in container technologies with tools like Podman and Buildah, to runtimes, Kubernetes, AI, and SELinux! In this episode, you'll see a live demo of RamaLama's new RAG capability, which lets you use your own data with a local LLM. Learn more: https://developers.redhat.com/articles/2025/04/03/simplify-ai-data-integration-ramalama-and-rag5.

Learn how to migrate to Vector in this quick demo.

Learn how the Red Hat build of OpenTelemetry provides a powerful and flexible solution for comprehensive metrics and logs reporting in complex environments.

Learn how a pattern engine plus a small LLM can perform production-grade failure analysis on low-cost hardware, slashing inference costs by over 99%.


Learn how to migrate the default log store in OpenShift 4 from Elasticsearch to Loki with this step-by-step guide.

This video by Stevan Le Meur illustrates how to deploy a pod on Kubernetes.

Discover a highly resilient multicluster architecture with Red Hat OpenShift Service Mesh, Connectivity Link, and Advanced Cluster Management for Kubernetes.