Simone sensing Jamie Lidell’s live set, to be heard just one seat away

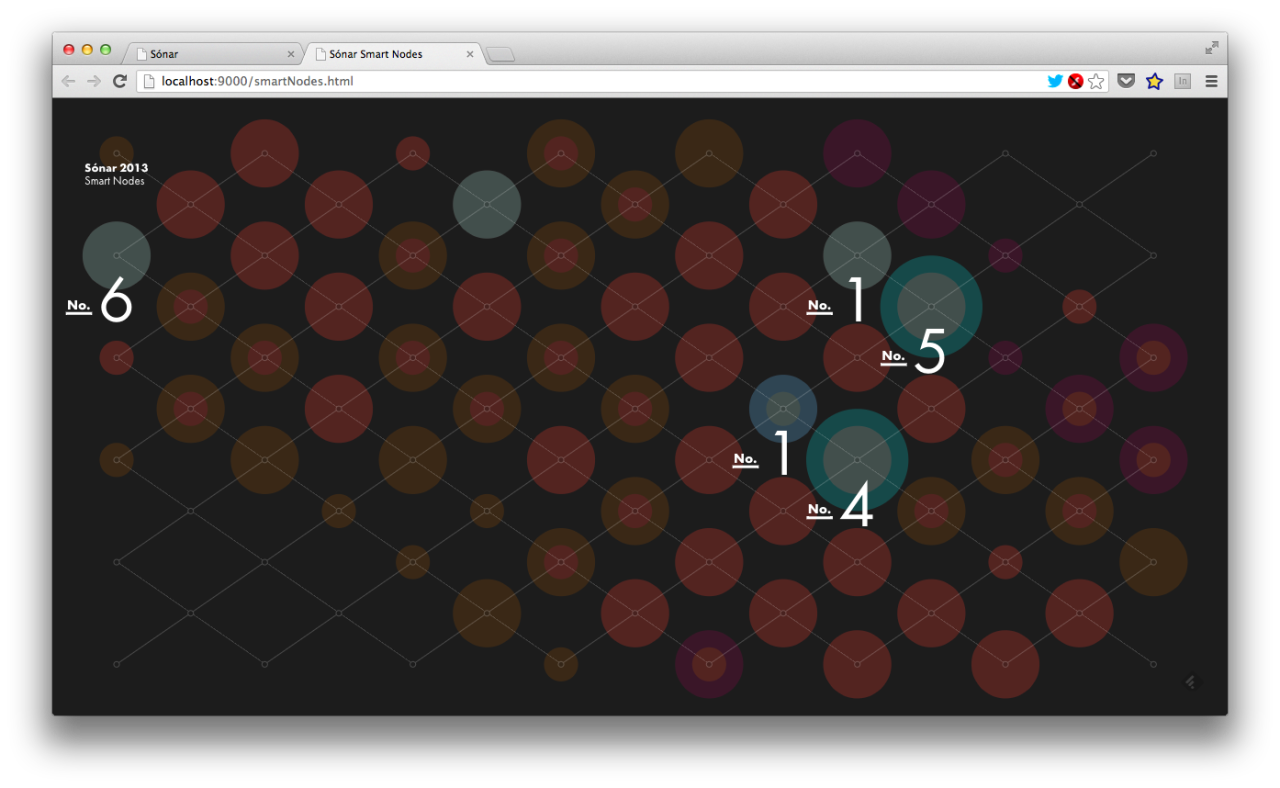

Sónar is just beginning and we are well underway. Although a few kinks need to be worked out, in general we are quite pleased with the outcome. Our visualization is even somewhat supported on mobile devices.

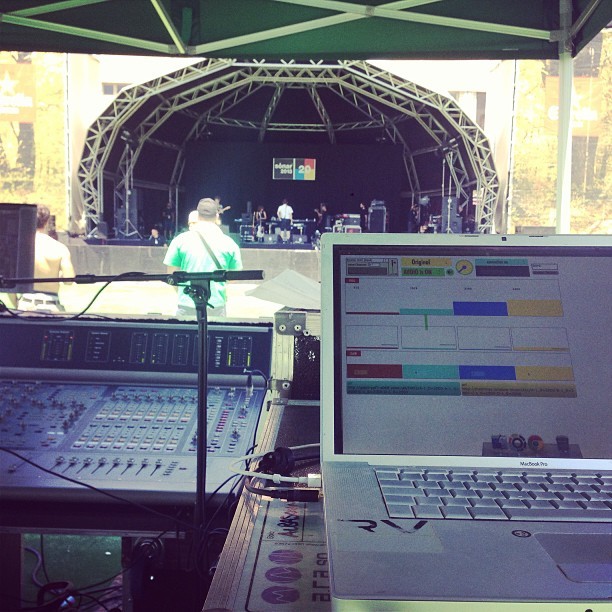

The sofa is now ready to play some live data from the Sónar Village stage

After an insane run to Ikea in Barcelona, we finally found the things we needed. They will be gently slaughtered, but for the greater good of all other sofas.

Along a road littered with the debris of failed attempts and fried transistors, last night Simo and Ken finally solved one of the larger technical hurdles. A circuit and its code were finalized that can parse the rhythm components analyzed in Max/MSP and replicate them with the vibration motors via Arduino. A “volume” level has also been implemented, which gives the “sitter” the ability to control the intensity of the vibrating rhythm.
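To give a rough idea of the logic, here is a minimal sketch in Python rather than our actual Arduino code. It assumes (hypothetically) that each frame of the rhythm analysis arrives as a line of space-separated strengths between 0 and 1, one per motor, and that each motor is driven by an 8-bit PWM value from 0 to 255. The function names and the message format are made up for illustration.

```python
def parse_rhythm_message(line: str) -> list[float]:
    """Parse one frame of rhythm strengths, e.g. '0.8 0.2 0.5'.

    The space-separated format is an assumption for this sketch,
    not the format actually sent from Max/MSP.
    """
    return [float(token) for token in line.split()]


def motor_duty(strength: float, volume: float, max_pwm: int = 255) -> int:
    """Scale a rhythm strength by the sitter's volume into a PWM duty value."""
    # Clamp both inputs to [0, 1] so a noisy reading cannot overdrive a motor.
    strength = min(max(strength, 0.0), 1.0)
    volume = min(max(volume, 0.0), 1.0)
    return round(strength * volume * max_pwm)


def frame_to_duties(line: str, volume: float) -> list[int]:
    """Turn one rhythm frame into one PWM duty value per vibration motor."""
    return [motor_duty(s, volume) for s in parse_rhythm_message(line)]
```

With the volume at zero every motor stays silent, and with the volume at its maximum the strongest beat drives its motor at full power.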

The rhythm of a musical performance is typically measured in BPM (beats per minute), an easily detected value. However, within an urban space, there are multiple ways to define rhythm. There is a physical layer of people, noise, temperature, shared bikes, and pollution, which are visible but have to be sensed contextually. There is also a virtual layer of activity in a city, such as FourSquare check-ins, Facebook likes, Instagram pictures, Tweets, etc. These remain mostly “hidden,” but their value reveals another side of how the rhythm of a city can be understood.

We will define the BPMs of the two environments and translate them into an experience that binds these two (seemingly) disparate contexts in real time: bringing the rhythm of the festival into the city and the rhythm of the city into the festival.
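One simple way to put both environments on the same scale is to treat anything that happens at a known time as a “beat.” The sketch below is only an illustration under that assumption, not the method we settled on: it estimates a BPM from a list of event timestamps in seconds, which could be drum onsets on stage or check-ins in the city. The function name is hypothetical.

```python
from statistics import median


def bpm_from_timestamps(timestamps: list[float]) -> float:
    """Estimate beats per minute from event times given in seconds.

    Uses the median gap between consecutive events, so a few
    irregular events do not distort the estimate.
    """
    if len(timestamps) < 2:
        raise ValueError("need at least two events to measure a rhythm")
    ordered = sorted(timestamps)
    intervals = [later - earlier for earlier, later in zip(ordered, ordered[1:])]
    typical_gap = median(intervals)
    if typical_gap <= 0:
        raise ValueError("events must not all share the same timestamp")
    return 60.0 / typical_gap
```

Beats spaced half a second apart come out as 120 BPM, while a check-in every thirty seconds comes out as 2 BPM, so the city's value would probably need rescaling before it can be felt as a rhythm.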

The main goal of One Seat Away is to use connectivity and sensing to make more sense of the urban space around us, and to merge it with music and rhythm so that data can be experienced in a tangible way: something that one can feel and not necessarily have to understand in detail or rationally decode.

Sofa shopping.