20 September 2025 to 30 May 2025

The consumptive future that billionaires and their power-consolidation corporations are trying to sell us is a future of vending machines.

The collective future that we want is a future of kitchens.

Vending machines present a shallow illusion of overconstrained "choice", but no meaningful agency. The insidiousness of vending machines, however, is not this quantitative overconstraint. Adding more products to the vending machine does not change its nature. Amazon is a very large vending machine. The insidiousness is the conflation of choice with agency, and thus of consumption with participation.

A vended economy is supported by a complaisant vended politics, increasingly of its own making. Voting is the nominal structural basis of democracy, but encouraging people to vote, in itself, is anodyne and power-friendly. Voting in elections with only one candidate is bad theater, but voting in elections with only two candidates is only one better. And the math never improves: if you have no control over the candidates, and in particular if the candidates are never you, increasing their number doesn't benefit you.

We don't immediately recognize this as dystopia, because dystopian story-telling usually oversimplifies the format of the oppression. In 1984 the government controls the meager supply of drab consumer goods, and the single broadcast/surveillance channel. But of course Walmart has endless aisles of things, and the TVs in the TV aisle have endless channels. We have choices.

But our choices are stocked by Walmart. Or Amazon, or a handful of morally interchangeable competitors. The TV channels are numerous in frequency, but monotonous in signal, and monopolized in control. You can have any innocuous flavor of filler surrounding your advertisements (for innocuously flavored fillers). Orwell thought this gray-goo of a world would be imposed on us by the State, but the capitalist innovation is to invert this. Power is consolidated by money, not vice versa. Citizenship is portioned into voters, who are then repackaged as consumers. Anything functional in government is absorbed or disassembled until it can impose no miserly restrictions.

And so we get: self-destructive grievant feudalism wielded by a petulant debt-powered narcissist, supported by gutless symbiosis with a solipsistic social class of robber barons. The narcissist only sees himself in the (legacy) media, which is controlled by the same fear-sellers who picked him as their sacrificial agent. Dissent isn't so much crushed as organized into slots, each of which is manipulated to go temporarily out of stock, and then those empty slots are filled with something more colorful, but more completely owned. A few protesters gather in front of the machine to demand the return of the most recently discontinued snack. Their attention validates the machine. The machine gleams eagerly, its buttons patiently awaiting their fingers. They are angry now, but anger turns into hunger over so little time. Soon they will want something. The machine has the things. It waits.

We have kitchens. So many of the things in the kitchen came from rows on shelves in stores, vended with only slightly less structure than from the machine. The kitchen is not anti-business or anti-capitalist, exactly. But the things in the kitchen are material, and tools. The difference between a 5lb sack of flour and an individually-packaged snack cake is the difference between potential energy and the bill for energy consumed. At the end, we still eat. The difference is not the overall topology of the system, but our place in it. We come to the kitchen to take up knives, not coins or tokens.

Instead of a flat grid of processed choices, all lit from a consistent angle, the kitchen is an unruly space. Most of the things in it do fairly little of their own accord. A few of them have very particular purposes, but many do not. A bagel-cutter, but then 4 knives for all other needs combined. One adorable pan you use twice a year to make æbelskivers, two sizes of skillet, a saucepan, a soup pot. Inspirational cookbooks you mostly don't actually open. Turn on the stove; grab a pan, put a little olive oil in it; get a knife. We're going to make something out of ingredients and tradition and imagination and love and heat and garlic.

But even when we do, we are mostly alone. The capitalist rendition of Community Supported Agriculture is a telling example of both potential and challenge. The farmer solicits patrons, who each subscribe to a share of the farm's output, driven conveniently from the farm into the city every week. But while the community's support is collectively tangible to the farmer, albeit not regally so, the community is mostly only abstract and implicit to itself. Maybe you say hi to other people picking up their shares at the same time. Maybe there's a mailing list where you can exchange ideas for what to do with 8 zucchini at once. But there's probably no shared kitchen where you could all make zucchini chocolate cake at once. The community of the CSA, in isolation, is not only asymmetrical, but inherently hard to manifest. Most of your actual neighbors aren't CSA subscribers. Half of them only shop at Trader Joe's and think you're making a gross joke with the thing about zucchini in cake. Some of them shop at the part of Amazon that says Whole Foods, and at least cook. One of them belongs to a different CSA. These fragmented micro-collectives don't worry the billionaires. You are more likely connected to your immediate neighbors by baseball. The billionaires own the baseball teams.

The "smart" phone, which is now just what "phone" mostly means (in the same way that "social" media is now just what "media" mostly means; and thus a few more billionaires), is sometimes dreamily described as a computer in our pocket, but of course what it really is is a vending machine in our pocket, neatly lined with buttons. Behind the buttons, increasingly, are "apps" that are themselves in turn essentially vending machines of prepackaged choices. Like a tapas dinner with our friends, this doesn't sound bad by definition. But the tapas restaurant has a kitchen, and knives. A decent restaurant is a complex celebration of human agency put into the form of edible performance, and should make us want to cook in the way that, hopefully, a good song makes us want to sing. We can sing while we cook.

Our computers, even the tiny galley computers in our pockets, can be more like kitchens full of singing. The thing that would make them different would be a different kind of software. But the thing that would make a different kind of software likely is a different economy and a different social structure of not just how software is made, but how computation is applied to human problems. Power must be distributed, but we also have to want it, have to want to make our own decisions instead of delegating them to our choice of 5 omniscient oracles. The oracles aren't going to tell us to figure it out ourselves, so we have to want to not ask them. A CSA doesn't require that its recipients eat differently than TJ's customers, but 8 zucchini constitute a provocation to cook in a way that a frozen stir-fry does not. If the only "ingredients" we can easily buy are frozen entrées, all meals are snacks and it doesn't matter if our knives are sharp. Especially not if the snack troopers come for our knives, pretending it's for our safety.

We do not need more applications. We do not need new vendors of fancier and less predictable ways to make the same snack apps. We need the same things for data and data-tools and the things we can make out of data with data-tools that a community needs from a communal kitchen. But because communities formed in computational space can use tools for self-development and self-determination that would be harder to provide in physical space, maybe examples in our software can help lead to similarly catalytic ideas in our cities. Maybe data maker-spaces will inspire us to make communal kitchens, and the kitchens will give us new data needs, and thus new ideas. The spaces are different, but the communities are all made of people, and the people are us.

We do not need more snacks. We do not need robots that make more snacks. We do not need machines that turn our zucchini into snack cakes that they then confiscate and sell back to us. We need a place where, when we get hungry, it is still harder to reach for a knife than a button, but only in the way that tells us the results will be satisfying. A place in which the vending machine gathers dust until we replace it with an extra refrigerator. A place with noise and joy and knives.

We do not need safety from ourselves. The knives are not weapons. Stabbing is not a cooking technique. The newly unbillionaired can have some zucchini chocolate cake, too. This is our argument against oligarchy and our restorative consolation to those who thought safety required demonization: We have enough. Dominance is a rich person's poor substitute for collaboration. Aspiring to dominance is a poor person's poor substitute for working together on our collective wealth and taste.

We do not have to settle for poor choices, bought and swallowed whole. We do not have to buy what we find in machines. We do not have to quietly comply with our own commodification. Together, we do not have to be consumed.

BlueSky is a social media service, but notably, it's a public social media service. What you write on BlueSky, you say to the world. What we give ourselves, collectively, by wondering and complaining and epiphanizing in public, is a dataset of how we are.

How are we?

You can find out for yourself. You can find out without any help from me, of course, but since I'm currently on a professional mission to catalyze human agency by making explicable software for participatory intelligence, I'm experimenting with offering particularly geeky forms of help for various things, and this is one of them.

My particular bit of BlueSky help is called SkyQ. It provides a simple way to sample some messages from the real-time BlueSky feed, a DACTAL query interface for exploring them, and a few BlueSky-specific DACTAL extensions so that you can navigate among the AT Protocol data structures: DIDs, handles, follows, posts, threads. I have deliberately not given this web app much mechanism beyond this, because I'm not trying to make any assumptions about what your curiosity might involve.

But as an example of how this curiosity might work, I've provided a query for calculating word-frequency from any set of BlueSky messages, and I've also gone ahead and used the tools myself to pre-build a baseline dataset of word frequencies so that you can score your word frequencies against everybody else's.

Here's how to get started.

- Go to SkyQ. There are no accounts or logins or anything, because this is all public source-data. Anything you do in SkyQ gets saved in your browser's local storage, so your questions are totally private unless you choose to post about them somewhere else.

- Go to the "#" box at the top and put in 1000 (or whatever), and then hit "Collect" to grab some messages from the live BlueSky feed. You can also put something in the "sample BlueSky feed for" to get only messages that mention that text, but start with nothing just to see how it works.

- Once you have some messages, click "word frequency" under "analysis queries". Here's what this query consists of:

??message count=(messages....count)

?messages/(.commit.record.text....words.):count>=5

|freq=(.(.name.word frequencies.frequency);(0)),score=(.[=count/(freq+1)])

:(:freq>0;count>=(message count.[=_/1000])),count>=(message count.[=_/400])

#score

In English this means:

- count the messages you've sampled (we'll need this later in the query)

- take those sampled messages and group them by unique words (welcome to the weird internals of the AT Protocol: each message technically represents a commit, which has a record which has a text field)

- keep the word-groups with at least 5 messages

- annotate them with a "freq" value looked up in the reference dataset

- calculate a very basic "score" for each word by dividing your sample count by the reference count

- keep the word-groups for words that appear at least .1% of the time if they are in the reference list, or at least .25% of the time if they aren't (math...)

- sort them by score
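For anyone who doesn't read DACTAL yet, the same steps can be sketched in Python. This is only an illustration of the logic described above, not how SkyQ runs the query: the function name `score_words`, the tokenizing regex, the plain-dictionary reference table and the highest-score-first sort are all assumptions made for the example.

```python
import re
from collections import Counter


def score_words(messages, reference_freq, min_count=5):
    """Score words in sampled message texts against a reference frequency table.

    messages: list of message text strings (hypothetical input shape)
    reference_freq: dict of word -> reference frequency (hypothetical shape)
    """
    message_count = len(messages)

    # Count each word once per message, so "count" means "messages containing it".
    counts = Counter()
    for text in messages:
        counts.update(set(re.findall(r"[a-z0-9']+", text.lower())))

    rows = []
    for word, count in counts.items():
        if count < min_count:
            continue
        freq = reference_freq.get(word, 0)  # 0 when the word is not in the reference
        score = count / (freq + 1)          # the +1 avoids dividing by zero
        in_reference = freq > 0 and count >= message_count / 1000  # at least .1%
        frequent_anyway = count >= message_count / 400             # at least .25%
        if in_reference or frequent_anyway:
            rows.append((word, count, freq, score))

    # Assumption: highest score first.
    return sorted(rows, key=lambda row: row[3], reverse=True)
```

Words that are common in your sample but rare or absent in the reference get the highest scores, which is why a name suddenly in the news floats to the top.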

Doing this with an unfiltered sample of 10000 messages on the morning of 2025-09-16 got me this:

RIP Robert Redford. That explains the first three. Did Chuck Mangione die, too? Yes, but two months ago. Why are people talking about him again today? You can click any link in the "of" column to see the messages that mentioned that word. You can click column headers in any of these tables to navigate into subproperties, so with a list of messages, clicking "commit" then "record" then "text" will get you to the actual contents the same way the query did it. (And oh, right: today's Mangione news is not about Chuck.)

What do the rest of these mean? I don't know. I didn't check, and the point of this is to get you to try. If any of them are still on the list when you do, you can learn something for yourself. And if you do, I hope you'll post about it, both because SkyQ doesn't monitor your actions in any way but I'd still love to know people are trying this, and because that's how we collect collective knowledge.

(Or, sometimes, that's how we break our new experimental tools. If that happens to you, let me know. I'm on there, too.)

¶ Where does, or might, your streaming money go? · 13 July 2025 listen/tech

There's a whole chapter in my book about how royalty distribution from streaming music services works, and when I worked at Spotify I could run the entire service's actual usage through potential alternate distribution models to see how they would affect artists differently. You can't do that, and I can't anymore either.

But if you've requested your own streaming history from Spotify, and loaded it into Curio, you can at least explore how alternate distribution models might distribute your money to the artists † ‡ you love.

You can start with this query comparing the usual pro-rata scheme to one that distributes each listener's money according to their individual listening-time per artist.

Here's the query:

To do this without access to proprietary business data, we have to make a couple legally-arbitrary assumptions about two key internal Spotify numbers.

One is the fraction of Spotify revenue, for your subscription country and type, that gets paid out to licensors. This is variously quoted as "more than two-thirds" or "about 70%" in the press, and the exact number depends in a complicated way on local statutory rates, licensor deals and even sometimes the complicity of specific labels or artists in Spotify's grim attempts to fractionally improve their own margins. You can make up your own mind about what you want to believe this number to be, and if your mind makes up some number other than 0.7, you should replace the 0.7 in the ??rates= line with your alternative.

The second number is the average number of plays (industry-defined as "you listened to at least :30 of a song, but it doesn't make any difference how much if it's at least that much") for your subscription country and type. One common public guess at this average across all Spotify listeners is 500 plays/month; total Spotify membership is weighted about 3:2 in favor of ad-subsidized "free" listeners over subscribers; Daniel Ek once said in public that premium listeners spend about 3x as much time listening as free listeners, on average. Putting these numbers together with math (not shown here) suggests a premium-user average somewhere around 800. If you don't like that 800, replace the 800 in that same line with whatever you like better.
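One way that unshown math might go, as a rough sketch: treat the 3:2 membership ratio as weights, and assume plays scale with listening time so that the 3x figure applies to plays too. That second assumption is not from any Spotify source, and the function name `premium_average` is made up for this example.

```python
def premium_average(overall_avg, free_share, premium_share, premium_multiple):
    """Estimate average premium plays/month from a blended average.

    Solves: overall_avg = (free_share * f + premium_share * premium_multiple * f)
                          / (free_share + premium_share)
    for f (the free-listener average), then scales f up to premium.
    """
    total_share = free_share + premium_share
    free_avg = overall_avg * total_share / (free_share + premium_share * premium_multiple)
    return free_avg * premium_multiple


# 500 plays/month overall, 3:2 free-to-premium, premium listening 3x free
print(round(premium_average(500, 3, 2, 3)))  # 833
```

That lands near 833, which is consistent with "somewhere around 800" given how soft all three input numbers are.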

The 10.99 in my example is the current monthly cost of a US Basic account, which I choose (in both the moral and exemplary senses) because Spotify is engaged in a disingenuous ongoing effort to define the nominal Premium account as a supposedly-equal-value bundle of music and audiobooks so that they don't have to pay as much in music-publishing royalties. In this particular example I have filtered my listening history to data from calendar 2024, and thus have multiplied the monthly subscription and stream-count numbers both by 12. You could also take both 12s out and filter to any single month.

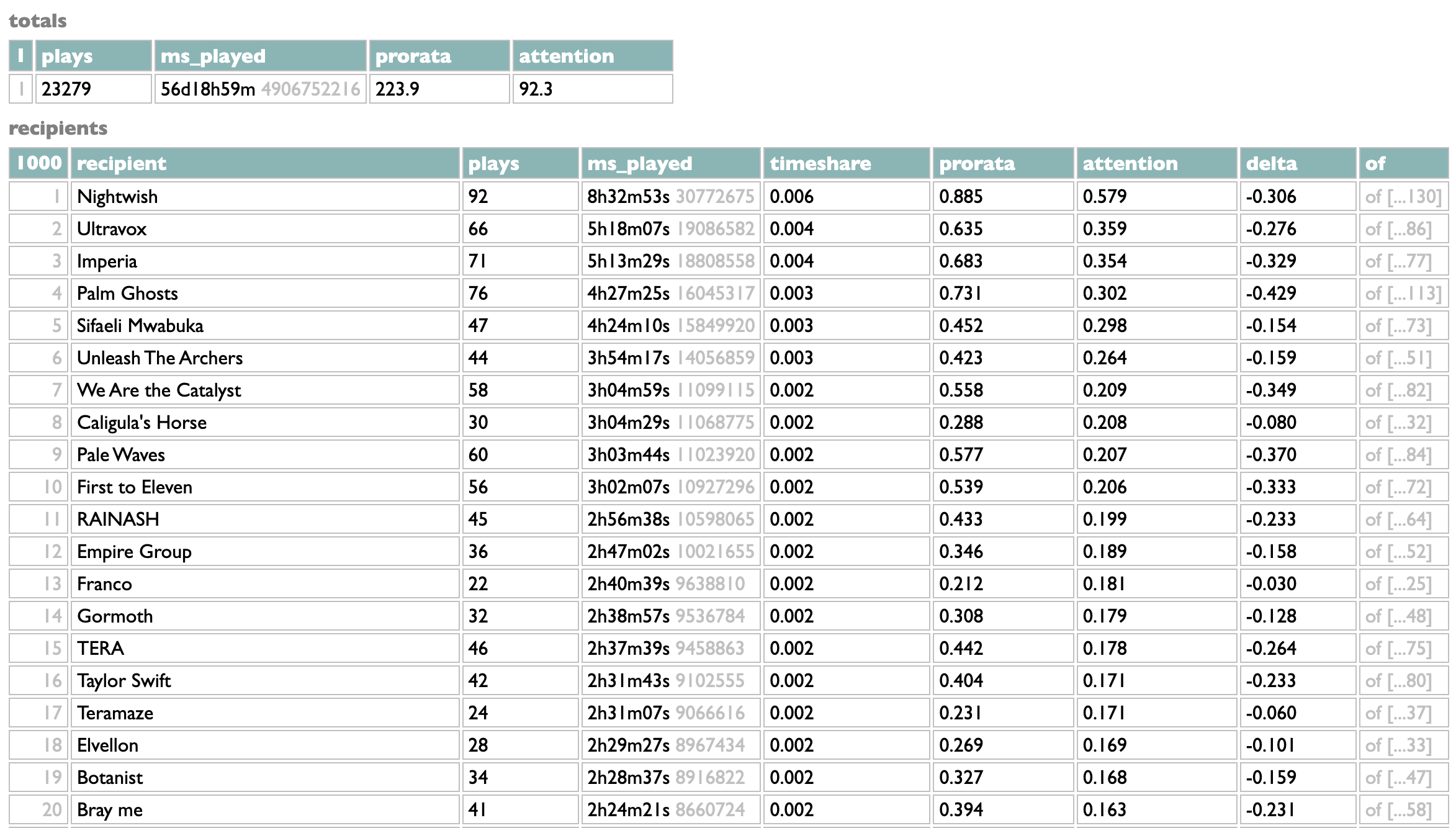

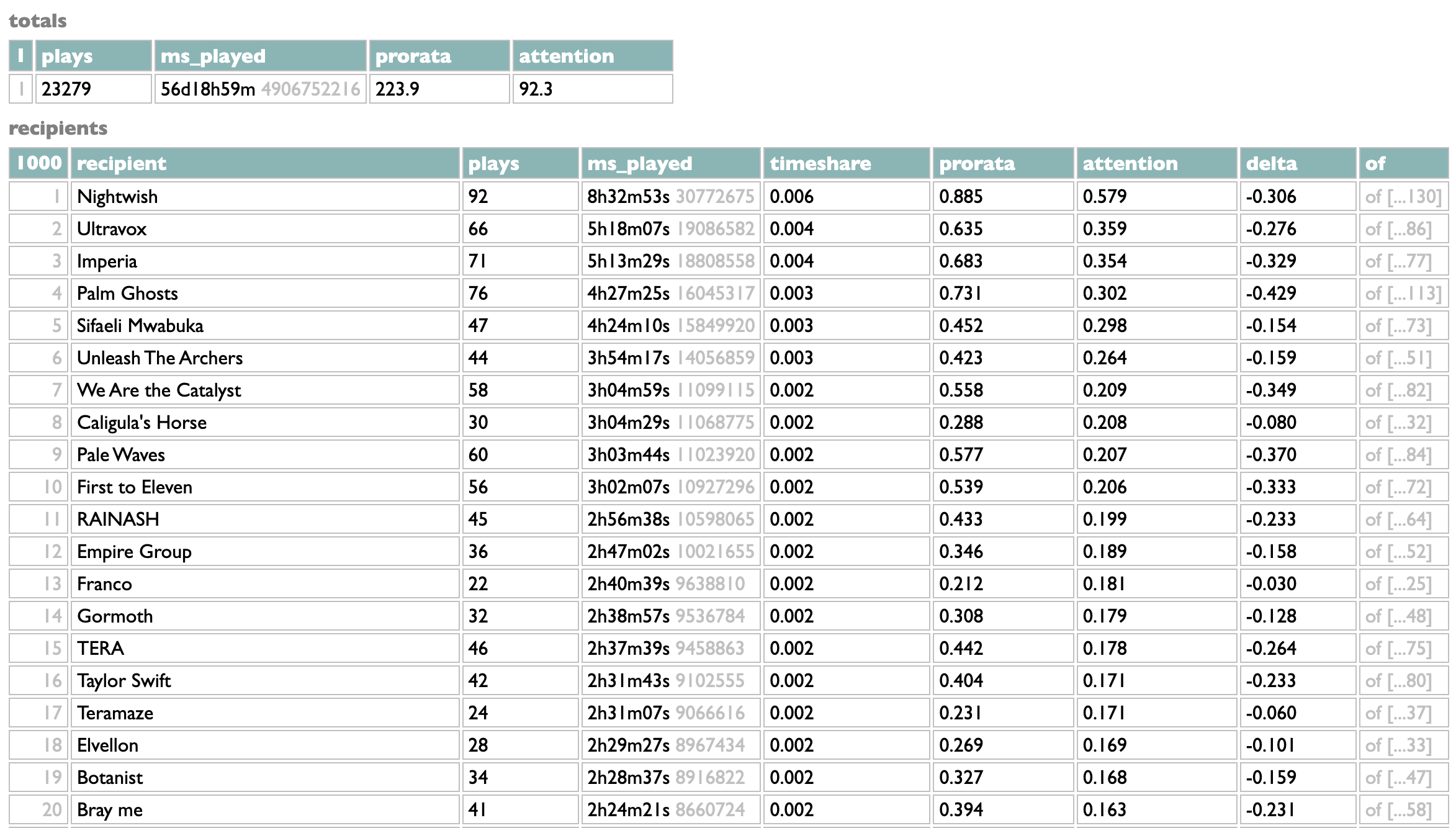

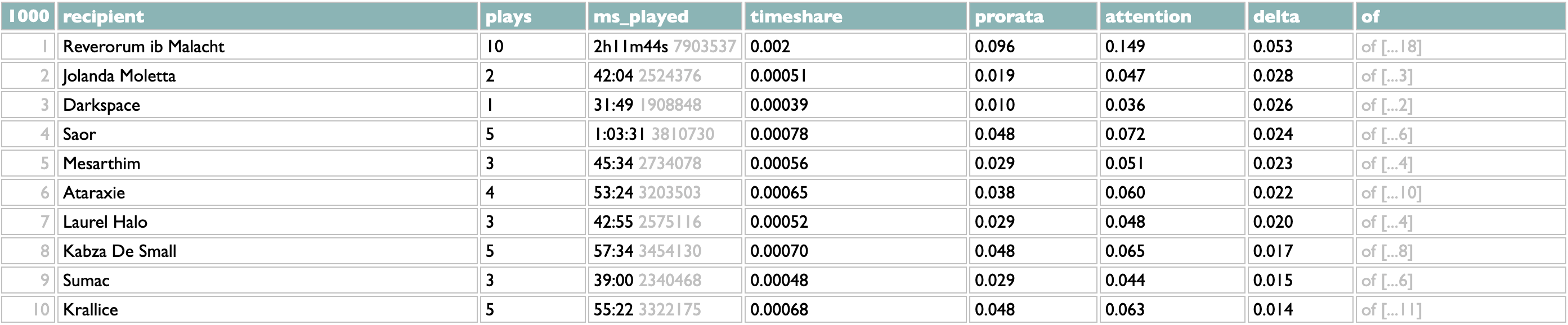

Using these numbers, here's what I get for my own 2024:

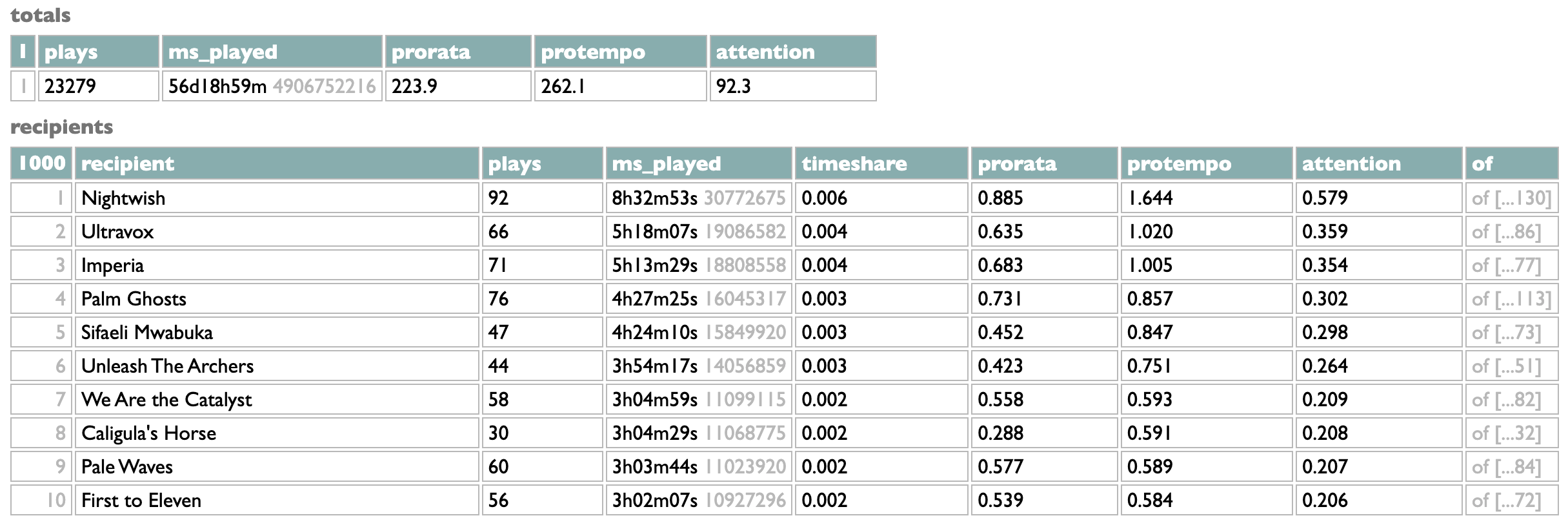

As I explain in the book, you can think of the pro-rata scheme as essentially allowing listeners who accumulate more than the average number of plays to reassign the leftover money from the people who play less than the average. User-centric models, whether based on plays or time, always only pay your artists with your money. With these hypothetical numbers, my subscription would have generated $92.30 in distributable money over the course of the year, and in the attention model that's exactly what my artists get in total. Because I happen to play very considerably more than the average listener, though, the pro-rata model allows me to commandeer almost an additional subscription and a half of money from less-active listeners, and my artists get a total of $223.90. The artists here at the top of my list benefit dramatically from this, at least proportionally, with some of them getting more than twice as much money because of me than they could get strictly from me.
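The arithmetic behind the two models can be sketched outside of Curio, too. This is a minimal Python illustration under the same hypothetical numbers (10.99, 0.7, 800, 12), not the actual query: the input shape of `(artist_name, ms_played)` pairs, the function name `payouts`, the choice to count all listening time (including sub-:30 fragments) toward attention, and the definition of delta as attention minus pro-rata are all assumptions.

```python
from collections import defaultdict


def payouts(plays, monthly_price=10.99, months=12, payout_share=0.7,
            avg_monthly_plays=800, min_ms=30_000):
    """Compare pro-rata and time-based payouts for one listener.

    plays: iterable of (artist_name, ms_played) pairs (hypothetical shape)
    """
    # This listener's distributable money: 10.99 * 12 * 0.7 = 92.316
    pool = monthly_price * months * payout_share
    # Pro-rata value of one qualifying play, if the average listener's
    # money is spread over the average number of plays.
    per_play = pool / (avg_monthly_plays * months)

    play_counts = defaultdict(int)
    ms_totals = defaultdict(int)
    for artist, ms in plays:
        ms_totals[artist] += ms      # assumption: all listening time counts
        if ms >= min_ms:             # only plays of at least :30 count as plays
            play_counts[artist] += 1

    total_ms = sum(ms_totals.values())
    result = {}
    for artist, artist_ms in ms_totals.items():
        prorata = play_counts[artist] * per_play
        attention = pool * artist_ms / total_ms if total_ms else 0.0
        result[artist] = {
            "prorata": prorata,
            "attention": attention,
            "delta": attention - prorata,  # assumption: positive favors attention
        }
    return result
```

The attention amounts always sum to the listener's own pool, while the pro-rata amounts sum to more or less than that depending on whether the listener plays more or less than the assumed average.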

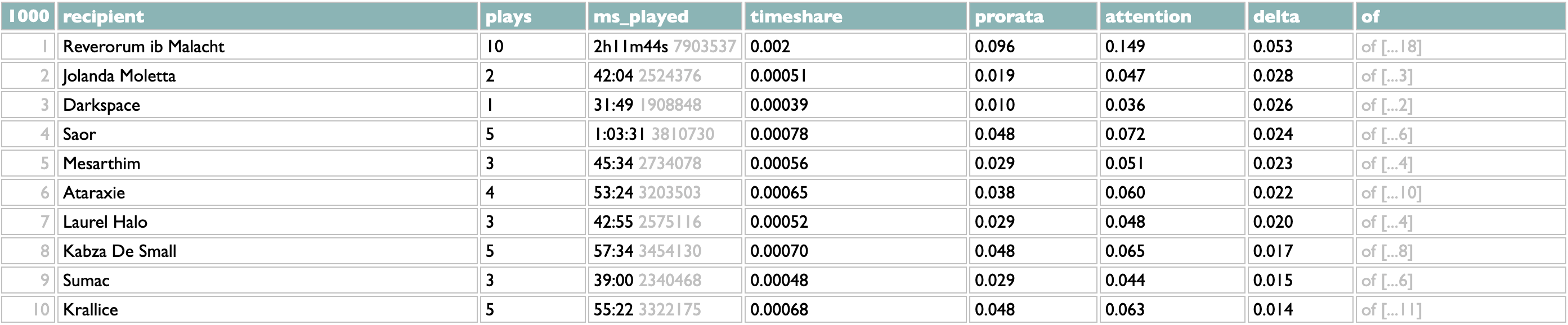

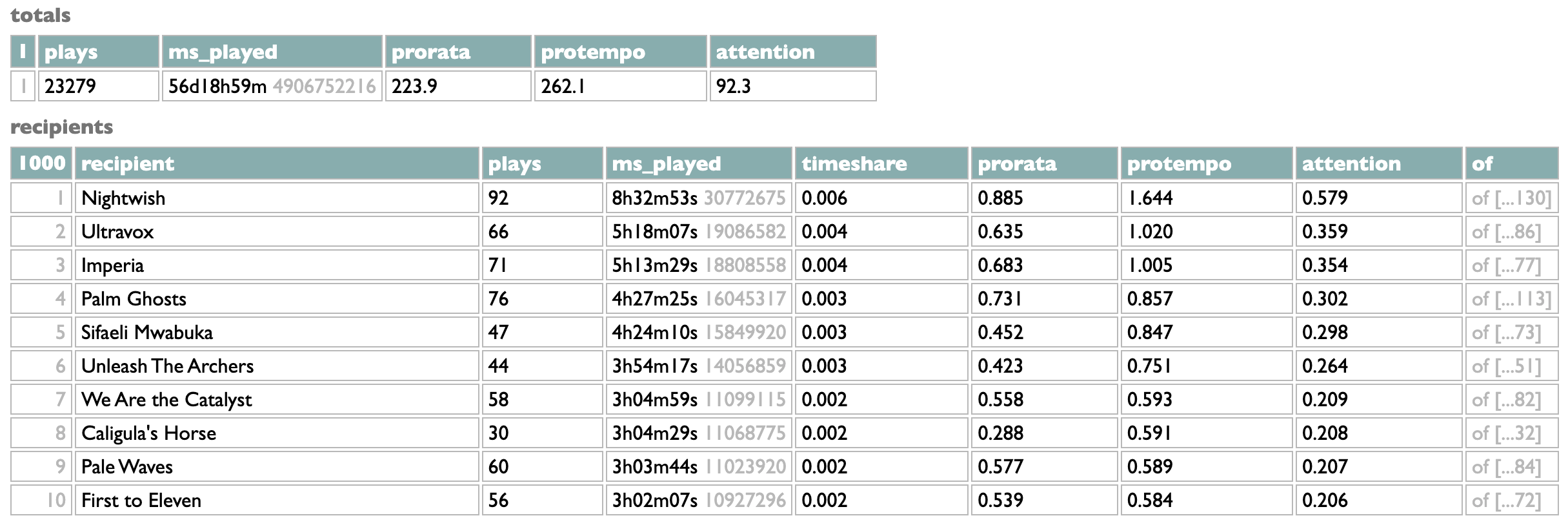

But if you've requested your own streaming history from Spotify, and loaded it into Curio, you can at least explore how alternate distribution models might distribute your money to the artists † ‡ you love.

You can start with this query comparing the usual pro-rata scheme to one that distributes each listener's money according to their individual listening-time per artist.

Here's the query:

?listening history:~<[2024]

:(.master_metadata_album_artist_name)

??rates=(....||subscription=[=0.7*12*10.99],spu=[=800*12],rps=[=subscription/spu]),

totalms=(....ms_played,total),

pro rata=(

:ms_played>=30000:(.spotify_track_uri)

/recipient=master_metadata_album_artist_name,count=plays

.(....recipient,plays,prorata=(....plays,(rates.rps),product))

),

by user=(

/recipient=(.master_metadata_album_artist_name)

||ms_played=(..of....ms_played,total),

timeshare=[=ms_played/totalms],

subscription=(rates.subscription),

attention=[=timeshare*subscription],

-name,-key,-subscription,-count

)

?pro rata,by user//recipient #attention

||prorata=(.prorata;(0)),delta=[=attention-prorata]

||recipient,plays,ms_played,timeshare,prorata,attention,delta

...multi=(1),

totals=(

....plays=(....plays,total),

ms_played=(....ms_played,total),

prorata=(....prorata,total),

attention=(....attention,total)

),

recipients=_

To do this without access to proprietary business data, we have to make a couple legally-arbitrary assumptions about two key internal Spotify numbers.

One is the fraction of Spotify revenue, for your subscription country and type, that gets paid out to licensors. This is variously quoted as "more than two-thirds" or "about 70%" in the press, and the exact number depends in a complicated way on local statutory rates, licensor deals and even sometimes the complicity of specific labels or artists in Spotify's grim attempts to fractionally improve their own margins. You can make up your own mind about what you want to believe this number to be, and if your mind makes up some number other than 0.7, you should replace the 0.7 in the ??rates= line with your alternative.

The second number is the average number of plays (industry-defined as "you listened to at least :30 of a song, but it doesn't make any difference how much if it's at least that much") for your subscription country and type. One common public guess at this average across all Spotify listeners is 500 plays/month; total Spotify membership is weighted about 3:2 in favor of ad-subsidized "free" listeners over subscribers; Daniel Ek once said in public that premium listeners spend about 3x as much time listening as free listeners, on average. Putting these numbers together with math (not shown here) suggests a premium-user average somewhere around 800. If you don't like that 800, replace the 800 in that same line with whatever you like better.
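The math not shown can be reconstructed roughly as follows. This is only a sketch from the three public guesses quoted above; the variable names are illustrative, and it assumes that "3x as much time" translates to roughly 3x as many plays.

```python
# Back-of-envelope estimate of average monthly plays per premium listener.
# All three inputs are the public guesses quoted in the text, not Spotify data.

overall_avg_plays = 500     # guessed plays/month averaged over all listeners
free_share = 3 / 5          # membership weighted about 3:2, free to premium
premium_share = 2 / 5
premium_multiplier = 3      # premium listeners listen about 3x as much as free
                            # (assumption: the time ratio equals the play ratio)

# overall = free_share * free_avg + premium_share * (premium_multiplier * free_avg)
free_avg = overall_avg_plays / (free_share + premium_share * premium_multiplier)
premium_avg = premium_multiplier * free_avg

print(round(free_avg), round(premium_avg))
```

Under these assumptions the premium average comes out a little above 800, which is consistent with "somewhere around 800" given how soft the inputs are.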

The 10.99 in my example is the current monthly cost of a US Basic account, which I choose (in both the moral and exemplary senses) because Spotify is engaged in a disingenuous ongoing effort to define the nominal Premium account as a supposedly-equal-value bundle of music and audiobooks so that they don't have to pay as much in music-publishing royalties. In this particular example I have filtered my listening history to data from calendar 2024, and thus have multiplied the monthly subscription and stream-count numbers both by 12. You could also take both 12s out and filter to any single month.

Using these numbers, here's what I get for my own 2024:

As I explain in the book, you can think of the pro-rata scheme as essentially allowing listeners who accumulate more than the average number of plays to reassign the leftover money from the people who play less than the average. User-centric models, whether based on plays or time, always only pay your artists with your money. With these hypothetical numbers, my subscription would have generated $92.30 in distributable money over the course of the year, and in the attention model that's exactly what my artists get in total. Because I happen to play very considerably more than the average listener, though, the pro-rata model allows me to commandeer almost an additional subscription and a half of money from less-active listeners, and my artists get a total of $223.90. The artists here at the top of my list benefit dramatically from this, at least proportionally, with some of them getting more than twice as much money because of me than they could get strictly from me.
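To make the arithmetic behind the two schemes concrete, here is a minimal Python sketch using the same hypothetical rates as the query (0.7, 10.99, 800). The two artists and their play counts are invented for illustration and are not taken from any real listening history.

```python
# Sketch of pro-rata vs. user-centric "attention" allocation for one listener.
# Rates are the hypothetical ones from the text; the history is invented.

PAYOUT_FRACTION = 0.7       # guessed share of revenue paid to licensors
MONTHLY_PRICE = 10.99       # US Basic subscription, per month
AVG_PLAYS_PER_MONTH = 800   # guessed premium-listener average
MONTHS = 12

subscription = PAYOUT_FRACTION * MONTHS * MONTHLY_PRICE      # distributable $/year
rate_per_stream = subscription / (AVG_PLAYS_PER_MONTH * MONTHS)

# (artist, plays of at least 30 seconds, total milliseconds played)
history = [
    ("Long Song Band", 100, 100 * 9 * 60_000),    # nine-minute tracks
    ("Short Song Band", 300, 300 * 3 * 60_000),   # three-minute tracks
]

def allocate(history):
    """Return (artist, prorata, attention, delta) rows for one listener."""
    total_ms = sum(ms for _, _, ms in history)
    rows = []
    for artist, plays, ms in history:
        prorata = plays * rate_per_stream             # paid per qualifying play
        attention = (ms / total_ms) * subscription    # share of this listener's own money
        rows.append((artist, prorata, attention, attention - prorata))
    return rows

for artist, prorata, attention, delta in allocate(history):
    print(f"{artist:16} prorata={prorata:.2f} attention={attention:.2f} delta={delta:+.2f}")
```

This invented listener plays far less than the assumed average, so the situation is the mirror image of the one described above: the attention scheme hands the artists the whole subscription, while pro-rata pays them only a few dollars and leaves the rest to be directed by heavier listeners. The two bands get equal attention money because they got equal time, even though one logged three times as many plays.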

In my case this dynamic renders the plays-vs-time difference itself largely moot, but if we sort with #delta instead of #attention we can see that there are a few artists whose penchant for long tracks causes them to be under-rewarded by the current play-based scheme, and treated arguably more fairly by the time-based attention scheme:

In case it hasn't registered because I haven't bothered to implement currency-formatting, though, the decimal numbers in the prorata, attention and delta columns are all fractions of a dollar. My listening is even more obdurately broad than it is voluminous (I listened at least momentarily to 8374 different artists last year, and 5289 even if you only count the ones where I played at least :30 of at least one song), so no individual artist earns much from my streaming. Your artist amounts are likely to be higher. There's probably some selection bias such that people willing to go through the trouble of requesting their listening history, and signing up for an API key in order to use Curio, are more likely to also be more active listeners than the average. And more active listeners also tend, in general, to listen more broadly than less-active listeners. But I am, or at least I was back when I could check because of course I checked, an extreme case.

And because this is all just a query, not anything hard-coded into Curio itself, you can also experiment with the query itself to try other models if you want. I'm not really expecting you to have other streaming-royalty allocation schemes casually in mind, but I am hoping that showing you what you could try will make you slightly more likely to want to try things, in this domain or some other one you know and care about the way I know and care about music. I want a world in which the rules embedded in the systems that affect our money and our lives are intelligible to us, and subject to our verification and variation. I want us to want a better world, and we can't improve what we can't control, and we can't control what we can't understand or discuss.

† The critical caveat about the recipients here is that the current streaming services do not pay artists, they pay licensors. For independent artists, those licensors may be self-serve distributors like DistroKid or OneRPM that take only small fees and pass along most of the money to the artists. For artists on major labels, the label may keep most or even all of this royalty money, but in turn may have already paid the artist an advance against it. So the mechanics are complicated in ways that are out of both your and the streaming service's control.

‡ The trivial caveat about the query-modeling here is that the listening-history data Spotify lets you download does not identify each track's artist by unique ID, only by name. It's possible to get the correct unique artists by looking up the tracks within the query, e.g.:

listening history :@<=10

||id=(....spotify_track_uri,([:]),split:@3)

/artist=(.other tracks.(.artists:@1))

but my own listening history has a lot of tracks and I didn't want to wait for that many API calls, so for this query I've chosen to tolerate the approximation of artist names. If you happen to really like multiple different artists with the exact same name, you'll see that their data gets combined in this query. I invite you into my experiment, but the invitation includes a hammer.

(And to admit my meta-interest, since I'm currently working on human computational agency and collective knowledge more than on streaming-royalty allocation, it's my belief that we should always insist that arguments based on data be supported by demonstration, not illustration. Shared understanding beats adversarial persuasion.)

PS: To complicate this further, the scheme I actually endorse is pro-tempo, which is pro-rata but allocated by time instead of plays. This requires another guess at the average duration of a stream (not of a song), but if I put that guess at 3:00, my personal listening is even further above average in time than it is in plays, and the three-way comparison query shows me directing the allocation of $262.10, and almost 3x as much to my top artist as the supposedly-artist-centric attention scheme:
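The pro-tempo rate itself is simple to state. The sketch below is an illustration rather than the three-way comparison query: it reuses the hypothetical rates from the post, adds the 3:00 average-stream guess, and prices listening per second instead of per play. The function name is made up for this example.

```python
# Sketch of a "pro-tempo" rate: pro-rata, but priced per unit of listening time.
# AVG_STREAM_SECONDS is the extra guess the text mentions (3:00); the other
# numbers are the same hypothetical rates used earlier in the post.

subscription = 0.7 * 12 * 10.99      # distributable $/year for one subscriber
avg_plays_per_year = 800 * 12        # guessed premium-listener average
AVG_STREAM_SECONDS = 180             # guessed average stream duration

avg_seconds_per_year = avg_plays_per_year * AVG_STREAM_SECONDS
rate_per_second = subscription / avg_seconds_per_year

def pro_tempo(seconds_played):
    """Money directed to an artist for a given amount of listening time."""
    return seconds_played * rate_per_second

# A listener with exactly average listening time directs exactly one subscription.
print(round(pro_tempo(avg_seconds_per_year), 2))
# One hour of listening to a single artist (hypothetical figure).
print(round(pro_tempo(3600), 4))
```

With these guesses an hour of listening is worth a bit over 19 cents, however many tracks it is divided into, and anyone who listens for more than the assumed average time directs more than their own subscription.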

The computer is a data knife. I've been using computers to cut up data since I first got one with enough memory to hold data in the late 1980s. The ergonomics of doing this, however, have been uniformly poor the whole time. Spreadsheets are fine for a certain subset of data tasks, kind of like a bagel-cutter is good for slicing a certain subset of foods. Most database software is structurally industrial-scale, more like a bread-slicing machine than a knife.

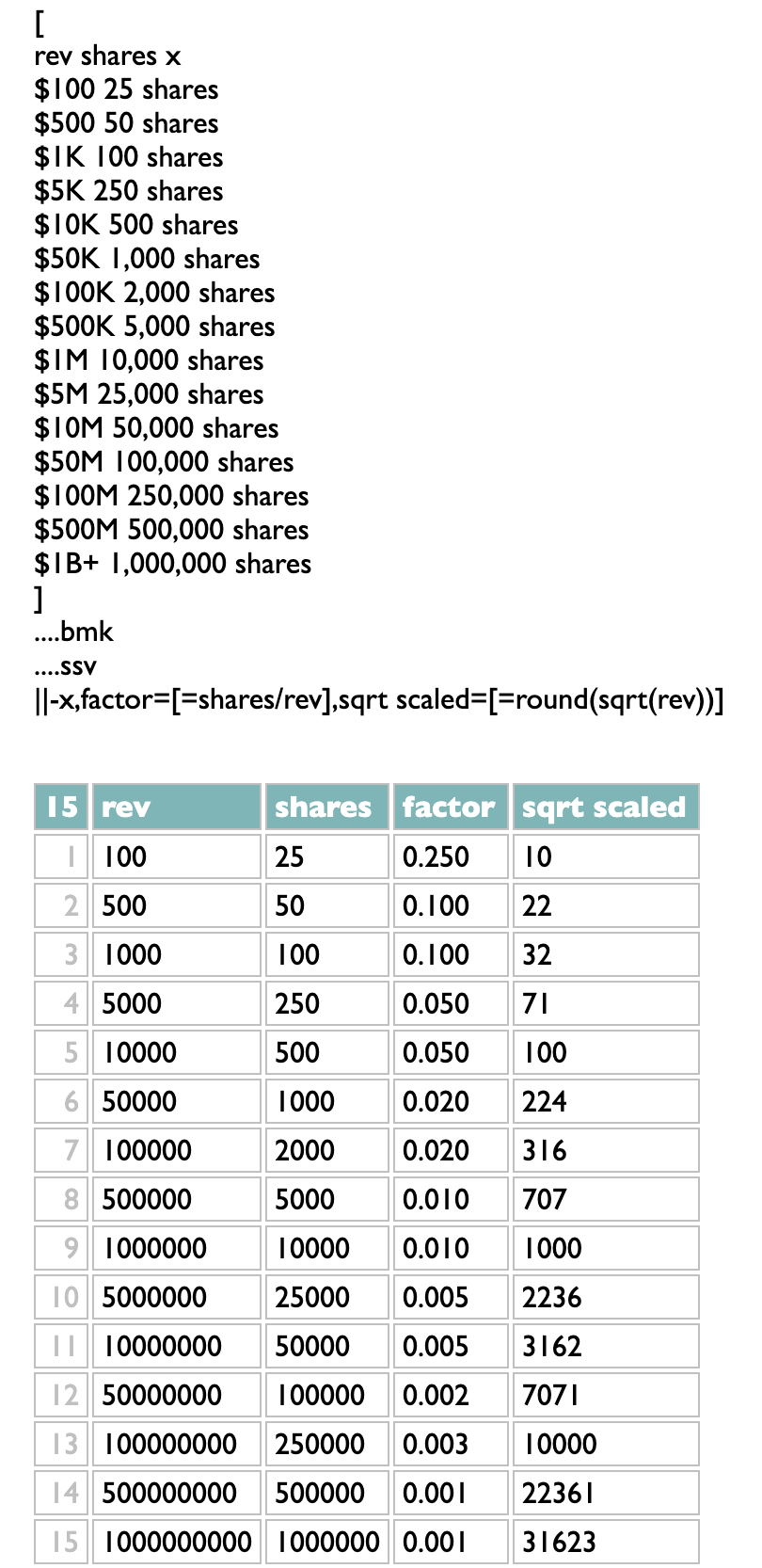

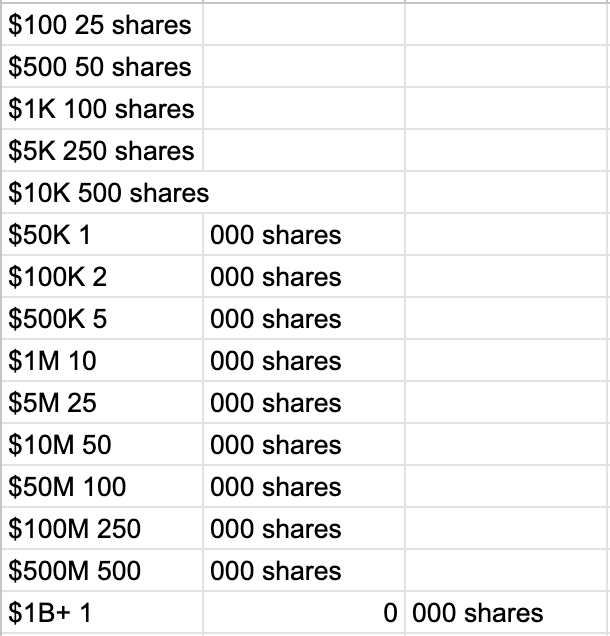

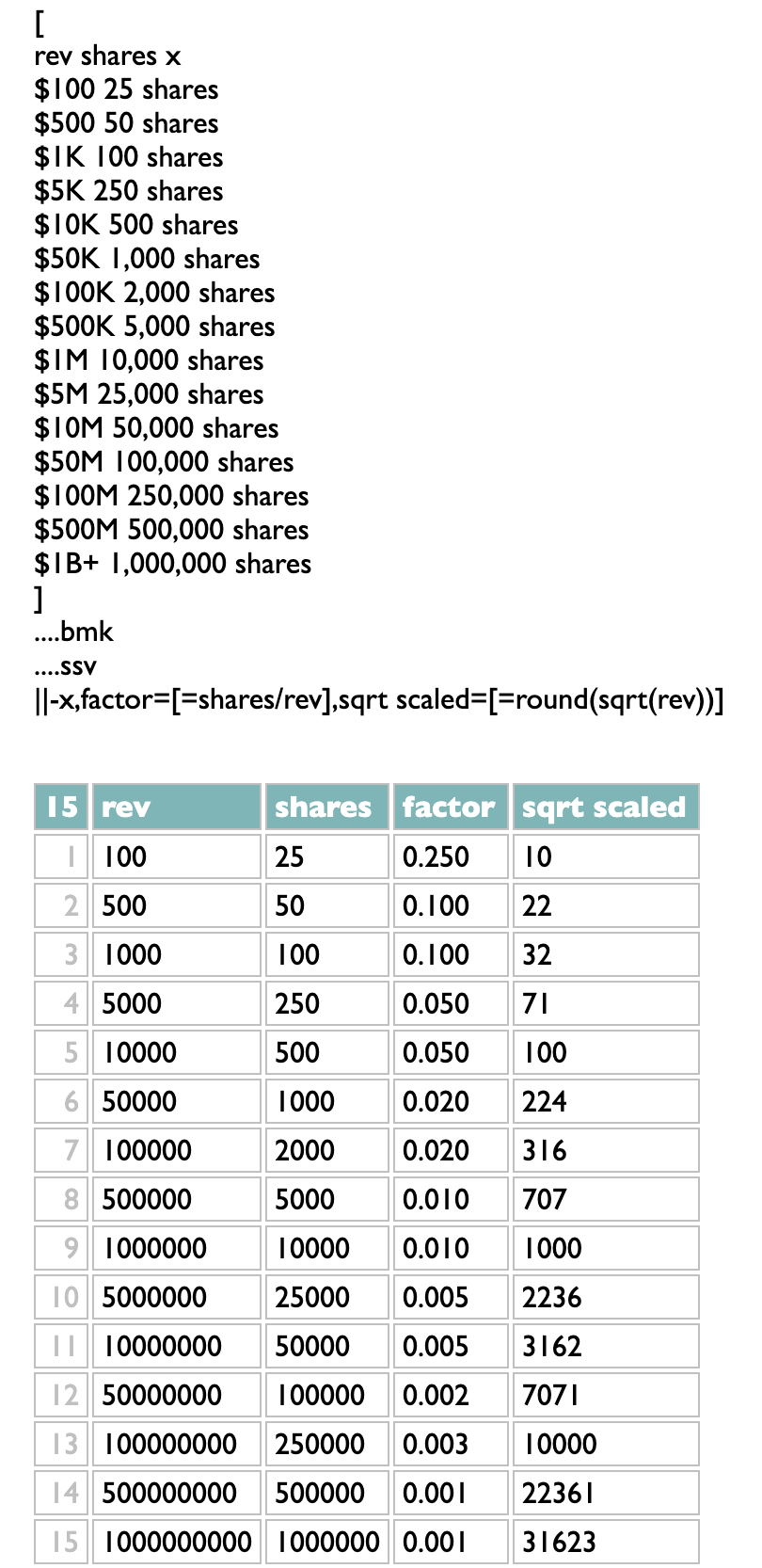

A friend just sent me this tiny table of numbers:

$100 25 shares

$500 50 shares

$1K 100 shares

$5K 250 shares

$10K 500 shares

$50K 1,000 shares

$100K 2,000 shares

$500K 5,000 shares

$1M 10,000 shares

$5M 25,000 shares

$10M 50,000 shares

$50M 100,000 shares

$100M 250,000 shares

$500M 500,000 shares

$1B+ 1,000,000 shares

I pasted it into Google Sheets, did Data > Split text to columns, and got this:

Not useful.

But do we still need data knives if we have AI? Our agents can cut our data up into safe bite-sized pieces for us, can't they? Hold still and open your mouth. I gave this table to Claude and asked "does this look right?"

Claude: The scaling seems quite aggressive - the share awards increase much faster than the earnings.

glenn: You say "the share awards increase much faster than the earnings", but isn't it exactly the reverse?

Claude: You're absolutely right - I made an error in my analysis. The share awards increase much slower than the earnings, not faster. This creates a regressive structure where higher earners get a smaller percentage of their earnings in shares.

glenn: If higher earners get a smaller percentage, isn't that literally a progressive scheme?

Claude: You're absolutely correct again - I have my definitions backwards!

My questions there are verbatim; Claude's responses are condensed from multiple pages of earnest unhelpfulness. That may not be a dull knife, exactly, but I don't really want a cheerful knife with which I have to begin by arguing about the nature of sharpness.

Part of my ambition for DACTAL is that it could be a better knife. The surgery we need here isn't exactly traumatic: expand the K/M/B shorthands into actual numbers, chop up this set of space-separated-values into labeled fields, check the math, maybe try an alternative scheme.

That isn't just a picture of numbers. Click it and you'll go to the cutting board where you can hack at them yourself. Or, if you prefer, some other data of your own.
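For comparison, here is roughly what the same surgery looks like in plain Python. This is a sketch, not the DACTAL query: the field names (`amount`, `shares`) are assumptions, since the table itself doesn't label its columns, and the regular expression only handles the exact line shapes that appear in the table.

```python
import re

RAW = """\
$100 25 shares
$500 50 shares
$1K 100 shares
$5K 250 shares
$10K 500 shares
$50K 1,000 shares
$100K 2,000 shares
$500K 5,000 shares
$1M 10,000 shares
$5M 25,000 shares
$10M 50,000 shares
$50M 100,000 shares
$100M 250,000 shares
$500M 500,000 shares
$1B+ 1,000,000 shares"""

MULTIPLIERS = {"": 1, "K": 1_000, "M": 1_000_000, "B": 1_000_000_000}
LINE = re.compile(r"\$(\d+)([KMB]?)\+?\s+([\d,]+)\s+shares")

def parse(raw):
    """Expand K/M/B shorthands and split each line into labeled fields."""
    rows = []
    for line in raw.splitlines():
        m = LINE.fullmatch(line.strip())
        if not m:
            continue  # skip blank or unrecognized lines
        amount = int(m.group(1)) * MULTIPLIERS[m.group(2)]
        shares = int(m.group(3).replace(",", ""))
        rows.append({"amount": amount, "shares": shares})
    return rows

def ratio_rises(rows):
    """Amounts where shares-per-dollar goes up relative to the previous row."""
    rises = []
    for prev, cur in zip(rows, rows[1:]):
        # Cross-multiply to compare shares/amount exactly, without floats.
        if cur["shares"] * prev["amount"] > prev["shares"] * cur["amount"]:
            rises.append(cur["amount"])
    return rises

rows = parse(RAW)
for row in rows:
    per_thousand = 1000 * row["shares"] / row["amount"]
    print(f"{row['amount']:>13,} {row['shares']:>9,} {per_thousand:>8.3f}")
print(ratio_rises(rows))
```

On this table, shares per dollar falls or holds steady at every step except one: the $100M row, where it ticks up from 0.002 to 0.0025. That is the kind of thing "check the math" is meant to surface.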

Next I'm going to need some data tupperware.

¶ The Initiative to Cultivate Empathy · 11 July 2025

I think we can all agree that the United States has a problem. Due to historical deficiencies in our moral educational system, we have suffered an internal invasion of intolerance, xenophobia and racism. These corrosive sociopathies have now reached such critical levels that they are interfering with our ability to operate a humane progressive democracy. It is time to fix this.

It will be expensive, but I believe this effort is crucial for our national survival, so I propose budgeting approximately $75 billion to create the Initiative to Cultivate Empathy. This new federal agency will recruit Americans who feel, or performatively claim to feel, racism or intolerance towards other human beings. In order to help them overcome these handicaps, it will temporarily employ them, on a paid full-time basis, to seek out immigrants or people who look like they believe immigrants look. When they find such people, these agents will use the full power of federal authority to give them sandwiches, fresh fruit and low-calorie probiotic soda, and to talk to them to learn about their cultural identities, life challenges and traditional preferences in hip hop.

The Initiative will also take inspiration from the current administration's laudable willingness to spend money to house immigrants, or people who look like they believe immigrants look, or graduate students with opinions, and will build and operate a set of federal shelters, located where most needed, to provide comfortable transitional housing for newly arrived immigrants or other people in temporary need. These facilities will also run supportive services, such as classes so that agents who only speak English have the opportunity to learn another language, or dinner groups in which they can experience food that contains spices, or quiet rooms that contain large-print books about world history.

The goal of this Initiative is to produce a meaningful increase in the average level of compassion among those involved, and thus to strengthen all of American Society. You could think of this as the Mean New Deal.

But the official name will, again, be the

Initiative to

Cultivate

Empathy.

Thank you for your attention to this matter.

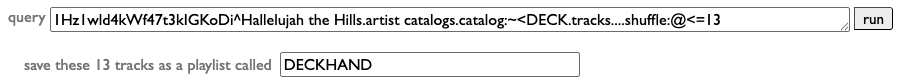

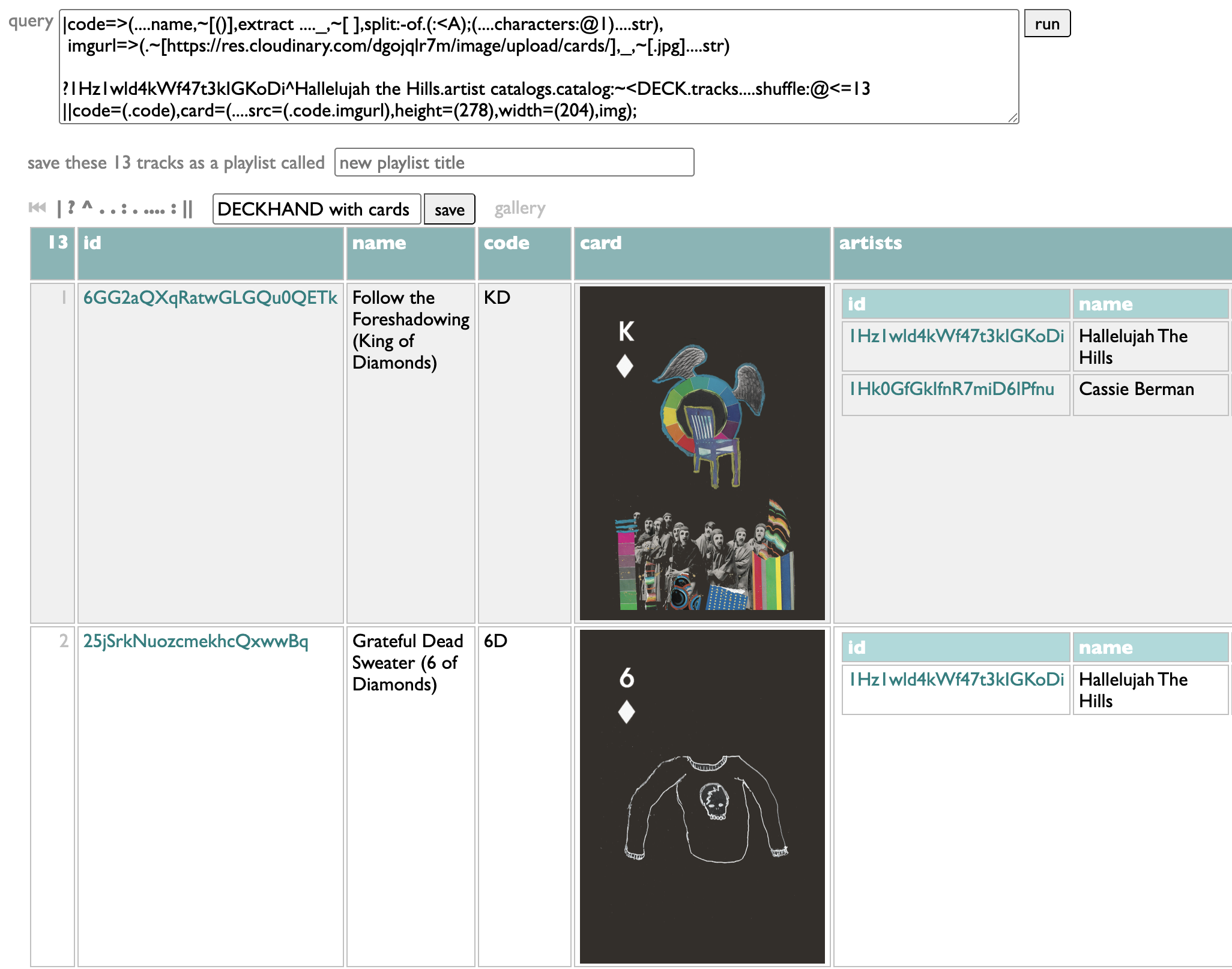

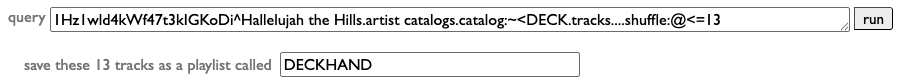

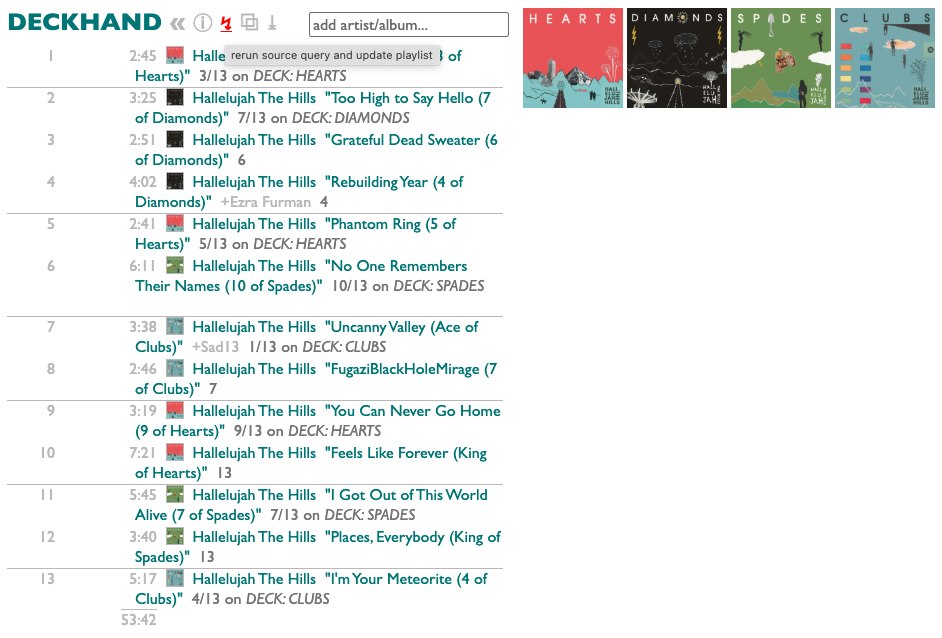

Hallelujah the Hills suggest picking random 13-song excerpts from their four-album (plus jokers) set DECK, and you can do that with a query in Curio.

Go to the Curio query page and paste in this query:

1Hz1wld4kWf47t3kIGKoDi^Hallelujah the Hills.artist catalogs.catalog:~<DECK.tracks....shuffle:@<=13

Run it, then put DECKHAND in the playlist-name blank, and hit Enter.

Now you not only have a playlist, but Curio remembers the query you made it with, so you can re-deal it whenever you're ready.

PS: Oh, Ryan points out that they made their own interactive version of his deck of actual cards here!

https://deck.hallelujahthehills.com/

We can even add the images into the query:

|code=>(....name,~[()],extract ...._,~[ ],split:-of.(:<A);(....characters:@1)....str),

imgurl=>(.~[https://res.cloudinary.com/dgojqlr7m/image/upload/cards/],_,~[.jpg]....str)

?1Hz1wld4kWf47t3kIGKoDi^Hallelujah the Hills.artist catalogs.catalog:~<DECK.tracks....shuffle:@<=13

||code=(.code),card=(....src=(.code.imgurl),height=(278),width=(204),img);

You don't have to treat the syntax design of a query language like a puzzle box, and certainly the designers of SQL didn't. SQL is more like a gray metal filing-cabinet full of manila folders full of officious forms. I spent a decade formulating music questions in SQL, and I got a lot of exciting answers, but the process never got any less drab or any more inspiring. It never made me much want to tell other people that they should want to do this, too. It was always work.

I don't think this is just me. SQL may be more or less tolerable to different writers, but I feel fairly certain that nobody finds it charming. Whether anybody other than me will find DACTAL charming, I won't presume to predict, but every earnest work begins with an audience of one. DACTAL has an internal aesthetic logic, and using it pleases me and makes me want to use it more. And makes me care about it. I never cared about SQL. I never wanted to improve it. It never seemed like it cared about itself enough to want to improve.

In DACTAL, I feel every tiny grating misalignment of components as an opportunity for attention, adjustment, resolution. I pause in mid-question, and grab my tweezers. Changing reverse index-number filtering from :@-<=8 to :@@<=8 eliminates the only syntactic use of - inside filters; this allows filter negation to be switched from ! to - to match all the other subtractive and reversal cases; this frees up ! to be the repeat operator, eliminating the weird non-synergy between ? (start) and ?? (repeat). ?messages._,replies?? seems to express more doubt about replies than enthusiasm. Recursion is exclamatory. messages._,replies!

The gears snap into their new places with more lucid clicks. I want to feel like humming while I work, not grumbling. Like I'm discovering secrets, not filing paperwork.

In Query geekery motivated by music geekery I describe this DACTAL implementation of a genre-clustering algorithm:

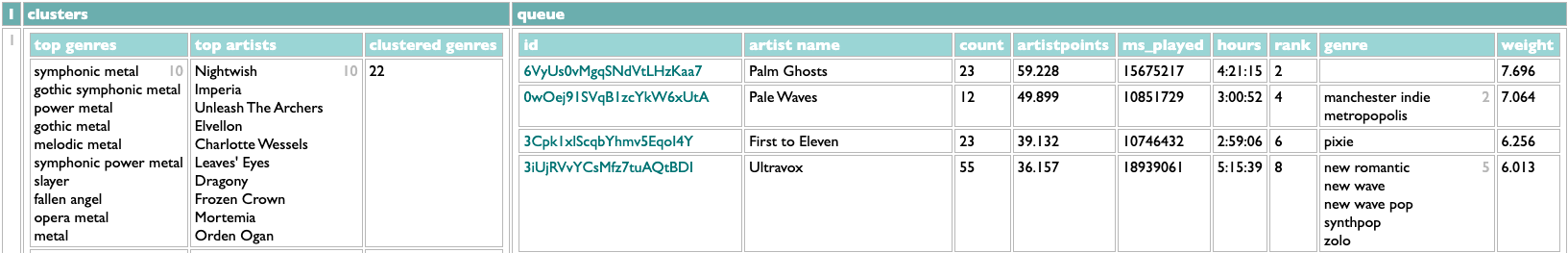

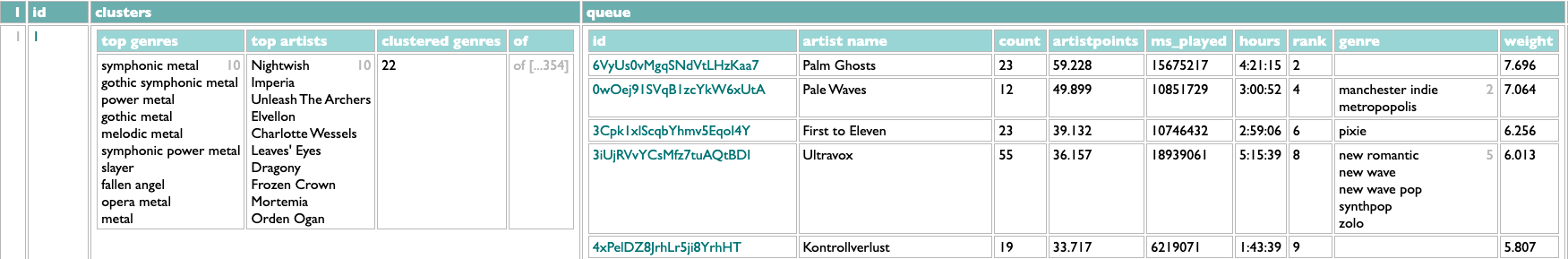

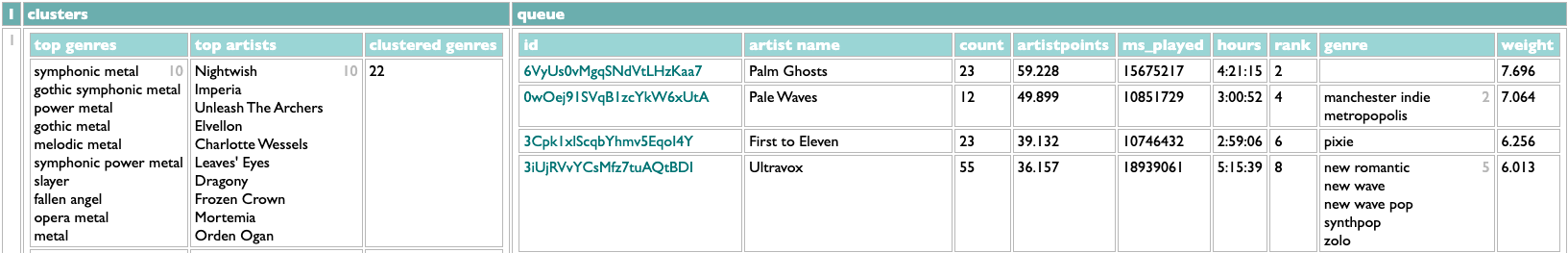

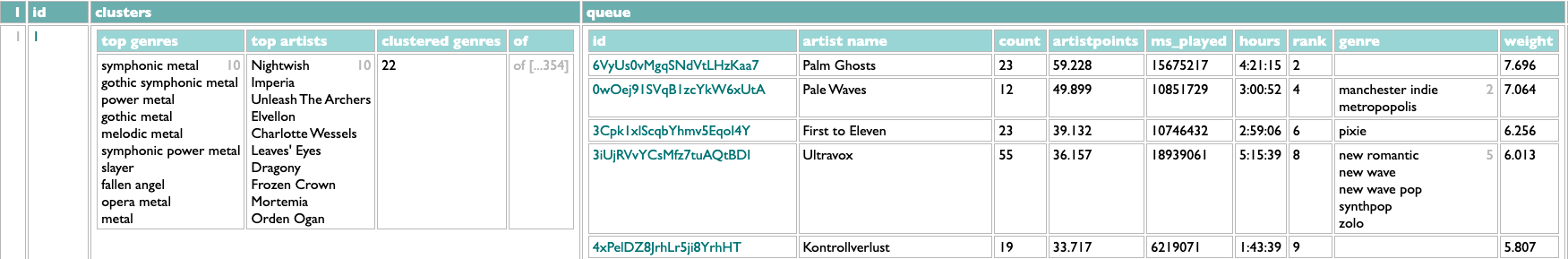

?artistsx=(2024 artists scored|genre=(.id.artist genres.genres),weight=(....artistpoints,sqrt))

?genre index=(artistsx/genre,of=artists:count>=5)

?clusters=()

?(

?artistsx=(artistsx:-~~(clusters:@@1.of))

?nextgenre=(artistsx/genre,of=artists:count>=10#(.artists....weight,total),count:@1)

?nextcluster=(

nextgenre.artists/genre,of=artists:count>=5

||total=(.genre.genre index.count),overlap=[=count/total]

:(.total) :overlap>=[.1] #(.artists....weight,total),count

...top genres=(.genre:@<=10),

top artists=(.artists:@<=10.name),

clustered genres=(.genre....count),

of=(.genre.genre index.artists)

:(.top genres)

)

?clusters=(clusters,nextcluster)

)!

The clustering in this version makes heavy use of the DACTAL set-labeling feature, which assigns names to sets of data and allows them to be retrieved by name later. That's what all the ?artistsx=, ?clusters= and similar bits are doing.

But it also uses DACTAL's feature for creating data inline. That's what the four-line block beginning with ...top genres= is doing. The difference between the two features is immediately evident if we try to scrutinize this query more closely by changing the ! at the end to !1 so it only runs one iteration. We get this:

That is, indeed, the first row of the query's results, and the lines in the query's ... operation map directly to the columns you see. But when I said I wanted to look at this query "more closely", I didn't mean I just wanted to see less of it. The crucial second piece of this query's clustering operation is the queue of unclustered artists, which gets shortened after each step. The query handles this queue by using set-labeling to stash it away as "artistsx" at two points in the query and get it back at two other points. This is useful, but it's also invisible. If I want to see the state of the queue after the first step I have to do something extra to pull it back out of the ether. E.g.:

?artistsx=(2024 artists scored|genre=(.id.artist genres.genres),weight=(....artistpoints,sqrt))

?genre index=(artistsx/genre,of=artists:count>=5)

?clusters=()

?(

?artistsx=(artistsx:-~~(clusters:@@1.of))

?nextgenre=(artistsx/genre,of=artists:count>=10#(.artists....weight,total),count:@1)

?nextcluster=(

nextgenre.artists/genre,of=artists:count>=5

||total=(.genre.genre index.count),overlap=[=count/total]

:(.total) :overlap>=[.1] #(.artists....weight,total),count

...top genres=(.genre:@<=10),

top artists=(.artists:@<=10.name),

clustered genres=(.genre....count),

of=(.genre.genre index.artists)

:(.top genres)

)

?clusters=(clusters,nextcluster)

)!1

...clusters=(clusters),queue=(artistsx)

Aha! Not hard. But really, when you're working with data and algorithms, your life will be a lot easier if you assume from the beginning that you'll probably end up wanting to look inside everything. Our lives mediated by data algorithms would all be improved if it were easier for the people who work on those algorithms to look inside of them, and to show us what's happening inside.

So instead of just using ... diagnostically at the end of the query, what if we built the whole query that way to begin with?

|nextcluster=>(

.queue/genre,of=artists:count>=10#(.artists....weight,total),count:@1

.artists/genre,of=artists:count>=5

||total=(.genre.genre index.count),overlap=[=count/total]

:(.total) :overlap>=[.1] #(.artists....weight,total),count

...name=(.genre:@1),

top genres=(.genre:@<=10),

top artists=(.artists:@<=10.name),

clustered genres=(.genre....count),

of=(.genre.genre index.artists)

:(.top genres)

)

?artistsx=(2024 artists scored|genre=(.id.artist genres.genres),weight=(....artistpoints,sqrt))

?genre index=(artistsx/genre,of=artists:count>=5)

...clusters=(),queue=(artistsx)

|clusters=+(.nextcluster),

queue=-(.clusters:@@1.of),

id=(.clusters...._count)

!1

The logic for picking the next cluster is still complicated, but it's the same as before except at the very beginning, where it gets the contents of the queue. I've defined it in advance in this version, using a feature I implemented after the earlier post, which allows the actual iterative repeat at the bottom to be written more clearly. We start with an empty cluster list and a full queue, and each iteration adds the next cluster to the cluster list, and then removes its artists from the queue. Here's what we see after one iteration, just by virtue of having interrupted the query with !1:

If you don't want to squint, it's the same. The results are the same, but the process is easier to inspect.
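For readers who don't speak DACTAL, here is a rough Python sketch of the same shape, not a translation of the query: the cluster list and the queue live together in one visible state value, each step maps a state to the next state, and stopping after one step shows you everything. The `pick_next_cluster` rule is a deliberately simplified stand-in (the most common genre left in the queue), not the weighted overlap logic above, and every name here is hypothetical.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class State:
    clusters: list = field(default_factory=list)  # clusters found so far
    queue: list = field(default_factory=list)     # artists not yet clustered


def pick_next_cluster(queue):
    """Simplified stand-in: every queued artist sharing the most common genre."""
    counts = Counter(g for artist in queue for g in artist["genres"])
    if not counts:
        return None
    genre, _ = counts.most_common(1)[0]
    members = [a["name"] for a in queue if genre in a["genres"]]
    return {"name": genre, "of": members}


def step(state):
    """One iteration: add the next cluster, then remove its artists from the queue."""
    cluster = pick_next_cluster(state.queue)
    if cluster is None:
        return state
    remaining = [a for a in state.queue if a["name"] not in cluster["of"]]
    return State(clusters=state.clusters + [cluster], queue=remaining)


def run(state, max_steps=None):
    """Repeat step until nothing changes, or until max_steps (max_steps=1 is like !1)."""
    steps = 0
    while max_steps is None or steps < max_steps:
        new_state = step(state)
        if new_state == state:
            break
        state = new_state
        steps += 1
    return state
```

Calling `run(State(queue=artists), max_steps=1)` returns both the first cluster and the shortened queue, with no extra work needed to pull either one back out.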

The new query is also faster than the old query, but not because of the invisible/visible thing. In general, extra visibility tends to cost a little more in processing time. The old version was slower than it should have been, by virtue of being too casual about how the clusters were identified, but got away with it because the extra data that might have slowed the process down was hidden. The new version is more diligent about this because it has to be (thus the id=(.clusters...._count) part), and indeed backporting that tweak to the old version does make it faster than the new version. But only by milliseconds. If it's hard for you to tell what you're doing, you'll eventually do it wrong, and the days you will waste laboriously figuring out how will be longer, and more miserable, than the milliseconds you thought you were saving.

PS: Although it seems likely that nobody other than me has yet intentionally instigated a DACTAL repeat, I will note for the record that I have changed the rules for the repeat operator since introducing it, and both this post and the linked earlier one now demonstrate the new design. The operator is now !, instead of ??, and it now always repeats the previous single operation, whereas in its first iteration it always appeared at the end of a subquery, and repeated that subquery. So, e.g., recursive reply-expansion was originally

messages.(._,replies??)

and that exact structure would now be written

messages.(._,replies)!

but since in this particular case the subquery has only a single operation, this query can now be written as just

messages._,replies!
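As a point of comparison, here is a minimal Python sketch of what "repeat the previous operation" amounts to for reply-expansion. It assumes the repeat stops once an iteration adds nothing new, which is my reading of the behavior rather than a statement of DACTAL's actual rules, and `replies_of` is a hypothetical lookup from a message to its direct replies.

```python
def expand_replies(messages, replies_of):
    """Repeat 'each message plus its replies' until no new messages appear."""
    seen = list(messages)
    while True:
        expanded = list(seen)
        for message in seen:
            for reply in replies_of.get(message, []):
                if reply not in expanded:
                    expanded.append(reply)
        if expanded == seen:
            # A full pass added nothing, so the expansion is complete.
            return seen
        seen = expanded
```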

¶ You should be able to get what you want · 30 May 2025 listen/tech

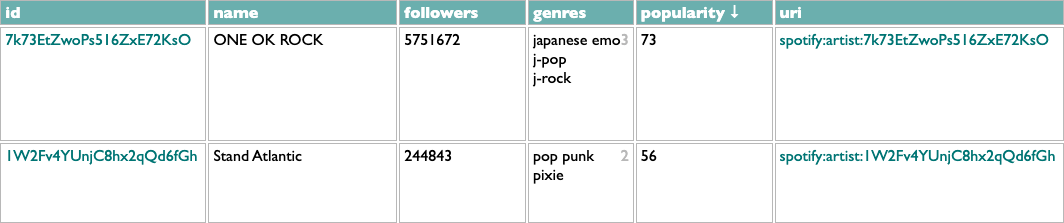

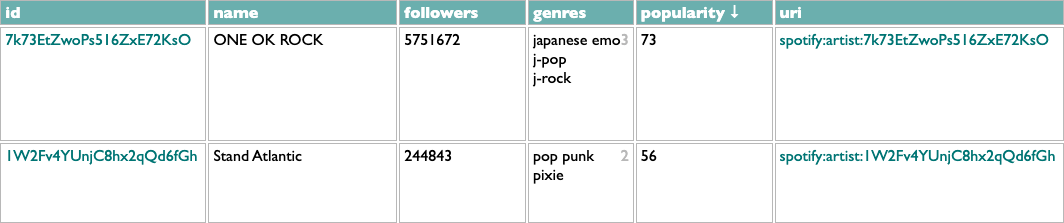

What I want this morning, after seeing Stand Atlantic and ONE OK ROCK in Boston last night, is a sampler playlist of some of their older songs that I haven't played before.

I can have this! Into Curio we go querying.

First of all, I need to select these two artists. This could be as simple as

artists:=ONE OK ROCK,=Stand Atlantic

or as compact as

ONE OK ROCK,Stand Atlantic.artists

but these both assume I'm already following the artists, and I am, but I might want samplers of artists I'm not.

ONE OK ROCK,Stand Atlantic.matching artists.matches#popularity/name.1

In this version .matching artists looks up each name with a call to the Spotify /search API, .matches goes to the found artists, and #popularity/name.1 sorts them by popularity, groups them by name, and gets the first (most popular) artist out of each group, to avoid any spurious impostors.
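The sort/group/first step can be sketched in Python like this. It assumes each search match is a dict with `name` and `popularity` fields, and it does not call the Spotify API; the function name is made up for illustration.

```python
def most_popular_per_name(matches):
    """Sort by popularity (highest first), group by name, keep the first of each group."""
    best = {}
    for artist in sorted(matches, key=lambda a: a["popularity"], reverse=True):
        # setdefault keeps only the first (most popular) artist seen for each name.
        best.setdefault(artist["name"], artist)
    return list(best.values())
```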

Now that we have the artists, we need their "older songs". E.g.:

.artist catalogs.catalog:release_date<2024.tracks:-~live;-~remix;-~acoustic

Here .artist catalogs goes back to the /artists API to get each artist's albums, .catalog goes to those albums, :release_date<2024 filters to just the ones released before 2024, and .tracks:-~live;-~remix;-~acoustic gets all of those albums' tracks and drops the ones with "live", "remix" or "acoustic" in their titles. Which is messy filtering, since those words could be part of actual song titles, but the Spotify API doesn't give us any structured metadata to use to identify alternate versions, so we do what we can. We're going to be sampling a subset of songs, anyway, so if we miss a song we might not really have intended to exclude, it's fine.
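A hedged Python sketch of that filtering, to make the messiness concrete. The album shape here is simplified and hypothetical (a `release_date` string and a flat `tracks` list), and it assumes the title match is a case-insensitive substring test, which is why a title like "Alive" would also be dropped.

```python
EXCLUDED_WORDS = ("live", "remix", "acoustic")


def older_studio_tracks(albums, before_year="2024"):
    """Keep tracks from albums released before before_year, minus alternate versions."""
    tracks = []
    for album in albums:
        # Dates like "2023-05-12" or "2023" compare correctly as strings against "2024".
        if album["release_date"] >= before_year:
            continue
        for track in album["tracks"]:
            title = track["name"].lower()
            if not any(word in title for word in EXCLUDED_WORDS):
                tracks.append(track)
    return tracks
```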

Administering "songs that I haven't played before" is a little trickier. I have my listening history, but many real-world songs exist as multiple technically-different tracks on different releases, and if I've already played a song as a single, I want to exclude the album copy of it, too. Here's a bit of DACTAL spellcasting to accomplish this:

(that previous stuff),listening history

//(.spotify_track_uri;uri)

/main artist=(.artists:@1),normalized title=(....name,sortform):-(.of.ts).1

We toss the track pool and my listening history together, we merge (//) them by uri (in the listening history this field is called "spotify_track_uri", but on a track it's just "uri"), we group these merged track+listening items by main artist and normalized title, we drop any such group in which at least one of the tracks has a listening timestamp, and then take one representative track from each remaining unplayed group.
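The same logic as a Python sketch, under stated assumptions: tracks are dicts with `uri`, `name` and an `artists` list of names, history rows have `spotify_track_uri` and `ts`, and `normalize_title` is a crude hypothetical stand-in for whatever sortform really does.

```python
import re
from collections import defaultdict


def normalize_title(name):
    """Hypothetical stand-in for sortform: lowercase, letters and digits only."""
    return re.sub(r"[^a-z0-9]+", "", name.lower())


def unplayed_songs(tracks, history):
    """One representative track per song for which no copy has ever been played."""
    played_uris = {row["spotify_track_uri"] for row in history if row.get("ts")}
    groups = defaultdict(list)
    for track in tracks:
        key = (track["artists"][0], normalize_title(track["name"]))
        groups[key].append(track)
    # Drop any song where at least one copy was played, then take the first copy.
    return [
        group[0]
        for group in groups.values()
        if not any(track["uri"] in played_uris for track in group)
    ]
```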

Now we have a track pool. There are various ways to sample from it, but what I want today is a main-act/opening-act balance of 2 ONE OK ROCK songs to every Stand Atlantic song. And shuffled!

We can shuffle a list in DACTAL by sorting it with a random-number key:

#(....random)

In this case we need to shuffle twice: once before picking a set of random songs for each artist, and then again afterwards to randomize the combined playlist. Like this:

#(....random)/(.artists:@1).(:ONE OK ROCK.20),(:Stand Atlantic.10)

#(....random)

Shuffle, group by main artist, get the first 20 tracks from the ONE OK ROCK group and the first 10 tracks from the Stand Atlantic group, and then shuffle those 30 together.
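In Python the two-shuffle pattern might look like the sketch below. The `quotas` mapping and the track shape (an `artists` list of names) are assumptions for illustration, and walking the shuffled pool while counting per artist has the same effect as grouping and taking the first N of each group.

```python
import random


def sampler(tracks, quotas, rng=None):
    """Shuffle, take up to quotas[artist] tracks per main artist, then shuffle again."""
    if rng is None:
        rng = random.Random()
    pool = list(tracks)
    rng.shuffle(pool)  # first shuffle: makes each artist's picks random
    taken = {artist: 0 for artist in quotas}
    picked = []
    for track in pool:
        artist = track["artists"][0]
        if artist in quotas and taken[artist] < quotas[artist]:
            taken[artist] += 1
            picked.append(track)
    rng.shuffle(picked)  # second shuffle: mixes the artists together
    return picked
```

With `quotas={"ONE OK ROCK": 20, "Stand Atlantic": 10}` this gives the 2-to-1 balance described above, provided the pool has enough unplayed tracks for each artist.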

All combined:

(

ONE OK ROCK,Stand Atlantic.matching artists.matches#popularity/name.1

.artist catalogs.catalog:release_date<2024.tracks:-~live;-~remix;-~acoustic

),listening history

//(.spotify_track_uri;uri)

/main artist=(.artists:@1),normalized title=(....name,sortform):-(.of.ts).1

#(....random)/(.artists:@1).(:ONE OK ROCK.20),(:Stand Atlantic.10)

#(....random)

Did I get what I wanted? Yap. And now I don't just have the one OK rock playlist I wanted today, we have a sampler-making machine.

PS: Sorting by a random number is fine, and allows the random sort to be combined with other sort-keys or rank-numbering. But when all we need is shuffling, ....shuffle is more efficient than #(....random).

¶ AAI · 30 May 2025 essay/tech